使用Horizontal Pod Autoscaler (HPA)

实验目标:

学习如何使用 HPA 实现自动扩展。

实验步骤:

- 创建一个 Deployment,并设置 CPU 或内存的资源请求。

- 创建一个 HPA,设置扩展策略。

- 生成负载,观察 HPA 如何自动扩展 Pod 数量。

今天继续我们k8s未做完的实验:如何使用 HPA 实现自动扩展

创建

1、创建namespace

kubectl create namespace nginx-hpa

2、创建deployment

# /kubeapi/data/project5/nginx-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-hpa

spec:

replicas: 1

selector:

matchLabels:

app: nginx-hpa

template:

metadata:

labels:

app: nginx-hpa

spec:

containers:

- name: nginx-hpa

image: nginx:1.18

ports:

- containerPort: 80

resources:

requests:

cpu: "10m"

limits:

cpu: "20m"

应用此Deployment

kubectl apply -f nginx-hpa.yaml

顺带创建一下service

kubectl create service nodeport nginx-hpa --tcp=80:80 -n nginx-hpa

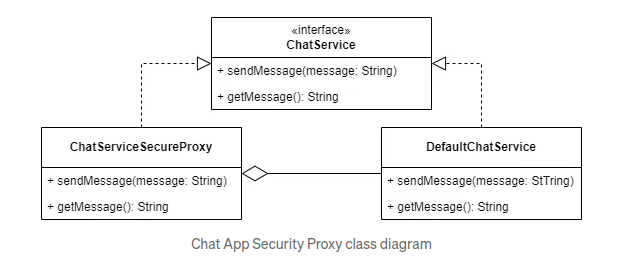

3、创建HPA

# /kubeapi/data/project5/nginx-hpa.yaml

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

name: nginx-hpa

namespace: nginx-hpa

spec:

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: nginx

minReplicas: 1

maxReplicas: 10

metrics:

- type: Resource

resource:

name: cpu

target:

type: Utilization

averageUtilization: 1

应用此HPA:

kubectl apply -f nginx-hpa.yaml

4、生成负载以观察自动扩展效果

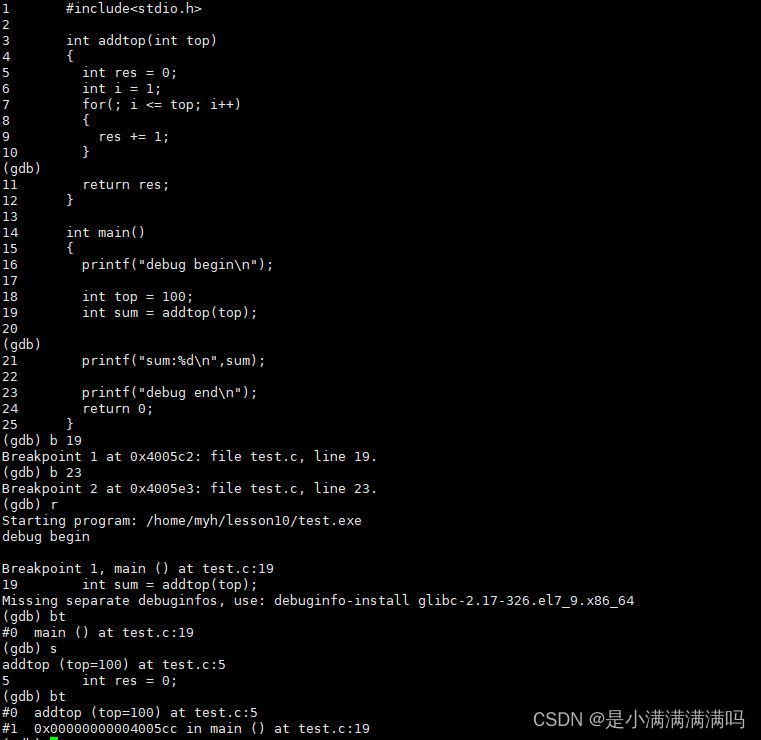

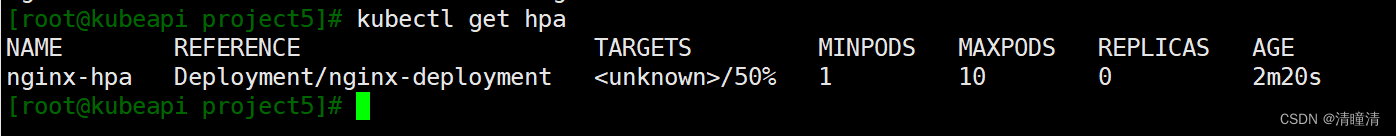

从上边的图片我们可以看到,hpa实际并没有获取到资源的使用率

这里我们先安装一下 Metrics Server

curl -LO https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

编辑 components.yaml ,建议直接复制,替换掉之前的文件内容。需要修改的地方我都有标注

需要替换的原因就是:Metrics Server 遇到的主要问题是无法验证节点证书的 x509 错误,因为节点的证书中不包含任何 IP SANs(Subject Alternative Names)。这是一个常见的问题,尤其是在使用自签名证书的 Kubernetes 集群中。为了解决这个问题,可以调整 Metrics Server 的配置,使其忽略证书验证

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: metrics-server

name: metrics-server

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

k8s-app: metrics-server

rbac.authorization.k8s.io/aggregate-to-admin: "true"

rbac.authorization.k8s.io/aggregate-to-edit: "true"

rbac.authorization.k8s.io/aggregate-to-view: "true"

name: system:aggregated-metrics-reader

rules:

- apiGroups:

- metrics.k8s.io

resources:

- pods

- nodes

verbs:

- get

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

k8s-app: metrics-server

name: system:metrics-server

rules:

- apiGroups:

- ""

resources:

- nodes/metrics

verbs:

- get

- apiGroups:

- ""

resources:

- pods

- nodes

verbs:

- get

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: metrics-server

name: metrics-server-auth-reader

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: extension-apiserver-authentication-reader

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

k8s-app: metrics-server

name: metrics-server:system:auth-delegator

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:auth-delegator

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

k8s-app: metrics-server

name: system:metrics-server

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:metrics-server

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: v1

kind: Service

metadata:

labels:

k8s-app: metrics-server

name: metrics-server

namespace: kube-system

spec:

ports:

- name: https

port: 443

protocol: TCP

targetPort: https

selector:

k8s-app: metrics-server

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

k8s-app: metrics-server

name: metrics-server

namespace: kube-system

spec:

selector:

matchLabels:

k8s-app: metrics-server

strategy:

rollingUpdate:

maxUnavailable: 0

template:

metadata:

labels:

k8s-app: metrics-server

spec:

containers:

- args:

- --cert-dir=/tmp

- --secure-port=10250

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --kubelet-use-node-status-port

- --metric-resolution=15s

- --kubelet-insecure-tls # 添加此行

image: registry.k8s.io/metrics-server/metrics-server:v0.7.1

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 3

httpGet:

path: /livez

port: https

scheme: HTTPS

periodSeconds: 10

name: metrics-server

ports:

- containerPort: 10250

name: https

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /readyz

port: https

scheme: HTTPS

initialDelaySeconds: 20

periodSeconds: 10

resources:

requests:

cpu: 100m

memory: 200Mi

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

readOnlyRootFilesystem: true

runAsNonRoot: true

runAsUser: 1000

seccompProfile:

type: RuntimeDefault

volumeMounts:

- mountPath: /tmp

name: tmp-dir

nodeSelector:

kubernetes.io/os: linux

priorityClassName: system-cluster-critical

serviceAccountName: metrics-server

volumes:

- emptyDir: {}

name: tmp-dir

---

apiVersion: apiregistration.k8s.io/v1

kind: APIService

metadata:

labels:

k8s-app: metrics-server

name: v1beta1.metrics.k8s.io

spec:

group: metrics.k8s.io

groupPriorityMinimum: 100

insecureSkipTLSVerify: true # 确保这一行存在

service:

name: metrics-server

namespace: kube-system

version: v1beta1

versionPriority: 100

使用命令应用配置文件

kubectl apply -f components.yaml

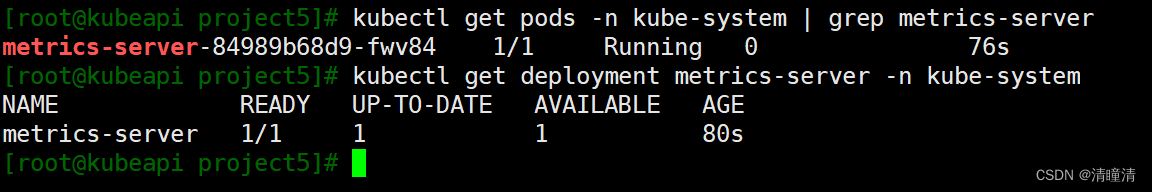

检查 Metrics Server 部署状态

kubectl get deployment metrics-server -n kube-system

kubectl get pods -n kube-system | grep metrics-server

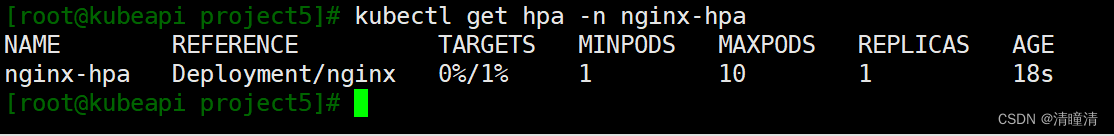

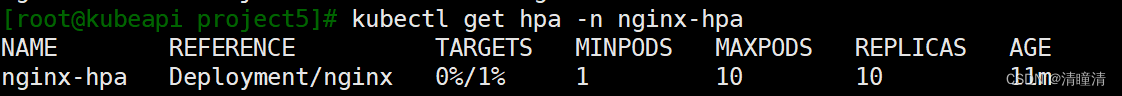

这里部署成功后等待一会,我们在检查hpa的状态

kubectl get hpa -n nginx-hpa

发现可以看到负载的数据了

使用 kubectl run 命令创建一个 Pod 来生成负载:

kubectl run -i --tty load-generator --image=busybox /bin/sh

在 Pod 内运行以下命令生成 CPU 负载:

while true; do wget -q -O- http://10.0.0.5:31047; done

如果中途退出过容器就删掉重新生成

kubectl delete pod load-generator

验证

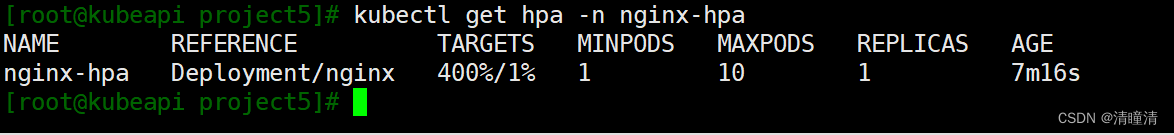

在生成负载之后,再次检查 HPA 和 nginx 部署的状态

检查hpa,发现负载已经超过了我们限定的值

kubectl get hpa -n nginx-hpa

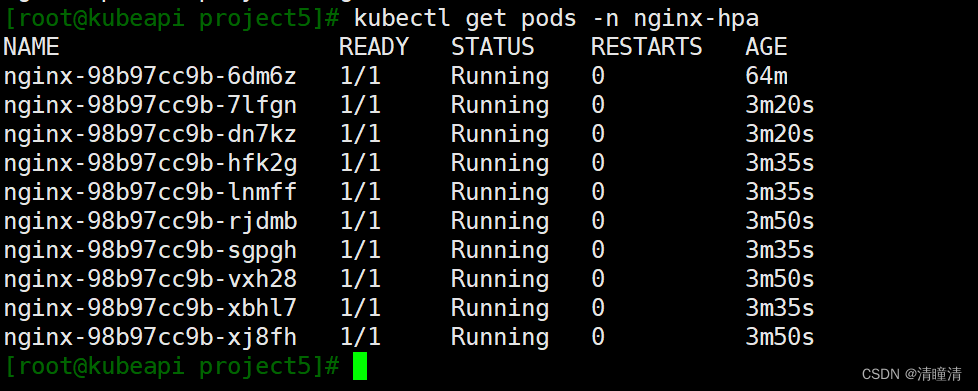

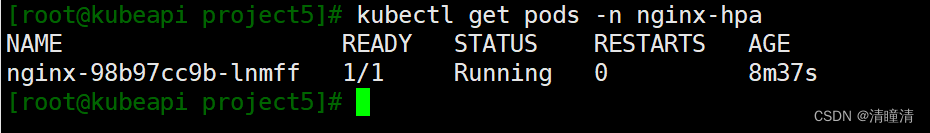

检查nginx容器数量,发现自动增加了9个副本。总数是我们配置文件中maxReplicas: 10规定的最多10个容器

kubectl get pods -n nginx-hpa

关闭负载容器后,当负载不在高出我们所规定的数值后观察pod数量

这里需要注意的是:

如果负载下降后,HPA 没有按预期缩减 Pod 数量,有可能是配置问题或需要等待一段时间。HPA 的自动缩减行为需要满足一些条件,并且通常有一个冷却时间窗口,以避免频繁扩缩容导致的不稳定性。这个时间窗口默认是5分钟,可以通过以下命令查看配置:

kubectl get hpa nginx-hpa -o yaml -n nginx-hpa

确保没有手动调整 Deployment 副本数,HPA 的调整策略会被手动更改副本数所覆盖。

经过一段时间以后,在观察pod的数量,发现已经自动缩减到1个

通过以上步骤,你应该能看到 HPA 根据 CPU 使用率自动扩展和缩减 Pod 的数量。最初部署时只有一个 Pod,但在生成负载后,你应该会看到 Pod 的数量增加。当负载减少时,Pod 的数量会再次减少。

我是为了实验效果把HPA触发的值调整的很低,生产中建议根据实际情况调整