Graph Similarity: SimGNN

- Paper link:

- Personal understanding:

- Data processing:

- Convolution layer:

- Attention layer

- Tensor network layer:

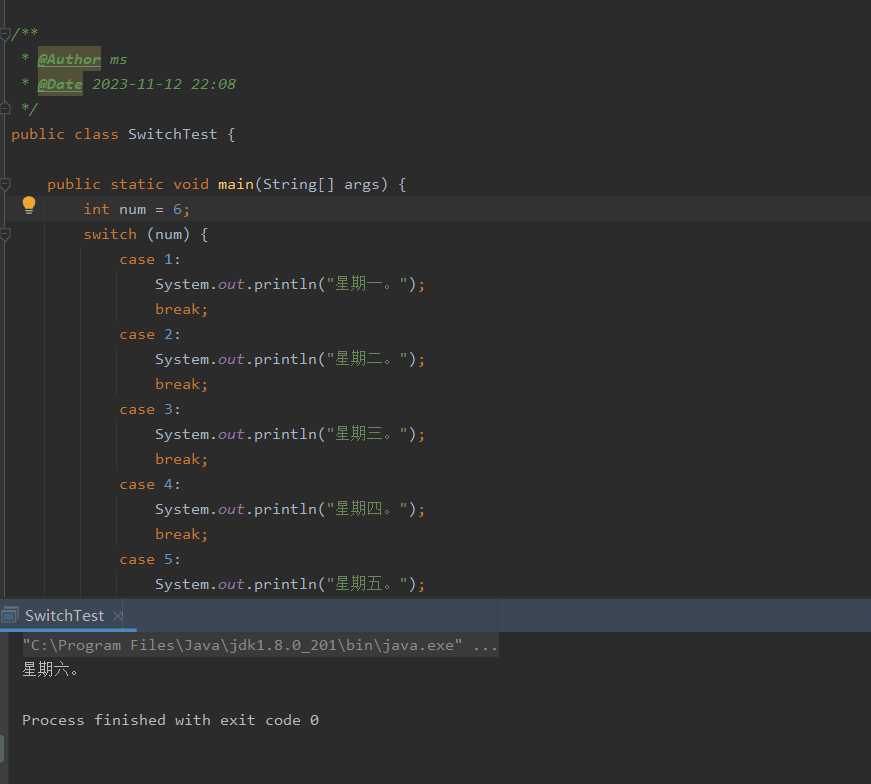

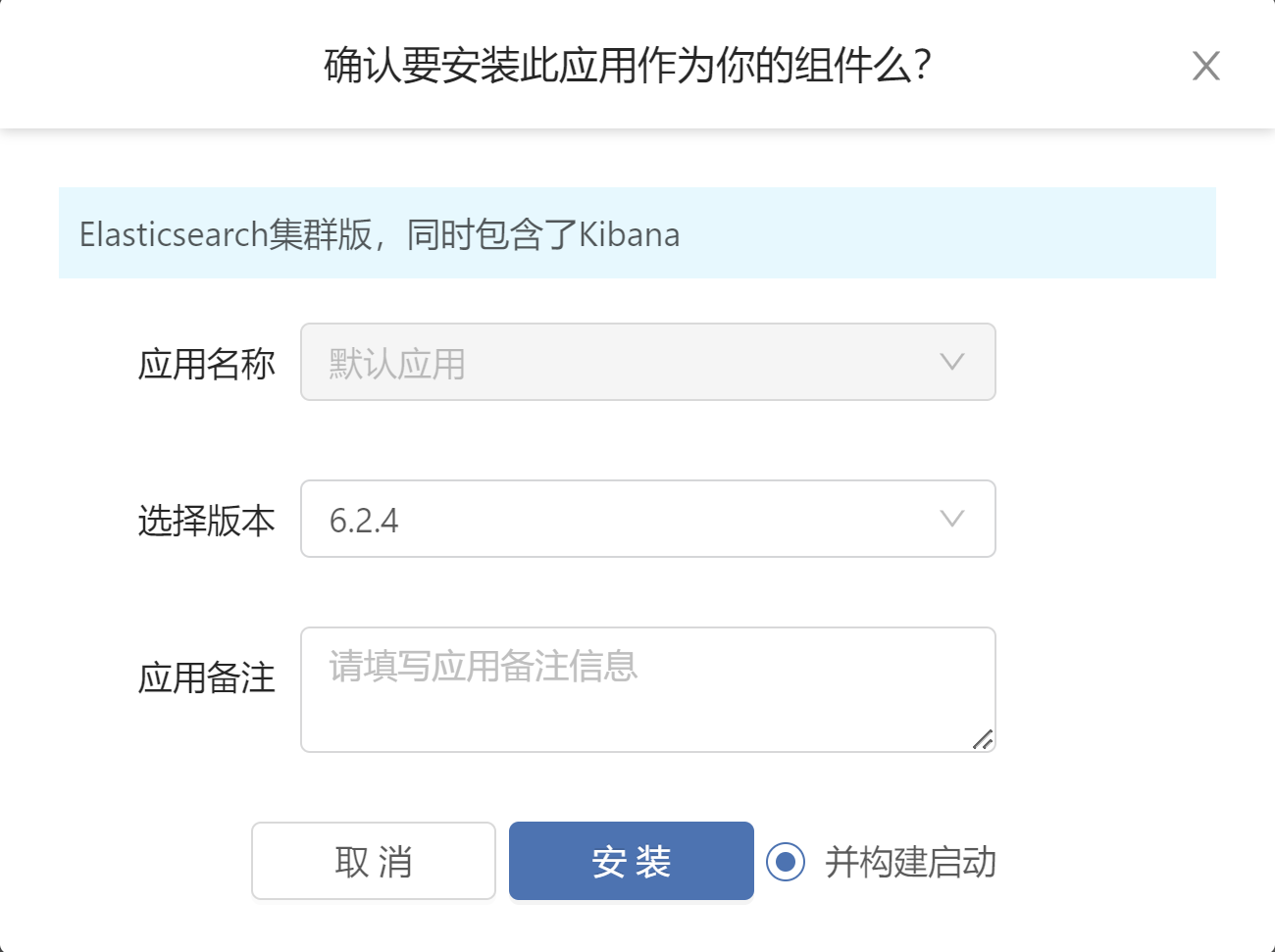

- Defining the SimGNN network model:

- Full code:

论文链接:

SimGNN: A Neural Network Approachto Fast Graph Similarity Computation

个人理解:

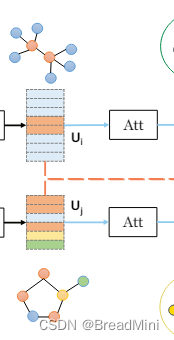

重中之重就是理解该图:

逐步来击破这幅图吧。

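Data processing:

The example data that accompanies the paper is in JSON format.

Reading the data:

    train_graphs = glob.glob('data/train/'+'*.json')
    test_graphs = glob.glob('data/test/'+'*.json')

As the figure shows, node features are one-hot encoded, so the data has to be converted into that format.

1. Collect every label that appears on any graph node.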

    def node_mapping():
        # Collect every node label seen in the training and test graph pairs.
        nodes_id = set()
        graph_pairs = train_graphs + test_graphs
        for graph_pair in graph_pairs:
            graph = json.load(open(graph_pair))
            nodes_id = nodes_id.union(set(graph['labels_1']))
            nodes_id = nodes_id.union(set(graph['labels_2']))
        # Sort so that the label -> index assignment is deterministic.
        nodes_id = sorted(nodes_id)
        nodes_id = {id:index for index,id in enumerate(nodes_id)}
        num_nodes_id = len(nodes_id)
        print(nodes_id,num_nodes_id)
        return nodes_id,num_nodes_id

Understanding the code above:

What node_mapping() does, shown on a small example:

    graph1.json (a training pair): {"labels_1": ["A", "B", "C"], "labels_2": ["D", "E"]}
    graph2.json (a test pair):     {"labels_1": ["C", "F"], "labels_2": ["A", "G"]}

Running graph_pairs = train_graphs + test_graphs gives graph_pairs = ['graph1.json', 'graph2.json'].

Running for graph_pair in graph_pairs:

First iteration: graph = json.load(open('graph1.json')) gives graph = {"labels_1": ["A", "B", "C"], "labels_2": ["D", "E"]}.

    nodes_id = nodes_id.union(set(graph['labels_1']))  # {"A", "B", "C"}
    nodes_id = nodes_id.union(set(graph['labels_2']))  # {"A", "B", "C", "D", "E"}

Second iteration: graph = json.load(open('graph2.json')) gives graph = {"labels_1": ["C", "F"], "labels_2": ["A", "G"]}.

    nodes_id = nodes_id.union(set(graph['labels_1']))  # {"A", "B", "C", "D", "E", "F"}
    nodes_id = nodes_id.union(set(graph['labels_2']))  # {"A", "B", "C", "D", "E", "F", "G"}

Running nodes_id = sorted(nodes_id) sorts the labels: ["A", "B", "C", "D", "E", "F", "G"].

Running nodes_id = {id:index for index,id in enumerate(nodes_id)} builds the index: nodes_id = {"A": 0, "B": 1, "C": 2, "D": 3, "E": 4, "F": 5, "G": 6}.

In short, this function builds the label-to-index table that the one-hot encoding relies on.
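The walkthrough above can be reproduced without any files. The following is a minimal sketch, not the author's code: `build_label_index` and `one_hot` are hypothetical helper names, and the two dictionaries stand in for graph1.json and graph2.json.

```python
def build_label_index(graph_pairs):
    """Map every node label seen in any pair to a stable integer index."""
    labels = set()
    for pair in graph_pairs:
        labels.update(pair["labels_1"])
        labels.update(pair["labels_2"])
    # Sorting makes the assignment independent of set iteration order.
    return {label: index for index, label in enumerate(sorted(labels))}


def one_hot(label, label_index):
    """Return the one-hot row for a label, as wide as the label vocabulary."""
    row = [0.0] * len(label_index)
    row[label_index[label]] = 1.0
    return row


# Hypothetical in-memory stand-ins for graph1.json and graph2.json.
pairs = [
    {"labels_1": ["A", "B", "C"], "labels_2": ["D", "E"]},
    {"labels_1": ["C", "F"], "labels_2": ["A", "G"]},
]
label_index = build_label_index(pairs)
print(label_index)
print(one_hot("C", label_index))
```

Because the vocabulary is collected over both the training and the test pairs, every label that shows up at test time already has a column in the one-hot encoding.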

2. Build the dataset loader:

    def load_dataset():
        train_dataset = []
        test_dataset = []
        nodes_id, num_nodes_id = node_mapping()
        for graph_pair in train_graphs:
            graph = json.load(open(graph_pair))
            data = process_data(graph, nodes_id)
            train_dataset.append(data)
        for graph_pair in test_graphs:
            graph = json.load(open(graph_pair))
            data = process_data(graph, nodes_id)
            test_dataset.append(data)
        return train_dataset, test_dataset, num_nodes_id

The process_data() function it calls:

    def process_data(graph, nodes_id):
        data = dict()
        # Take each graph's edge list and append the reverse of every edge,
        # so the graph is treated as undirected.
        edges_1 = graph['graph_1'] + [[y, x] for x, y in graph['graph_1']]
        edges_2 = graph['graph_2'] + [[y, x] for x, y in graph['graph_2']]
        # Transpose to shape [2, num_edges] and convert to a long tensor.
        edges_1 = torch.from_numpy(np.array(edges_1, dtype=np.int64).T).type(torch.long)
        edges_2 = torch.from_numpy(np.array(edges_2, dtype=np.int64).T).type(torch.long)
        data['edge_index_1'] = edges_1
        data['edge_index_2'] = edges_2
        # One-hot encode every node label.
        feature_1, feature_2 = [], []
        for n in graph['labels_1']:
            feature_1.append([1.0 if nodes_id[n] == i else 0.0 for i in nodes_id.values()])
        for n in graph['labels_2']:
            feature_2.append([1.0 if nodes_id[n] == i else 0.0 for i in nodes_id.values()])
        feature_1 = torch.FloatTensor(np.array(feature_1))
        feature_2 = torch.FloatTensor(np.array(feature_2))
        data['features_1'] = feature_1
        data['features_2'] = feature_2
        # Normalize the GED by the mean node count, then map it to a similarity target.
        norm_ged = graph['ged'] / (0.5 * (len(graph['labels_1']) + len(graph['labels_2'])))
        data['norm_ged'] = norm_ged
        data["target"] = torch.from_numpy(np.exp(-norm_ged).reshape(1, 1)).view(-1).float().unsqueeze(0)
        return data

Understanding process_data():

Assume the following input:

    {
        "graph_1": [[0, 1], [1, 2]],     // edge list
        "graph_2": [[0, 2], [2, 3]],
        "labels_1": ["A", "B", "C"],     // node labels of graph 1
        "labels_2": ["A", "C", "D"],     // node labels of graph 2
        "ged": 2                         // graph edit distance
    }

node_mapping() gave nodes_id = {"A": 0, "B": 1, "C": 2, "D": 3, "E": 4, "F": 5, "G": 6}.

Running edges_1 = graph['graph_1'] + [[y, x] for x, y in graph['graph_1']] gives [[0, 1], [1, 2], [1, 0], [2, 1]]. The first half is the original (forward) edges; the second half adds the reverse edges.

Running edges_2 = graph['graph_2'] + [[y, x] for x, y in graph['graph_2']] gives [[0, 2], [2, 3], [2, 0], [3, 2]].

Running edges_1 = torch.from_numpy(np.array(edges_1, dtype=np.int64).T).type(torch.long) converts the list to a tensor; because of the transpose, its shape is [2, num_edges].

First loop: for n in graph['labels_1']: # ["A", "B", "C"]

Running feature_1.append([1.0 if nodes_id[n] == i else 0.0 for i in nodes_id.values()]) gives:

    feature_1 = [
        # A    B    C    D    E    F    G
        [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],  # "A"
        [0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0],  # "B"
        [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]   # "C"
    ]

Second loop: for n in graph['labels_2']: # ["A", "C", "D"]

Running feature_2.append([1.0 if nodes_id[n] == i else 0.0 for i in nodes_id.values()]) gives:

    feature_2 = [
        [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],  # "A"
        [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0],  # "C"
        [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]   # "D"
    ]

Running feature_1 = torch.FloatTensor(np.array(feature_1)) converts the list to a tensor.

Computing the normalized GED:

    norm_ged = graph['ged'] / (0.5 * (len(graph['labels_1']) + len(graph['labels_2'])))
    # norm_ged = 2 / (0.5 * (3 + 3)) = 2 / 3 = 0.6667

Computing the target:

    data['target'] = torch.from_numpy(np.exp(-norm_ged).reshape(1, 1)).view(-1).float().unsqueeze(0)
    # target = torch.from_numpy(np.exp(-0.6667).reshape(1, 1)).view(-1).float().unsqueeze(0)
    # target = torch.tensor([[0.5134]])
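The edge handling in process_data() is easy to check by hand. The sketch below does the same two steps (append reverse edges, then transpose) with plain lists instead of NumPy and PyTorch; `to_edge_index` is a hypothetical name used only for illustration.

```python
def to_edge_index(edges):
    """Add the reverse of each edge, then transpose to a 2 x num_edges layout."""
    both_directions = edges + [[y, x] for x, y in edges]
    sources = [x for x, _ in both_directions]
    targets = [y for _, y in both_directions]
    return [sources, targets]


edge_index_1 = to_edge_index([[0, 1], [1, 2]])
print(edge_index_1)  # [[0, 1, 1, 2], [1, 2, 0, 1]]
```

Row 0 holds the source node of every edge and row 1 the target node, which is the [2, num_edges] layout that the transposed tensor in process_data() has.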

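About GED: Graph Edit Distance measures how different two graphs are; the smaller the GED, the more similar the graphs. It is the minimum number of edit operations (adding, deleting, or substituting nodes and edges) needed to turn one graph into the other. The normalized form is norm_ged = GED / (0.5 * (|V1| + |V2|)), where |V1| and |V2| are the numbers of nodes in the two graphs.

About the target: target = exp(-norm_ged). Since norm_ged is never negative, the negative exponential maps it into the range (0, 1]: identical graphs (norm_ged = 0) get a target of 1, and the target approaches 0 as the graphs grow more different.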
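The normalization and the target can be checked with the standard library alone. This is a small sketch under the formulas stated above; `similarity_target` is a hypothetical helper, not part of the original code.

```python
import math


def similarity_target(ged, num_nodes_1, num_nodes_2):
    """Return (norm_ged, target) for one graph pair."""
    norm_ged = ged / (0.5 * (num_nodes_1 + num_nodes_2))
    return norm_ged, math.exp(-norm_ged)


norm_ged, target = similarity_target(2, 3, 3)
print(round(norm_ged, 4), round(target, 4))  # 0.6667 0.5134

# Identical graphs have GED 0, which gives the maximum target of 1.0.
_, identical_target = similarity_target(0, 3, 3)
print(identical_target)  # 1.0
```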

That completes the data processing.

卷积层:

这层直接调用就行,无需过多了解。

注意力层

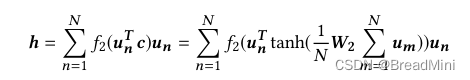

论文中的公式如下:
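The formula itself did not survive as text here, so the block below is a reconstruction written to match the AttentionModule code in this section rather than a quotation from the paper. The symbols are assumptions for illustration: u_n is the embedding of node n (one row of `embedding`), N is the number of nodes, W is `weight_matrix`, and σ is the sigmoid.

```latex
c = \tanh\!\left(\frac{1}{N}\sum_{n=1}^{N} W^{\top} u_n\right), \qquad
a_n = \sigma\!\left(u_n^{\top} c\right), \qquad
h = \sum_{n=1}^{N} a_n\, u_n
```

Here c is the global context vector, a_n is the attention weight of node n, and h is the graph-level embedding. The code multiplies the embeddings by W from the right, which is why W appears transposed in this notation; since W is learned, that difference should not matter in practice.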

    class AttentionModule(nn.Module):
        def __init__(self, args):
            super(AttentionModule, self).__init__()
            self.args = args
            self.setup_weights()
            self.init_parameters()

        def setup_weights(self):
            # Create the weight matrix (values not yet initialized); filters_3 = 32
            self.weight_matrix = nn.Parameter(torch.Tensor(self.args.filters_3, self.args.filters_3))

        def init_parameters(self):
            # Fill the weight matrix with initial values.
            nn.init.xavier_uniform_(self.weight_matrix)

        def forward(self, embedding):
            # embedding: (num_nodes=14, num_features=32)
            # Average over the nodes in every feature dimension:
            # torch.matmul(embedding, self.weight_matrix) => [14,32]
            # torch.mean(..., dim=0) => [32], i.e. a single row of 32 values
            global_context = torch.mean(torch.matmul(embedding, self.weight_matrix), dim=0)
            # [32] => [32]
            transformed_global = torch.tanh(global_context)
            # sigmoid_scores is the similarity (attention) score between each node and the whole graph
            # sigmoid_scores: (14, 1)
            # transformed_global.view(-1, 1): [32] => [32,1]
            # torch.mm() is a matrix product: [14,32] x [32,1] = [14,1]
            # Note: this does not follow the paper's notation exactly; the transpose is deferred to the next step
            sigmoid_scores = torch.sigmoid(torch.mm(embedding, transformed_global.view(-1, 1)))
            # representation: the graph embedding, shape (32, 1)
            # torch.t(embedding): [14,32] => [32,14]
            # torch.mm(): [32,14] x [14,1] => [32,1]
            representation = torch.mm(torch.t(embedding), sigmoid_scores)
            return representation

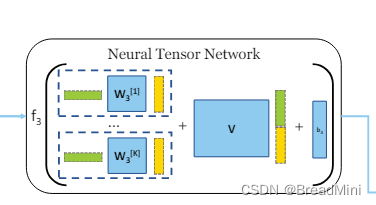

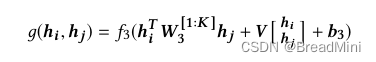
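To see the three steps of the attention module on numbers small enough to follow by hand, here is a pure-Python sketch of the same computation. It is an illustration under simplifying assumptions (plain lists instead of tensors, a fixed identity weight matrix instead of learned weights), and `attention_pool` is a hypothetical name.

```python
import math


def attention_pool(embedding, weight):
    """embedding: N rows of D floats; weight: D x D. Returns (graph vector, node scores)."""
    n, d = len(embedding), len(embedding[0])
    # Step 1: mean over nodes of (embedding @ weight), then tanh, gives the global context.
    context = []
    for j in range(d):
        column_sum = sum(
            sum(embedding[i][k] * weight[k][j] for k in range(d)) for i in range(n)
        )
        context.append(math.tanh(column_sum / n))
    # Step 2: one sigmoid score per node from its dot product with the context.
    scores = [
        1.0 / (1.0 + math.exp(-sum(u[k] * context[k] for k in range(d))))
        for u in embedding
    ]
    # Step 3: score-weighted sum of the node embeddings.
    pooled = [sum(scores[i] * embedding[i][k] for i in range(n)) for k in range(d)]
    return pooled, scores


identity = [[1.0, 0.0], [0.0, 1.0]]
nodes = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
pooled, scores = attention_pool(nodes, identity)
print(scores)
print(pooled)
```

In this toy input the third node points in the same direction as the global context, so it receives the largest score and contributes the most to the pooled vector.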

Tensor network layer:

The formula for the tensor network layer is as follows:
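The formula is missing at this point, so the following is a reconstruction from the TensorNetworkModule code in this section, offered as a reading aid rather than a quotation. The symbols are assumptions: h_1 and h_2 are the two graph embeddings of dimension D (`filters_3`), M is `weight_matrix` with K slices (`tensor_neurons`), V is `weight_matrix_block`, and b is `bias`.

```latex
g(h_1, h_2) = \mathrm{ReLU}\!\left(
    h_1^{\top} M^{[1:K]} h_2
    + V \begin{bmatrix} h_1 \\ h_2 \end{bmatrix}
    + b
\right),
\qquad
M \in \mathbb{R}^{D \times D \times K},\;
V \in \mathbb{R}^{K \times 2D},\;
b \in \mathbb{R}^{K}
```

The k-th entry of the bilinear term is h_1^T M_k h_2, where M_k is the k-th D×D slice of M, so the output is a vector of K interaction scores.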

    class TensorNetworkModule(nn.Module):
        def __init__(self, args):
            super(TensorNetworkModule, self).__init__()
            self.args = args
            self.setup_weights()
            self.init_parameters()

        def setup_weights(self):
            # tensor_neurons is the number of neurons (K)
            # 3-D weight tensor M
            self.weight_matrix = nn.Parameter(torch.Tensor(self.args.filters_3, self.args.filters_3, self.args.tensor_neurons))
            # Weight matrix V
            self.weight_matrix_block = nn.Parameter(torch.Tensor(self.args.tensor_neurons, 2*self.args.filters_3))
            # Bias vector b
            self.bias = nn.Parameter(torch.Tensor(self.args.tensor_neurons, 1))

        def init_parameters(self):
            nn.init.xavier_uniform_(self.weight_matrix)
            nn.init.xavier_uniform_(self.weight_matrix_block)
            nn.init.xavier_uniform_(self.bias)

        def forward(self, embedding_1, embedding_2):
            # This part computes h1^T * M * h2
            # embedding_1 = [32,1], transposed => [1,32]
            # M = [32,32,16], viewed as [32,512]
            # scoring = [1,32] x [32,512] = [1,512]
            scoring = torch.mm(torch.t(embedding_1), self.weight_matrix.view(self.args.filters_3, -1))
            # scoring = [32,16]
            scoring = scoring.view(self.args.filters_3, self.args.tensor_neurons)
            # scoring = [16,1]
            scoring = torch.mm(torch.t(scoring), embedding_2)
            # Stack the two embedding vectors, as in the paper => [64,1]
            combined_representation = torch.cat((embedding_1, embedding_2))
            # V * [h1; h2] => [16,1]
            block_scoring = torch.mm(self.weight_matrix_block, combined_representation)
            scores = F.relu(scoring + block_scoring + self.bias)
            return scores

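Understanding the tensor network:

Following the formula in the SimGNN paper, assume embedding_1 and embedding_2 both have shape [32,1], self.args.filters_3 = 32, and self.args.tensor_neurons = 16. Then:

    self.weight_matrix = [32,32,16]
    self.weight_matrix_block = [16,64]
    self.bias = [16,1]

Question 1: why is M a three-dimensional weight tensor? In this example M has shape [32,32,16], which means K = 16 separate 32×32 matrices. Each of them produces one bilinear score h1^T * M_k * h2, so the layer outputs 16 scores instead of one.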
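A direct, loop-based version makes the role of the K slices explicit. The sketch below computes the same quantity as the module's forward pass, but one slice at a time and with plain lists; it is an illustration with hand-picked weights, and `ntn_scores` is a hypothetical name.

```python
def ntn_scores(h1, h2, M, V, b):
    """h1, h2: length-D vectors; M: D x D x K nested list; V: K x 2D; b: length K."""
    d, k_count = len(h1), len(b)
    combined = h1 + h2  # list concatenation, i.e. the stacked vector [h1; h2]
    scores = []
    for k in range(k_count):
        # Bilinear term for slice k: h1^T M_k h2
        bilinear = sum(h1[i] * M[i][j][k] * h2[j] for i in range(d) for j in range(d))
        # Linear term: row k of V times [h1; h2]
        linear = sum(V[k][t] * combined[t] for t in range(2 * d))
        scores.append(max(0.0, bilinear + linear + b[k]))  # ReLU
    return scores


# D = 2, K = 2. Slice 0 of M is the identity matrix, slice 1 is all ones.
M = [
    [[1.0, 1.0], [0.0, 1.0]],
    [[0.0, 1.0], [1.0, 1.0]],
]
V = [[0.0] * 4, [0.0] * 4]
b = [0.0, 0.0]
result = ntn_scores([1.0, 2.0], [3.0, 4.0], M, V, b)
print(result)  # [11.0, 21.0]
```

With the identity slice the score is the plain dot product (1·3 + 2·4 = 11), and with the all-ones slice it is the product of the two sums (3 · 7 = 21), which shows how each slice captures a different kind of interaction between the two embeddings.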

Defining the SimGNN network model:

    class SimGNN(nn.Module):
        def __init__(self, args, num_nodes_id):
            super(SimGNN, self).__init__()
            self.args = args
            self.num_nodes_id = num_nodes_id
            self.setup_layers()

        # Input width of the first fully connected layer; depends on whether the histogram is used
        def calculate_bottleneck_features(self):
            if self.args.histogram == True:
                self.feature_count = self.args.tensor_neurons + self.args.bins
            else:
                self.feature_count = self.args.tensor_neurons

        def setup_layers(self):
            self.calculate_bottleneck_features()
            self.convolution_1 = GCNConv(self.num_nodes_id, self.args.filters_1)
            self.convolution_2 = GCNConv(self.args.filters_1, self.args.filters_2)
            self.convolution_3 = GCNConv(self.args.filters_2, self.args.filters_3)
            self.attention = AttentionModule(self.args)
            self.tensor_network = TensorNetworkModule(self.args)
            self.fully_connected_first = nn.Linear(self.feature_count, self.args.bottle_neck_neurons)
            self.scoring_layer = nn.Linear(self.args.bottle_neck_neurons, 1)

        def calculate_histogram(self, abstract_features_1, abstract_features_2):
            """
            Builds a histogram of pairwise node similarity scores,
            used as an extra measure of similarity between the two graphs.
            """
            # abstract_features_1: (num_nodes1, num_features=32)
            # abstract_features_2: (num_features=32, num_nodes2), already transposed by the caller
            # torch.mm gives the matrix of pairwise similarity scores.
            scores = torch.mm(abstract_features_1, abstract_features_2).detach()
            scores = scores.view(-1, 1)
            # Build the histogram by splitting the scores into `bins` intervals.
            # For example, with bins = 8: hist = tensor([4., 3., 1., 0., 0., 2., 1., 1.])
            hist = torch.histc(scores, bins=self.args.bins)  # number of scores in each interval
            # hist = tensor([0.3333, 0.2500, 0.0833, 0.0000, 0.0000, 0.1667, 0.0833, 0.0833])
            hist = hist / torch.sum(hist)  # normalize
            hist = hist.view(1, -1)
            return hist

        def convolutional_pass(self, edge_index, features):
            features = self.convolution_1(features, edge_index)
            features = F.relu(features)
            features = F.dropout(features, p=self.args.dropout, training=self.training)
            features = self.convolution_2(features, edge_index)
            features = F.relu(features)
            features = F.dropout(features, p=self.args.dropout, training=self.training)
            features = self.convolution_3(features, edge_index)
            return features

        def forward(self, data):
            # Edge index of graph 1
            edge_index_1 = data["edge_index_1"]
            # Edge index of graph 2
            edge_index_2 = data["edge_index_2"]
            # One-hot node features of both graphs
            features_1 = data["features_1"]  # (num_nodes1, num_nodes_id)
            features_2 = data["features_2"]  # (num_nodes2, num_nodes_id)
            # (num_nodes1, num_nodes_id) -> (num_nodes1, num_features=32)
            abstract_features_1 = self.convolutional_pass(edge_index_1, features_1)
            # (num_nodes2, num_nodes_id) -> (num_nodes2, num_features=32)
            abstract_features_2 = self.convolutional_pass(edge_index_2, features_2)
            # Histogram
            if self.args.histogram == True:
                hist = self.calculate_histogram(abstract_features_1, torch.t(abstract_features_2))
            # Pooling
            pooled_features_1 = self.attention(abstract_features_1)  # (num_nodes1, 32) -> (32, 1)
            pooled_features_2 = self.attention(abstract_features_2)  # (num_nodes2, 32) -> (32, 1)
            # NTN model
            scores = self.tensor_network(pooled_features_1, pooled_features_2)
            scores = torch.t(scores)
            if self.args.histogram == True:
                scores = torch.cat((scores, hist), dim=1).view(1, -1)
            scores = F.relu(self.fully_connected_first(scores))
            score = torch.sigmoid(self.scoring_layer(scores))
            return score
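The histogram feature in calculate_histogram can be imitated with plain Python to see what it measures. This is a rough sketch, not the library routine: it assumes equal-width bins between the smallest and largest score, with the maximum counted in the last bin, and it widens the range by one on each side when all scores are equal (an assumption made here only to avoid a zero bin width). `pairwise_histogram` is a hypothetical name.

```python
def pairwise_histogram(features_1, features_2, bins):
    """Normalized histogram of all node-to-node dot products between two graphs."""
    scores = [
        sum(a * b for a, b in zip(u, v)) for u in features_1 for v in features_2
    ]
    low, high = min(scores), max(scores)
    if low == high:
        # Degenerate range: widen it so the bin width is non-zero (assumption).
        low, high = low - 1.0, high + 1.0
    width = (high - low) / bins
    counts = [0] * bins
    for s in scores:
        index = min(int((s - low) / width), bins - 1)  # the maximum lands in the last bin
        counts[index] += 1
    total = sum(counts)
    return [c / total for c in counts]


graph_1_nodes = [[1.0, 0.0], [0.0, 1.0]]
graph_2_nodes = [[1.0, 0.0], [1.0, 1.0]]
hist = pairwise_histogram(graph_1_nodes, graph_2_nodes, bins=2)
print(hist)  # [0.25, 0.75]
```

The four pairwise scores here are 1, 1, 0 and 1, so one quarter of the mass falls in the low bin and three quarters in the high bin. Because the model detaches the scores before building the histogram, this feature adds node-level information to the final layers without passing gradients back through it.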

Full code:

    import glob, json
    import numpy as np
    import torch, os, math, random
    import torch.nn as nn
    import torch.nn.functional as F
    import argparse
    from tqdm import tqdm, trange
    from torch import optim
    from torch_geometric.nn import GCNConv
    from parameter import parameter_parser, IOStream, table_printer

    # These are the paths of the JSON files; each file holds one graph pair
    train_graphs = glob.glob('data/train/'+'*.json')
    test_graphs = glob.glob('data/test/'+'*.json')

    # Builds the label -> index table used for one-hot encoding
    # (see the walkthrough in the data processing section).
    def node_mapping():
        nodes_id = set()
        graph_pairs = train_graphs + test_graphs
        for graph_pair in graph_pairs:
            graph = json.load(open(graph_pair))
            nodes_id = nodes_id.union(set(graph['labels_1']))
            nodes_id = nodes_id.union(set(graph['labels_2']))
        nodes_id = sorted(nodes_id)
        nodes_id = {id:index for index,id in enumerate(nodes_id)}
        num_nodes_id = len(nodes_id)
        print(nodes_id, num_nodes_id)
        return nodes_id, num_nodes_id

    def load_dataset():
        train_dataset = []
        test_dataset = []
        nodes_id, num_nodes_id = node_mapping()
        for graph_pair in train_graphs:
            graph = json.load(open(graph_pair))
            data = process_data(graph, nodes_id)
            train_dataset.append(data)
        for graph_pair in test_graphs:
            graph = json.load(open(graph_pair))
            data = process_data(graph, nodes_id)
            test_dataset.append(data)
        return train_dataset, test_dataset, num_nodes_id

    # Converts one JSON graph pair into edge indices, one-hot features, norm_ged and target
    # (see the walkthrough in the data processing section).
    def process_data(graph, nodes_id):
        data = dict()
        # Take each graph's edge list and append the reverse of every edge
        edges_1 = graph['graph_1'] + [[y, x] for x, y in graph['graph_1']]
        edges_2 = graph['graph_2'] + [[y, x] for x, y in graph['graph_2']]
        edges_1 = torch.from_numpy(np.array(edges_1, dtype=np.int64).T).type(torch.long)
        edges_2 = torch.from_numpy(np.array(edges_2, dtype=np.int64).T).type(torch.long)
        data['edge_index_1'] = edges_1
        data['edge_index_2'] = edges_2
        feature_1, feature_2 = [], []
        for n in graph['labels_1']:
            feature_1.append([1.0 if nodes_id[n] == i else 0.0 for i in nodes_id.values()])
        for n in graph['labels_2']:
            feature_2.append([1.0 if nodes_id[n] == i else 0.0 for i in nodes_id.values()])
        feature_1 = torch.FloatTensor(np.array(feature_1))
        feature_2 = torch.FloatTensor(np.array(feature_2))
        data['features_1'] = feature_1
        data['features_2'] = feature_2
        norm_ged = graph['ged'] / (0.5 * (len(graph['labels_1']) + len(graph['labels_2'])))
        data['norm_ged'] = norm_ged
        data["target"] = torch.from_numpy(np.exp(-norm_ged).reshape(1, 1)).view(-1).float().unsqueeze(0)
        return data

    # Attention module
    class AttentionModule(nn.Module):
        def __init__(self, args):
            super(AttentionModule, self).__init__()
            self.args = args
            self.setup_weights()
            self.init_parameters()

        def setup_weights(self):
            # Create the weight matrix (values not yet initialized); filters_3 = 32
            self.weight_matrix = nn.Parameter(torch.Tensor(self.args.filters_3, self.args.filters_3))

        def init_parameters(self):
            # Fill the weight matrix with initial values.
            nn.init.xavier_uniform_(self.weight_matrix)

        def forward(self, embedding):
            # embedding: (num_nodes=14, num_features=32)
            # Mean over nodes of embedding @ weight_matrix: [14,32] => [32]
            global_context = torch.mean(torch.matmul(embedding, self.weight_matrix), dim=0)
            # [32] => [32]
            transformed_global = torch.tanh(global_context)
            # Attention score of every node against the whole graph: [14,32] x [32,1] = [14,1]
            # Note: this does not follow the paper's notation exactly; the transpose is deferred to the next step
            sigmoid_scores = torch.sigmoid(torch.mm(embedding, transformed_global.view(-1, 1)))
            # Graph embedding: [32,14] x [14,1] => [32,1]
            representation = torch.mm(torch.t(embedding), sigmoid_scores)
            return representation

    # Tensor network module: 16 bilinear scores from M = [32,32,16], plus V = [16,64] and b = [16,1]
    # (see the tensor network section).
    class TensorNetworkModule(nn.Module):
        def __init__(self, args):
            super(TensorNetworkModule, self).__init__()
            self.args = args
            self.setup_weights()
            self.init_parameters()

        def setup_weights(self):
            # tensor_neurons is the number of neurons (K)
            # 3-D weight tensor M
            self.weight_matrix = nn.Parameter(torch.Tensor(self.args.filters_3, self.args.filters_3, self.args.tensor_neurons))
            # Weight matrix V
            self.weight_matrix_block = nn.Parameter(torch.Tensor(self.args.tensor_neurons, 2*self.args.filters_3))
            # Bias vector b
            self.bias = nn.Parameter(torch.Tensor(self.args.tensor_neurons, 1))

        def init_parameters(self):
            nn.init.xavier_uniform_(self.weight_matrix)
            nn.init.xavier_uniform_(self.weight_matrix_block)
            nn.init.xavier_uniform_(self.bias)

        def forward(self, embedding_1, embedding_2):
            # This part computes h1^T * M * h2
            # [1,32] x [32,512] = [1,512]
            scoring = torch.mm(torch.t(embedding_1), self.weight_matrix.view(self.args.filters_3, -1))
            # [1,512] => [32,16]
            scoring = scoring.view(self.args.filters_3, self.args.tensor_neurons)
            # [16,32] x [32,1] => [16,1]
            scoring = torch.mm(torch.t(scoring), embedding_2)
            # Stack the two embedding vectors, as in the paper => [64,1]
            combined_representation = torch.cat((embedding_1, embedding_2))
            # V * [h1; h2] => [16,1]
            block_scoring = torch.mm(self.weight_matrix_block, combined_representation)
            scores = F.relu(scoring + block_scoring + self.bias)
            return scores

    class SimGNN(nn.Module):
        def __init__(self, args, num_nodes_id):
            super(SimGNN, self).__init__()
            self.args = args
            self.num_nodes_id = num_nodes_id
            self.setup_layers()

        # Input width of the first fully connected layer; depends on whether the histogram is used
        def calculate_bottleneck_features(self):
            if self.args.histogram == True:
                self.feature_count = self.args.tensor_neurons + self.args.bins
            else:
                self.feature_count = self.args.tensor_neurons

        def setup_layers(self):
            self.calculate_bottleneck_features()
            self.convolution_1 = GCNConv(self.num_nodes_id, self.args.filters_1)
            self.convolution_2 = GCNConv(self.args.filters_1, self.args.filters_2)
            self.convolution_3 = GCNConv(self.args.filters_2, self.args.filters_3)
            self.attention = AttentionModule(self.args)
            self.tensor_network = TensorNetworkModule(self.args)
            self.fully_connected_first = nn.Linear(self.feature_count, self.args.bottle_neck_neurons)
            self.scoring_layer = nn.Linear(self.args.bottle_neck_neurons, 1)

        def calculate_histogram(self, abstract_features_1, abstract_features_2):
            """
            Builds a histogram of pairwise node similarity scores,
            used as an extra measure of similarity between the two graphs.
            """
            # abstract_features_1: (num_nodes1, num_features=32)
            # abstract_features_2: (num_features=32, num_nodes2), already transposed by the caller
            scores = torch.mm(abstract_features_1, abstract_features_2).detach()
            scores = scores.view(-1, 1)
            # Split the scores into `bins` intervals and count the scores in each one
            hist = torch.histc(scores, bins=self.args.bins)
            hist = hist / torch.sum(hist)  # normalize
            hist = hist.view(1, -1)
            return hist

        def convolutional_pass(self, edge_index, features):
            features = self.convolution_1(features, edge_index)
            features = F.relu(features)
            features = F.dropout(features, p=self.args.dropout, training=self.training)
            features = self.convolution_2(features, edge_index)
            features = F.relu(features)
            features = F.dropout(features, p=self.args.dropout, training=self.training)
            features = self.convolution_3(features, edge_index)
            return features

        def forward(self, data):
            # Edge indices of graph 1 and graph 2
            edge_index_1 = data["edge_index_1"]
            edge_index_2 = data["edge_index_2"]
            # One-hot node features of both graphs
            features_1 = data["features_1"]  # (num_nodes1, num_nodes_id)
            features_2 = data["features_2"]  # (num_nodes2, num_nodes_id)
            # (num_nodes, num_nodes_id) -> (num_nodes, num_features=32)
            abstract_features_1 = self.convolutional_pass(edge_index_1, features_1)
            abstract_features_2 = self.convolutional_pass(edge_index_2, features_2)
            # Histogram
            if self.args.histogram == True:
                hist = self.calculate_histogram(abstract_features_1, torch.t(abstract_features_2))
            # Pooling: (num_nodes, 32) -> (32, 1)
            pooled_features_1 = self.attention(abstract_features_1)
            pooled_features_2 = self.attention(abstract_features_2)
            # NTN model
            scores = self.tensor_network(pooled_features_1, pooled_features_2)
            scores = torch.t(scores)
            if self.args.histogram == True:
                scores = torch.cat((scores, hist), dim=1).view(1, -1)
            scores = F.relu(self.fully_connected_first(scores))
            score = torch.sigmoid(self.scoring_layer(scores))
            return score

    def exp_init():
        """Experiment setup."""
        if not os.path.exists('outputs'):
            os.mkdir('outputs')
        if not os.path.exists('outputs/' + args.exp_name):
            os.mkdir('outputs/' + args.exp_name)
        # Back up the scripts used for this run; on Windows this uses the copy command (note the double-quoted strings)
        os.system(f"copy main.py outputs\\{args.exp_name}\\main.py.backup")
        os.system(f"copy data.py outputs\\{args.exp_name}\\data.py.backup")
        os.system(f"copy model.py outputs\\{args.exp_name}\\model.py.backup")
        os.system(f"copy parameter.py outputs\\{args.exp_name}\\parameter.py.backup")

    def train(args, IO, train_dataset, num_nodes_id):
        # Use the GPU or the CPU
        device = torch.device('cpu' if args.gpu_index < 0 else 'cuda:{}'.format(args.gpu_index))
        if args.gpu_index < 0:
            IO.cprint('Using CPU')
        else:
            IO.cprint('Using GPU: {}'.format(args.gpu_index))
            torch.cuda.manual_seed(args.seed)  # set the PyTorch GPU random seed
        # Build the model and count its trainable parameters
        model = SimGNN(args, num_nodes_id).to(device)
        IO.cprint(str(model))
        total_params = sum(p.numel() for p in model.parameters() if p.requires_grad)
        IO.cprint('Model Parameter: {}'.format(total_params))
        # Optimizer
        optimizer = optim.Adam(model.parameters(), lr=args.learning_rate, weight_decay=args.weight_decay)
        IO.cprint('Using Adam')
        epochs = trange(args.epochs, leave=True, desc="Epoch")
        for epoch in epochs:
            random.shuffle(train_dataset)
            # Split the shuffled data into batches of 16 graph pairs
            train_batches = []
            for graph in range(0, len(train_dataset), 16):
                train_batches.append(train_dataset[graph:graph + 16])
            loss_epoch = 0  # summed loss over all samples in this epoch
            for index, batch in tqdm(enumerate(train_batches), total=len(train_batches), desc="Train_Batches"):
                optimizer.zero_grad()
                loss_batch = 0  # summed loss over the samples in this batch
                for data in batch:
                    # Move the tensors to the selected device
                    data["edge_index_1"], data["edge_index_2"] = data["edge_index_1"].to(device), data["edge_index_2"].to(device)
                    data["features_1"], data["features_2"] = data["features_1"].to(device), data["features_2"].to(device)
                    prediction = model(data)
                    loss_batch = loss_batch + F.mse_loss(data["target"], prediction.cpu())
                loss_epoch = loss_epoch + loss_batch.item()
                loss_batch.backward()
                optimizer.step()
            IO.cprint('Epoch #{}, Train_Loss: {:.6f}'.format(epoch, loss_epoch/len(train_dataset)))
        torch.save(model, 'outputs/%s/model.pth' % args.exp_name)
        IO.cprint('The current best model is saved in: {}'.format('******** outputs/%s/model.pth *********' % args.exp_name))

    def test(args, IO, test_dataset):
        """Test the model."""
        device = torch.device('cpu' if args.gpu_index < 0 else 'cuda:{}'.format(args.gpu_index))
        # The output is appended to the training log
        IO.cprint('********** TEST START **********')
        IO.cprint('Reload Best Model')
        IO.cprint('The current best model is saved in: {}'.format('******** outputs/%s/model.pth *********' % args.exp_name))
        model = torch.load('outputs/%s/model.pth' % args.exp_name).to(device)
        # Switch to evaluation mode so that dropout is disabled during testing
        # (eval() changes the model in place and returns the same object).
        model.eval()
        ground_truth = []  # holds data["norm_ged"]
        scores = []  # holds the squared error between the prediction and the ground truth
        for data in test_dataset:
            data["edge_index_1"], data["edge_index_2"] = data["edge_index_1"].to(device), data["edge_index_2"].to(device)
            data["features_1"], data["features_2"] = data["features_1"].to(device), data["features_2"].to(device)
            prediction = model(data)
            # -log(prediction) converts the predicted similarity back to a norm_ged estimate
            scores.append((-math.log(prediction.item()) - data["norm_ged"]) ** 2)  # squared error
            ground_truth.append(data["norm_ged"])
        model_error = np.mean(scores)
        norm_ged_mean = np.mean(ground_truth)
        # Baseline: always predict the mean norm_ged
        baseline_error = np.mean([(gt - norm_ged_mean) ** 2 for gt in ground_truth])
        IO.cprint('Baseline_Error: {:.6f}, Model_Test_Error: {:.6f}'.format(baseline_error, model_error))

    args = parameter_parser()
    random.seed(args.seed)  # set the Python random seed
    torch.manual_seed(args.seed)  # set the PyTorch random seed
    exp_init()
    IO = IOStream('outputs/' + args.exp_name + '/run.log')
    IO.cprint(str(table_printer(args)))  # print the parameters as a table
    train_dataset, test_dataset, num_nodes_id = load_dataset()
    train(args, IO, train_dataset, num_nodes_id)
    test(args, IO, test_dataset)
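The error measure in test() can be tried out on its own. The sketch below follows the same arithmetic with the standard library only; `evaluate` is a hypothetical helper, and the predictions are made-up values chosen so that the model is exactly right.

```python
import math


def evaluate(predictions, norm_geds):
    """Return (model_error, baseline_error) as mean squared errors in norm_ged space."""
    # The model predicts exp(-norm_ged), so -log(prediction) recovers a norm_ged estimate.
    model_errors = [(-math.log(p) - g) ** 2 for p, g in zip(predictions, norm_geds)]
    # Baseline: always predict the mean norm_ged of the test set.
    mean_ged = sum(norm_geds) / len(norm_geds)
    baseline_errors = [(g - mean_ged) ** 2 for g in norm_geds]
    return (
        sum(model_errors) / len(model_errors),
        sum(baseline_errors) / len(baseline_errors),
    )


norm_geds = [0.5, 1.0, 1.5]
perfect_predictions = [math.exp(-g) for g in norm_geds]
model_error, baseline_error = evaluate(perfect_predictions, norm_geds)
print(model_error, baseline_error)
```

The baseline error is just the variance of the true norm_ged values, so a trained model is only useful if its test error comes out clearly below that number.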

Thanks to the teachers on Bilibili. I have not gone through the training code yet; that is for tomorrow!