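I was recently asked to sync table data between databases. There are plenty of sync tools on the market and I did not try them all; I trust Alibaba, so I looked into Alibaba's DataX. I'll take it one step at a time, mainly as a learning exercise.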

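Environment: Linux 6.8 (an EL6 system, judging by the el6 kernel string in the log below)

Python 2.7 (the version bundled with the system)

MySQL 5.7.1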
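Before downloading anything, it can help to confirm that the tools the following steps rely on are actually on the PATH. This is only a minimal sketch: the helper name check_prereqs is made up for illustration, and the tool list in the usage note (java, python, wget, tar) is my reading of what the steps and the log output depend on, not an official requirements list.

```shell
#!/bin/bash
# Hypothetical helper: report every named tool that is not on the PATH.
# Returns 0 when all tools are found, 1 otherwise.
check_prereqs() {
    local missing=0 tool
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

A typical call would be check_prereqs java python wget tar, run once before step 1.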

1. Download: wget http://datax-opensource.oss-cn-hangzhou.aliyuncs.com/datax.tar.gz

2. Extract: tar -zxvf datax.tar.gz -C /usr/local/

3. Set permissions: chmod -R 755 /usr/local/datax/*

4. Enter the bin directory: # cd /usr/local/datax/bin

5. Run the bundled self-test job: python datax.py ../job/job.json

The output looks like this:

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

2019-05-17 02:40:26.101 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2019-05-17 02:40:26.138 [main] INFO Engine - the machine info =>

osInfo: Oracle Corporation 1.8 25.91-b14

jvmInfo: Linux amd64 2.6.32-642.el6.x86_64

cpu num: 2

totalPhysicalMemory: -0.00G

freePhysicalMemory: -0.00G

maxFileDescriptorCount: -1

currentOpenFileDescriptorCount: -1

GC Names [PS MarkSweep, PS Scavenge]

MEMORY_NAME | allocation_size | init_size

PS Eden Space | 256.00MB | 256.00MB

Code Cache | 240.00MB | 2.44MB

Compressed Class Space | 1,024.00MB | 0.00MB

PS Survivor Space | 42.50MB | 42.50MB

PS Old Gen | 683.00MB | 683.00MB

Metaspace | -0.00MB | 0.00MB

2019-05-17 02:40:26.204 [main] INFO Engine -

{

"content":[

{

"reader":{

"name":"streamreader",

"parameter":{

"column":[

{

"type":"string",

"value":"DataX"

},

{

"type":"long",

"value":19890604

},

{

"type":"date",

"value":"1989-06-04 00:00:00"

},

{

"type":"bool",

"value":true

},

{

"type":"bytes",

"value":"test"

}

],

"sliceRecordCount":100000

}

},

"writer":{

"name":"streamwriter",

"parameter":{

"encoding":"UTF-8",

"print":false

}

}

}

],

"setting":{

"errorLimit":{

"percentage":0.02,

"record":0

},

"speed":{

"byte":10485760

}

}

}

2019-05-17 02:40:26.254 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2019-05-17 02:40:26.257 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2019-05-17 02:40:26.257 [main] INFO JobContainer - DataX jobContainer starts job.

2019-05-17 02:40:26.271 [main] INFO JobContainer - Set jobId = 0

2019-05-17 02:40:26.321 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2019-05-17 02:40:26.325 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do prepare work .

2019-05-17 02:40:26.327 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do prepare work .

2019-05-17 02:40:26.327 [job-0] INFO JobContainer - jobContainer starts to do split ...

2019-05-17 02:40:26.332 [job-0] INFO JobContainer - Job set Max-Byte-Speed to 10485760 bytes.

2019-05-17 02:40:26.335 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] splits to [1] tasks.

2019-05-17 02:40:26.340 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] splits to [1] tasks.

2019-05-17 02:40:26.393 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2019-05-17 02:40:26.401 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2019-05-17 02:40:26.408 [job-0] INFO JobContainer - Running by standalone Mode.

2019-05-17 02:40:26.462 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2019-05-17 02:40:26.468 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2019-05-17 02:40:26.474 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2019-05-17 02:40:26.535 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

2019-05-17 02:40:27.036 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[521]ms

2019-05-17 02:40:27.037 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2019-05-17 02:40:36.474 [job-0] INFO StandAloneJobContainerCommunicator - Total 100000 records, 2600000 bytes | Speed 253.91KB/s, 10000 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.021s | All Task WaitReaderTime 0.185s | Percentage 100.00%

2019-05-17 02:40:36.474 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2019-05-17 02:40:36.475 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do post work.

2019-05-17 02:40:36.475 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do post work.

2019-05-17 02:40:36.475 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2019-05-17 02:40:36.477 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /usr/local/datax/hook

2019-05-17 02:40:36.478 [job-0] INFO JobContainer -

[total cpu info] =>

averageCpu | maxDeltaCpu | minDeltaCpu

-1.00% | -1.00% | -1.00%

[total gc info] =>

NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime

PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

PS Scavenge | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

2019-05-17 02:40:36.478 [job-0] INFO JobContainer - PerfTrace not enable!

2019-05-17 02:40:36.479 [job-0] INFO StandAloneJobContainerCommunicator - Total 100000 records, 2600000 bytes | Speed 253.91KB/s, 10000 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.021s | All Task WaitReaderTime 0.185s | Percentage 100.00%

2019-05-17 02:40:36.480 [job-0] INFO JobContainer -

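任务启动时刻 (job start time) : 2019-05-17 02:40:26

任务结束时刻 (job end time) : 2019-05-17 02:40:36

任务总计耗时 (total elapsed time) : 10s

任务平均流量 (average throughput) : 253.91KB/s

记录写入速度 (record write speed) : 10000rec/s

读出记录总数 (total records read) : 100000

读写失败总数 (total read/write failures) : 0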
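The summary figures can be reproduced by hand: 2600000 bytes in 10 s is 260000 bytes/s, which is about 253.91 KB/s at 1024 bytes per KB, and 100000 records in 10 s is 10000 records/s. The sketch below does the same arithmetic with awk; the function name avg_kb_per_sec is hypothetical and only there for illustration.

```shell
#!/bin/bash
# Hypothetical helper: average throughput in KB/s (1 KB = 1024 bytes),
# given the total byte count and the elapsed time in seconds.
avg_kb_per_sec() {
    awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%.2f\n", bytes / secs / 1024 }'
}

# Values taken from the job summary in the log: 2600000 bytes, 10 s.
avg_kb_per_sec 2600000 10   # prints 253.91
```

This is a quick sanity check for later runs as well: if the reported speed and the totals disagree badly, the elapsed time probably includes a long startup or wait phase.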

With the self-test passing, you can go into the job directory and write the JSON for your own table sync:

{

"job": {

"setting": {

"speed": {

"byte": 1048576,

"channel":"5",

}

},

"content": [

{

"reader": {

//name不能随便改

"name": "mysqlreader",

"parameter": {

"username": "root",

"password": "123456",

"column": ["*"],

"connection": [

{

"table": ["sys_user"],

"jdbcUrl": ["jdbc:mysql://ip:3306/bdc_asis?useUnicode=true&characterEncoding=utf8"]

}

]

}

},

"writer": {

//测试了一下name不能随便改

"name": "mysqlwriter",

"parameter": {

"writeMode": "replace into",

"username": "root",

"password": "1111",

"column":["*"],

"connection": [

{

"table": ["sys_user"],

"jdbcUrl": "jdbc:mysql://ip:3306/bdc_asis?useUnicode=true&characterEncoding=utf8"

}

]

}

}

}

]

}

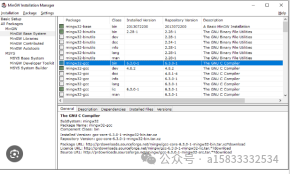

}有几点需要注意:1.账户密码不能错。2.这个是mysql到mysql,不需要引用其他的插件,如果你是sqlserver或者oracle需要自己下载一下插件或者查一下资料。sqlsercer插件sqljdbc_6.0.8112.100_enu.tar.gz驱动:wget https://download.microsoft.com/download/0/2/A/02AAE597-3865-456C-AE7F-613F99F850A8/enu/sqljdbc_6.0.8112.100_enu.tar.gz

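Extract the archive, move sqljdbc42.jar into /usr/local/datax/lib, and set its permissions (the mv path is relative to the directory where the archive was extracted):

# tar -xf sqljdbc_6.0.8112.100_enu.tar.gz

# mv sqljdbc_6.0/enu/jre8/sqljdbc42.jar /usr/local/datax/lib/

# chmod 755 /usr/local/datax/lib/sqljdbc42.jar

Also note that the database account must be allowed to connect remotely. If you hit java.sql.SQLException: null, message from server: "Host 'xxxxx' is not allowed to connect", it almost always means remote access has not been granted.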

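Log in to MySQL and run the following, one statement at a time:

use mysql;

show tables;

select host from user;

update user set host = '%' where user = 'root';

Whatever you do, do not forget to reload the privileges afterwards. Because the user table was edited directly, the change does not take effect until you run:

FLUSH PRIVILEGES;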
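Opening root to every host works, but a narrower alternative is a dedicated account that can only touch the synced database. The sketch below just prints the SQL so it can be reviewed first. Everything in it is an assumption rather than part of the original setup: the helper name datax_grant_sql, the account name, the password, and the privilege list (SELECT for reading, INSERT and DELETE because MySQL's REPLACE needs both). Statements such as CREATE USER and GRANT take effect immediately, so no FLUSH PRIVILEGES is needed after them.

```shell
#!/bin/bash
# Hypothetical helper: print SQL that creates a dedicated sync account.
# Arguments: account name, password, database name (all placeholders).
datax_grant_sql() {
    local user="$1" password="$2" db="$3"
    cat <<EOF
CREATE USER '${user}'@'%' IDENTIFIED BY '${password}';
GRANT SELECT, INSERT, DELETE ON ${db}.* TO '${user}'@'%';
EOF
}
```

After reviewing the output, it could be piped into the client, for example: datax_grant_sql datax_sync 'change-me' bdc_asis | mysql -u root -p. Replacing '%' with the address of the DataX machine would restrict access further.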

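A note on scheduling: we will probably have many JSON job files to run, and writing a separate scheduled script for each one is clumsy. It is more convenient to write a single shell script that launches all of the jobs: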

#!/bin/bash
# This line is required: it loads your environment variables, because cron starts with a minimal environment
source /etc/profile
# Launch each job in the background, appending its output to a per-job log file named by date
/usr/local/datax/bin/datax.py /usr/local/jobs/sys_user1.json >> /usr/local/datax_log/sys_user1.$(date +%Y%m%d) 2>&1 &
/usr/local/datax/bin/datax.py /usr/local/jobs/sys_user2.json >> /usr/local/datax_log/sys_user2.$(date +%Y%m%d) 2>&1 &
/usr/local/datax/bin/datax.py /usr/local/jobs/sys_user3.json >> /usr/local/datax_log/sys_user3.$(date +%Y%m%d) 2>&1 &
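As the number of job files grows, the per-job lines can be replaced by a loop over the job directory. The following is a sketch under stated assumptions, not the script I actually run: the function name run_all_jobs and the JOB_DIR, LOG_DIR, DATAX and PYTHON variables are hypothetical, with defaults taken from the paths used above. It also skips any file that python -m json.tool rejects, which is a strict JSON check and may be stricter than DataX's own parser.

```shell
#!/bin/bash
# Hypothetical defaults; override them to match your own layout.
JOB_DIR="${JOB_DIR:-/usr/local/jobs}"
LOG_DIR="${LOG_DIR:-/usr/local/datax_log}"
DATAX="${DATAX:-/usr/local/datax/bin/datax.py}"
PYTHON="${PYTHON:-python}"

run_all_jobs() {
    local job name
    for job in "$JOB_DIR"/*.json; do
        [ -e "$job" ] || continue   # no match: the glob pattern is left as-is
        name=$(basename "$job" .json)
        # Skip files that are not well-formed JSON, e.g. after a stray comma.
        if ! "$PYTHON" -m json.tool "$job" >/dev/null 2>&1; then
            echo "skipping invalid job file: $job" >&2
            continue
        fi
        # One background process and one dated log file per job.
        # $DATAX is deliberately unquoted so it may hold a command plus arguments.
        $DATAX "$job" >> "$LOG_DIR/$name.$(date +%Y%m%d)" 2>&1 &
    done
    wait   # return only after every launched job has exited
}
```

Calling run_all_jobs after source /etc/profile would then pick up every *.json file in the job directory. Unlike the script above, this version waits for the jobs to finish before it returns.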

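Then add a scheduled task:

$ crontab -e

# run the script once a minute

*/1 * * * * /usr/local/dataX3.0.sh >/dev/null 2>&1

I run the script once a minute. Be sure to redirect the output as shown: cron mails the output of every run to the user, and over time that mail piles up until the system blows up.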
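With a one-minute schedule, a run can still be in progress when the next one starts (even the self-test job took 10 s). One common guard, which is not part of my setup above, is the flock utility from util-linux. The sketch below is an assumption-laden illustration: run_exclusive is a made-up helper name, and the lock file path in the usage note is a placeholder.

```shell
#!/bin/bash
# Hypothetical helper: run a command only if nobody else holds the lock.
# Usage: run_exclusive LOCK_FILE COMMAND [ARGS...]
# Returns 99 without running the command when the lock is already taken.
run_exclusive() {
    local lock_file="$1"
    shift
    (
        # Take a non-blocking exclusive lock on file descriptor 9.
        flock -n 9 || exit 99
        "$@"
    ) 9>"$lock_file"
}
```

The cron script could then call something like run_exclusive /tmp/datax_sync.lock /usr/local/dataX3.0.sh. The lock stays held while the command, or any background process that inherited descriptor 9 from it, is still running, so backgrounded jobs also block the next run.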