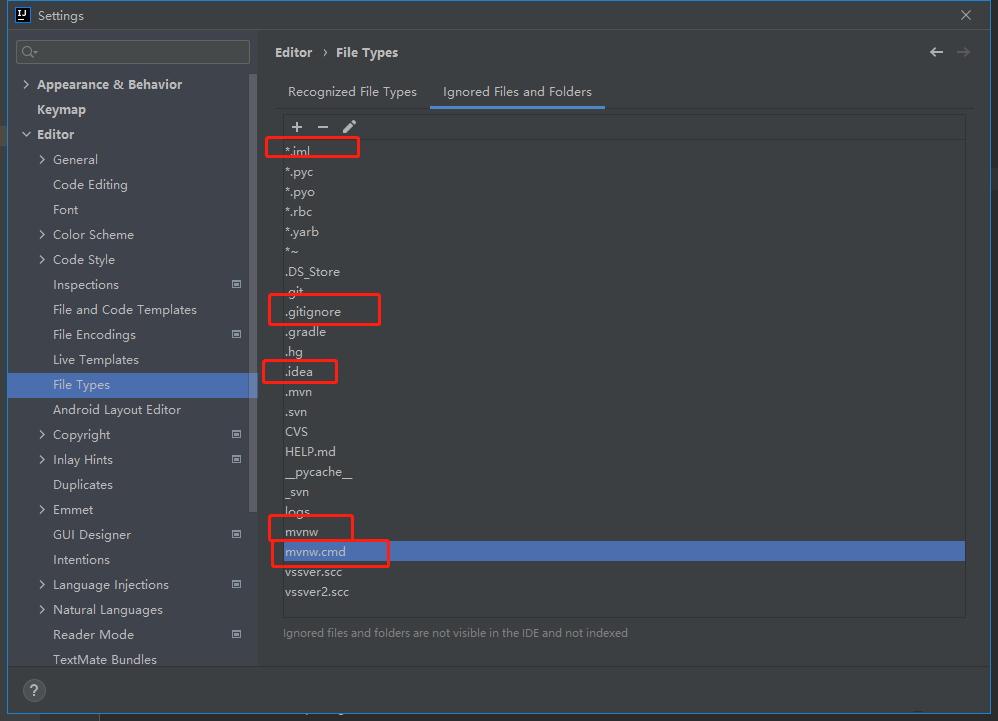

1. 学习率对模型训练的影响

python 代码:

import numpy as np

from keras.models import Sequential

from keras.layers import Dense

from keras.optimizers import Adam

import matplotlib.pyplot as plt

# 生成随机数据集

np.random.seed(0)

X_train = np.random.rand(1000, 10)

y_train = np.random.randint(2, size=(1000, 1))

# 不同的初始学习率

lrs = [0.00001,0.0001,0.001, 0.01, 0.1]

# 训练多个模型并记录结果

histories = []

for lr in lrs:

# 创建模型

model = Sequential()

model.add(Dense(64, activation='relu', input_dim=10))

model.add(Dense(1, activation='sigmoid'))

# 编译模型

optimizer = Adam(learning_rate=lr)

model.compile(optimizer=optimizer, loss='binary_crossentropy', metrics=['accuracy'])

# 训练模型

history = model.fit(X_train, y_train, epochs=100, batch_size=32, verbose=0)

histories.append(history)

# 可视化结果

plt.figure(figsize=(15, 4))

for i, history in enumerate(histories):

plt.subplot(1, len(histories), i+1)

plt.plot(history.history['loss'], label='lr={}'.format(lrs[i]))

plt.title('Training Loss')

plt.xlabel('Epoch')

plt.legend()

plt.show()

plt.figure(figsize=(15, 4))

for i, history in enumerate(histories):

plt.subplot(1, len(histories), i+1)

plt.plot(history.history['accuracy'], label='lr={}'.format(lrs[i]))

plt.title('Training Accuracy')

plt.xlabel('Epoch')

plt.legend()

plt.show()

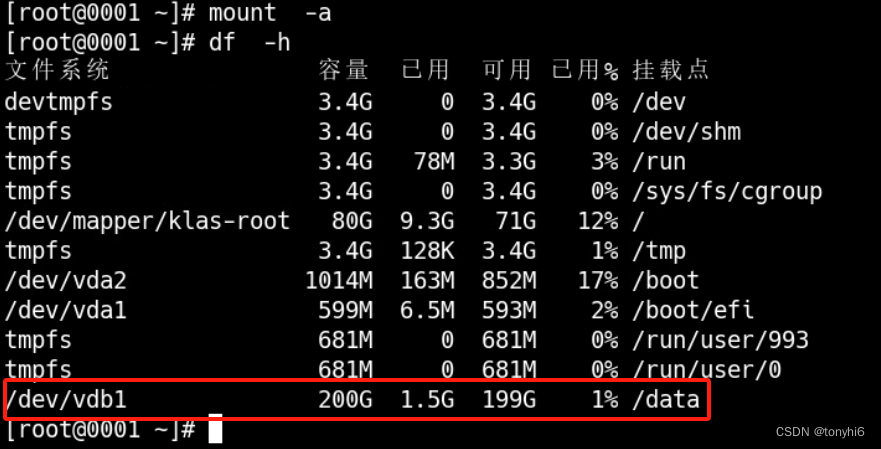

运行结果:

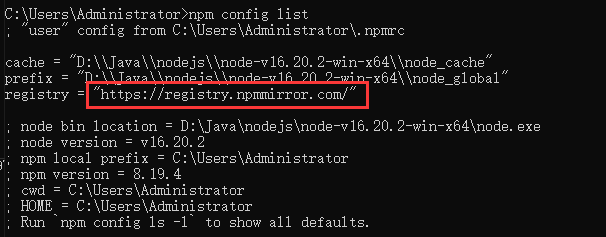

2. 正则化系数对模型训练的影响

python代码:

import tensorflow as tf

from tensorflow import keras

import numpy as np

import matplotlib.pyplot as plt

(train_images, train_labels), (test_images, test_labels) = keras.datasets.mnist.load_data()

train_images = train_images.reshape((60000, 28 * 28))

train_images = train_images.astype('float32') / 255

test_images = test_images.reshape((10000, 28 * 28))

test_images = test_images.astype('float32') / 255

# 定义不同的正则化系数

l2s = [0.00001,0.0001,0.001, 0.01, 0.1]

# 训练多个模型并记录结果

histories = []

for l2 in l2s:

# 创建模型

model = keras.Sequential([

keras.layers.Dense(128, activation='relu', kernel_regularizer=keras.regularizers.l2(l2)),

keras.layers.Dense(128, activation='relu', kernel_regularizer=keras.regularizers.l2(l2)),

keras.layers.Dense(10, activation='softmax')

])

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

# history = model.fit(train_images, train_labels, epochs=80, batch_size=64,

# validation_data=(test_images, test_labels))

tensorboard_callback = keras.callbacks.TensorBoard(log_dir='./logs', histogram_freq=1)

history = model.fit(train_images, train_labels, epochs=120, batch_size=64,

validation_data=(test_images, test_labels), callbacks=[tensorboard_callback])

histories.append(history)

plt.figure(figsize=(20, 4))

for i, history in enumerate(histories):

plt.subplot(1, len(histories), i+1)

plt.plot(history.history['accuracy'], label='l2={}-train'.format(l2s[i]))

plt.plot(history.history['val_accuracy'],label='l2={}-val'.format(l2s[i]))

plt.title('Accuracy')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

代码运算结果:

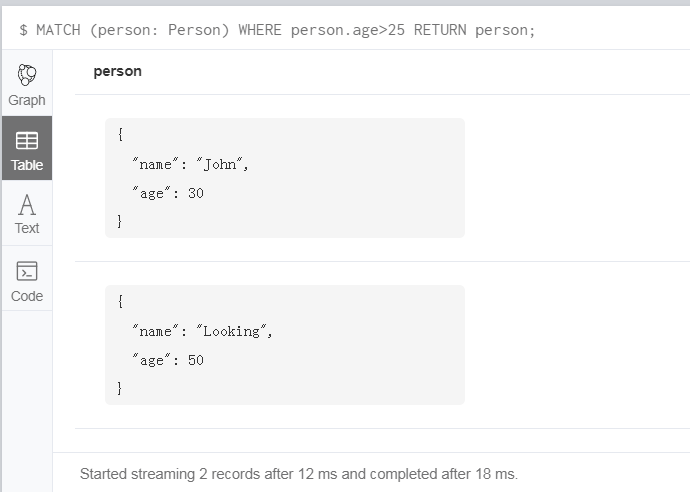

3. 神经元数量

import numpy as np

import matplotlib.pyplot as plt

from keras.datasets import mnist

from keras.models import Sequential

from keras.layers import Dense

# 加载MNIST数据集

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train = x_train.astype('float32') / 255.

x_test = x_test.astype('float32') / 255.

def create_model(num_neurons):

model = Sequential()

model.add(Dense(num_neurons, activation='relu', input_shape=(784,)))

model.add(Dense(10, activation='softmax'))

model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

return model

def train_and_evaluate_model(model, x_train, y_train, x_test, y_test, num_epochs=10):

history = model.fit(x_train, y_train, epochs=num_epochs, validation_data=(x_test, y_test), verbose=0)

_, accuracy = model.evaluate(x_test, y_test, verbose=0)

return accuracy, history.history['loss'], history.history['val_loss']

def main():

neuron_counts = [16, 32, 64, 128, 256]

accuracies = []

losses = []

val_losses = []

for num_neurons in neuron_counts:

model = create_model(num_neurons)

accuracy, loss, val_loss = train_and_evaluate_model(model, x_train.reshape(-1, 784), y_train,

x_test.reshape(-1, 784), y_test)

accuracies.append(accuracy)

losses.append(loss)

val_losses.append(val_loss)

# 可视化结果

plt.figure(figsize=(12, 6))

plt.subplot(121)

plt.plot(neuron_counts, accuracies, 'o-')

plt.xlabel('Number of neurons')

plt.ylabel('Accuracy')

plt.title('Model accuracy vs number of neurons')

plt.subplot(122)

for i, num_neurons in enumerate(neuron_counts):

plt.plot(np.arange(1, 11), losses[i], label=f'{num_neurons} neurons')

plt.xlabel('Epochs')

plt.ylabel('Training loss')

plt.title('Training loss vs number of epochs')

plt.legend()

plt.tight_layout()

plt.show()

main()

运算结果:

![内存拆解分析表:学习版[图片]](https://img-blog.csdnimg.cn/direct/7db7069eeb7f43958d8341ce74fa3e43.png)