I. System preparation before installing Oracle RAC

Check the required packages

pkginfo -i SUNWarc SUNWbtool SUNWhea SUNWlibC SUNWlibm SUNWlibms SUNWsprot SUNWtoo

pkg install SUNWarc SUNWbtool SUNWhea SUNWlibC SUNWlibm SUNWlibms SUNWsprot SUNWtoo
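A small loop over `pkginfo -q` shows which of these packages are still missing. `pkginfo -q` prints nothing and only sets the exit status. `missing_packages` and the `PKGINFO` override are hypothetical helpers written for this sketch. They are not Solaris tools.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which required SVR4 packages are not installed.
# pkginfo -q prints nothing and only sets the exit status (0 = installed).
# PKGINFO may be overridden (e.g. for a dry run); it defaults to pkginfo.
missing_packages() {
  local pkginfo_cmd=${PKGINFO:-pkginfo}
  local pkg missing=()
  for pkg in "$@"; do
    "$pkginfo_cmd" -q "$pkg" >/dev/null 2>&1 || missing+=("$pkg")
  done
  # Print the missing packages on one line; empty output means all present.
  echo "${missing[*]}"
  [ "${#missing[@]}" -eq 0 ]
}
```

Example on a node: `missing_packages SUNWarc SUNWbtool SUNWhea SUNWlibC SUNWlibm SUNWlibms SUNWsprot SUNWtoo`. A non-zero exit status means at least one package still needs to be installed.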

1.1 Create system users and groups (run as root on both nodes)

# zfs create -o mountpoint=/export/home/grid rpool/export/home/grid

# zfs create -o mountpoint=/export/home/oracle rpool/export/home/oracle

# groupadd -g 1000 oinstall

# groupadd -g 1031 dba

# groupadd -g 1032 oper

# groupadd -g 1020 asmadmin

# groupadd -g 1021 asmdba

# groupadd -g 1022 asmoper

# useradd -u 1101 -g oinstall -G dba,oper,asmdba -d /export/home/oracle -m oracle

# useradd -u 1100 -g oinstall -G dba,asmadmin,asmdba,asmoper -d /export/home/grid -m grid

# chown -R grid:oinstall /export/home/grid

# chown -R oracle:oinstall /export/home/oracle

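# passwd oracle        (password used here: oracle11g)

# passwd grid          (password used here: oracle11g)

Note: the groups originally highlighted in red are the additional groups required by the official Oracle documentation.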
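You can confirm the memberships created above by comparing the output of `groups <user>` against the expected list. `has_all_groups` is a hypothetical helper written for this sketch. Order does not matter, and each name is matched whole, so `dba` is not confused with `asmdba`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that a user belongs to every required group.
# $1 is the user's group list as printed by `groups <user>` (space separated,
# any order); the remaining arguments are the required groups.
has_all_groups() {
  local actual=" $1 " group missing=0
  shift
  for group in "$@"; do
    # Surrounding spaces make the match whole-word ("dba" != "asmdba").
    if [[ $actual != *" $group "* ]]; then
      echo "missing group: $group"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage on each node: `has_all_groups "$(groups oracle)" oinstall dba oper asmdba` and `has_all_groups "$(groups grid)" oinstall dba asmadmin asmdba asmoper`.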

1.2 Create directories and set ownership and permissions (run as root on both nodes)

# zfs create -o mountpoint=/u01 rpool/oracle

# mkdir -p /u01/app/11.2.0/grid

# mkdir -p /u01/app/grid

# chown -R grid:oinstall /u01

# mkdir -p /u01/app/oracle

# mkdir -p /u01/app/oracle/product/11.2.0/db_1

# chown -R oracle:oinstall /u01/app/oracle

# chmod -R 775 /u01/

1.3 Disable the NTP and sendmail services (run as root on both nodes)

# svcadm disable ntp

# svcadm disable sendmail

1.4 Configure /etc/hosts

# vi /etc/hosts

::1 localhost

127.0.0.1 localhost loghost

172.16.50.34 nsf12rac1

172.16.50.36 nsf12rac2

172.16.50.37 nsf12rac1-vip

172.16.50.38 nsf12rac2-vip

#172.16.50.13 nsf12rac-scanip

192.168.1.101 nsf12rac1-priv

192.168.1.102 nsf12rac2-priv
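The installer and cluvfy depend on these names, so it helps to confirm that each one appears exactly once. This sketch assumes the standard `IP name [aliases...]` hosts-file layout. `check_hosts` is a hypothetical helper. The SCAN name is left out on purpose because its entry above is commented out.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm that every RAC host name is defined exactly
# once in a hosts file. Comment lines (e.g. a commented-out SCAN entry) are skipped.
check_hosts() {
  local file=$1 name ip names h count rc=0
  shift
  for name in "$@"; do
    count=0
    while read -r ip names; do
      # Skip blank lines and comment lines.
      [[ -z $ip || $ip == \#* ]] && continue
      names=${names%%#*}   # drop a trailing comment
      for h in $names; do
        if [[ $h == "$name" ]]; then count=$((count + 1)); break; fi
      done
    done < "$file"
    if [ "$count" -ne 1 ]; then
      echo "$name: found $count time(s)"
      rc=1
    fi
  done
  return "$rc"
}
```

Example: `check_hosts /etc/hosts nsf12rac1 nsf12rac2 nsf12rac1-vip nsf12rac2-vip nsf12rac1-priv nsf12rac2-priv`.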

1.5 Bind the private IP addresses

Rac1

root@nsf12rac1:/etc# ipadm create-ip net1

root@nsf12rac1:/etc# ipadm create-addr -T static -a 192.168.1.101/24 net1/v4

root@nsf12rac1:/etc#

root@nsf12rac1:/etc# dladm show-phys

LINK MEDIA STATE SPEED DUPLEX DEVICE

net0 Ethernet up 1000 full i40e0

net1 Ethernet down 0 unknown i40e1

net2 Ethernet down 0 unknown i40e2

net3 Ethernet down 0 unknown i40e3

sp-phys0 Ethernet up 10 full usbecm2

root@nsf12rac1:/etc#

root@nsf12rac1:/etc# dladm show-phys

LINK MEDIA STATE SPEED DUPLEX DEVICE

net0 Ethernet up 1000 full i40e0

net1 Ethernet up 10000 full i40e1

net2 Ethernet down 0 unknown i40e2

net3 Ethernet down 0 unknown i40e3

sp-phys0 Ethernet up 10 full usbecm2

rac2

root@nsf12rac2:~# ipadm create-ip net1

root@nsf12rac2:~# ipadm create-addr -T static -a 192.168.1.102/24 net1/v4

root@nsf12rac2:~# dladm show-phys

LINK MEDIA STATE SPEED DUPLEX DEVICE

net0 Ethernet up 1000 full i40e0

net1 Ethernet down 0 unknown i40e1

net2 Ethernet down 0 unknown i40e2

net3 Ethernet down 0 unknown i40e3

sp-phys0 Ethernet up 10 full usbecm2

root@nsf12rac2:~# dladm show-phys

LINK MEDIA STATE SPEED DUPLEX DEVICE

net0 Ethernet up 1000 full i40e0

net1 Ethernet up 10000 full i40e1

net2 Ethernet down 0 unknown i40e2

net3 Ethernet down 0 unknown i40e3

sp-phys0 Ethernet up 10 full usbecm2

The private IP addresses can now reach each other.

1.6 Resolve the SCAN IP with nslookup (run as root on both nodes)

Make the SCAN name resolvable through nslookup by replacing nslookup with a wrapper script, configured manually as follows:

# mv /usr/sbin/nslookup /usr/sbin/nslookup.original

# vi /usr/sbin/nslookup

#!/bin/bash

HOSTNAME=${1}

if [[ $HOSTNAME = "nsf12rac-scanip" ]]; then

echo "Server: <nsf12rac1-vip IP address>"

echo "Address: <nsf12rac1-vip IP address>#53"

echo "Non-authoritative answer:"

echo "Name: nsf12rac-scanip"

echo "Address: <nsf12rac-scanip IP address>"

else

/usr/sbin/nslookup.original "$HOSTNAME"

fi

Make the nslookup script executable:

# chmod 755 /usr/sbin/nslookup

# nslookup nsf12rac-scanip

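1.7 DNS resolution

On Solaris 10 and earlier, joining the corporate DNS took three steps:

--1. Create /etc/resolv.conf and add the DNS server addresses

--2. Edit /etc/nsswitch.conf

--3. Create /etc/defaultrouter and add the gateway address

On Solaris 11, DNS is configured through the name service switch (nsswitch), whose settings are stored in SMF

--Check the nsswitch settings in SMF

# svccfg -s svc:/system/name-service/switch listprop -l all config

--Edit the nsswitch file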

# vi /etc/nsswitch.conf

passwd: files ldap

group: files ldap

hosts: files dns

ipnodes: files dns

--Import this configuration into SMF

Note that SMF is now more tightly integrated with the core configuration services. Naming-service settings such as domainname and nsswitch are essentially registered here.

# nscfg import -f svc:/system/name-service/switch:default

--Check that the configuration was imported

# svccfg -s svc:/system/name-service/switch listprop -l all config

Check the nsswitch service

# svcs -a | grep swit

--List the services it depends on and check whether they are running

# svcs -d svc:/system/name-service/switch

--Start the dependent service

# svcadm enable svc:/system/name-service-cache

Configure the DNS client

--Here we work directly with SMF commands

# svccfg -s svc:/network/dns/client setprop config/domain=sf.com

--Check the result

# svccfg -s svc:/network/dns/client listprop -l all config/domain

--Set the name server addresses for the domain

# svccfg -s svc:/network/dns/client setprop config/nameserver=net_address:'(172.16.50.15 172.16.50.16)'

--Check the result

# svccfg -s svc:/network/dns/client listprop -l all config/nameserver

When the steps above are complete, an /etc/resolv.conf file is generated:

# cat /etc/resolv.conf

# _AUTOGENERATED_FROM_SMF_V1_

# WARNING: THIS FILE GENERATED FROM SMF DATA.

# DO NOT EDIT THIS FILE. EDITS WILL BE LOST.

# See resolv.conf(5) for details.

domain sf.com

nameserver 172.16.50.15

nameserver 172.16.50.16

--Import the result into SMF

# nscfg import -f svc:/network/dns/client:default

# svccfg -s svc:/network/dns/client listprop -l all config

--Check the DNS-related services

# svcs -a | grep dns

--Enable the DNS client

# svcadm enable svc:/network/dns/client

# svcs -a | grep dns

--Verify the result

# ping sf.com
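The cluvfy run in section 3.3 checks resolv.conf for conflicting domain and search entries. You can run a rough local pre-check first. It assumes the single `domain` plus `nameserver` layout generated above. `check_resolv_conf` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: for the layout used in this guide, expect exactly one
# "domain" entry, no "search" entry alongside it, and at least one "nameserver".
check_resolv_conf() {
  local file=${1:-/etc/resolv.conf} key rest domains=0 searches=0 servers=0
  while read -r key rest; do
    case $key in
      domain) domains=$((domains + 1)) ;;
      search) searches=$((searches + 1)) ;;
      nameserver) servers=$((servers + 1)) ;;
    esac
  done < "$file"
  echo "domain=$domains search=$searches nameserver=$servers"
  [ "$domains" -eq 1 ] && [ "$searches" -eq 0 ] && [ "$servers" -ge 1 ]
}
```

For the generated file shown above, `check_resolv_conf` prints `domain=1 search=0 nameserver=2` and returns 0.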

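1.8 Configure Solaris kernel parameters

# vi /etc/system

set shmsys:shminfo_shmmax=4294967295

#About 0.5 × physical memory, in bytes. For 20 GB of RAM: 0.5 × 20 × 1024 × 1024 = 10485760 KB (10737418240 bytes)

set semsys:seminfo_semmap=1024

set semsys:seminfo_semmni=2048

#Meaning: maximum number of semaphore identifiers in the system. Recommended value: 100 or 128.

#How to set: the largest "processes" value in the init.ora files of all Oracle instances on this system, plus 10.

set semsys:seminfo_semmns=2048

#Meaning: maximum number of semaphores in the system.

#How to set: the sum of the "processes" values in the initSID.ora files of all Oracle instances (excluding the largest), plus 2 × the largest "processes" value, plus 10 × the number of Oracle instances.

set semsys:seminfo_semmsl=2048

#Meaning: maximum number of semaphores per set.

#How to set: 10 + the largest "processes" value in the initSID.ora files of all Oracle instances.

set semsys:seminfo_semmnu=2048

set semsys:seminfo_semume=200

set shmsys:shminfo_shmmin=200

set shmsys:shminfo_shmmni=200

set shmsys:shminfo_shmseg=200

set semsys:seminfo_semvmx=32767

set rlim_fd_max=65536

set rlim_fd_cur=65536

#(hard and soft limits on open file descriptors per process)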
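The sizing rules in the comments above reduce to simple arithmetic. The helper functions below have hypothetical names and encode those rules as stated. You supply the inputs: physical memory in GB and the `processes` value of each instance.

```shell
#!/usr/bin/env bash
# Hypothetical helpers encoding the /etc/system sizing comments above.

# shmmax: about half of physical memory ($1, in GB), expressed in bytes.
shmmax_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 / 2 ))
}

# semmsl: largest "processes" value + 10.
semmsl() {
  local p max=0
  for p in "$@"; do (( p > max )) && max=$p; done
  echo $(( max + 10 ))
}

# semmns: sum of all "processes" values except the largest,
# plus 2 x the largest, plus 10 x the number of instances.
semmns() {
  local p max=0 sum=0
  for p in "$@"; do
    sum=$(( sum + p ))
    (( p > max )) && max=$p
  done
  echo $(( sum - max + 2 * max + 10 * $# ))
}
```

`shmmax_bytes 20` gives 10737418240, which is the 10485760 KB from the shmmax comment expressed in bytes. For two instances with processes=500 and processes=300, `semmsl 500 300` gives 510 and `semmns 500 300` gives 1320.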

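Note: shmsys:shminfo_shmmax is the maximum size of a single shared memory segment, whereas project.max-shm-memory is the maximum total amount of shared memory that the users of one project can create. By default, project.max-shm-memory = shmsys:shminfo_shmmax × shmsys:shminfo_shmmni. If you set shmsys:shminfo_shmmax and shmsys:shminfo_shmmni in /etc/system and check project.max-shm-memory after a reboot, you will therefore find a very large value.

Now consider how project.max-shm-memory affects the system. When the oracle user is created, its default project is default. Suppose project.max-shm-memory is 4 GB and no other users are using shared memory. Then the combined SGA of all databases owned by the oracle user cannot exceed 4 GB. With one database, its SGA must stay below 4 GB (slightly less than 4 GB). With two databases, the sum of their SGAs cannot exceed 4 GB.

Next, consider how shared memory segments are allocated. In earlier releases, the segment size was determined by shmsys:shminfo_shmmax, so no shared memory segment making up the SGA exceeded that limit.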
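Here is a worked example of the relationship described in the note. It takes the shmmax and shmmni values from the /etc/system fragment in 1.8 and assumes the product rule holds as stated.

```shell
#!/usr/bin/env bash
# Worked example: default project.max-shm-memory = shminfo_shmmax x shminfo_shmmni
# (the rule as stated in the note above), using the values set in 1.8.
shmmax=4294967295   # bytes, shmsys:shminfo_shmmax
shmmni=200          # shmsys:shminfo_shmmni
total=$(( shmmax * shmmni ))
# Round to the nearest GB for readability.
echo "project.max-shm-memory default: $total bytes (about $(( (total + 1073741824 / 2) / 1073741824 )) GB)"
```

With these inputs the product is 858993459000 bytes, about 800 GB. This is the kind of "very large value" the note refers to.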

1.9 On both nodes, create projects for the grid and oracle users and configure shared memory parameters

/usr/sbin/projadd -U grid -K "project.max-shm-memory=(priv,128g,deny)" user.grid

/usr/sbin/projmod -s -K "project.max-sem-nsems=(priv,512,deny)" user.grid

/usr/sbin/projmod -s -K "project.max-sem-ids=(priv,128,deny)" user.grid

/usr/sbin/projmod -s -K "project.max-shm-ids=(priv,128,deny)" user.grid

/usr/sbin/projmod -s -K "project.max-shm-memory=(priv,32g,deny)" user.grid

/usr/sbin/projadd -U oracle -K "project.max-shm-memory=(priv,128g,deny)" user.oracle

/usr/sbin/projmod -s -K "project.max-sem-nsems=(priv,512,deny)" user.oracle

/usr/sbin/projmod -s -K "project.max-sem-ids=(priv,128,deny)" user.oracle

/usr/sbin/projmod -s -K "project.max-shm-ids=(priv,128,deny)" user.oracle

/usr/sbin/projmod -s -K "project.max-shm-memory=(priv,128g,deny)" user.oracle

/usr/sbin/projmod -s -K "process.max-file-descriptor=(priv,65536,deny)" user.oracle

/usr/sbin/projmod -s -K "process.max-file-descriptor=(priv,65536,deny)" user.grid

/usr/bin/prctl -n project.max-shm-memory -r -v 128G -i project system

/usr/sbin/projmod -s -K "project.max-shm-memory=(priv,128g,deny)" system

/usr/sbin/projmod -s -K "project.max-shm-memory=(priv,128g,deny)" default
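To read back what projadd and projmod wrote, you can parse /etc/project directly. This sketch assumes the documented `name:id:comment:users:groups:attributes` layout. `project_rctl` is a hypothetical helper. On a live system, `projects -l` or `prctl -n project.max-shm-memory -i project user.oracle` report the effective values.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of one resource control for one project
# from a file in /etc/project format (name:id:comment:users:groups:attributes,
# attributes separated by ";"). Returns 1 if the project or control is absent.
project_rctl() {
  local file=$1 project=$2 rctl=$3 name id comment users groups attrs attr
  local -a list
  while IFS=: read -r name id comment users groups attrs; do
    [[ $name == "$project" ]] || continue
    IFS=';' read -r -a list <<< "$attrs"
    for attr in "${list[@]}"; do
      if [[ $attr == "$rctl="* ]]; then
        echo "${attr#*=}"   # e.g. (priv,65536,deny)
        return 0
      fi
    done
  done < "$file"
  return 1
}
```

Example: `project_rctl /etc/project user.oracle project.max-shm-memory`. How projadd stores a scaled value such as 128g in the file may vary by release, so compare it with the `prctl` output.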

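The following parameter changes and minimum values are taken from the official Oracle and Solaris documentation:

| Obsolete /etc/system parameter | Replacement resource control | Minimum value |
|---|---|---|
| semsys:seminfo_semmni | project.max-sem-ids | 100 |
| semsys:seminfo_semmsl | process.max-sem-nsems | 256 |
| shmsys:shminfo_shmmax | project.max-shm-memory | 4294967295 |
| shmsys:shminfo_shmmni | project.max-shm-ids | 100 |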

| Resource Control | Obsolete Tunable | Old Default Value | Maximum Value | New Default Value |
|---|---|---|---|---|
| process.max-msg-qbytes | msginfo_msgmnb | 4096 | ULONG_MAX | 65536 |
| process.max-msg-messages | msginfo_msgtql | 40 | UINT_MAX | 8192 |
| process.max-sem-ops | seminfo_semopm | 10 | INT_MAX | 512 |
| process.max-sem-nsems | seminfo_semmsl | 25 | SHRT_MAX | 512 |
| project.max-shm-memory | shminfo_shmmax | 0x800000 | UINT64_MAX | 1/4 of physical memory |
| project.max-shm-ids | shminfo_shmmni | 100 | 2^24 | 128 |
| project.max-msg-ids | msginfo_msgmni | 50 | 2^24 | 128 |
| project.max-sem-ids | seminfo_semmni | 10 | 2^24 | 128 |

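Note that, by default, shmsys:shminfo_shmmax × shmsys:shminfo_shmmni determines the size of project.max-shm-memory. Also, when seminfo_semmsl is larger than the new default value, it determines the size of process.max-sem-nsems.

Edit /etc/user_attr, add project=system for the root user, and add the following entries:

# vi /etc/user_attr

oracle::::defaultpriv=basic,net_privaddr;roles=root

grid::::defaultpriv=basic,net_privaddr;roles=root

(The attribute lock_after_retries=no prevents an account from being locked after too many failed login attempts.)

Make the command prompt always show the current directory or path as you change directories.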

1.10 Set TCP/UDP parameters

# ipadm set-prop -p smallest_anon_port=9000 tcp

# ipadm set-prop -p largest_anon_port=65500 tcp

# ipadm set-prop -p smallest_anon_port=9000 udp

# ipadm set-prop -p largest_anon_port=65500 udp

# ipadm set-prop -p send_buf=65536 udp

# ipadm set-prop -p recv_buf=65536 udp

1.11 Adjust the swap space

Add a swap volume:

# zfs create -V 2G rpool/swap2

# swap -a /dev/zvol/dsk/rpool/swap2

# swap -l

Edit /etc/vfstab:

/dev/zvol/dsk/rpool/swap - - swap - no -

/dev/zvol/dsk/rpool/swap2 - - swap - no -

Note: the swap space allocated by default is too small. Increase it to 20G (at least 2G). Otherwise, the Database software installation fails at the Link RMAN step.
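The cluvfy output in 3.3 reports 16GB as the "Required" swap for these 254 GB nodes, which follows the 11gR2 swap sizing rule. The sketch below encodes that rule as understood from the Oracle 11gR2 installation guide. Treat this as an assumption and confirm it against the documentation for your release. `required_swap_mb` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper; the thresholds are an assumption based on the 11gR2
# installation guide:
#   RAM up to 2 GB   -> 1.5 x RAM
#   RAM 2 GB - 16 GB -> equal to RAM
#   RAM over 16 GB   -> 16 GB
# Input and output are in MB.
required_swap_mb() {
  local ram_mb=$1
  if (( ram_mb <= 2048 )); then
    echo $(( ram_mb * 3 / 2 ))
  elif (( ram_mb <= 16384 )); then
    echo "$ram_mb"
  else
    echo 16384
  fi
}
```

For 254.25 GB of RAM (260352 MB), `required_swap_mb 260352` gives 16384. The 20G recommended in the note above therefore leaves some headroom over this minimum.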

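1.12 SSH user equivalence (as the grid and oracle users on both nodes)

# su - grid

Run the following on both RAC nodes:

$ mkdir -p ~/.ssh

$ chmod 700 ~/.ssh

$ /usr/bin/ssh-keygen -t rsa

When prompted for a passphrase, just press Enter to leave it empty. An empty passphrase keeps the setup simple.

Run the following on RAC node 1 only:

$ touch ~/.ssh/authorized_keys

$ ssh nsf12rac1 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ ssh nsf12rac2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ scp ~/.ssh/authorized_keys nsf12rac2:.ssh/authorized_keys

Run the following on both RAC nodes:

$ chmod 600 ~/.ssh/authorized_keys

Once user equivalence is set up, run the following commands on both RAC nodes to verify it:

$ ssh nsf12rac2

You should be able to log in to the second node without entering a password. Run the same test from the second node. Equivalence must be configured and verified for both the oracle and grid users.

$ ssh nsf12rac1 "date;hostname"

$ ssh nsf12rac2 "date;hostname"

When you run these on node 2, answer yes at the first prompt. The date and hostname are then displayed. If running the commands a second time still produces any other prompt, such as a password request, user equivalence is not set up correctly.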
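Any leftover prompt breaks the installer, so a non-interactive check is useful. With `-o BatchMode=yes`, ssh fails instead of asking for a password or a host-key confirmation. Run the manual `ssh` commands above once first to accept the host keys. `check_ssh_equivalence` and `SSH_CMD` are hypothetical names for this sketch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check passwordless SSH from this node to every RAC node.
# BatchMode=yes makes ssh fail instead of prompting, so any host that still
# needs a password (or an unaccepted host key) is reported as FAILED.
# SSH_CMD can be overridden (e.g. for a dry run); it defaults to ssh.
check_ssh_equivalence() {
  local ssh_cmd=${SSH_CMD:-ssh}
  local host failed=0
  for host in "$@"; do
    if $ssh_cmd -o BatchMode=yes -o ConnectTimeout=5 "$host" 'date; hostname' >/dev/null 2>&1; then
      echo "OK     $host"
    else
      echo "FAILED $host"
      failed=1
    fi
  done
  return "$failed"
}
```

Run it as both grid and oracle on each node: `check_ssh_equivalence nsf12rac1 nsf12rac2`.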

1.13 Profile settings for the oracle and grid users

[grid@nsf12rac1:~]$ cat .profile

PS1='[\u@\h:\w]\$';export PS1

# ORACLE_SID

ORACLE_SID=+ASM1; export ORACLE_SID

# ORACLE_BASE

ORACLE_BASE=/u01/app/grid; export ORACLE_BASE

# ORACLE_HOME

ORACLE_HOME=/u01/app/11.2.0/grid; export ORACLE_HOME

# JAVA_HOME

JAVA_HOME=$ORACLE_HOME/jdk; export JAVA_HOME

# ORACLE_PATH

ORACLE_PATH=/u01/app/oracle/common/oracle/sql; export ORACLE_PATH

# ORACLE_TERM

ORACLE_TERM=xterm; export ORACLE_TERM

# NLS_DATE_FORMAT

NLS_DATE_FORMAT="DD-MON-YYYY HH24:MI:SS"; export NLS_DATE_FORMAT

# TNS_ADMIN

TNS_ADMIN=$ORACLE_HOME/network/admin; export TNS_ADMIN

# ORA_NLS11

ORA_NLS11=$ORACLE_HOME/nls/data; export ORA_NLS11

# PATH

PATH=.:${JAVA_HOME}/bin:${PATH}:$HOME/bin:$ORACLE_HOME/bin

PATH=${PATH}:/usr/bin:/bin:/usr/bin/X11:/usr/local/bin

PATH=${PATH}:/u01/app/common/oracle/bin

export PATH

# LD_LIBRARY_PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib

LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:$ORACLE_HOME/oracm/lib

LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/lib:/usr/lib:/usr/local/lib

export LD_LIBRARY_PATH

# CLASSPATH

CLASSPATH=$ORACLE_HOME/JRE

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/jlib

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/rdbms/jlib

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/network/jlib

export CLASSPATH

# THREADS_FLAG

THREADS_FLAG=native; export THREADS_FLAG

# TEMP, TMP, and TMPDIR

export TEMP=/tmp

export TMPDIR=/tmp

# UMASK

umask 022

[oracle@nsf12rac1:~]$ cat .profile

PS1='[\u@\h:\w]\$ ';export PS1

# ORACLE_SID

ORACLE_SID=nsf12db1; export ORACLE_SID

# ORACLE_UNQNAME

ORACLE_UNQNAME=nsf12db; export ORACLE_UNQNAME

# ORACLE_BASE

ORACLE_BASE=/u01/app/oracle; export ORACLE_BASE

# ORACLE_HOME

ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1; export ORACLE_HOME

# JAVA_HOME

JAVA_HOME=$ORACLE_HOME/jdk; export JAVA_HOME

# ORACLE_PATH

ORACLE_PATH=/u01/app/common/oracle/sql; export ORACLE_PATH

# ORACLE_TERM

ORACLE_TERM=xterm; export ORACLE_TERM

# NLS_DATE_FORMAT

NLS_DATE_FORMAT="DD-MON-YYYY HH24:MI:SS"; export NLS_DATE_FORMAT

# TNS_ADMIN

TNS_ADMIN=$ORACLE_HOME/network/admin; export TNS_ADMIN

# ORA_NLS11

ORA_NLS11=$ORACLE_HOME/nls/data; export ORA_NLS11

# PATH

PATH=.:${JAVA_HOME}/bin:${PATH}:$HOME/bin:$ORACLE_HOME/bin

PATH=${PATH}:/usr/bin:/bin:/usr/bin/X11:/usr/local/bin

PATH=${PATH}:/u01/app/common/oracle/bin

export PATH

# LD_LIBRARY_PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib

LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:$ORACLE_HOME/oracm/lib

LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/lib:/usr/lib:/usr/local/lib

export LD_LIBRARY_PATH

# CLASSPATH

CLASSPATH=$ORACLE_HOME/JRE

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/jlib

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/rdbms/jlib

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/network/jlib

export CLASSPATH

# THREADS_FLAG

THREADS_FLAG=native; export THREADS_FLAG

# TEMP, TMP, and TMPDIR

export TEMP=/tmp

export TMPDIR=/tmp

# UMASK

umask 022

alias cddump='cd /u01/app/oracle/diag/rdbms/nsf12db/nsf12db1/trace'

#alias dsg='cd /export/home/dsg'

alias ll='ls -trlha'
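After you log in again as grid or oracle, a small check can confirm that the profile took effect. `check_oracle_env` is a hypothetical helper. It only checks that the key variables are non-empty and that `$ORACLE_HOME/bin` is on PATH.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm that the login profile exported the key
# Oracle variables and put $ORACLE_HOME/bin on PATH.
check_oracle_env() {
  local var rc=0
  for var in ORACLE_SID ORACLE_BASE ORACLE_HOME; do
    if [ -z "${!var}" ]; then     # indirect expansion: value of $var
      echo "$var is not set"
      rc=1
    fi
  done
  case ":$PATH:" in
    *":$ORACLE_HOME/bin:"*) ;;
    *) echo "ORACLE_HOME/bin is not on PATH"; rc=1 ;;
  esac
  return "$rc"
}
```

Running `check_oracle_env` right after login should print nothing and return 0.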

1.14 Enable Solaris I/O multipathing (MPxIO)

stmsboot -e

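II. Storage partitioning

2.1 Create the vdisks on the storage array

| vdisk | Size |
|---|---|
| NSF12RAC_DATA01 | 800G |
| NSF12RAC_DATA02 | 800G |
| NSF12RAC_DATA03 | 800G |
| NSF12RAC_DATA04 | 800G |
| NSF12RAC_CRS | 5G |
| NSF12RAC_ARCH | 500G |
| NSF12RAC_OCR | 1G |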

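2.2 Scan the disks on both nodes and format them

On Solaris 10, disks are partitioned by default with a 128 MB header (partitions 0 and 1).

On Solaris 11, disks are partitioned proportionally, so the header is not fixed at 128 MB.

Partition 6 covers the whole disk by default, and it is also the partition used by default when the disk is put into use.

Partitioning only needs to be done on one node. The example below shows one disk; partition the other disks the same way with the format command.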

format> partition

PARTITION MENU:

0 - change `0’ partition

1 - change `1’ partition

2 - change `2’ partition

3 - change `3’ partition

4 - change `4’ partition

5 - change `5’ partition

6 - change `6’ partition

7 - change `7’ partition

select - select a predefined table

modify - modify a predefined partition table

name - name the current table

print - display the current table

label - write partition map and label to the disk

! - execute , then return

quit

partition> 0

Part Tag Flag Cylinders Size Blocks

0 root wm 0 - 16 132.73MB (17/0/0) 271830

Enter partition id tag[root]: ^C

partition> 6

Part Tag Flag Cylinders Size Blocks

6 usr wm 34 - 65532 499.41GB (65499/0/0) 1047329010

Enter partition id tag[usr]: ^C

partition> quit

FORMAT MENU:

disk - select a disk

type - select (define) a disk type

partition - select (define) a partition table

current - describe the current disk

format - format and analyze the disk

repair - repair a defective sector

label - write label to the disk

analyze - surface analysis

defect - defect list management

backup - search for backup labels

verify - read and display labels

inquiry - show disk ID

volname - set 8-character volume name

! - execute , then return

quit

format> disk

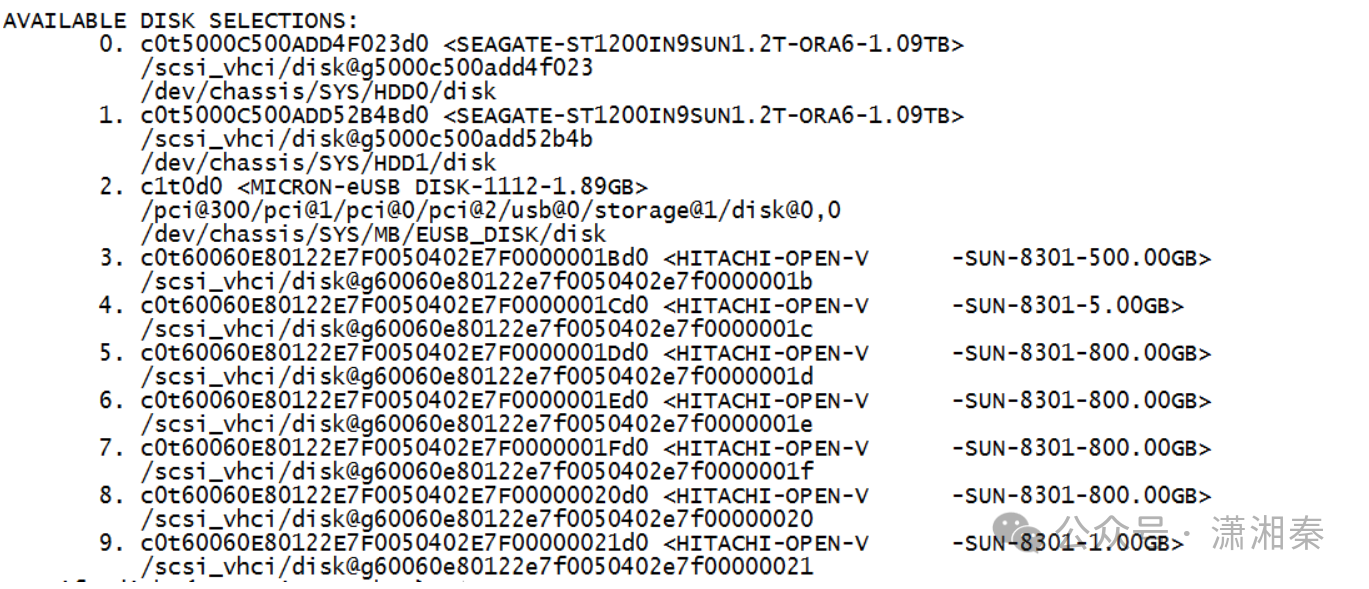

AVAILABLE DISK SELECTIONS:

0. c0t5000C500ADD4F023d0 <SEAGATE-ST1200IN9SUN1.2T-ORA6-1.09TB>

/scsi_vhci/disk@g5000c500add4f023

/dev/chassis/SYS/HDD0/disk

1. c0t5000C500ADD52B4Bd0 <SEAGATE-ST1200IN9SUN1.2T-ORA6-1.09TB>

/scsi_vhci/disk@g5000c500add52b4b

/dev/chassis/SYS/HDD1/disk

2. c1t0d0

/pci@300/pci@1/pci@0/pci@2/usb@0/storage@1/disk@0,0

/dev/chassis/SYS/MB/EUSB_DISK/disk

3. c0t60060E80122E7F0050402E7F0000001Bd0 <HITACHI-OPEN-V -SUN-8301 cyl 65533 alt 2 hd 15 sec 1066>

/scsi_vhci/disk@g60060e80122e7f0050402e7f0000001b

4. c0t60060E80122E7F0050402E7F0000001Cd0 <HITACHI-OPEN-V -SUN-8301-5.00GB>

/scsi_vhci/disk@g60060e80122e7f0050402e7f0000001c

5. c0t60060E80122E7F0050402E7F0000001Dd0 <HITACHI-OPEN-V -SUN-8301-800.00GB>

/scsi_vhci/disk@g60060e80122e7f0050402e7f0000001d

6. c0t60060E80122E7F0050402E7F0000001Ed0 <HITACHI-OPEN-V -SUN-8301-800.00GB>

/scsi_vhci/disk@g60060e80122e7f0050402e7f0000001e

7. c0t60060E80122E7F0050402E7F0000001Fd0 <HITACHI-OPEN-V -SUN-8301-800.00GB>

/scsi_vhci/disk@g60060e80122e7f0050402e7f0000001f

8. c0t60060E80122E7F0050402E7F00000020d0 <HITACHI-OPEN-V -SUN-8301-800.00GB>

/scsi_vhci/disk@g60060e80122e7f0050402e7f00000020

9. c0t60060E80122E7F0050402E7F00000021d0 <HITACHI-OPEN-V -SUN-8301-1.00GB>

/scsi_vhci/disk@g60060e80122e7f0050402e7f00000021

Specify disk (enter its number)[3]: 4

selecting c0t60060E80122E7F0050402E7F0000001Cd0 <HITACHI-OPEN-V -SUN-8301 cyl 1363 alt 2 hd 15 sec 512>

c0t60060E80122E7F0050402E7F0000001Cd0: configured with capacity of 4.99GB

[disk formatted]

Disk not labeled. Label it now? Y

The resulting disk partitions:

[grid@nsf12rac1:~]$ cd /dev/rdsk

[grid@nsf12rac1:/dev/rdsk]$ ls -ltr

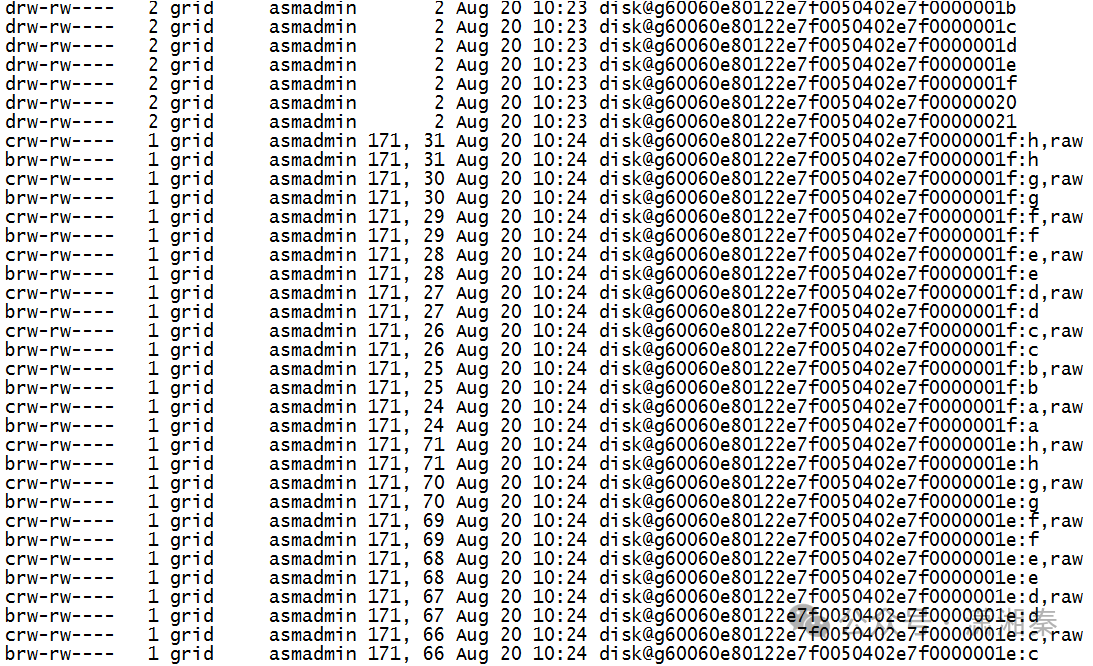

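2.3 Change the owner, group, and permissions of the disks

Make sure every shared disk is owned by grid:asmadmin with permissions 660.

root@nsf12rac1:/devices# cd scsi_vhci

root@nsf12rac1:/devices/scsi_vhci# ls -ltr

root@nsf12rac1:/devices/scsi_vhci# chown -R grid:asmadmin disk@g60060e80122e7f0050402e7f000000*

root@nsf12rac1:/devices/scsi_vhci# chmod -R 660 disk@g60060e80122e7f0050402e7f000000*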

root@nsf12rac1:/devices/scsi_vhci# ls -lrt
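To spot device nodes that the commands above missed, filter the `ls -l` listing for anything that is not `crw-rw---- grid asmadmin`. This sketch assumes the usual `ls -l` column order. `check_asm_devices` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ls -l` output on stdin and report device nodes
# whose mode, owner or group differ from rw-rw---- grid:asmadmin.
# Non-device lines (e.g. "total N") are ignored.
check_asm_devices() {
  local mode links owner group rest rc=0
  while read -r mode links owner group rest; do
    case $mode in
      [bc]*) ;;          # only check block/character device nodes
      *) continue ;;
    esac
    # ${mode:1:9} skips the type letter and any trailing ACL marker.
    if [[ ${mode:1:9} != rw-rw---- || $owner != grid || $group != asmadmin ]]; then
      echo "bad: $mode $owner:$group ${rest##* }"
      rc=1
    fi
  done
  return "$rc"
}
```

Example: `ls -l /devices/scsi_vhci/disk@g60060e80122e7f0050402e7f000000* | check_asm_devices`. No output means every node matches.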

III. Grid installation

3.1 Download links

https://updates.oracle.com/Orion/Services/download/p13390677_112040_SOLARIS64_1of7.zip?aru=16733784&patch_file=p13390677_112040_SOLARIS64_1of7.zip

https://updates.oracle.com/Orion/Services/download/p13390677_112040_SOLARIS64_2of7.zip?aru=16733784&patch_file=p13390677_112040_SOLARIS64_2of7.zip

https://updates.oracle.com/Orion/Services/download/p13390677_112040_SOLARIS64_3of7.zip?aru=16733784&patch_file=p13390677_112040_SOLARIS64_3of7.zip

https://updates.oracle.com/Orion/Services/download/p13390677_112040_SOLARIS64_4of7.zip?aru=16733784&patch_file=p13390677_112040_SOLARIS64_4of7.zip

https://updates.oracle.com/Orion/Services/download/p13390677_112040_SOLARIS64_5of7.zip?aru=16733784&patch_file=p13390677_112040_SOLARIS64_5of7.zip

https://updates.oracle.com/Orion/Services/download/p13390677_112040_SOLARIS64_6of7.zip?aru=16733784&patch_file=p13390677_112040_SOLARIS64_6of7.zip

https://updates.oracle.com/Orion/Services/download/p13390677_112040_SOLARIS64_7of7.zip?aru=16733784&patch_file=p13390677_112040_SOLARIS64_7of7.zip

3.2 Unzip the installation files

# unzip p13390677_112040_SOLARIS64_1of7.zip

# unzip p13390677_112040_SOLARIS64_2of7.zip

# unzip p13390677_112040_SOLARIS64_3of7.zip

# unzip p13390677_112040_SOLARIS64_4of7.zip

# unzip p13390677_112040_SOLARIS64_5of7.zip

# unzip p13390677_112040_SOLARIS64_6of7.zip

# unzip p13390677_112040_SOLARIS64_7of7.zip

3.3 Run cluvfy to check the installation environment

root@nsf12rac1:~# cd /export/home/install/grid

# ./runcluvfy.sh stage -pre crsinst -n nsf12rac1,nsf12rac2 -fixup -verbose

Performing pre-checks for cluster services setup

Checking node reachability…

Check: Node reachability from node “nsf12rac1”

Destination Node Reachable?

------------------------------------ ------------------------

nsf12rac1 yes

nsf12rac2 yes

Result: Node reachability check passed from node “nsf12rac1”

Checking user equivalence…

Check: User equivalence for user “grid”

Node Name Status

------------------------------------ ------------------------

nsf12rac2 passed

nsf12rac1 passed

Result: User equivalence check passed for user “grid”

Checking node connectivity…

Checking hosts config file…

Node Name Status

------------------------------------ ------------------------

nsf12rac2 passed

nsf12rac1 passed

Verification of the hosts config file successful

Interface information for node “nsf12rac2”

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

net0 172.16.50.36 172.16.50.0 172.16.50.36 172.16.50.62 00:10:E0:E2:D8:AE 1500

net1 192.168.1.102 192.168.1.0 192.168.1.102 172.16.50.62 00:10:E0:E2:D8:AF 1500

sp-phys0 169.254.182.77 169.254.182.0 169.254.182.77 172.16.50.62 02:21:28:57:47:17 1500

Interface information for node “nsf12rac1”

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

net0 172.16.50.34 172.16.50.0 172.16.50.34 172.16.50.62 00:10:E0:E2:D3:5E 1500

net1 192.168.1.101 192.168.1.0 192.168.1.101 172.16.50.62 00:10:E0:E2:D3:5F 1500

sp-phys0 169.254.182.77 169.254.182.0 169.254.182.77 172.16.50.62 02:21:28:57:47:17 1500

Check: Node connectivity of subnet “172.16.50.0”

Source Destination Connected?

------------------------------ ------------------------------ ----------------

nsf12rac2[172.16.50.36] nsf12rac1[172.16.50.34] yes

Result: Node connectivity passed for subnet “172.16.50.0” with node(s) nsf12rac2,nsf12rac1

Check: TCP connectivity of subnet “172.16.50.0”

Source Destination Connected?

------------------------------ ------------------------------ ----------------

nsf12rac1:172.16.50.34 nsf12rac2:172.16.50.36 passed

Result: TCP connectivity check passed for subnet “172.16.50.0”

Check: Node connectivity of subnet “192.168.1.0”

Source Destination Connected?

------------------------------ ------------------------------ ----------------

nsf12rac2[192.168.1.102] nsf12rac1[192.168.1.101] yes

Result: Node connectivity passed for subnet “192.168.1.0” with node(s) nsf12rac2,nsf12rac1

Check: TCP connectivity of subnet “192.168.1.0”

Source Destination Connected?

------------------------------ ------------------------------ ----------------

nsf12rac1:192.168.1.101 nsf12rac2:192.168.1.102 passed

Result: TCP connectivity check passed for subnet “192.168.1.0”

Check: Node connectivity of subnet “169.254.182.0”

Source Destination Connected?

------------------------------ ------------------------------ ----------------

nsf12rac2[169.254.182.77] nsf12rac1[169.254.182.77] yes

Result: Node connectivity passed for subnet “169.254.182.0” with node(s) nsf12rac2,nsf12rac1

Check: TCP connectivity of subnet "169.254.182.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

nsf12rac1:169.254.182.77 nsf12rac2:169.254.182.77 failed

ERROR:

PRVF-7617 : Node connectivity between “nsf12rac1 : 169.254.182.77” and “nsf12rac2 : 169.254.182.77” failed

Result: TCP connectivity check failed for subnet "169.254.182.0"

Interfaces found on subnet “172.16.50.0” that are likely candidates for VIP are:

nsf12rac2 net0:172.16.50.36

nsf12rac1 net0:172.16.50.34

Interfaces found on subnet “169.254.182.0” that are likely candidates for VIP are:

nsf12rac2 sp-phys0:169.254.182.77

nsf12rac1 sp-phys0:169.254.182.77

Interfaces found on subnet “192.168.1.0” that are likely candidates for a private interconnect are:

nsf12rac2 net1:192.168.1.102

nsf12rac1 net1:192.168.1.101

Checking subnet mask consistency…

Subnet mask consistency check passed for subnet “172.16.50.0”.

Subnet mask consistency check passed for subnet “192.168.1.0”.

Subnet mask consistency check passed for subnet “169.254.182.0”.

Subnet mask consistency check passed.

Result: Node connectivity check failed

Checking multicast communication…

Checking subnet “172.16.50.0” for multicast communication with multicast group “230.0.1.0”…

Check of subnet “172.16.50.0” for multicast communication with multicast group “230.0.1.0” passed.

Checking subnet “192.168.1.0” for multicast communication with multicast group “230.0.1.0”…

Check of subnet “192.168.1.0” for multicast communication with multicast group “230.0.1.0” passed.

Checking subnet “169.254.182.0” for multicast communication with multicast group “230.0.1.0”…

Check of subnet “169.254.182.0” for multicast communication with multicast group “230.0.1.0” passed.

Check of multicast communication passed.

Check: Total memory

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

nsf12rac2 254.25GB (2.66600448E8KB) 2GB (2097152.0KB) passed

nsf12rac1 254.25GB (2.66600448E8KB) 2GB (2097152.0KB) passed

Result: Total memory check passed

Check: Available memory

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

nsf12rac2 229.5294GB (2.40679048E8KB) 50MB (51200.0KB) passed

nsf12rac1 228.8676GB (2.3998508E8KB) 50MB (51200.0KB) passed

Result: Available memory check passed

Check: Swap space

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

nsf12rac2 20GB (2.0971512E7KB) 16GB (1.6777216E7KB) passed

nsf12rac1 20GB (2.0971512E7KB) 16GB (1.6777216E7KB) passed

Result: Swap space check passed

Check: Free disk space for “nsf12rac2:/tmp”

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/tmp nsf12rac2 /tmp 246.1828GB 1GB passed

Result: Free disk space check passed for “nsf12rac2:/tmp”

Check: Free disk space for “nsf12rac1:/tmp”

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/tmp nsf12rac1 /tmp 243.7385GB 1GB passed

Result: Free disk space check passed for “nsf12rac1:/tmp”

Check: User existence for “grid”

Node Name Status Comment

------------ ------------------------ ------------------------

nsf12rac2 passed exists(1100)

nsf12rac1 passed exists(1100)

Checking for multiple users with UID value 1100

Result: Check for multiple users with UID value 1100 passed

Result: User existence check passed for “grid”

Check: Group existence for “oinstall”

Node Name Status Comment

------------ ------------------------ ------------------------

nsf12rac2 passed exists

nsf12rac1 passed exists

Result: Group existence check passed for “oinstall”

Check: Group existence for “dba”

Node Name Status Comment

------------ ------------------------ ------------------------

nsf12rac2 passed exists

nsf12rac1 passed exists

Result: Group existence check passed for “dba”

Check: Membership of user “grid” in group “oinstall” [as Primary]

Node Name User Exists Group Exists User in Group Primary Status

---------------- ------------ ------------ ------------ ------------ ------------

nsf12rac2 yes yes yes yes passed

nsf12rac1 yes yes yes yes passed

Result: Membership check for user “grid” in group “oinstall” [as Primary] passed

Check: Membership of user “grid” in group “dba”

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

nsf12rac2 yes yes yes passed

nsf12rac1 yes yes yes passed

Result: Membership check for user “grid” in group “dba” passed

Check: Run level

Node Name run level Required Status

------------ ------------------------ ------------------------ ----------

nsf12rac2 3 3 passed

nsf12rac1 3 3 passed

Result: Run level check passed

Check: Hard limits for “maximum open file descriptors”

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

nsf12rac2 hard 65536 65536 passed

nsf12rac1 hard 65536 65536 passed

Result: Hard limits check passed for “maximum open file descriptors”

Check: Soft limits for “maximum open file descriptors”

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

nsf12rac2 soft 65536 1024 passed

nsf12rac1 soft 65536 1024 passed

Result: Soft limits check passed for “maximum open file descriptors”

Check: Hard limits for “maximum user processes”

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

nsf12rac2 hard 29995 16384 passed

nsf12rac1 hard 29995 16384 passed

Result: Hard limits check passed for “maximum user processes”

Check: Soft limits for “maximum user processes”

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

nsf12rac2 soft 29995 2047 passed

nsf12rac1 soft 29995 2047 passed

Result: Soft limits check passed for “maximum user processes”

Check: System architecture

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

nsf12rac2 64-bit sparcv9 kernel modules sparc5b vis3c vamask pause_nsec xmont xmpmul mwait sparc5 adi vis3b crc32c cbcond pause mont mpmul sha512 sha256 sha1 md5 camellia des aes ima hpc vis3 fmaf asi_blk_init vis2 vis popc fsmuld div32 mul32 64-bit sparcv9 kernel modules passed

nsf12rac1 64-bit sparcv9 kernel modules sparc5b vis3c vamask pause_nsec xmont xmpmul mwait sparc5 adi vis3b crc32c cbcond pause mont mpmul sha512 sha256 sha1 md5 camellia des aes ima hpc vis3 fmaf asi_blk_init vis2 vis popc fsmuld div32 mul32 64-bit sparcv9 kernel modules passed

Result: System architecture check passed

Check: Kernel version

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

nsf12rac2 5.11 5.11 passed

nsf12rac1 5.11 5.11 passed

Result: Kernel version check passed

Checking for multiple users with UID value 0

Result: Check for multiple users with UID value 0 passed

Check: Current group ID

Result: Current group ID check passed

Starting check for consistency of primary group of root user

Node Name Status

------------------------------------ ------------------------

nsf12rac2 passed

nsf12rac1 passed

Check for consistency of root user’s primary group passed

Starting Clock synchronization checks using Network Time Protocol(NTP)…

NTP Configuration file check started…

Network Time Protocol(NTP) configuration file not found on any of the nodes. Oracle Cluster Time Synchronization Service(CTSS) can be used instead of NTP for time synchronization on the cluster nodes

No NTP Daemons or Services were found to be running

Result: Clock synchronization check using Network Time Protocol(NTP) passed

Checking Core file name pattern consistency…

Core file name pattern consistency check passed.

Checking to make sure user “grid” is not in “root” group

Node Name Status Comment

------------ ------------------------ ------------------------

nsf12rac2 passed does not exist

nsf12rac1 passed does not exist

Result: User “grid” is not part of “root” group. Check passed

Check default user file creation mask

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

nsf12rac2 0022 0022 passed

nsf12rac1 0022 0022 passed

Result: Default user file creation mask check passed

Checking consistency of file “/etc/resolv.conf” across nodes

Checking the file “/etc/resolv.conf” to make sure only one of domain and search entries is defined

File “/etc/resolv.conf” does not have both domain and search entries defined

Checking if domain entry in file “/etc/resolv.conf” is consistent across the nodes…

domain entry in file “/etc/resolv.conf” is consistent across nodes

Checking file “/etc/resolv.conf” to make sure that only one domain entry is defined

All nodes have one domain entry defined in file “/etc/resolv.conf”

Checking all nodes to make sure that domain is “sf.com” as found on node “nsf12rac2”

All nodes of the cluster have same value for ‘domain’

Checking if search entry in file “/etc/resolv.conf” is consistent across the nodes…

search entry in file “/etc/resolv.conf” is consistent across nodes

Checking DNS response time for an unreachable node

Node Name Status

------------------------------------ ------------------------

nsf12rac2 passed

nsf12rac1 passed

The DNS response time for an unreachable node is within acceptable limit on all nodes

File “/etc/resolv.conf” is consistent across nodes

Check: Time zone consistency

Result: Time zone consistency check passed

Pre-check for cluster services setup was unsuccessful.

Checks did not pass for the following node(s):

nsf12rac1
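The report is long, and the single failing subnet is easy to miss. You can save the output and filter it for failures, which surfaces problems such as the sp-phys0 TCP check above. `cluvfy_failures` is a hypothetical helper. Its patterns are assumptions based on the messages seen in this run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (with line numbers) only the failing checks,
# PRVF errors and the overall "unsuccessful" verdict from a saved cluvfy
# report ($1, or stdin if no file is given). Exit status 1 means no match.
cluvfy_failures() {
  grep -nE 'PRVF-|failed|unsuccessful' "${1:--}"
}
```

Example: `./runcluvfy.sh stage -pre crsinst -n nsf12rac1,nsf12rac2 -fixup -verbose | tee /tmp/cluvfy_pre.log`, then `cluvfy_failures /tmp/cluvfy_pre.log`.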

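The error is caused by the USB interface sp-phys0, which carries its own private (169.254.x.x) address. The fix is to disable that interface:

# ipadm disable-if -t sp-phys0

3.4 Install the Grid components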

Configure XManager

# export DISPLAY=10.7.68.44:0.0

# /usr/openwin/bin/xhost +

# su - grid

$ export DISPLAY=10.7.68.44:0.0

$ /usr/openwin/bin/xhost +

Be sure to log in to the remote desktop as the grid user.

$ cd /export/home/install/grid

$ ./runInstaller

The installer window appears; begin the installation.

Skip software update

installation option:install and configure

install type:advanced installation

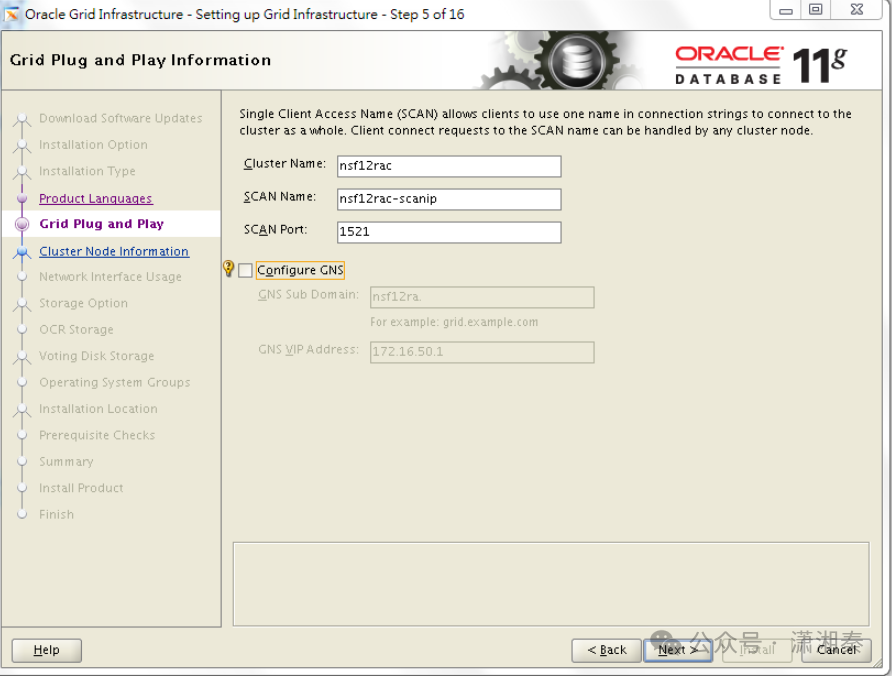

product language: English (default)

grid plug and play:

Note: the SCAN IP must be resolvable through nslookup.

Cluster node information: add both nodes with their public hostnames and virtual hostnames

Network interface usage

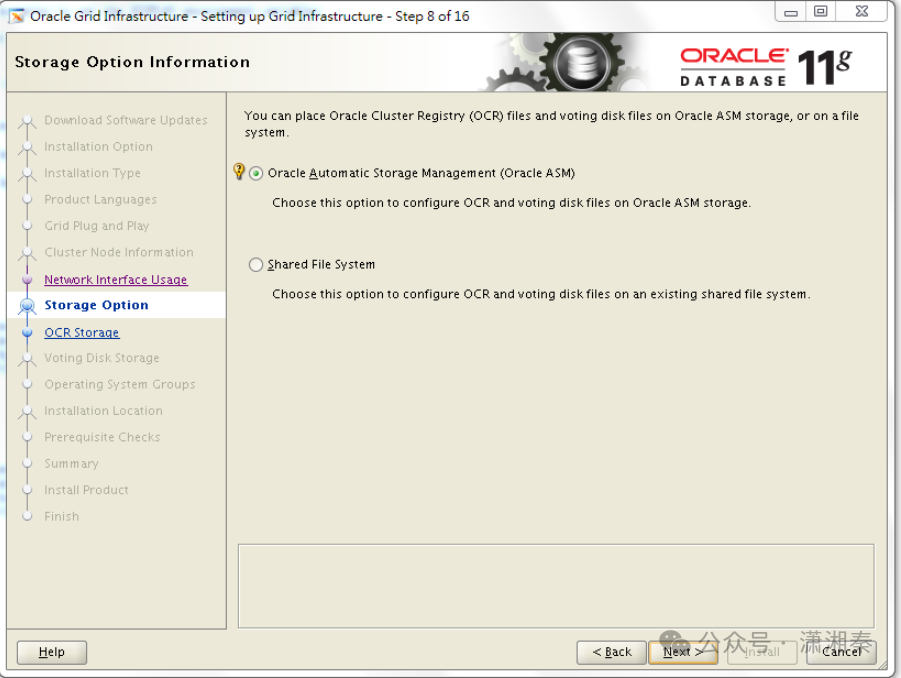

Storage option: choose ASM

Create ASM disk group:

On this ZFS-based system, slice s6 spans the whole disk by default.

ASM password

OS group

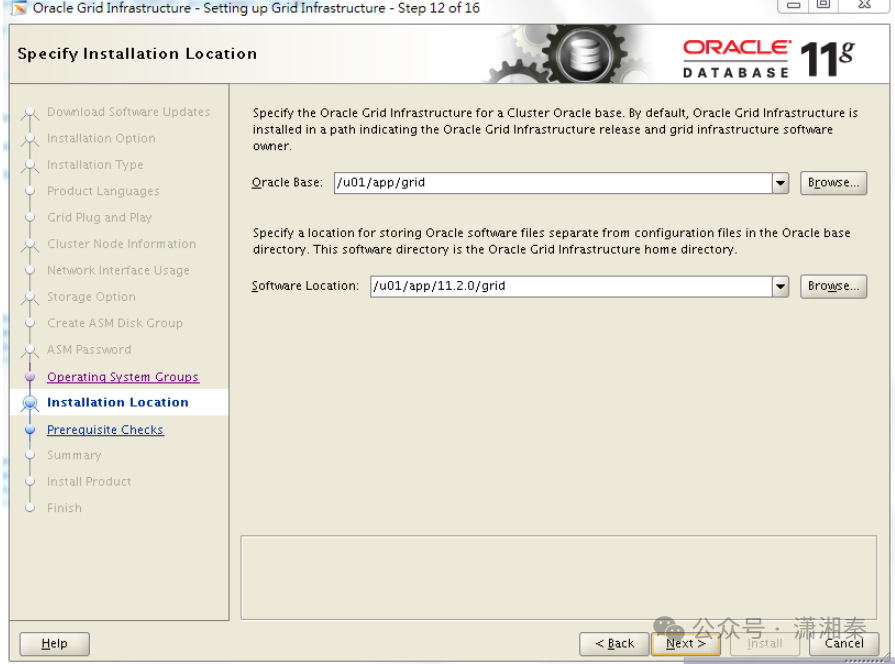

Installation location: Oracle Base: /u01/app/grid, Software Location: /u01/app/11.2.0/grid

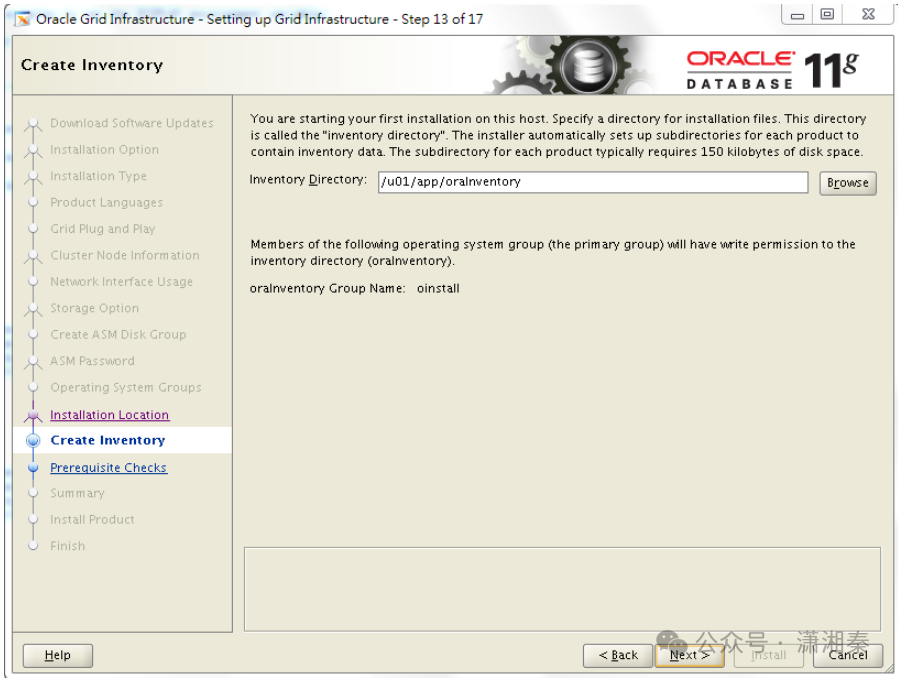

Inventory Directory: /u01/app/oraInventory

Summary

Begin the installation

Run the root scripts one at a time, in this order:

RAC node 1: /u01/app/oraInventory/orainstRoot.sh

RAC node 2: /u01/app/oraInventory/orainstRoot.sh

RAC node 1: /u01/app/11.2.0/grid/root.sh

RAC node 2: /u01/app/11.2.0/grid/root.sh
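The ordering rule above can be written down as a small dry-run helper. It prints the sequence and runs nothing. This is a sketch that reuses the inventory and Grid home paths from this document. The function name is hypothetical, and node names are passed as arguments.

```shell
#!/bin/sh
# Print the root scripts of the GI install in the order they must be run:
# orainstRoot.sh on every node first, then root.sh on every node. Each
# script must finish on one node before it is started on the next node.
# Paths are the ones used in this document; nothing is executed.
print_root_script_order() {
    inventory=/u01/app/oraInventory
    grid_home=/u01/app/11.2.0/grid
    for node in "$@"; do
        echo "$node: $inventory/orainstRoot.sh"
    done
    for node in "$@"; do
        echo "$node: $grid_home/root.sh"
    done
}
# Example: print_root_script_order nsf12rac1 nsf12rac2
```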

Note: when you install 11gR2 RAC Grid Infrastructure on Solaris 11, running root.sh at the final step fails with the following error:

pwd: cannot access parent directories [Permission denied]

Run root.sh from a directory that has read/execute access to the grid owner 'grid'

/u01/app/11.2.0/grid/perl/bin/perl -I/u01/app/11.2.0/grid/perl/lib -I/u01/app/11.2.0/grid/crs/install /u01/app/11.2.0/grid/crs/install/rootcrs.pl execution failed

This is a known bug; see the My Oracle Support note:

11.2 Grid Infrastructure root.sh Failed with "error retrieving current directory" (Doc ID 1114203.1)

The workaround is simple: change into GRID_HOME, then run ./root.sh from there.
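A minimal sketch of the same workaround as a reusable function. It runs root.sh from inside the given Grid home, so the working directory is one the grid owner can access. The function name is hypothetical. It only changes directory and runs the script; it does not fix permissions.

```shell
#!/bin/sh
# Workaround for the root.sh "cannot access parent directories" failure
# (Doc ID 1114203.1): run root.sh with GRID_HOME as the working directory
# instead of a directory the grid owner cannot read.
run_root_sh_from_home() {
    if [ -z "$1" ]; then
        echo "usage: run_root_sh_from_home GRID_HOME" >&2
        return 2
    fi
    # The subshell keeps the caller's own working directory unchanged.
    (
        cd "$1" || exit 1
        ./root.sh
    )
}
# Usage as root on each node in turn:
#   run_root_sh_from_home /u01/app/11.2.0/grid
```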

# cd $GRID_HOME

# ./root.sh

Finish

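3.5 Post-installation verification of Clusterware

Note: gsd is a service deprecated in 11g, so an OFFLINE status for it is normal.

To verify the SCAN configuration, run cluvfy comp scan -verbose to get detailed output.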

4. Install the Database software and create the shared ASM disk groups

4.1 Install the database software

Log in to the remote desktop as the oracle user

$ export DISPLAY=10.7.68.44:0.0

$ /usr/openwin/bin/xhost +

(Run the two commands above again after logging in.)

[oracle@nsf12rac1:~]$ cd /export/home/install/database

[oracle@nsf12rac1:/export/home/install/database]$ ./runInstaller

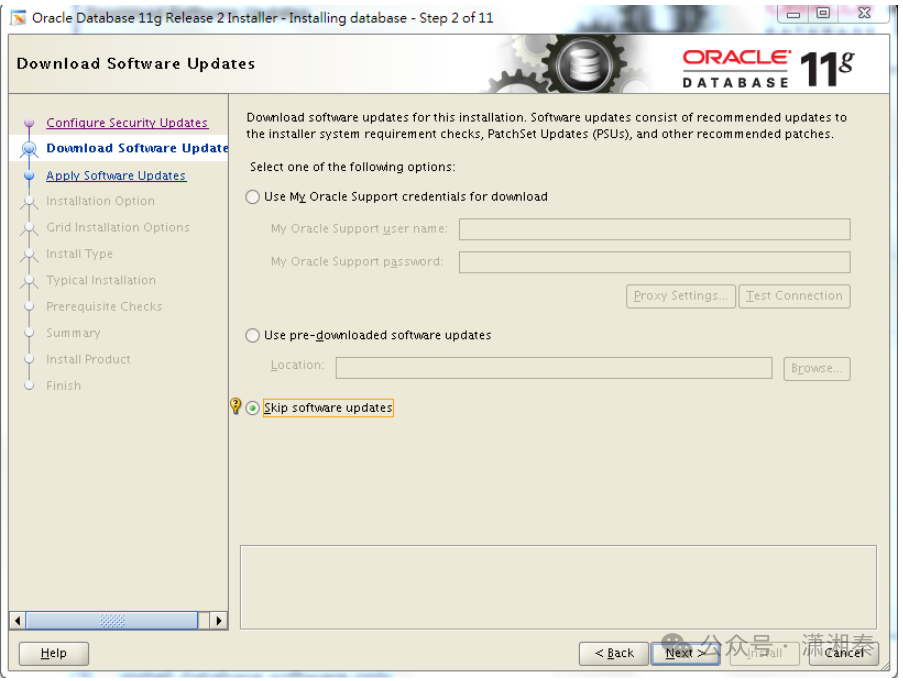

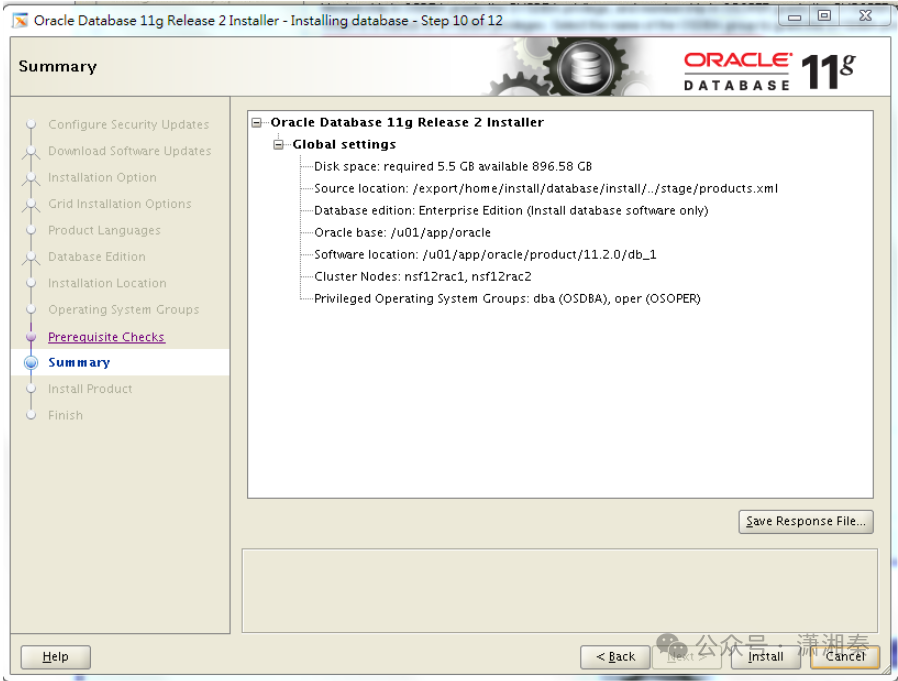

skip MOS mail set

skip software update

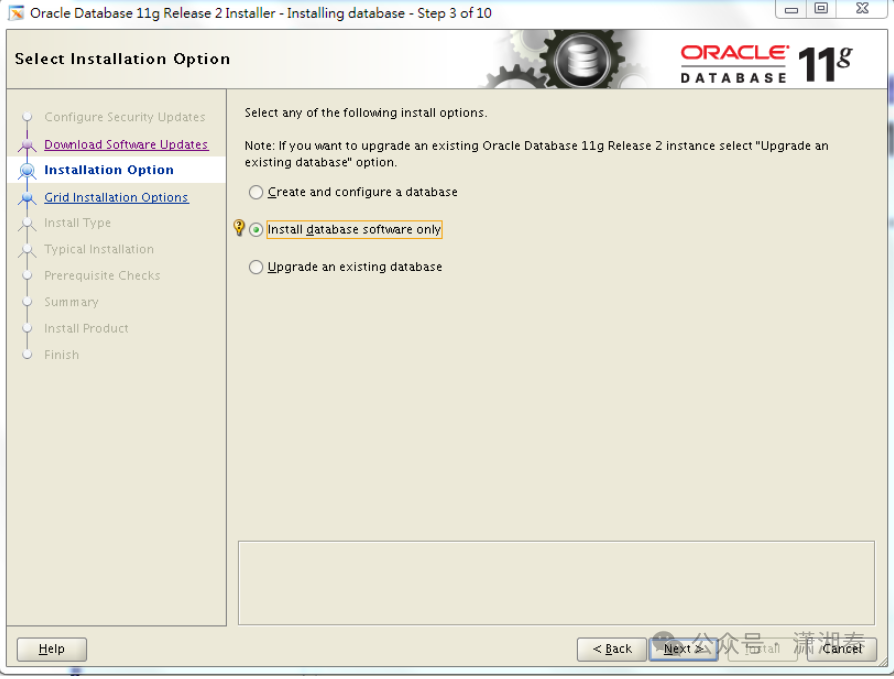

install database software only

RAC install choose two node

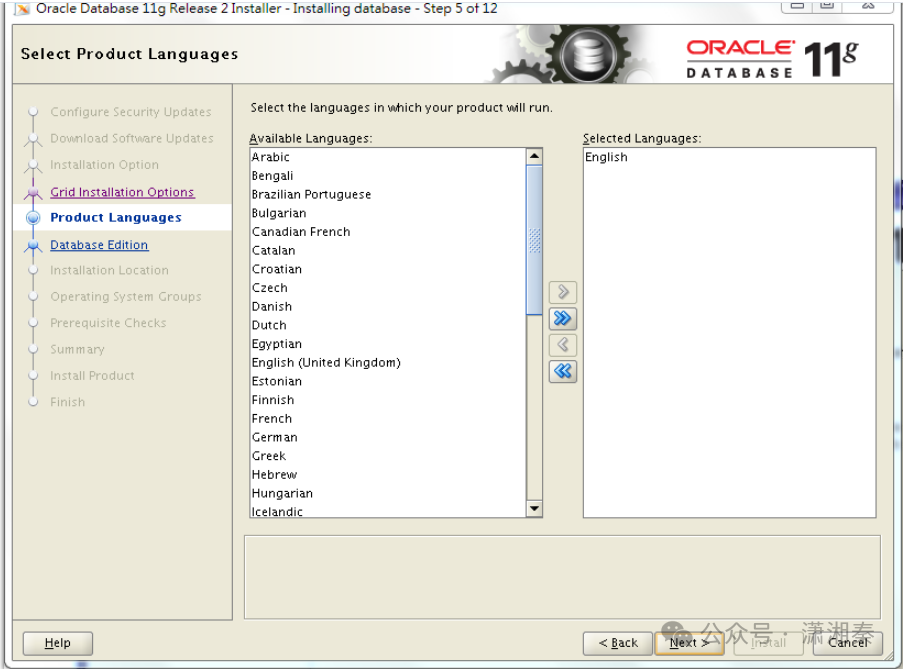

Default language: English

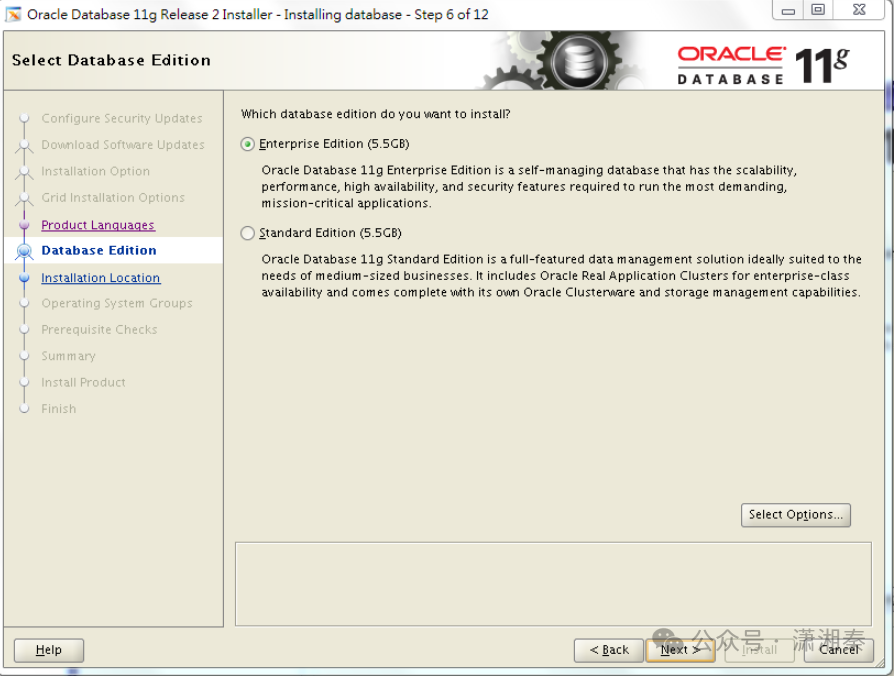

Install Enterprise Edition

Installation location: Oracle Base: /u01/app/oracle

Software Location: /u01/app/oracle/product/11.2.0/db_1

OS groups: OSDBA group: dba, OSOPER group: oper

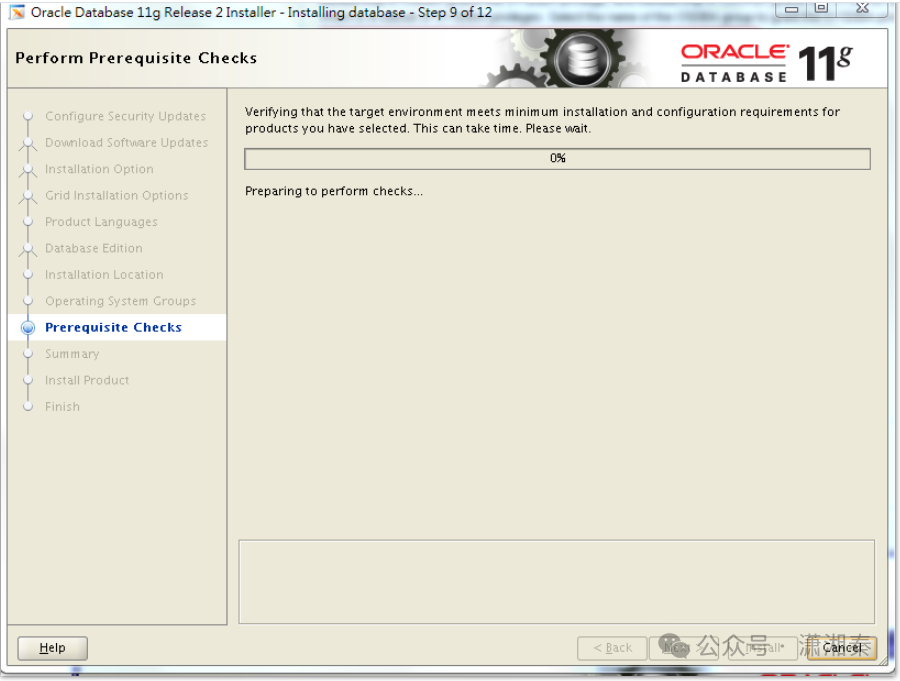

Prerequisite checks

Summary

Install product

RAC node 1: # /u01/app/oracle/product/11.2.0/db_1/root.sh

RAC node 2: # /u01/app/oracle/product/11.2.0/db_1/root.sh

Finish: installation successful

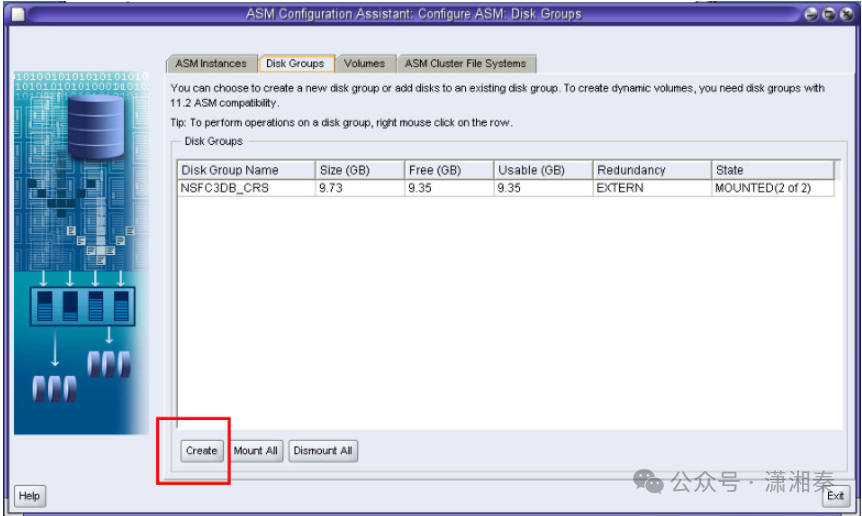

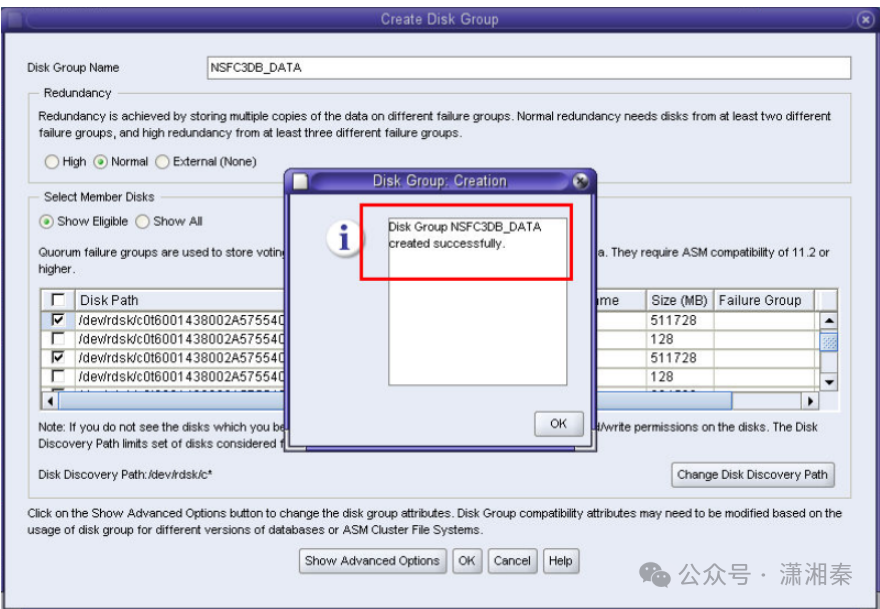

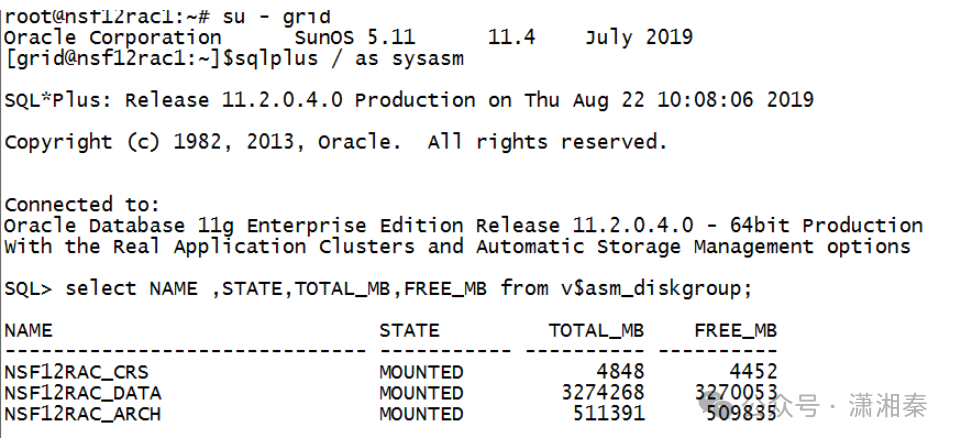

4.2 Create the ASM disk groups with ASMCA

$ export DISPLAY=10.7.68.44:0.0

$ /usr/openwin/bin/xhost +

Be sure to log in to the remote desktop as the grid user.

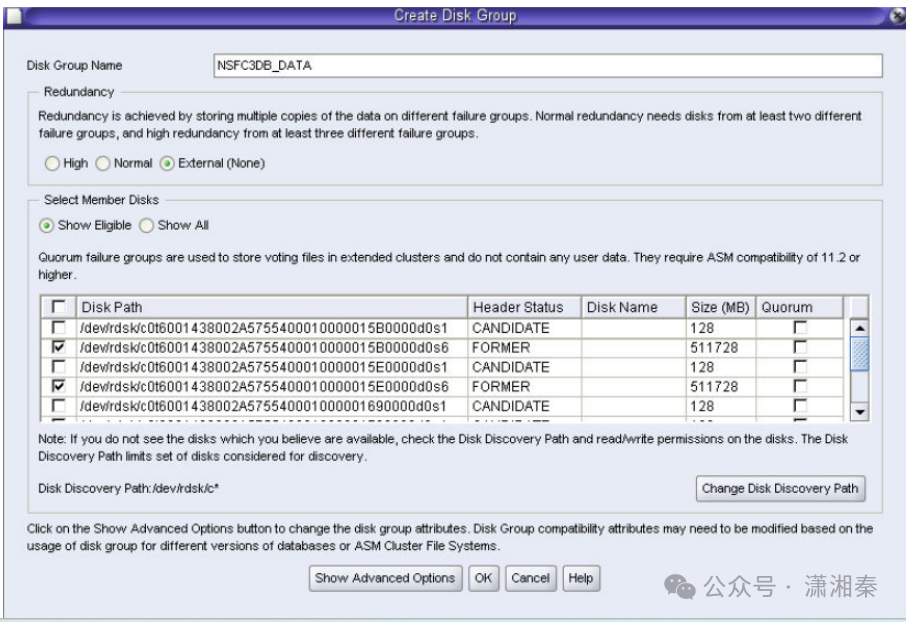

Click Disk Groups -> Create

Redundancy: External; select the rdsk/xxxxs6 disk

Disk group created successfully

Create the ARCH disk group

Check that the disk groups are mounted

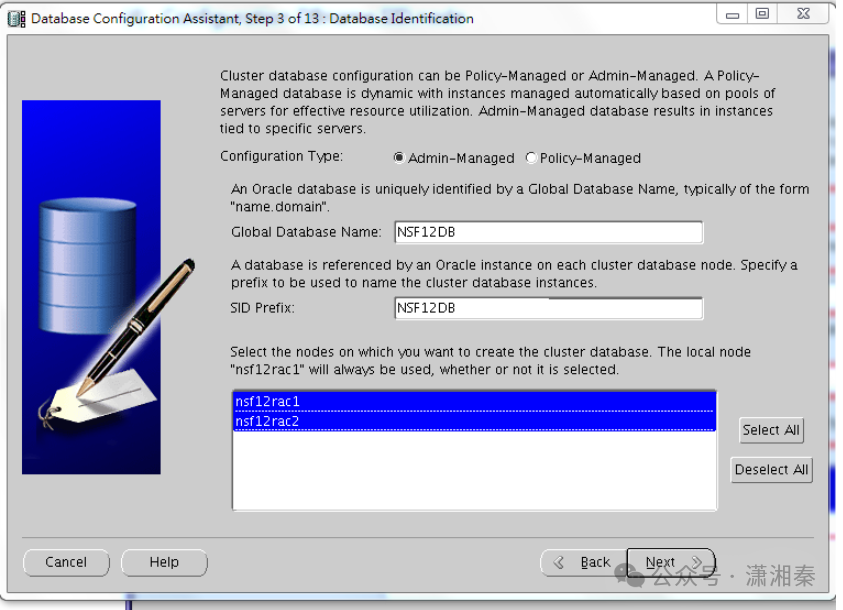
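Besides the ASMCA screen, you can check mounted state from the command line with asmcmd lsdg as the grid user. This is a minimal sketch that assumes the usual lsdg layout: State is the first column and Name is the last, with a trailing slash. The function names are hypothetical. The disk group names in the usage comment are the ones srvctl reports for this database.

```shell
#!/bin/sh
# Read "asmcmd lsdg" output on stdin and print the names of MOUNTED disk
# groups, stripping the trailing "/" from the Name column.
mounted_diskgroups() {
    awk 'NR > 1 && $1 == "MOUNTED" { name = $NF; sub(/\/$/, "", name); print name }'
}

# Succeed only if every disk group given as an argument is mounted;
# report each missing one on stderr.
require_mounted() {
    mounted=$(mounted_diskgroups)
    rc=0
    for dg in "$@"; do
        if ! printf '%s\n' "$mounted" | grep -Fqx "$dg"; then
            echo "not mounted: $dg" >&2
            rc=1
        fi
    done
    return $rc
}
# Hypothetical usage, as the grid user on each node:
#   asmcmd lsdg | require_mounted NSF12RAC_DATA NSF12RAC_ARCH
```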

5. Create the RAC cluster database with DBCA

5.1 Create the RAC cluster database (without configuring EM)

Log in to the remote desktop as the oracle user

$ export DISPLAY=10.7.68.44:0.0

$ /usr/openwin/bin/xhost +

(Run the two commands above again after logging in.)

$ dbca

The graphical configuration interface appears; start the setup.

Oracle Real Application Clusters (RAC) database

Create a database

General purpose or transaction processing

Configuration: database name, SID, nodes

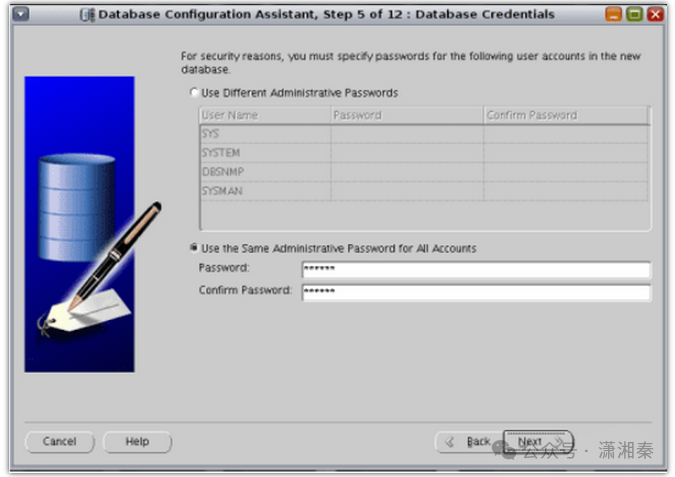

Password (mix upper- and lowercase letters with digits)

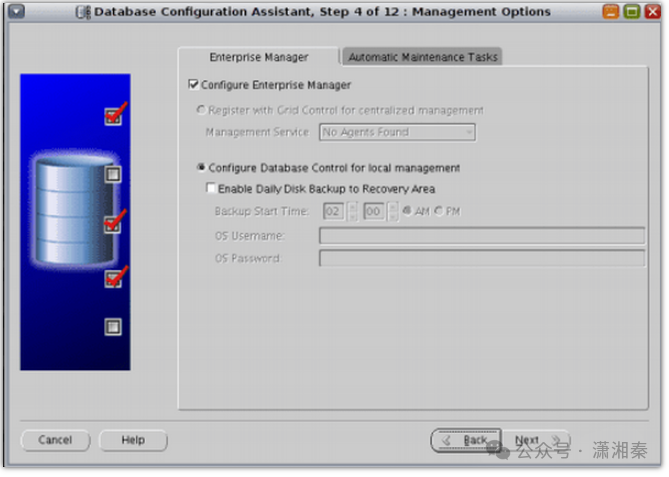

Management Options

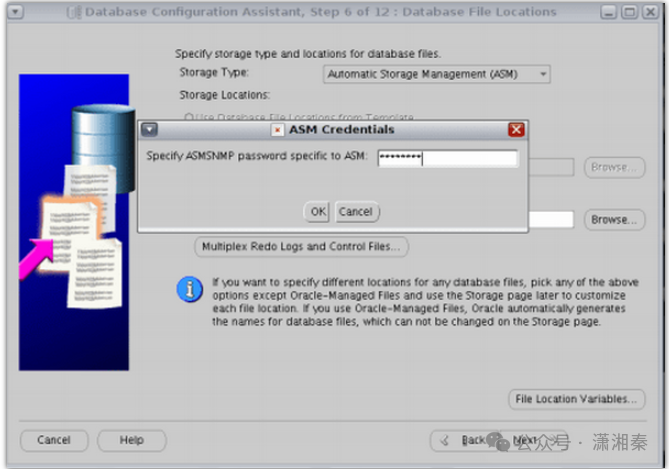

Database File Locations

After you click Next, the ASM administrator password screen appears.

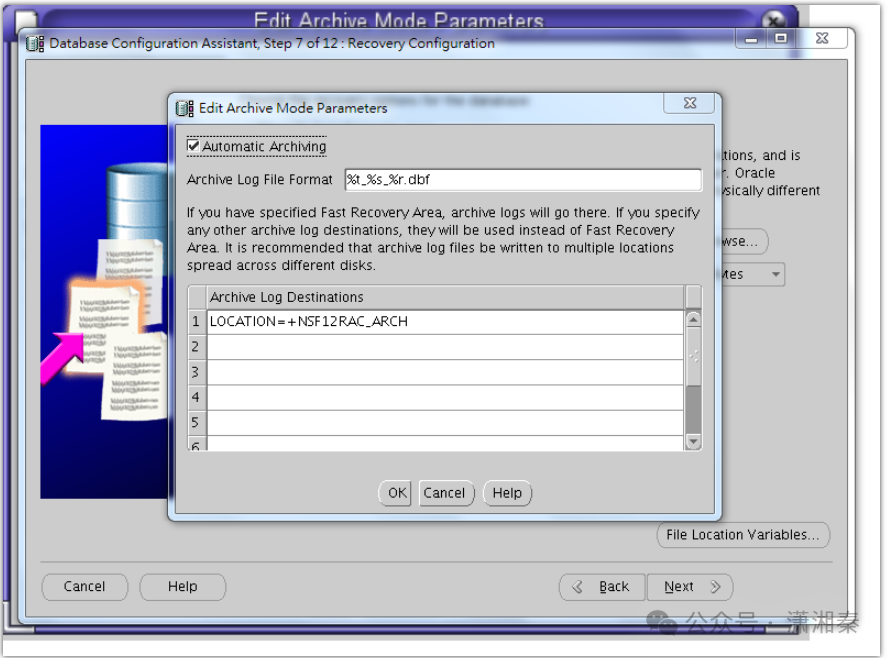

Configure the FRA and archiving

Archive log location

Database Content

Database storage set

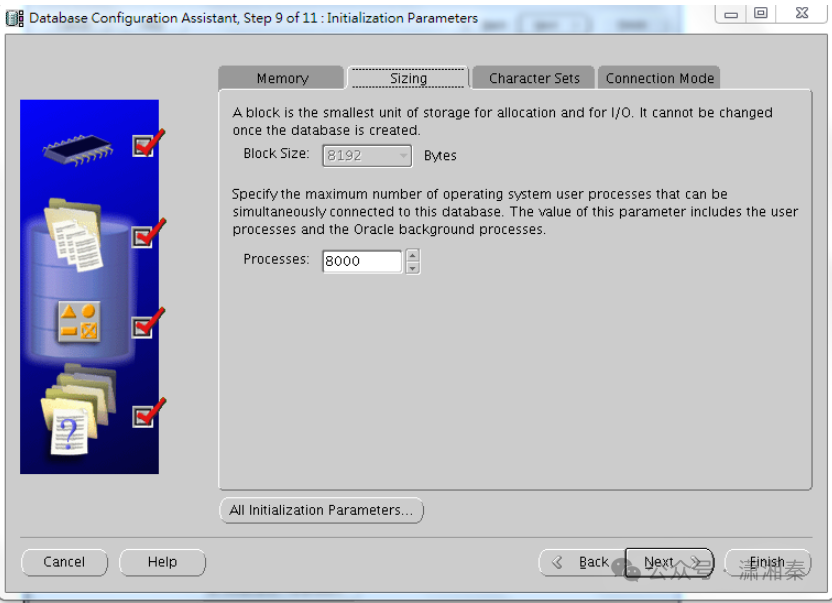

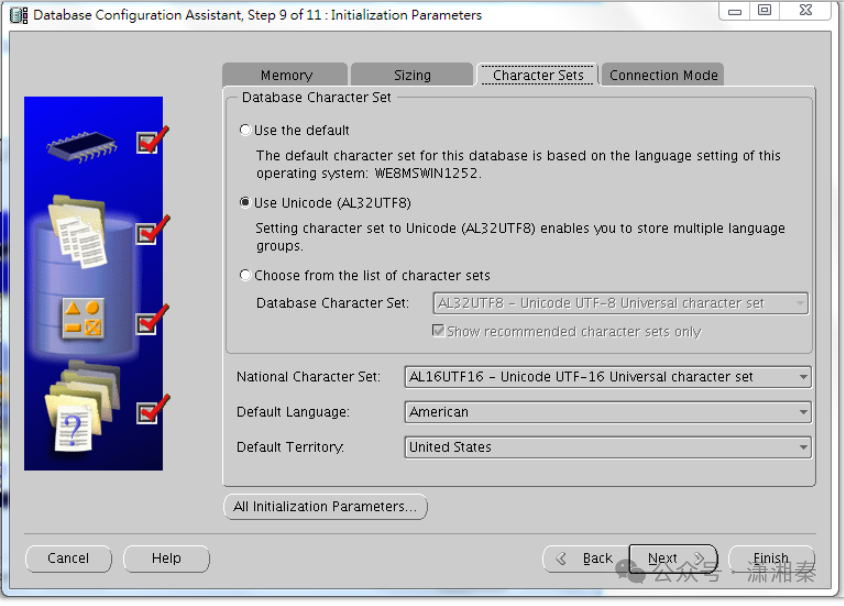

Initialization Parameters

Database storage set

Adjust all of these values; note that the sizes are in MB (M).

Create the database and store the creation scripts

Create database

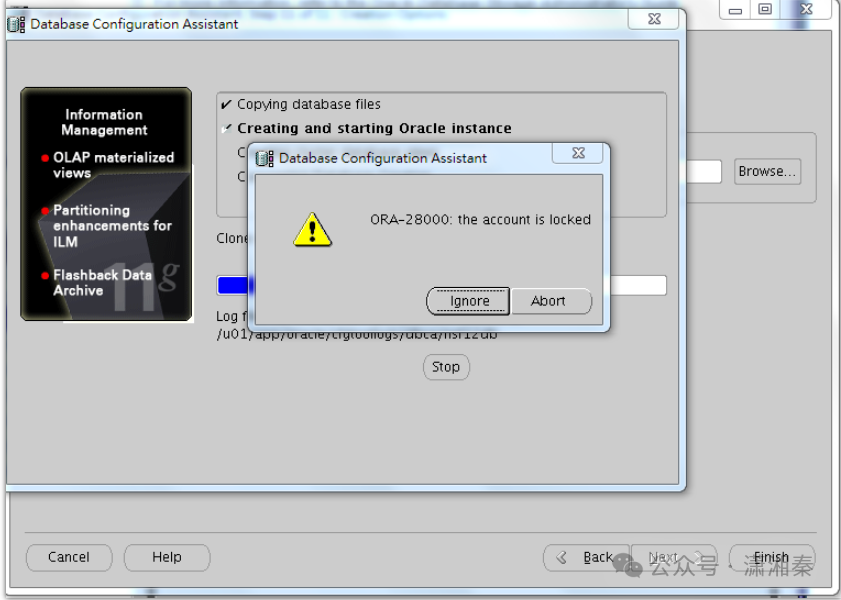

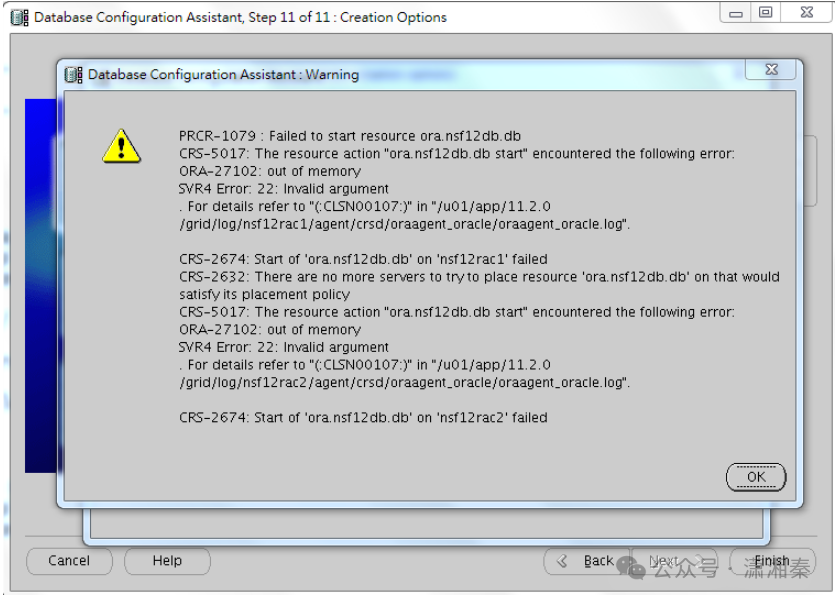

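After the oracle user's group membership was changed and the software reinstalled, the error disappeared.

Database created successfully

Warnings and errors appeared during the installation, but the database was built successfully. No anomalies have been seen so far; it remains under observation.

5.2 Post-installation checks

Listener check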
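The listener status output should list every expected service with a READY instance. This is a minimal sketch that extracts the service/instance pairs reported as READY. It assumes the standard Service "..." and Instance "...", status READY lines, with straight double quotes as lsnrctl prints them. The function name is hypothetical.

```shell
#!/bin/sh
# Read "lsnrctl status" output on stdin and print "service instance" for
# every instance the listener reports with status READY.
# Splitting on double quotes makes $2 the quoted service/instance name.
listener_ready_instances() {
    awk -F'"' '
        /^[ \t]*Service "/ { service = $2 }
        /^[ \t]*Instance "/ && /status READY/ { print service, $2 }
    '
}
# Hypothetical usage, as the grid or oracle user:
#   lsnrctl status | listener_ready_instances
```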

[oracle@nsf12rac1:~]$ lsnrctl status

LSNRCTL for Solaris: Version 11.2.0.4.0 - Production on 21-AUG-2019 13:33:03

Copyright (c) 1991, 2013, Oracle. All rights reserved.

Connecting to (ADDRESS=(PROTOCOL=tcp)(HOST=)(PORT=1521))

STATUS of the LISTENER

------------------------

Alias LISTENER

Version TNSLSNR for Solaris: Version 11.2.0.4.0 - Production

Start Date 20-AUG-2019 00:37:39

Uptime 0 days 20 hr. 55 min. 25 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/app/11.2.0/grid/network/admin/listener.ora

Listener Log File /u01/app/grid/diag/tnslsnr/nsf12rac1/listener/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=172.16.50.34)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=172.16.50.37)(PORT=1521)))

Services Summary...

Service "+ASM" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "nsf12db" has 1 instance(s).

Instance "nsf12db1", status READY, has 1 handler(s) for this service...

Service "nsf12dbXDB" has 1 instance(s).

Instance "nsf12db1", status READY, has 1 handler(s) for this service...

The command completed successfully

Network check

root@nsf12rac1:~# ifconfig -a

lo0: flags=2001000849<UP,LOOPBACK,RUNNING,MULTICAST,IPv4,VIRTUAL> mtu 8232 index 1

inet 127.0.0.1 netmask ff000000

net0: flags=100001000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4,PHYSRUNNING> mtu 1500 index 2

inet 172.16.50.34 netmask ffffffc0 broadcast 172.16.50.63

ether 0:10:e0:e2:d3:5e

net0:1: flags=100001040843<UP,BROADCAST,RUNNING,MULTICAST,DEPRECATED,IPv4,PHYSRUNNING> mtu 1500 index 2

inet 172.16.50.37 netmask ffffffc0 broadcast 172.16.50.63

net0:2: flags=100001040843<UP,BROADCAST,RUNNING,MULTICAST,DEPRECATED,IPv4,PHYSRUNNING> mtu 1500 index 2

inet 172.16.50.13 netmask ffffffc0 broadcast 172.16.50.63

net1: flags=100001000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4,PHYSRUNNING> mtu 1500 index 3

inet 192.168.1.101 netmask ffffff00 broadcast 192.168.1.255

ether 0:10:e0:e2:d3:5f

net1:1: flags=100001000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4,PHYSRUNNING> mtu 1500 index 3

inet 169.254.71.193 netmask ffff0000 broadcast 169.254.255.255

lo0: flags=2002000849<UP,LOOPBACK,RUNNING,MULTICAST,IPv6,VIRTUAL> mtu 8252 index 1

inet6 ::1/128

net0: flags=120002004841<UP,RUNNING,MULTICAST,DHCP,IPv6,PHYSRUNNING> mtu 1500 index 2

inet6 fe80::210:e0ff:fee2:d35e/10

ether 0:10:e0:e2:d3:5e

net1: flags=120002000840<RUNNING,MULTICAST,IPv6,PHYSRUNNING> mtu 1500 index 3

inet6 ::/0

ether 0:10:e0:e2:d3:5f
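In the output above, the interconnect net1 also carries 169.254.71.193 on the logical interface net1:1. That is the link-local address Grid Infrastructure assigns for its highly available interconnect (HAIP). This is a minimal sketch that lists such addresses from Solaris ifconfig -a output. It assumes the header-line format shown above (name followed by ": flags="). The function name is hypothetical.

```shell
#!/bin/sh
# Read Solaris "ifconfig -a" output on stdin and print each logical
# interface that carries a 169.254.x.x IPv4 address, with that address.
haip_addresses() {
    awk '
        # Interface header lines look like "net1:1: flags=...".
        /^[A-Za-z0-9:_-]+: flags=/ { iface = $1; sub(/:$/, "", iface) }
        $1 == "inet" && $2 ~ /^169\.254\./ { print iface, $2 }
    '
}
# Hypothetical usage, as root on each node:
#   ifconfig -a | haip_addresses
```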

View the configuration of the specified cluster database

root@nsf12rac1:~# su - grid

Oracle Corporation SunOS 5.11 11.4 July 2019

[grid@nsf12rac1:~]$ srvctl config database -d nsf12db

Database unique name: nsf12db

Database name: nsf12db

Oracle home: /u01/app/oracle/product/11.2.0/db_1

Oracle user: oracle

Spfile: +NSF12RAC_DATA/nsf12db/spfilensf12db.ora

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools: nsf12db

Database instances: nsf12db1,nsf12db2

Disk Groups: NSF12RAC_DATA,NSF12RAC_ARCH

Mount point paths:

Services:

Type: RAC

Database is administrator managed

Log in to the database

[oracle@nsf12rac2:~]$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.4.0 Production on Wed Aug 21 13:48:17 2019

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP, Data Mining and Real Application Testing options

SQL> show parameter sga

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

lock_sga boolean FALSE

pre_page_sga boolean FALSE

sga_max_size big integer 104192M

sga_target big integer 0

SQL> show parameter pga

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

pga_aggregate_target big integer 0

SQL>

SQL> show parameter memory

Get cluster time synchronization information

[grid@nsf12rac2:~]$ cluvfy comp clocksync

Verifying Clock Synchronization across the cluster nodes

Checking if Clusterware is installed on all nodes...

Check of Clusterware install passed

Checking if CTSS Resource is running on all nodes...

CTSS resource check passed

Querying CTSS for time offset on all nodes...

Query of CTSS for time offset passed

Check CTSS state started...

CTSS is in Active state. Proceeding with check of clock time offsets on all nodes...

Check of clock time offsets passed

Oracle Cluster Time Synchronization Services check passed

Verification of Clock Synchronization across the cluster nodes was successful.
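NTP was disabled in section 1.3, so CTSS is expected to run in Active mode, as it does here. This is a minimal sketch that checks a cluvfy comp clocksync report for both the Active state and the final success line. It matches the message texts exactly as they appear above. The function name is hypothetical.

```shell
#!/bin/sh
# Check a "cluvfy comp clocksync" report read from stdin: CTSS must be in
# Active state and the final verification line must report success.
# Prints "ok" on success, otherwise explains what is missing on stderr.
clocksync_ok() {
    report=$(cat)
    if ! printf '%s\n' "$report" | grep -q 'CTSS is in Active state'; then
        echo "CTSS is not in Active state" >&2
        return 1
    fi
    if ! printf '%s\n' "$report" | grep -q 'Verification of Clock Synchronization across the cluster nodes was successful'; then
        echo "clock synchronization verification did not succeed" >&2
        return 1
    fi
    echo "ok"
}
# Hypothetical usage, as the grid user:
#   cluvfy comp clocksync | clocksync_ok
```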