There are many standards related to color space conversion, for example:

https://docs.opencv.org/3.4.0/de/d25/imgproc_color_conversions.html

https://www.itu.int/rec/R-REC-BT.601-4-199407-S/en
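Among other things, these standards define how 8-bit YCbCr maps back to RGB. As a reference point, here is a minimal floating-point sketch of the BT.601 conversion for limited ("TV", AVCOL_RANGE_MPEG) range. It is derived from the luma weights Kr = 0.299 and Kb = 0.114. The helper name bt601_limited_to_rgb is hypothetical. swscale does not run this code: it uses fixed-point coefficients and lookup tables.

```c
#include <assert.h>
#include <stdint.h>

/* Clamp to [0, 255] and round to the nearest integer. */
static uint8_t clip_round(double v)
{
    if (v <= 0.0)
        return 0;
    if (v >= 255.0)
        return 255;
    return (uint8_t)(v + 0.5);
}

/* Hypothetical helper: BT.601 limited range (Y in [16,235], Cb/Cr in [16,240])
 * to full-range 8-bit RGB, written as rgb[0]=R, rgb[1]=G, rgb[2]=B. */
static void bt601_limited_to_rgb(int y, int cb, int cr, uint8_t rgb[3])
{
    const double kr = 0.299, kb = 0.114, kg = 1.0 - kr - kb;
    const double yn = (y - 16) * 255.0 / 219.0;   /* luma stretched to 0..255 */
    const double pb = (cb - 128) * 255.0 / 224.0; /* chroma stretched to about +-127.5 */
    const double pr = (cr - 128) * 255.0 / 224.0;

    assert(y >= 0 && y <= 255 && cb >= 0 && cb <= 255 && cr >= 0 && cr <= 255);

    rgb[0] = clip_round(yn + 2.0 * (1.0 - kr) * pr);
    rgb[1] = clip_round(yn - 2.0 * (1.0 - kb) * kb / kg * pb
                           - 2.0 * (1.0 - kr) * kr / kg * pr);
    rgb[2] = clip_round(yn + 2.0 * (1.0 - kb) * pb);
}
```

For full range (AVCOL_RANGE_JPEG), the offset of 16 and the 255/219 and 255/224 scale factors drop out.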

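On the ffmpeg command line, color space conversion is performed by the scale filter (vf_scale), which calls libswscale to do the actual conversion.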

We use gdb to debug ffmpeg.

First, build FFmpeg n4.5-dev with debug information. The build script is as follows:

```shell
./configure \
    --prefix=/workspace/FFmpeg-n4.5-dev/libffmpeg \
    --enable-shared \
    --disable-static \
    --extra-cflags=-g \
    --enable-debug \
    --disable-optimizations \
    --disable-stripping
make -j8
make install
```

After the build, use ffmpeg_g (the binary that keeps its debug symbols) for tracing:

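```
gdb ./ffmpeg_g
set args -y -i /workspace/libx264_640x360_baseline_5_frames.h264 -vf "scale=in_color_matrix=bt601:in_range=2" -pix_fmt bgr24 ffmpeg-bgr24.rgb
b yuv2rgb.c:ff_yuv2rgb_get_func_ptr
```

Here in_range=2 is the numeric value of AVCOL_RANGE_JPEG, so the input is treated as full range.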

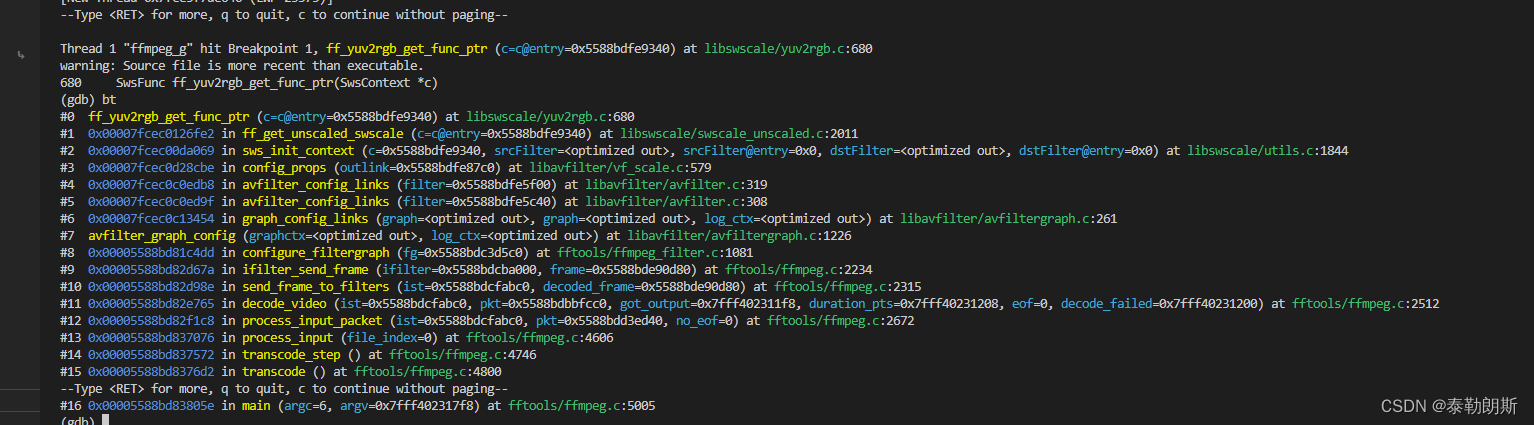

The ffmpeg call stacks are as follows.

The first is the initialization path, which ends in ff_yuv2rgb_get_func_ptr:

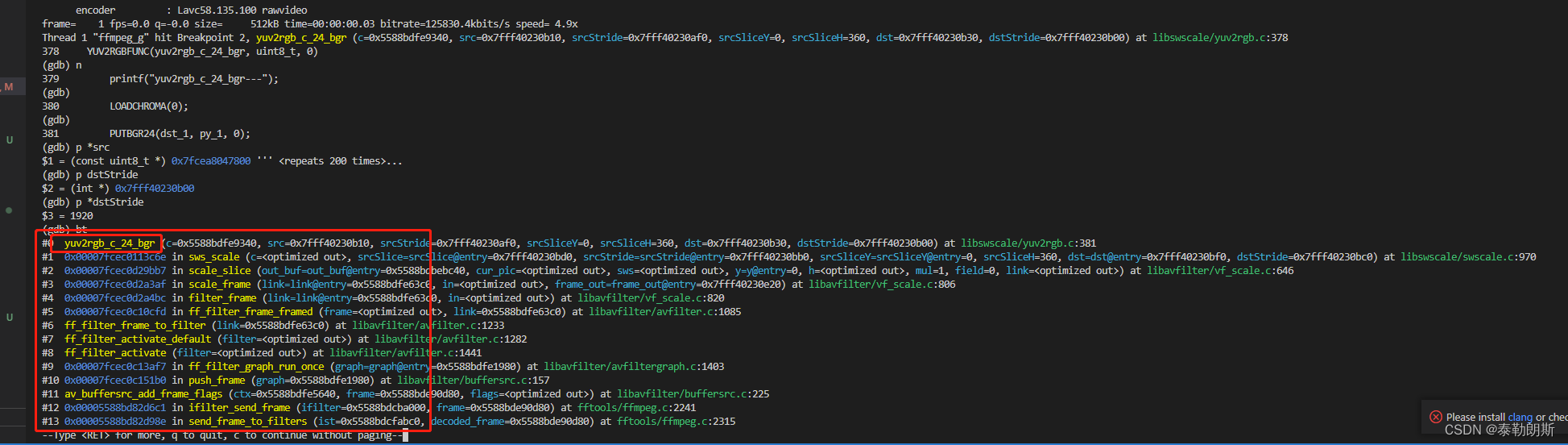
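As the stack suggests, ff_yuv2rgb_get_func_ptr picks a converter function once, at init time, based on the destination pixel format, so the per-frame path only has to call through a function pointer. The sketch below illustrates that dispatch pattern. The enum, the pack_* functions and get_pack_func are all hypothetical stand-ins and not FFmpeg code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for enum AVPixelFormat values. */
enum pix_fmt { FMT_RGB24, FMT_BGR24, FMT_GRAY8 };

/* Hypothetical converter signature: pack one RGB triple into dst. */
typedef void (*pack_fn)(const uint8_t rgb[3], uint8_t *dst);

static void pack_rgb24(const uint8_t rgb[3], uint8_t *dst)
{
    assert(dst != NULL);
    dst[0] = rgb[0]; dst[1] = rgb[1]; dst[2] = rgb[2];
}

static void pack_bgr24(const uint8_t rgb[3], uint8_t *dst)
{
    assert(dst != NULL);
    dst[0] = rgb[2]; dst[1] = rgb[1]; dst[2] = rgb[0];  /* B, G, R byte order */
}

/* Chosen once at init; the hot loop then calls through the pointer
 * without re-checking the format. */
static pack_fn get_pack_func(enum pix_fmt fmt)
{
    switch (fmt) {
    case FMT_RGB24: return pack_rgb24;
    case FMT_BGR24: return pack_bgr24;
    default:        return NULL;  /* unsupported in this sketch */
    }
}
```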

Below is the call flow during a normal CSC (color space conversion) run:

```
ifilter_send_frame()
  configure_filtergraph(fg)
    avfilter_graph_config(fg->graph, NULL)
      graph_config_links(graphctx, log_ctx)
        avfilter_config_links(filt)
          config_link(link)    /* here: vf_scale's config_props(AVFilterLink *outlink) */
            sws_init_context(*s, NULL, NULL)
```

The config_props in this chain is the one registered by the scale filter in libavfilter/vf_scale.c:

```c
static const AVClass scale_class = {
    .class_name          = "scale",
    .item_name           = av_default_item_name,
    .option              = scale_options,
    .version             = LIBAVUTIL_VERSION_INT,
    .category            = AV_CLASS_CATEGORY_FILTER,
#if FF_API_CHILD_CLASS_NEXT
    .child_class_next    = child_class_next,
#endif
    .child_class_iterate = child_class_iterate,
};

static const AVFilterPad avfilter_vf_scale_inputs[] = {
    {
        .name         = "default",
        .type         = AVMEDIA_TYPE_VIDEO,
        .filter_frame = filter_frame,     // per-frame entry point
    },
    { NULL }                              // sentinel ending the pad list
};

static const AVFilterPad avfilter_vf_scale_outputs[] = {
    {
        .name         = "default",
        .type         = AVMEDIA_TYPE_VIDEO,
        .config_props = config_props,     // called from avfilter_config_links()
    },
    { NULL }
};

AVFilter ff_vf_scale = {
    .name            = "scale",
    .description     = NULL_IF_CONFIG_SMALL("Scale the input video size and/or convert the image format."),
    .init_dict       = init_dict,
    .uninit          = uninit,
    .query_formats   = query_formats,
    .priv_size       = sizeof(ScaleContext),
    .priv_class      = &scale_class,
    .inputs          = avfilter_vf_scale_inputs,
    .outputs         = avfilter_vf_scale_outputs,
    .process_command = process_command,
};
```
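The pad arrays above end with a { NULL } entry instead of carrying an explicit length; code walking them stops at the first pad whose name is NULL, in the spirit of libavfilter's avfilter_pad_count() from this era. The sketch below shows that sentinel convention with a hypothetical, heavily reduced Pad struct (not the real AVFilterPad).

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, reduced stand-in for AVFilterPad. */
typedef struct Pad {
    const char *name;
    int (*config_props)(void *link);
} Pad;

static int my_config_props(void *link) { (void)link; return 0; }

static const Pad outputs[] = {
    { .name = "default", .config_props = my_config_props },
    { NULL }  /* sentinel: name == NULL marks the end */
};

/* Count pads up to the sentinel entry. */
static int pad_count(const Pad *pads)
{
    int n = 0;
    assert(pads != NULL);
    while (pads[n].name)
        n++;
    return n;
}
```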

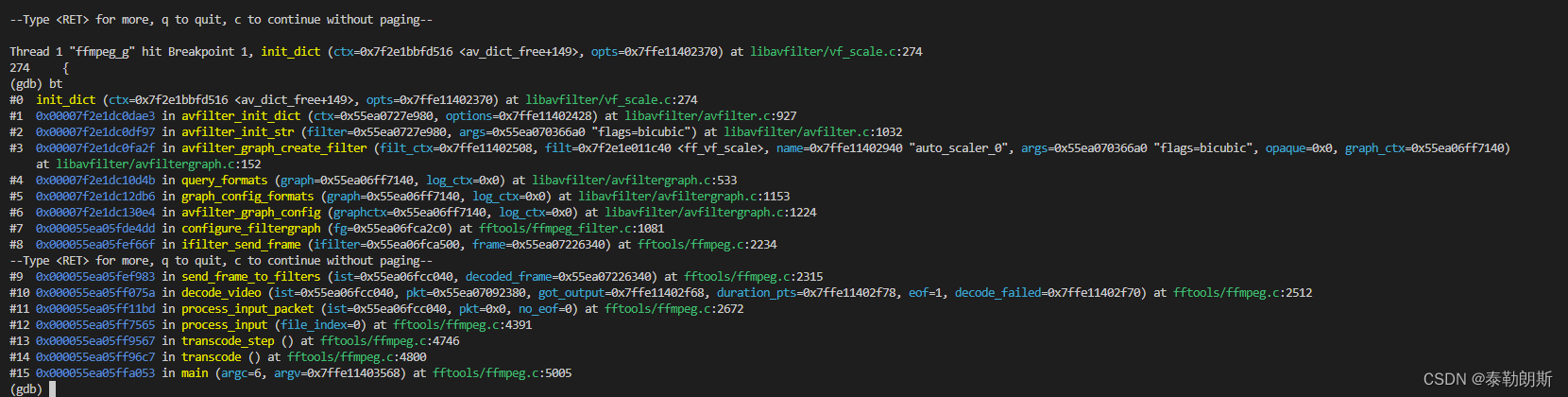

Below is the call stack leading to init_dict:

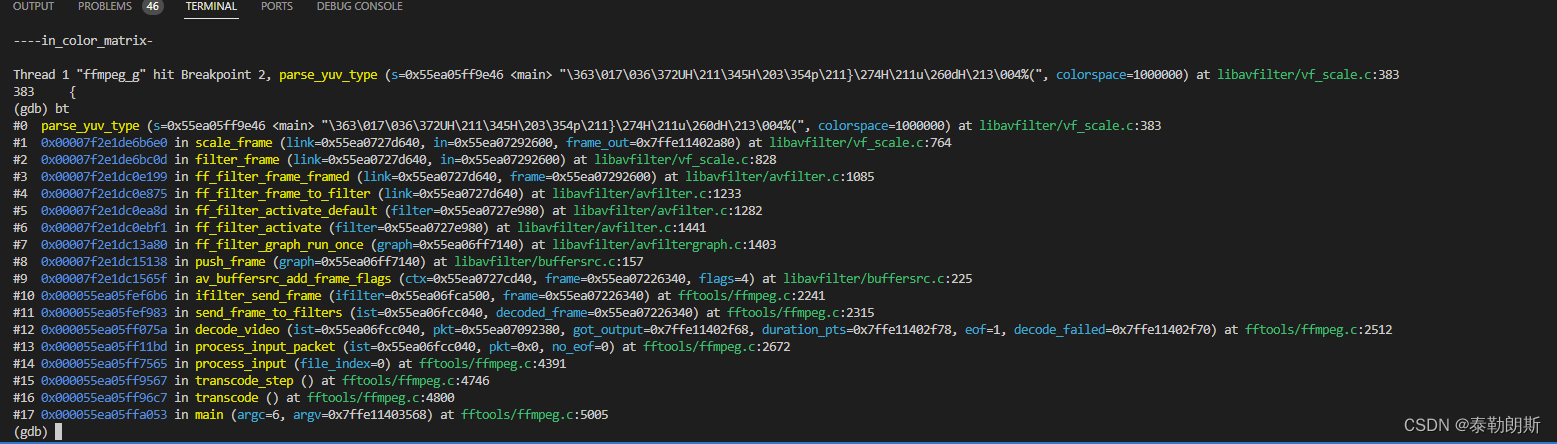

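Next is parse_yuv_type(), which scale_frame() (also shown below) calls to turn the in_color_matrix/out_color_matrix strings into coefficient tables. The two printf calls appear to be debug output added for this trace rather than upstream code: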

```c
static const int *parse_yuv_type(const char *s, enum AVColorSpace colorspace)
{
    if (!s)
        s = "bt601";

    printf("parse_yuv_type=%s>>>>>>>>\n", s);  // debug output; placed after the NULL check

    if (s && strstr(s, "bt709")) {
        colorspace = AVCOL_SPC_BT709;
    } else if (s && strstr(s, "fcc")) {
        colorspace = AVCOL_SPC_FCC;
    } else if (s && strstr(s, "smpte240m")) {
        colorspace = AVCOL_SPC_SMPTE240M;
    } else if (s && (strstr(s, "bt601") || strstr(s, "bt470") || strstr(s, "smpte170m"))) {
        colorspace = AVCOL_SPC_BT470BG;
    } else if (s && strstr(s, "bt2020")) {
        colorspace = AVCOL_SPC_BT2020_NCL;
    }

    // out-of-range values and YCgCo (8) fall back to BT.601 (AVCOL_SPC_BT470BG)
    if (colorspace < 1 || colorspace > 10 || colorspace == 8) {
        colorspace = AVCOL_SPC_BT470BG;
    }

    printf("colorspace=%d>>>>>>>>\n", colorspace);  // debug output

    return sws_getCoefficients(colorspace);
}
```
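The string matching above can be checked in isolation. The sketch below mirrors the same logic but returns the colorspace index instead of calling sws_getCoefficients(). The function name yuv_type_to_colorspace and the SPC_* constants are hypothetical; the numeric values are copied from enum AVColorSpace.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Values copied from enum AVColorSpace (libavutil/pixfmt.h). */
enum {
    SPC_BT709 = 1, SPC_UNSPECIFIED = 2, SPC_FCC = 4, SPC_BT470BG = 5,
    SPC_SMPTE240M = 7, SPC_YCGCO = 8, SPC_BT2020_NCL = 9
};

/* Hypothetical mirror of parse_yuv_type(): string first, else the
 * colorspace passed in, with the same fallback to BT.601. */
static int yuv_type_to_colorspace(const char *s, int colorspace)
{
    if (!s)
        s = "bt601";
    if (strstr(s, "bt709"))
        colorspace = SPC_BT709;
    else if (strstr(s, "fcc"))
        colorspace = SPC_FCC;
    else if (strstr(s, "smpte240m"))
        colorspace = SPC_SMPTE240M;
    else if (strstr(s, "bt601") || strstr(s, "bt470") || strstr(s, "smpte170m"))
        colorspace = SPC_BT470BG;
    else if (strstr(s, "bt2020"))
        colorspace = SPC_BT2020_NCL;

    if (colorspace < 1 || colorspace > 10 || colorspace == SPC_YCGCO)
        colorspace = SPC_BT470BG;
    assert(colorspace >= 1 && colorspace <= 10);
    return colorspace;
}
```

Note the "auto" cases: no substring matches, so the colorspace argument is used as is. An untagged frame (AVCOL_SPC_UNSPECIFIED, value 2) passes the range check unchanged.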

```c
static int scale_frame(AVFilterLink *link, AVFrame *in, AVFrame **frame_out)
{
    AVFilterContext *ctx = link->dst;
    ScaleContext *scale = ctx->priv;
    AVFilterLink *outlink = ctx->outputs[0];
    AVFrame *out;
    /* ... */

scale:
    if (!scale->sws) {
        *frame_out = in;
        return 0;
    }

    /* ... */

    in_range = in->color_range;

    if (   scale->in_color_matrix
        || scale->out_color_matrix
        || scale-> in_range != AVCOL_RANGE_UNSPECIFIED
        || in_range != AVCOL_RANGE_UNSPECIFIED
        || scale->out_range != AVCOL_RANGE_UNSPECIFIED) {
        int in_full, out_full, brightness, contrast, saturation;
        const int *inv_table, *table;

        sws_getColorspaceDetails(scale->sws, (int **)&inv_table, &in_full,
                                 (int **)&table, &out_full,
                                 &brightness, &contrast, &saturation);

        // Two matrix settings are used here. Because in_color_matrix and
        // out_color_matrix default to "auto", setting out_color_matrix from
        // outside has no effect afterwards, which is odd.
        if (scale->in_color_matrix)
            inv_table = parse_yuv_type(scale->in_color_matrix, in->colorspace);
        if (scale->out_color_matrix)
            table     = parse_yuv_type(scale->out_color_matrix, AVCOL_SPC_UNSPECIFIED);
        else if (scale->in_color_matrix)
            table = inv_table;

        // the in_range option takes precedence over the frame's own color_range
        if (scale-> in_range != AVCOL_RANGE_UNSPECIFIED)
            in_full  = (scale-> in_range == AVCOL_RANGE_JPEG);
        else if (in_range != AVCOL_RANGE_UNSPECIFIED)
            in_full  = (in_range == AVCOL_RANGE_JPEG);
        if (scale->out_range != AVCOL_RANGE_UNSPECIFIED)
            out_full = (scale->out_range == AVCOL_RANGE_JPEG);

        sws_setColorspaceDetails(scale->sws, inv_table, in_full,
                                 table, out_full,
                                 brightness, contrast, saturation);
        // the per-field contexts used for interlaced input get the same settings
        if (scale->isws[0])
            sws_setColorspaceDetails(scale->isws[0], inv_table, in_full,
                                     table, out_full,
                                     brightness, contrast, saturation);
        if (scale->isws[1])
            sws_setColorspaceDetails(scale->isws[1], inv_table, in_full,
                                     table, out_full,
                                     brightness, contrast, saturation);

        out->color_range = out_full ? AVCOL_RANGE_JPEG : AVCOL_RANGE_MPEG;
    }

    av_reduce(&out->sample_aspect_ratio.num, &out->sample_aspect_ratio.den,
              (int64_t)in->sample_aspect_ratio.num * outlink->h * link->w,
              (int64_t)in->sample_aspect_ratio.den * outlink->w * link->h,
              INT_MAX);

    if (scale->interlaced>0 || (scale->interlaced<0 && in->interlaced_frame)) {
        // interlaced: top and bottom fields are converted separately
        scale_slice(link, out, in, scale->isws[0], 0, (link->h+1)/2, 2, 0);
        scale_slice(link, out, in, scale->isws[1], 0,  link->h   /2, 2, 1);
    } else if (scale->nb_slices) {
        int i, slice_h, slice_start, slice_end = 0;
        const int nb_slices = FFMIN(scale->nb_slices, link->h);
        for (i = 0; i < nb_slices; i++) {
            slice_start = slice_end;
            slice_end   = (link->h * (i+1)) / nb_slices;
            slice_h     = slice_end - slice_start;
            scale_slice(link, out, in, scale->sws, slice_start, slice_h, 1, 0);
        }
    } else {
        scale_slice(link, out, in, scale->sws, 0, link->h, 1, 0);
    }

    av_frame_free(&in);
    return 0;
}
```
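The in_full decision in scale_frame() follows a simple precedence: the filter's in_range option wins, then the frame's own color_range tag, otherwise the value read back from the SwsContext is kept. A minimal sketch of just that rule follows. resolve_in_full is a hypothetical helper, and the RANGE_* values are copied from enum AVColorRange.

```c
#include <assert.h>

/* Values copied from enum AVColorRange (libavutil/pixfmt.h). */
enum { RANGE_UNSPECIFIED = 0, RANGE_MPEG = 1, RANGE_JPEG = 2 };

/* Hypothetical helper mirroring scale_frame(): option, then frame tag,
 * else keep the current setting. Returns 1 for full range. */
static int resolve_in_full(int opt_range, int frame_range, int current_full)
{
    assert(opt_range >= RANGE_UNSPECIFIED && frame_range >= RANGE_UNSPECIFIED);
    if (opt_range != RANGE_UNSPECIFIED)
        return opt_range == RANGE_JPEG;
    if (frame_range != RANGE_UNSPECIFIED)
        return frame_range == RANGE_JPEG;
    return current_full;
}
```

With in_range=2 on the command line, the option value is RANGE_JPEG, so the input is handled as full range even if the stream is tagged as limited range.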

In the end, the conversion is carried out by yuv2rgb_c_24_bgr().

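This function is generated from macro templates in libswscale/yuv2rgb.c. The following command expands the macros (-E stops after preprocessing, -P omits the line markers). For yuv2rgb.c itself you will likely also need -I options pointing at the FFmpeg source and build directories so that its headers are found:

```shell
gcc -E -P file.c > out.c
```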
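Template parameters become plain constants after preprocessing, so any condition on them survives as a literal test in the expanded output. That appears to be where the "!0" and "if (0)" in the function below come from. The small template below has the same shape; MAKE_SUM_FUNC, sum_rgb and sum_rgba are made-up names. Running it through gcc -E -P yields one function containing "if (0)" and one containing "if (1)".

```c
#include <assert.h>

/* Hypothetical template: one macro body, two generated functions that
 * differ only in a constant flag. */
#define MAKE_SUM_FUNC(name, with_alpha)                          \
    static int name(const int *rgb, const int *alpha, int n)     \
    {                                                            \
        int i, sum = 0;                                          \
        assert(n >= 0);                                          \
        for (i = 0; i < n; i++) {                                \
            sum += rgb[i];                                       \
            if (with_alpha)  /* expands to if (0) or if (1) */   \
                sum += alpha[i];                                 \
        }                                                        \
        return sum;                                              \
    }

MAKE_SUM_FUNC(sum_rgb, 0)
MAKE_SUM_FUNC(sum_rgba, 1)
```

The compiler removes the dead branch, so the generated variants cost nothing at run time compared with hand-written copies.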

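In this C path, swscale converts YUV to RGB with lookup tables. Four tables are used (table_rV, table_gU, table_gV and table_bU): the chroma values U and V select a row for each of R, G and B, and the luma value Y then indexes into that row. See the code below for the exact algorithm: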

```c
#define YUVRGB_TABLE_HEADROOM 512
#define YUVRGB_TABLE_LUMA_HEADROOM 512

// Output of gcc -E -P for the yuv2rgb.c template, reindented and with stray
// empty statements (";") removed. "!0" and "if (0)" appear to be the
// template's alpha parameter expanded to 0, since BGR24 has no alpha plane.
static int yuv2rgb_c_24_bgr(SwsContext *c, const uint8_t *src[], int srcStride[],
                            int srcSliceY, int srcSliceH, uint8_t *dst[], int dstStride[]) {
    int y;
    if (!0 && c->srcFormat == AV_PIX_FMT_YUV422P) {
        srcStride[1] *= 2;
        srcStride[2] *= 2;
    }
    // two output rows per iteration; each chroma sample covers 2x2 luma samples
    for (y = 0; y < srcSliceH; y += 2) {
        int yd = y + srcSliceY;
        uint8_t * dst_1 = (uint8_t *)(dst[0] + (yd)*dstStride[0]);
        uint8_t * dst_2 = (uint8_t *)(dst[0] + (yd + 1) * dstStride[0]);
        uint8_t * r, *g, *b;
        const uint8_t *py_1 = src[0] + y * srcStride[0];
        const uint8_t *py_2 = py_1 + srcStride[0];
        const uint8_t *pu = src[1] + (y >> 1) * srcStride[1];
        const uint8_t *pv = src[2] + (y >> 1) * srcStride[2];
        const uint8_t *pa_1, *pa_2;
        unsigned int h_size = c->dstW >> 3;  // number of 8-pixel groups per row
        if (0) {
            pa_1 = src[3] + y * srcStride[3];
            pa_2 = pa_1 + srcStride[3];
        }
        while (h_size--) {
            int U, V, Y;
            // chroma sample 0: select the R, G, B rows, then index them with Y
            U = pu[0];
            V = pv[0];
            r = (void *)c->table_rV[V + YUVRGB_TABLE_HEADROOM];
            g = (void *)(c->table_gU[U + YUVRGB_TABLE_HEADROOM] +
                         c->table_gV[V + YUVRGB_TABLE_HEADROOM]);
            b = (void *)c->table_bU[U + YUVRGB_TABLE_HEADROOM];
            Y = py_1[2 * 0];
            dst_1[6 * 0 + 0] = b[Y];
            dst_1[6 * 0 + 1] = g[Y];
            dst_1[6 * 0 + 2] = r[Y];
            Y = py_1[2 * 0 + 1];
            dst_1[6 * 0 + 3] = b[Y];
            dst_1[6 * 0 + 4] = g[Y];
            dst_1[6 * 0 + 5] = r[Y];
            Y = py_2[2 * 0];
            dst_2[6 * 0 + 0] = b[Y];
            dst_2[6 * 0 + 1] = g[Y];
            dst_2[6 * 0 + 2] = r[Y];
            Y = py_2[2 * 0 + 1];
            dst_2[6 * 0 + 3] = b[Y];
            dst_2[6 * 0 + 4] = g[Y];
            dst_2[6 * 0 + 5] = r[Y];
            // chroma sample 1
            U = pu[1];
            V = pv[1];
            r = (void *)c->table_rV[V + YUVRGB_TABLE_HEADROOM];
            g = (void *)(c->table_gU[U + YUVRGB_TABLE_HEADROOM] +
                         c->table_gV[V + YUVRGB_TABLE_HEADROOM]);
            b = (void *)c->table_bU[U + YUVRGB_TABLE_HEADROOM];
            Y = py_2[2 * 1];
            dst_2[6 * 1 + 0] = b[Y];
            dst_2[6 * 1 + 1] = g[Y];
            dst_2[6 * 1 + 2] = r[Y];
            Y = py_2[2 * 1 + 1];
            dst_2[6 * 1 + 3] = b[Y];
            dst_2[6 * 1 + 4] = g[Y];
            dst_2[6 * 1 + 5] = r[Y];
            Y = py_1[2 * 1];
            dst_1[6 * 1 + 0] = b[Y];
            dst_1[6 * 1 + 1] = g[Y];
            dst_1[6 * 1 + 2] = r[Y];
            Y = py_1[2 * 1 + 1];
            dst_1[6 * 1 + 3] = b[Y];
            dst_1[6 * 1 + 4] = g[Y];
            dst_1[6 * 1 + 5] = r[Y];
            // chroma sample 2
            U = pu[2];
            V = pv[2];
            r = (void *)c->table_rV[V + YUVRGB_TABLE_HEADROOM];
            g = (void *)(c->table_gU[U + YUVRGB_TABLE_HEADROOM] +
                         c->table_gV[V + YUVRGB_TABLE_HEADROOM]);
            b = (void *)c->table_bU[U + YUVRGB_TABLE_HEADROOM];
            Y = py_1[2 * 2];
            dst_1[6 * 2 + 0] = b[Y];
            dst_1[6 * 2 + 1] = g[Y];
            dst_1[6 * 2 + 2] = r[Y];
            Y = py_1[2 * 2 + 1];
            dst_1[6 * 2 + 3] = b[Y];
            dst_1[6 * 2 + 4] = g[Y];
            dst_1[6 * 2 + 5] = r[Y];
            Y = py_2[2 * 2];
            dst_2[6 * 2 + 0] = b[Y];
            dst_2[6 * 2 + 1] = g[Y];
            dst_2[6 * 2 + 2] = r[Y];
            Y = py_2[2 * 2 + 1];
            dst_2[6 * 2 + 3] = b[Y];
            dst_2[6 * 2 + 4] = g[Y];
            dst_2[6 * 2 + 5] = r[Y];
            // chroma sample 3
            U = pu[3];
            V = pv[3];
            r = (void *)c->table_rV[V + YUVRGB_TABLE_HEADROOM];
            g = (void *)(c->table_gU[U + YUVRGB_TABLE_HEADROOM] +
                         c->table_gV[V + YUVRGB_TABLE_HEADROOM]);
            b = (void *)c->table_bU[U + YUVRGB_TABLE_HEADROOM];
            Y = py_2[2 * 3];
            dst_2[6 * 3 + 0] = b[Y];
            dst_2[6 * 3 + 1] = g[Y];
            dst_2[6 * 3 + 2] = r[Y];
            Y = py_2[2 * 3 + 1];
            dst_2[6 * 3 + 3] = b[Y];
            dst_2[6 * 3 + 4] = g[Y];
            dst_2[6 * 3 + 5] = r[Y];
            Y = py_1[2 * 3];
            dst_1[6 * 3 + 0] = b[Y];
            dst_1[6 * 3 + 1] = g[Y];
            dst_1[6 * 3 + 2] = r[Y];
            Y = py_1[2 * 3 + 1];
            dst_1[6 * 3 + 3] = b[Y];
            dst_1[6 * 3 + 4] = g[Y];
            dst_1[6 * 3 + 5] = r[Y];
            // advance by 8 pixels: 4 chroma samples, 8 luma samples, 24 output bytes
            pu += 4 >> 0;
            pv += 4 >> 0;
            py_1 += 8 >> 0;
            py_2 += 8 >> 0;
            dst_1 += 24 >> 0;
            dst_2 += 24 >> 0;
        }
        // tail: 4 leftover pixels
        if (c->dstW & (4 >> 0)) {
            int av_unused Y, U, V;
            U = pu[0];
            V = pv[0];
            r = (void *)c->table_rV[V + YUVRGB_TABLE_HEADROOM];
            g = (void *)(c->table_gU[U + YUVRGB_TABLE_HEADROOM] +
                         c->table_gV[V + YUVRGB_TABLE_HEADROOM]);
            b = (void *)c->table_bU[U + YUVRGB_TABLE_HEADROOM];
            Y = py_1[2 * 0];
            dst_1[6 * 0 + 0] = b[Y];
            dst_1[6 * 0 + 1] = g[Y];
            dst_1[6 * 0 + 2] = r[Y];
            Y = py_1[2 * 0 + 1];
            dst_1[6 * 0 + 3] = b[Y];
            dst_1[6 * 0 + 4] = g[Y];
            dst_1[6 * 0 + 5] = r[Y];
            Y = py_2[2 * 0];
            dst_2[6 * 0 + 0] = b[Y];
            dst_2[6 * 0 + 1] = g[Y];
            dst_2[6 * 0 + 2] = r[Y];
            Y = py_2[2 * 0 + 1];
            dst_2[6 * 0 + 3] = b[Y];
            dst_2[6 * 0 + 4] = g[Y];
            dst_2[6 * 0 + 5] = r[Y];
            U = pu[1];
            V = pv[1];
            r = (void *)c->table_rV[V + YUVRGB_TABLE_HEADROOM];
            g = (void *)(c->table_gU[U + YUVRGB_TABLE_HEADROOM] +
                         c->table_gV[V + YUVRGB_TABLE_HEADROOM]);
            b = (void *)c->table_bU[U + YUVRGB_TABLE_HEADROOM];
            Y = py_2[2 * 1];
            dst_2[6 * 1 + 0] = b[Y];
            dst_2[6 * 1 + 1] = g[Y];
            dst_2[6 * 1 + 2] = r[Y];
            Y = py_2[2 * 1 + 1];
            dst_2[6 * 1 + 3] = b[Y];
            dst_2[6 * 1 + 4] = g[Y];
            dst_2[6 * 1 + 5] = r[Y];
            Y = py_1[2 * 1];
            dst_1[6 * 1 + 0] = b[Y];
            dst_1[6 * 1 + 1] = g[Y];
            dst_1[6 * 1 + 2] = r[Y];
            Y = py_1[2 * 1 + 1];
            dst_1[6 * 1 + 3] = b[Y];
            dst_1[6 * 1 + 4] = g[Y];
            dst_1[6 * 1 + 5] = r[Y];
            pu += 4 >> 1;
            pv += 4 >> 1;
            py_1 += 8 >> 1;
            py_2 += 8 >> 1;
            dst_1 += 24 >> 1;
            dst_2 += 24 >> 1;
        }
        // tail: 2 leftover pixels
        if (c->dstW & (4 >> 1)) {
            int av_unused Y, U, V;
            U = pu[0];
            V = pv[0];
            r = (void *)c->table_rV[V + YUVRGB_TABLE_HEADROOM];
            g = (void *)(c->table_gU[U + YUVRGB_TABLE_HEADROOM] +
                         c->table_gV[V + YUVRGB_TABLE_HEADROOM]);
            b = (void *)c->table_bU[U + YUVRGB_TABLE_HEADROOM];
            Y = py_1[2 * 0];
            dst_1[6 * 0 + 0] = b[Y];
            dst_1[6 * 0 + 1] = g[Y];
            dst_1[6 * 0 + 2] = r[Y];
            Y = py_1[2 * 0 + 1];
            dst_1[6 * 0 + 3] = b[Y];
            dst_1[6 * 0 + 4] = g[Y];
            dst_1[6 * 0 + 5] = r[Y];
            Y = py_2[2 * 0];
            dst_2[6 * 0 + 0] = b[Y];
            dst_2[6 * 0 + 1] = g[Y];
            dst_2[6 * 0 + 2] = r[Y];
            Y = py_2[2 * 0 + 1];
            dst_2[6 * 0 + 3] = b[Y];
            dst_2[6 * 0 + 4] = g[Y];
            dst_2[6 * 0 + 5] = r[Y];
        }
    }
    return srcSliceH;
}
```
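To make the four-table scheme concrete, here is a self-contained sketch for BT.601 limited range that reuses swscale's table names. The core idea is that each chroma term is divided by the luma gain (255/219) so that it becomes a shift of the luma index. A single clipped luma ramp (lut) can then serve all three channels: table_rV[V] points into lut at an offset, and r[Y] reads the final R value. G depends on both U and V, so table_gV holds plain integer offsets that are added to the table_gU pointer, the same shape as the expanded code above. The construction, the HEADROOM value, and the tightly packed planes (no strides) are assumptions for illustration. This is not swscale's actual table setup code.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical headroom, like YUVRGB_TABLE_HEADROOM: keeps Y + offset inside lut[]. */
#define HEADROOM 512

static uint8_t lut[256 + 2 * HEADROOM]; /* clipped luma ramp shared by R, G, B */
static const uint8_t *table_rV[256];    /* R row selected by V */
static const uint8_t *table_gU[256];    /* G row selected by U ... */
static int table_gV[256];               /* ... shifted by an offset from V */
static const uint8_t *table_bU[256];    /* B row selected by U */

static int round_int(double x) { return (int)(x < 0 ? x - 0.5 : x + 0.5); }

static void init_tables(void)
{
    const double kr = 0.299, kb = 0.114, kg = 1.0 - kr - kb;
    const double c = 219.0 / 224.0; /* chroma gain divided by luma gain */
    int i;

    for (i = 0; i < 256 + 2 * HEADROOM; i++) {
        double v = (i - HEADROOM - 16) * 255.0 / 219.0;
        lut[i] = v <= 0.0 ? 0 : v >= 255.0 ? 255 : (uint8_t)(v + 0.5);
    }
    for (i = 0; i < 256; i++) {
        double d = i - 128;
        table_rV[i] = lut + HEADROOM + round_int(2 * (1 - kr) * c * d);
        table_gU[i] = lut + HEADROOM - round_int(2 * (1 - kb) * kb / kg * c * d);
        table_gV[i] = -round_int(2 * (1 - kr) * kr / kg * c * d);
        table_bU[i] = lut + HEADROOM + round_int(2 * (1 - kb) * c * d);
    }
}

/* yuv420p (even w and h, planes packed without padding) to BGR24.
 * Each chroma sample is looked up once and shared by a 2x2 luma block. */
static void yuv420p_to_bgr24(const uint8_t *py, const uint8_t *pu, const uint8_t *pv,
                             int w, int h, uint8_t *dst)
{
    int x, y, dx, dy;
    assert(w % 2 == 0 && h % 2 == 0);
    for (y = 0; y < h; y += 2) {
        for (x = 0; x < w; x += 2) {
            int U = pu[(y / 2) * (w / 2) + x / 2];
            int V = pv[(y / 2) * (w / 2) + x / 2];
            const uint8_t *r = table_rV[V];
            const uint8_t *g = table_gU[U] + table_gV[V];
            const uint8_t *b = table_bU[U];
            for (dy = 0; dy < 2; dy++) {
                for (dx = 0; dx < 2; dx++) {
                    int Y = py[(y + dy) * w + x + dx];
                    uint8_t *out = dst + 3 * ((y + dy) * w + x + dx);
                    out[0] = b[Y]; /* BGR byte order */
                    out[1] = g[Y];
                    out[2] = r[Y];
                }
            }
        }
    }
}
```

Per pixel this costs only three table reads and one integer addition. The price is that chroma offsets are rounded to whole luma steps, which can introduce an error of roughly one code value.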