Basic idea: the goal is to deploy YOLOv8 object detection and rotated (oriented) object detection on the Atlas 200 DK development board.

1. Converting the model

Link: https://pan.baidu.com/s/1hJPX2QvybI4AGgeJKO6QgQ?pwd=q2s5 (extraction code: q2s5)

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8s.yaml") # build a new model from scratch

model = YOLO("yolov8s.pt") # load a pretrained model (recommended for training)

# Use the model

results = model(r"F:\zhy\Git\McQuic\bus.jpg") # predict on an image

path = model.export(format="onnx") # export the model to ONNX format

Simplify the model

(base) root@davinci-mini:~/sxj731533730# python3 -m onnxsim yolov8s.onnx yolov8s_sim.onnx

Simplifying...

Finish! Here is the difference:

┏━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━┓
┃            ┃ Original Model ┃ Simplified Model ┃
┡━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━┩
│ Add        │ 9              │ 8                │
│ Concat     │ 19             │ 19               │
│ Constant   │ 22             │ 0                │
│ Conv       │ 64             │ 64               │
│ Div        │ 2              │ 1                │
│ Gather     │ 1              │ 0                │
│ MaxPool    │ 3              │ 3                │
│ Mul        │ 60             │ 58               │
│ Reshape    │ 5              │ 5                │
│ Resize     │ 2              │ 2                │
│ Shape      │ 1              │ 0                │
│ Sigmoid    │ 58             │ 58               │
│ Slice      │ 2              │ 2                │
│ Softmax    │ 1              │ 1                │
│ Split      │ 9              │ 9                │
│ Sub        │ 2              │ 2                │
│ Transpose  │ 1              │ 1                │
│ Model Size │ 42.8MiB        │ 42.7MiB          │
└────────────┴────────────────┴──────────────────┘

Convert the model on the Huawei board

(base) root@davinci-mini:~/sxj731533730# atc --model=yolov8s.onnx --framework=5 --output=yolov8s --input_format=NCHW --input_shape="images:1,3,640,640" --log=error --soc_version=Ascend310B1

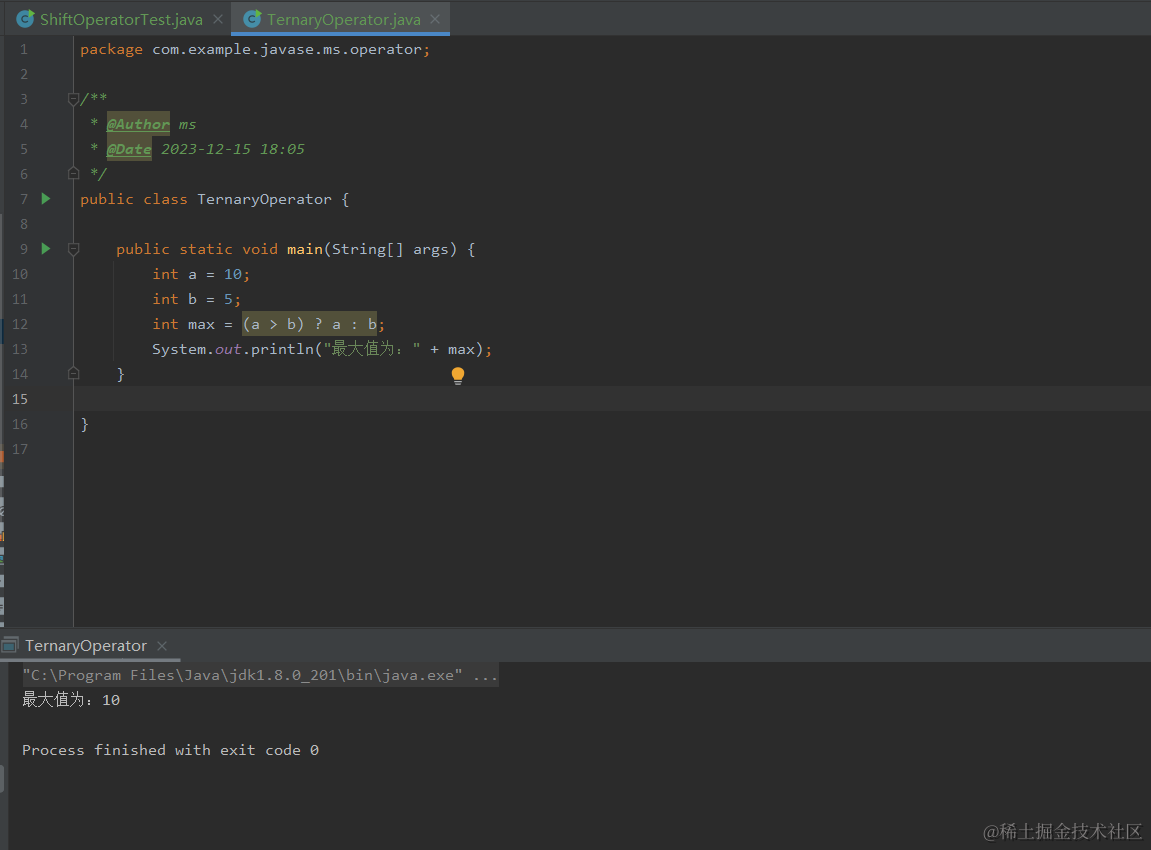

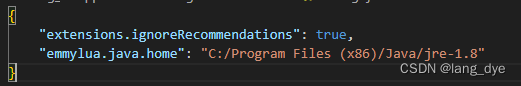
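For reference, the post-processing code later in this post treats the model output as an 84 x 8400 array. Here is a minimal sketch of where those two numbers come from, assuming the usual YOLOv8 detection head with strides 8, 16, and 32 (the strides are an assumption about this export, and the helper name `yolov8_num_candidates` is made up for illustration):

```python
def yolov8_num_candidates(input_h, input_w, strides=(8, 16, 32)):
    """Count the grid cells over all detection scales (one candidate box per cell)."""
    return sum((input_h // s) * (input_w // s) for s in strides)


num_classes = 80  # COCO classes, matching the class list used in this post

# 4 box values (cx, cy, w, h) plus one score per class
print(4 + num_classes)                   # 84
# 80*80 + 40*40 + 20*20 cells for a 640x640 input
print(yolov8_num_candidates(640, 640))   # 8400
```

If the model is exported with a different input size or class count, these two numbers change and the decoding code has to change with them.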

Configuring PyCharm Professional

PyCharm Professional settings:

/usr/local/miniconda3/bin/python3

LD_LIBRARY_PATH=/usr/local/Ascend/ascend-toolkit/latest/lib64:/usr/local/Ascend/ascend-toolkit/latest/lib64/plugin/opskernel:/usr/local/Ascend/ascend-toolkit/latest/lib64/plugin/nnengine:$LD_LIBRARY_PATH;PYTHONPATH=/usr/local/Ascend/ascend-toolkit/latest/python/site-packages:/usr/local/Ascend/ascend-toolkit/latest/opp/op_impl/built-in/ai_core/tbe:$PYTHONPATH;PATH=/usr/local/Ascend/ascend-toolkit/latest/bin:/usr/local/Ascend/ascend-toolkit/latest/compiler/ccec_compiler/bin:$PATH;ASCEND_AICPU_PATH=/usr/local/Ascend/ascend-toolkit/latest;ASCEND_OPP_PATH=/usr/local/Ascend/ascend-toolkit/latest/opp;TOOLCHAIN_HOME=/usr/local/Ascend/ascend-toolkit/latest/toolkit;ASCEND_HOME_PATH=/usr/local/Ascend/ascend-toolkit/latest
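Before running anything from the IDE, it can help to confirm that the variables from the line above actually reached the Python process. The following is only a sketch: the variable names are copied from the configuration above, while the helper `missing_ascend_vars` is a hypothetical name introduced here.

```python
import os

# Variable names taken from the run configuration shown above
REQUIRED_VARS = [
    "LD_LIBRARY_PATH",
    "PYTHONPATH",
    "PATH",
    "ASCEND_AICPU_PATH",
    "ASCEND_OPP_PATH",
    "TOOLCHAIN_HOME",
    "ASCEND_HOME_PATH",
]


def missing_ascend_vars(env):
    """Return the required variable names that are unset or empty in env."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


missing = missing_ascend_vars(os.environ)
if missing:
    print("Missing environment variables:", ", ".join(missing))
else:
    print("All expected environment variables are set.")
```

This only checks that the variables exist; it does not verify that the paths they point to are valid on a given board.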

/usr/local/miniconda3/bin/python3.9 /home/sxj731533730/sgagtyeray.py

Test code (Python)

import ctypes
import os
import random
import shutil
import sys
import threading
import time

import cv2
import numpy as np
from ais_bench.infer.interface import InferSession

class_names = ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',

'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',

'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',

'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard',

'cell phone', 'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase',

'scissors', 'teddy bear', 'hair drier', 'toothbrush']

# Create a list of colors for each class where each color is a tuple of 3 integer values

rng = np.random.default_rng(3)

colors = rng.uniform(0, 255, size=(len(class_names), 3))

model_path = 'yolov8s.om'

IMG_PATH = 'bus.jpg'

conf_threshold = 0.5

iou_threshold = 0.4

input_w=640

input_h=640

def get_img_path_batches(batch_size, img_dir):
    ret = []
    batch = []
    for root, dirs, files in os.walk(img_dir):
        for name in files:
            if len(batch) == batch_size:
                ret.append(batch)
                batch = []
            batch.append(os.path.join(root, name))
    if len(batch) > 0:
        ret.append(batch)
    return ret

def plot_one_box(x, img, color=None, label=None, line_thickness=None):
    """
    description: Plots one bounding box on image img,
                 this function comes from YoLov8 project.
    param:
        x: a box likes [x1,y1,x2,y2]
        img: a opencv image object
        color: color to draw rectangle, such as (0,255,0)
        label: str
        line_thickness: int
    return:
        no return
    """
    tl = (
        line_thickness or round(0.002 * (img.shape[0] + img.shape[1]) / 2) + 1
    )  # line/font thickness
    color = color or [random.randint(0, 255) for _ in range(3)]
    c1, c2 = (int(x[0]), int(x[1])), (int(x[2]), int(x[3]))
    cv2.rectangle(img, c1, c2, color, thickness=tl, lineType=cv2.LINE_AA)
    if label:
        tf = max(tl - 1, 1)  # font thickness
        t_size = cv2.getTextSize(label, 0, fontScale=tl / 3, thickness=tf)[0]
        c2 = c1[0] + t_size[0], c1[1] - t_size[1] - 3
        cv2.rectangle(img, c1, c2, color, -1, cv2.LINE_AA)  # filled
        cv2.putText(
            img,
            label,
            (c1[0], c1[1] - 2),
            0,
            tl / 3,
            [225, 255, 255],
            thickness=tf,
            lineType=cv2.LINE_AA,
        )

def preprocess_image(raw_bgr_image):
    """
    description: Convert BGR image to RGB,
                 resize and pad it to target size, normalize to [0,1],
                 transform to NCHW format.
    param:
        raw_bgr_image: a BGR image, for example as returned by cv2.imread
    return:
        image: the processed image
        image_raw: the original image
        h: original height
        w: original width
        r_h: height ratio, input_h / h
        r_w: width ratio, input_w / w
    """
    image_raw = raw_bgr_image
    h, w, c = image_raw.shape
    image = cv2.cvtColor(image_raw, cv2.COLOR_BGR2RGB)
    # Calculate width, height and paddings
    r_w = input_w / w
    r_h = input_h / h
    if r_h > r_w:
        tw = input_w
        th = int(r_w * h)
        tx1 = tx2 = 0
        ty1 = int((input_h - th) / 2)
        ty2 = input_h - th - ty1
    else:
        tw = int(r_h * w)
        th = input_h
        tx1 = int((input_w - tw) / 2)
        tx2 = input_w - tw - tx1
        ty1 = ty2 = 0
    # Resize the image with long side while maintaining ratio
    image = cv2.resize(image, (tw, th))
    # Pad the short side with (128,128,128)
    image = cv2.copyMakeBorder(
        image, ty1, ty2, tx1, tx2, cv2.BORDER_CONSTANT, None, (128, 128, 128)
    )
    image = image.astype(np.float32)
    # Normalize to [0,1]
    image /= 255.0
    # HWC to CHW format:
    image = np.transpose(image, [2, 0, 1])
    # CHW to NCHW format
    image = np.expand_dims(image, axis=0)
    # Convert the image to row-major order, also known as "C order":
    image = np.ascontiguousarray(image)
    return image, image_raw, h, w, r_h, r_w

def xywh2xyxy(origin_h, origin_w, x):
    """
    description: Convert nx4 boxes from [x, y, w, h] to [x1, y1, x2, y2] where xy1=top-left, xy2=bottom-right
    param:
        origin_h: height of original image
        origin_w: width of original image
        x: A boxes numpy, each row is a box [center_x, center_y, w, h]
    return:
        y: A boxes numpy, each row is a box [x1, y1, x2, y2]
    """
    y = np.zeros_like(x)
    r_w = input_w / origin_w
    r_h = input_h / origin_h
    if r_h > r_w:
        y[:, 0] = x[:, 0]
        y[:, 2] = x[:, 2]
        y[:, 1] = x[:, 1] - (input_h - r_w * origin_h) / 2
        y[:, 3] = x[:, 3] - (input_h - r_w * origin_h) / 2
        y /= r_w
    else:
        y[:, 0] = x[:, 0] - (input_w - r_h * origin_w) / 2
        y[:, 2] = x[:, 2] - (input_w - r_h * origin_w) / 2
        y[:, 1] = x[:, 1]
        y[:, 3] = x[:, 3]
        y /= r_h
    return y

def rescale_boxes(boxes, img_width, img_height, input_width, input_height):
    # Rescale boxes to original image dimensions
    input_shape = np.array([input_width, input_height, input_width, input_height])
    boxes = np.divide(boxes, input_shape, dtype=np.float32)
    boxes *= np.array([img_width, img_height, img_width, img_height])
    return boxes

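# Note: this definition replaces the three-argument xywh2xyxy(origin_h, origin_w, x)
# defined earlier in the script; extract_boxes below relies on this one-argument version.
def xywh2xyxy(x):
    # Convert bounding box (x, y, w, h) to bounding box (x1, y1, x2, y2)
    y = np.copy(x)
    y[..., 0] = x[..., 0] - x[..., 2] / 2
    y[..., 1] = x[..., 1] - x[..., 3] / 2
    y[..., 2] = x[..., 0] + x[..., 2] / 2
    y[..., 3] = x[..., 1] + x[..., 3] / 2
    return y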

def compute_iou(box, boxes):
    # Compute xmin, ymin, xmax, ymax of the intersection rectangles
    xmin = np.maximum(box[0], boxes[:, 0])
    ymin = np.maximum(box[1], boxes[:, 1])
    xmax = np.minimum(box[2], boxes[:, 2])
    ymax = np.minimum(box[3], boxes[:, 3])
    # Compute intersection area
    intersection_area = np.maximum(0, xmax - xmin) * np.maximum(0, ymax - ymin)
    # Compute union area
    box_area = (box[2] - box[0]) * (box[3] - box[1])
    boxes_area = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    union_area = box_area + boxes_area - intersection_area
    # Compute IoU
    iou = intersection_area / union_area
    return iou

def nms(boxes, scores, iou_threshold):
    # Sort by score, highest first
    sorted_indices = np.argsort(scores)[::-1]
    keep_boxes = []
    while sorted_indices.size > 0:
        # Pick the remaining box with the highest score
        box_id = sorted_indices[0]
        keep_boxes.append(box_id)
        # Compute IoU of the picked box with the rest
        ious = compute_iou(boxes[box_id, :], boxes[sorted_indices[1:], :])
        # Remove boxes with IoU over the threshold
        keep_indices = np.where(ious < iou_threshold)[0]
        # print(keep_indices.shape, sorted_indices.shape)
        # +1 because ious was computed on sorted_indices[1:]
        sorted_indices = sorted_indices[keep_indices + 1]
    return keep_boxes

def multiclass_nms(boxes, scores, class_ids, iou_threshold):
    unique_class_ids = np.unique(class_ids)
    keep_boxes = []
    for class_id in unique_class_ids:
        class_indices = np.where(class_ids == class_id)[0]
        class_boxes = boxes[class_indices, :]
        class_scores = scores[class_indices]
        class_keep_boxes = nms(class_boxes, class_scores, iou_threshold)
        keep_boxes.extend(class_indices[class_keep_boxes])
    return keep_boxes

def extract_boxes(predictions, img_width, img_height, input_width, input_height):
    # Extract boxes from predictions
    boxes = predictions[:, :4]
    # Scale boxes to original image dimensions
    boxes = rescale_boxes(boxes, img_width, img_height, input_width, input_height)
    # Convert boxes to xyxy format
    boxes = xywh2xyxy(boxes)
    return boxes

def process_output(output, img_width, img_height, input_width, input_height):
    predictions = np.squeeze(output[0]).T
    # Filter out object confidence scores below threshold
    scores = np.max(predictions[:, 4:], axis=1)
    predictions = predictions[scores > conf_threshold, :]
    scores = scores[scores > conf_threshold]
    if len(scores) == 0:
        return [], [], []
    # Get the class with the highest confidence
    class_ids = np.argmax(predictions[:, 4:], axis=1)
    # Get bounding boxes for each object
    boxes = extract_boxes(predictions, img_width, img_height, input_width, input_height)
    # Apply non-maxima suppression to suppress weak, overlapping bounding boxes
    # indices = nms(boxes, scores, self.iou_threshold)
    indices = multiclass_nms(boxes, scores, class_ids, iou_threshold)
    return boxes[indices], scores[indices], class_ids[indices]

def bbox_iou(box1, box2, x1y1x2y2=True):
    """
    description: compute the IoU of two bounding boxes
    param:
        box1: A box coordinate (can be (x1, y1, x2, y2) or (x, y, w, h))
        box2: A box coordinate (can be (x1, y1, x2, y2) or (x, y, w, h))
        x1y1x2y2: select the coordinate format
    return:
        iou: computed iou
    """
    if not x1y1x2y2:
        # Transform from center and width to exact coordinates
        b1_x1, b1_x2 = box1[:, 0] - box1[:, 2] / 2, box1[:, 0] + box1[:, 2] / 2
        b1_y1, b1_y2 = box1[:, 1] - box1[:, 3] / 2, box1[:, 1] + box1[:, 3] / 2
        b2_x1, b2_x2 = box2[:, 0] - box2[:, 2] / 2, box2[:, 0] + box2[:, 2] / 2
        b2_y1, b2_y2 = box2[:, 1] - box2[:, 3] / 2, box2[:, 1] + box2[:, 3] / 2
    else:
        # Get the coordinates of bounding boxes
        b1_x1, b1_y1, b1_x2, b1_y2 = box1[:, 0], box1[:, 1], box1[:, 2], box1[:, 3]
        b2_x1, b2_y1, b2_x2, b2_y2 = box2[:, 0], box2[:, 1], box2[:, 2], box2[:, 3]
    # Get the coordinates of the intersection rectangle
    inter_rect_x1 = np.maximum(b1_x1, b2_x1)
    inter_rect_y1 = np.maximum(b1_y1, b2_y1)
    inter_rect_x2 = np.minimum(b1_x2, b2_x2)
    inter_rect_y2 = np.minimum(b1_y2, b2_y2)
    # Intersection area
    inter_area = np.clip(inter_rect_x2 - inter_rect_x1 + 1, 0, None) * \
                 np.clip(inter_rect_y2 - inter_rect_y1 + 1, 0, None)
    # Union Area
    b1_area = (b1_x2 - b1_x1 + 1) * (b1_y2 - b1_y1 + 1)
    b2_area = (b2_x2 - b2_x1 + 1) * (b2_y2 - b2_y1 + 1)
    iou = inter_area / (b1_area + b2_area - inter_area + 1e-16)
    return iou

def non_max_suppression(prediction, origin_h, origin_w, conf_thres=0.5, nms_thres=0.4):
    """
    description: Removes detections with lower object confidence score than 'conf_thres' and performs
                 Non-Maximum Suppression to further filter detections.
    param:
        prediction: detections, (x1, y1, x2, y2, conf, cls_id)
        origin_h: original image height
        origin_w: original image width
        conf_thres: a confidence threshold to filter detections
        nms_thres: a iou threshold to filter detections
    return:
        boxes: output after nms with the shape (x1, y1, x2, y2, conf, cls_id)
    """
    # Get the boxes that score > CONF_THRESH
    boxes = prediction[prediction[:, 4] >= conf_thres]
    # Transform bbox from [center_x, center_y, w, h] to [x1, y1, x2, y2]
    boxes[:, :4] = xywh2xyxy(origin_h, origin_w, boxes[:, :4])
    # clip the coordinates
    boxes[:, 0] = np.clip(boxes[:, 0], 0, origin_w - 1)
    boxes[:, 2] = np.clip(boxes[:, 2], 0, origin_w - 1)
    boxes[:, 1] = np.clip(boxes[:, 1], 0, origin_h - 1)
    boxes[:, 3] = np.clip(boxes[:, 3], 0, origin_h - 1)
    # Object confidence
    confs = boxes[:, 4]
    # Sort by the confs
    boxes = boxes[np.argsort(-confs)]
    # Perform non-maximum suppression
    keep_boxes = []
    while boxes.shape[0]:
        large_overlap = bbox_iou(np.expand_dims(boxes[0, :4], 0), boxes[:, :4]) > nms_thres
        label_match = boxes[0, -1] == boxes[:, -1]
        # Indices of boxes with lower confidence scores, large IOUs and matching labels
        invalid = large_overlap & label_match
        keep_boxes += [boxes[0]]
        boxes = boxes[~invalid]
    boxes = np.stack(keep_boxes, 0) if len(keep_boxes) else np.array([])
    return boxes

def draw_box(image: np.ndarray, box: np.ndarray, color: tuple[int, int, int] = (0, 0, 255),
             thickness: int = 2) -> np.ndarray:
    x1, y1, x2, y2 = box.astype(int)
    return cv2.rectangle(image, (x1, y1), (x2, y2), color, thickness)

def draw_text(image: np.ndarray, text: str, box: np.ndarray, color: tuple[int, int, int] = (0, 0, 255),
              font_size: float = 0.001, text_thickness: int = 2) -> np.ndarray:
    x1, y1, x2, y2 = box.astype(int)
    (tw, th), _ = cv2.getTextSize(text=text, fontFace=cv2.FONT_HERSHEY_SIMPLEX,
                                  fontScale=font_size, thickness=text_thickness)
    th = int(th * 1.2)
    cv2.rectangle(image, (x1, y1),
                  (x1 + tw, y1 - th), color, -1)
    return cv2.putText(image, text, (x1, y1), cv2.FONT_HERSHEY_SIMPLEX, font_size, (255, 255, 255), text_thickness, cv2.LINE_AA)

def draw_detections(image, boxes, scores, class_ids, mask_alpha=0.3):
    det_img = image.copy()
    img_height, img_width = image.shape[:2]
    font_size = min([img_height, img_width]) * 0.0006
    text_thickness = int(min([img_height, img_width]) * 0.001)
    # Draw bounding boxes and labels of detections
    for class_id, box, score in zip(class_ids, boxes, scores):
        color = colors[class_id]
        draw_box(det_img, box, color)
        label = class_names[class_id]
        caption = f'{label} {int(score * 100)}%'
        draw_text(det_img, caption, box, color, font_size, text_thickness)
    return det_img

if __name__ == "__main__":
    # Initialize the inference model on device 0
    model = InferSession(0, model_path)
    image = cv2.imread(IMG_PATH)
    h, w, _ = image.shape
    # img, ratio, (dw, dh) = letterbox(img, new_shape=(IMG_SIZE, IMG_SIZE))
    img = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    img = cv2.resize(img, (input_w, input_h))
    img_ = img / 255.0  # normalize to [0, 1]
    img_ = img_.transpose(2, 0, 1)
    img_ = np.ascontiguousarray(img_, dtype=np.float32)
    outputs = model.infer([img_])
    import pickle
    with open('yolov8.pkl', 'wb') as f:
        pickle.dump(outputs, f)
    with open('yolov8.pkl', 'rb') as f:
        outputs = pickle.load(f)
    # print(outputs)
    boxes, scores, class_ids = process_output(
        outputs, w, h, input_w, input_h
    )
    print(boxes, scores, class_ids)
    original_image = draw_detections(image, boxes, scores, class_ids, mask_alpha=0.3)
    # print(boxes, scores, class_ids)
    cv2.imwrite("result.jpg", original_image)
    print("------------------")

Test results

[INFO] acl init success

[INFO] open device 0 success

[INFO] load model yolov8s.om success

[INFO] create model description success

[[5.0941406e+01 4.0057031e+02 2.4648047e+02 9.0386719e+02]

[6.6951562e+02 3.9065625e+02 8.1000000e+02 8.8087500e+02]

[2.2377832e+02 4.0732031e+02 3.4512012e+02 8.5999219e+02]

[3.1640625e-01 5.5096875e+02 7.3762207e+01 8.7075000e+02]

[3.1640625e+00 2.2865625e+02 8.0873438e+02 7.4503125e+02]] [0.9140625 0.9121094 0.87597656 0.55566406 0.8984375 ] [0 0 0 0 5]

------------------

[INFO] unload model success, model Id is 1

[INFO] end to destroy context

[INFO] end to reset device is 0

[INFO] end to finalize acl

Process finished with exit code 0
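The test script resizes the image straight to 640x640, which changes the aspect ratio, and leaves the `letterbox` call commented out. As a rough sketch of the bookkeeping a letterbox resize would need, the snippet below computes the scale and padding and maps a box from model-input coordinates back to the original image. The helper names are hypothetical, and the 810x1080 size is an assumption about the `bus.jpg` sample.

```python
def letterbox_params(img_w, img_h, input_w=640, input_h=640):
    """Return (scale, pad_x, pad_y) for an aspect-preserving resize with centered padding."""
    scale = min(input_w / img_w, input_h / img_h)
    new_w = int(round(img_w * scale))
    new_h = int(round(img_h * scale))
    pad_x = (input_w - new_w) / 2  # padding on the left side
    pad_y = (input_h - new_h) / 2  # padding on the top side
    return scale, pad_x, pad_y


def unletterbox_box(box, scale, pad_x, pad_y):
    """Map an (x1, y1, x2, y2) box from model-input pixels to original-image pixels."""
    x1, y1, x2, y2 = box
    return (
        (x1 - pad_x) / scale,
        (y1 - pad_y) / scale,
        (x2 - pad_x) / scale,
        (y2 - pad_y) / scale,
    )


# Assumed original size: 810 wide, 1080 high
scale, pad_x, pad_y = letterbox_params(810, 1080)
print(scale, pad_x, pad_y)

# A box covering the whole non-padded area maps back to the full original image
print(unletterbox_box((80, 0, 560, 640), scale, pad_x, pad_y))
```

With the plain resize used in the script, `rescale_boxes` is the matching inverse; the letterbox mapping above would only apply if the preprocessing is switched to letterboxing as well.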

2. C++ version

CMakeLists.txt

cmake_minimum_required(VERSION 3.16)

project(untitled10)

set(CMAKE_CXX_FLAGS "-std=c++11")

set(CMAKE_CXX_STANDARD 11)

add_definitions(-DENABLE_DVPP_INTERFACE)

include_directories(/usr/local/samples/cplusplus/common/acllite/include)

include_directories(/usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/include)

find_package(OpenCV REQUIRED)

#message(STATUS ${OpenCV_INCLUDE_DIRS})

# Add the OpenCV header directories

include_directories(${OpenCV_INCLUDE_DIRS})

# Libraries to link (OpenCV plus the imported Ascend libraries below)

add_library(libascendcl SHARED IMPORTED)

set_target_properties(libascendcl PROPERTIES IMPORTED_LOCATION /usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/lib64/libascendcl.so)

add_library(libacllite SHARED IMPORTED)

set_target_properties(libacllite PROPERTIES IMPORTED_LOCATION /usr/local/samples/cplusplus/common/acllite/out/aarch64/libacllite.so)

add_executable(untitled10 main.cpp)

target_link_libraries(untitled10 ${OpenCV_LIBS} libascendcl libacllite)

main.cpp

#include <opencv2/opencv.hpp>

#include "AclLiteUtils.h"

#include "AclLiteImageProc.h"

#include "AclLiteResource.h"

#include "AclLiteError.h"

#include "AclLiteModel.h"

#include <opencv2/dnn.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp>

#include <algorithm>

#include <cstdio>

#include <cstdlib>

#include <cstring>

#include <string>

#include <vector>

using namespace std;

using namespace cv;

typedef enum Result {
    SUCCESS = 0,
    FAILED = 1
} Result;

std::vector<std::string> CLASS_NAMES = {"person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat",

"traffic light",

"fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse",

"sheep", "cow",

"elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie",

"suitcase", "frisbee",

"skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove",

"skateboard", "surfboard",

"tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl",

"banana", "apple",

"sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake",

"chair", "couch",

"potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote",

"keyboard", "cell phone",

"microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase",

"scissors", "teddy bear",

"hair drier", "toothbrush"};

const std::vector<std::vector<float>> COLOR_LIST = {

{1, 1, 1},

{0.098, 0.325, 0.850},

{0.125, 0.694, 0.929},

{0.556, 0.184, 0.494},

{0.188, 0.674, 0.466},

{0.933, 0.745, 0.301},

{0.184, 0.078, 0.635},

{0.300, 0.300, 0.300},

{0.600, 0.600, 0.600},

{0.000, 0.000, 1.000},

{0.000, 0.500, 1.000},

{0.000, 0.749, 0.749},

{0.000, 1.000, 0.000},

{1.000, 0.000, 0.000},

{1.000, 0.000, 0.667},

{0.000, 0.333, 0.333},

{0.000, 0.667, 0.333},

{0.000, 1.000, 0.333},

{0.000, 0.333, 0.667},

{0.000, 0.667, 0.667},

{0.000, 1.000, 0.667},

{0.000, 0.333, 1.000},

{0.000, 0.667, 1.000},

{0.000, 1.000, 1.000},

{0.500, 0.333, 0.000},

{0.500, 0.667, 0.000},

{0.500, 1.000, 0.000},

{0.500, 0.000, 0.333},

{0.500, 0.333, 0.333},

{0.500, 0.667, 0.333},

{0.500, 1.000, 0.333},

{0.500, 0.000, 0.667},

{0.500, 0.333, 0.667},

{0.500, 0.667, 0.667},

{0.500, 1.000, 0.667},

{0.500, 0.000, 1.000},

{0.500, 0.333, 1.000},

{0.500, 0.667, 1.000},

{0.500, 1.000, 1.000},

{1.000, 0.333, 0.000},

{1.000, 0.667, 0.000},

{1.000, 1.000, 0.000},

{1.000, 0.000, 0.333},

{1.000, 0.333, 0.333},

{1.000, 0.667, 0.333},

{1.000, 1.000, 0.333},

{1.000, 0.000, 0.667},

{1.000, 0.333, 0.667},

{1.000, 0.667, 0.667},

{1.000, 1.000, 0.667},

{1.000, 0.000, 1.000},

{1.000, 0.333, 1.000},

{1.000, 0.667, 1.000},

{0.000, 0.000, 0.333},

{0.000, 0.000, 0.500},

{0.000, 0.000, 0.667},

{0.000, 0.000, 0.833},

{0.000, 0.000, 1.000},

{0.000, 0.167, 0.000},

{0.000, 0.333, 0.000},

{0.000, 0.500, 0.000},

{0.000, 0.667, 0.000},

{0.000, 0.833, 0.000},

{0.000, 1.000, 0.000},

{0.167, 0.000, 0.000},

{0.333, 0.000, 0.000},

{0.500, 0.000, 0.000},

{0.667, 0.000, 0.000},

{0.833, 0.000, 0.000},

{1.000, 0.000, 0.000},

{0.000, 0.000, 0.000},

{0.143, 0.143, 0.143},

{0.286, 0.286, 0.286},

{0.429, 0.429, 0.429},

{0.571, 0.571, 0.571},

{0.714, 0.714, 0.714},

{0.857, 0.857, 0.857},

{0.741, 0.447, 0.000},

{0.741, 0.717, 0.314},

{0.000, 0.500, 0.500}

};

struct Object {
    // The object class.
    int label{};
    // The detection's confidence probability.
    float probability{};
    // The object bounding box rectangle.
    cv::Rect_<float> rect;
};

float clamp_T(float value, float low, float high) {
    if (value < low) return low;
    if (high < value) return high;
    return value;
}

void drawObjectLabels(cv::Mat& image, const std::vector<Object> &objects, unsigned int scale=1) {
    // If segmentation information is present, start with that
    // Bounding boxes and annotations
    for (auto & object : objects) {
        // Choose the color
        int colorIndex = object.label % COLOR_LIST.size(); // We have only defined 80 unique colors
        cv::Scalar color = cv::Scalar(COLOR_LIST[colorIndex][0],
                                      COLOR_LIST[colorIndex][1],
                                      COLOR_LIST[colorIndex][2]);
        float meanColor = cv::mean(color)[0];
        cv::Scalar txtColor;
        if (meanColor > 0.5){
            txtColor = cv::Scalar(0, 0, 0);
        }else{
            txtColor = cv::Scalar(255, 255, 255);
        }
        const auto& rect = object.rect;
        // Draw rectangles and text
        char text[256];
        sprintf(text, "%s %.1f%%", CLASS_NAMES[object.label].c_str(), object.probability * 100);
        int baseLine = 0;
        cv::Size labelSize = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.35 * scale, scale, &baseLine);
        cv::Scalar txt_bk_color = color * 0.7 * 255;
        int x = object.rect.x;
        int y = object.rect.y + 1;
        cv::rectangle(image, rect, color * 255, scale + 1);
        cv::rectangle(image, cv::Rect(cv::Point(x, y), cv::Size(labelSize.width, labelSize.height + baseLine)),
                      txt_bk_color, -1);
        cv::putText(image, text, cv::Point(x, y + labelSize.height), cv::FONT_HERSHEY_SIMPLEX, 0.35 * scale, txtColor, scale);
    }
}

int main() {
    const char *modelPath = "../model/yolov8s.om";
    const char *fileName="../bus.jpg";
    auto image_process_mode="letter_box";
    const int label_num=80;
    const float nms_threshold = 0.6;
    const float conf_threshold = 0.25;
    int TOP_K=100;
    std::vector<int> iImgSize={640,640};
    float resizeScales=1;
    cv::Mat iImg = cv::imread(fileName);
    int m_imgWidth=iImg.cols;
    int m_imgHeight=iImg.rows;
    cv::Mat oImg = iImg.clone();
    cv::cvtColor(oImg, oImg, cv::COLOR_BGR2RGB);
    cv::Mat detect_image;
    cv::resize(oImg, detect_image, cv::Size(iImgSize.at(0), iImgSize.at(1)));
    float m_ratio_w = 1.f / (iImgSize.at(0) / static_cast<float>(iImg.cols));
    float m_ratio_h = 1.f / (iImgSize.at(1) / static_cast<float>(iImg.rows));
    float* imageBytes;
    AclLiteResource aclResource_;
    AclLiteImageProc imageProcess_;
    AclLiteModel model_;
    aclrtRunMode runMode_;
    ImageData resizedImage_;
    const char *modelPath_;
    int32_t modelWidth_;
    int32_t modelHeight_;

    AclLiteError ret = aclResource_.Init();
    if (ret == FAILED) {
        ACLLITE_LOG_ERROR("resource init failed, errorCode is %d", ret);
        return FAILED;
    }
    ret = aclrtGetRunMode(&runMode_);
    if (ret == FAILED) {
        ACLLITE_LOG_ERROR("get runMode failed, errorCode is %d", ret);
        return FAILED;
    }
    // init dvpp resource
    ret = imageProcess_.Init();
    if (ret == FAILED) {
        ACLLITE_LOG_ERROR("imageProcess init failed, errorCode is %d", ret);
        return FAILED;
    }
    // load model from file
    ret = model_.Init(modelPath);
    if (ret == FAILED) {
        ACLLITE_LOG_ERROR("model init failed, errorCode is %d", ret);
        return FAILED;
    }

    // data standardization
    float meanRgb[3] = {0, 0, 0};
    float stdRgb[3] = {1/255.0f, 1/255.0f, 1/255.0f};
    int32_t channel = detect_image.channels();
    int32_t resizeHeight = detect_image.rows;
    int32_t resizeWeight = detect_image.cols;
    imageBytes = (float *) malloc(channel * (iImgSize.at(0)) * (iImgSize.at(1)) * sizeof(float));
    memset(imageBytes, 0, channel * (iImgSize.at(0)) * (iImgSize.at(1)) * sizeof(float));
    // image to float buffer, HWC to CHW (BGR to RGB was already done by cv::cvtColor above)
    for (int c = 0; c < channel; ++c) {
        for (int h = 0; h < resizeHeight; ++h) {
            for (int w = 0; w < resizeWeight; ++w) {
                int dstIdx = c * resizeHeight * resizeWeight + h * resizeWeight + w;
                imageBytes[dstIdx] = static_cast<float>(
                        (detect_image.at<cv::Vec3b>(h, w)[c] -
                         1.0f * meanRgb[c]) * 1.0f * stdRgb[c] );
            }
        }
    }
    std::vector <InferenceOutput> inferOutputs;
    ret = model_.CreateInput(static_cast<void *>(imageBytes),
                             channel * iImgSize.at(0) * iImgSize.at(1) * sizeof(float));
    if (ret == FAILED) {
        ACLLITE_LOG_ERROR("CreateInput failed, errorCode is %d", ret);
        return FAILED;
    }
    // inference
    ret = model_.Execute(inferOutputs);
    if (ret != ACL_SUCCESS) {
        ACLLITE_LOG_ERROR("execute model failed, errorCode is %d", ret);
        return FAILED;
    }

    float *rawData = static_cast<float *>(inferOutputs[0].data.get());
    std::vector<cv::Rect> bboxes;
    std::vector<float> scores;
    std::vector<int> labels;
    std::vector<int> indices;
    auto numChannels =84;
    auto numAnchors =8400;
    auto numClasses = CLASS_NAMES.size();
    cv::Mat output = cv::Mat(numChannels, numAnchors, CV_32F,rawData);
    output = output.t();
    // Get all the YOLO proposals
    for (int i = 0; i < numAnchors; i++) {
        auto rowPtr = output.row(i).ptr<float>();
        auto bboxesPtr = rowPtr;
        auto scoresPtr = rowPtr + 4;
        auto maxSPtr = std::max_element(scoresPtr, scoresPtr + numClasses);
        float score = *maxSPtr;
        if (score > conf_threshold) {
            float x = *bboxesPtr++;
            float y = *bboxesPtr++;
            float w = *bboxesPtr++;
            float h = *bboxesPtr;
            float x0 = clamp_T((x - 0.5f * w) * m_ratio_w, 0.f, m_imgWidth);
            float y0 =clamp_T((y - 0.5f * h) * m_ratio_h, 0.f, m_imgHeight);
            float x1 = clamp_T((x + 0.5f * w) * m_ratio_w, 0.f, m_imgWidth);
            float y1 = clamp_T((y + 0.5f * h) * m_ratio_h, 0.f, m_imgHeight);
            int label = maxSPtr - scoresPtr;
            cv::Rect_<float> bbox;
            bbox.x = x0;
            bbox.y = y0;
            bbox.width = x1 - x0;
            bbox.height = y1 - y0;
            bboxes.push_back(bbox);
            labels.push_back(label);
            scores.push_back(score);
        }
    }
    // Run NMS
    cv::dnn::NMSBoxes(bboxes, scores, conf_threshold, nms_threshold, indices);
    std::vector<Object> objects;
    // Choose the top k detections
    int cnt = 0;
    for (auto& chosenIdx : indices) {
        if (cnt >= TOP_K) {
            break;
        }
        Object obj{};
        obj.probability = scores[chosenIdx];
        obj.label = labels[chosenIdx];
        obj.rect = bboxes[chosenIdx];
        objects.push_back(obj);
        cnt += 1;
    }
    drawObjectLabels( iImg, objects);
    cv::imwrite("../result_c++.jpg",iImg);
    model_.DestroyResource();
    imageProcess_.DestroyResource();
    aclResource_.Release();
    return SUCCESS;
}
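One difference between the two versions is worth noting: the C++ listing passes all boxes to `cv::dnn::NMSBoxes` at once, so suppression ignores class labels, while the Python script runs NMS separately per class through `multiclass_nms`. The toy example below is a sketch of how the two strategies can disagree. It uses a plain-Python greedy NMS written for illustration, not the code from either listing, and the boxes, scores, and helper names are made up.

```python
def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold):
    """Class-agnostic greedy NMS; returns kept indices in descending score order."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


def per_class_nms(boxes, scores, class_ids, iou_threshold):
    """Run greedy NMS independently inside each class."""
    keep = []
    for cls in sorted(set(class_ids)):
        idx = [i for i, c in enumerate(class_ids) if c == cls]
        kept = greedy_nms([boxes[i] for i in idx], [scores[i] for i in idx], iou_threshold)
        keep.extend(idx[k] for k in kept)
    return keep


# Two heavily overlapping boxes with different classes, plus one separate box
boxes = [(0, 0, 100, 100), (5, 5, 105, 105), (200, 200, 300, 300)]
scores = [0.9, 0.8, 0.7]
class_ids = [0, 5, 0]

print(greedy_nms(boxes, scores, 0.6))                        # [0, 2]
print(sorted(per_class_nms(boxes, scores, class_ids, 0.6)))  # [0, 1, 2]
```

Which behavior is preferable depends on the application; per-class NMS keeps overlapping objects of different classes, while class-agnostic NMS keeps only the highest-scoring box at each location.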

Test results

3. Rotated object detection

To be continued.