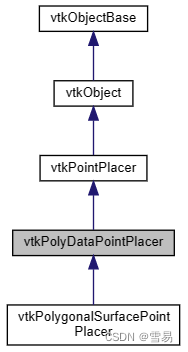

hdl SLAM和定位的关系:HDL和cartographer一样,是离线建图的

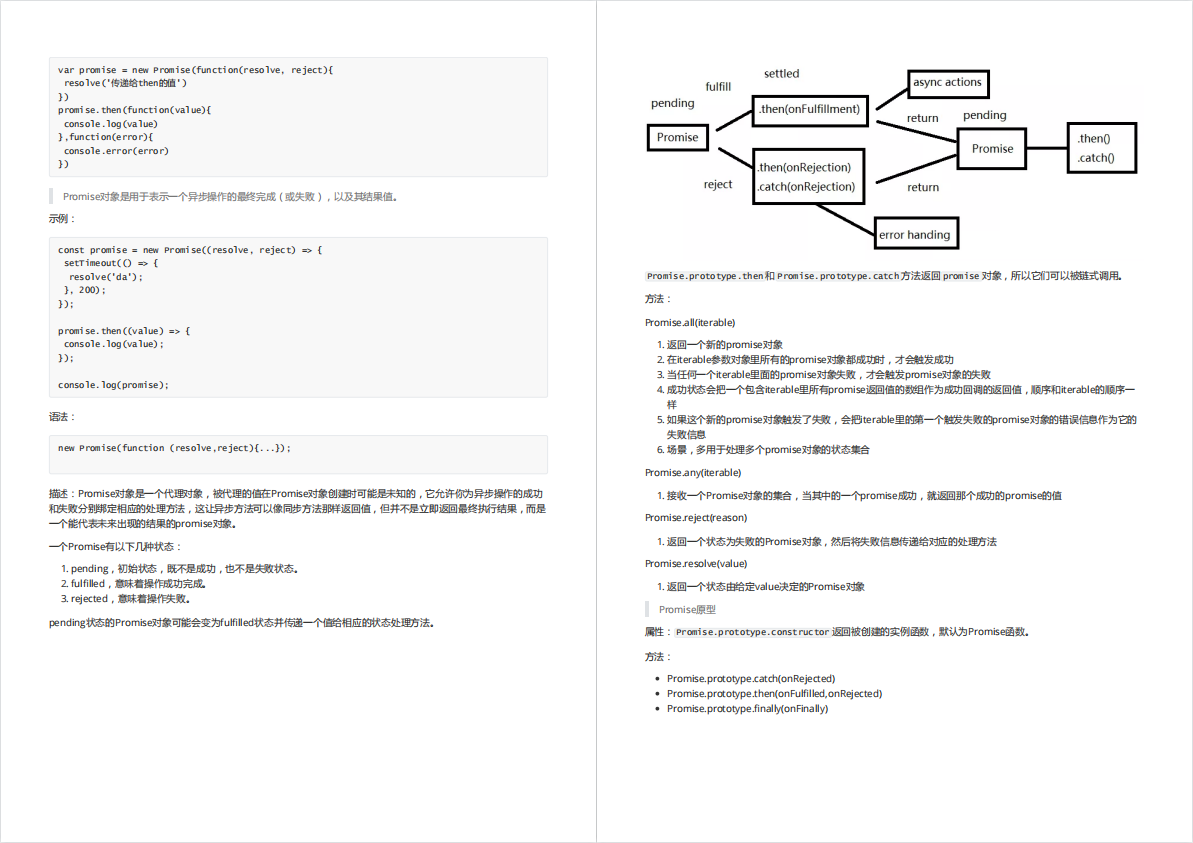

整个SLAM系统的架构

包含四个节点: 预处理、 帧匹配、hdl_slam、地面检测

输入点云首先经过预处理进行降采样,然后传给下一个节点。帧匹配通过迭代获取两帧之间运动变化的里程计,地面检测通过RANSAC检测地平面,检测的平面发送给hdl_slam模块。

hdl_graph_slam支持多种GPS数据格式,都会通过hdl转为UTM坐标系,并且将他们加入到3D位置约束,如果其高度为NAN值,则将GPS数据作为2D的约束,几何点为最基础的数据,由经纬高组成,尽管NavSatFix也提供很多的信息,但本工程值视野点位的信息忽略其他信息。

1.预处理模块

prefiltering_nodelet.cpp

通过看到其包含的头文件,即可发现该节点只依赖ros、pcl、tf库。文件只有一个类声明及实现,即PrefilteringNodelet类,它是继承了ros的nodelet::Nodelet的。

在构造后进行初始化:

获取ros句柄,根据ros句柄来初始化参数;通过IMU进行畸变去除,订阅IMU数据;订阅原始的激光数据,输出经过滤波后的点云。

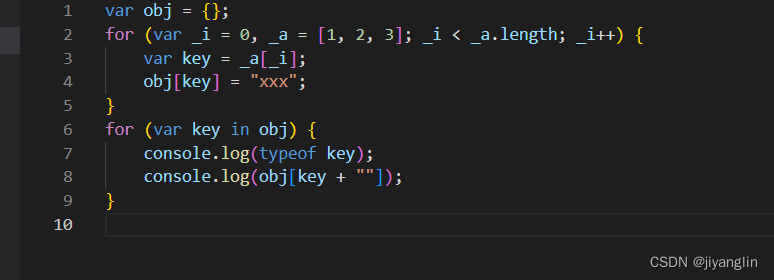

virtual void onInit() {

nh = getNodeHandle();

private_nh = getPrivateNodeHandle();

initialize_params();

if(private_nh.param<bool>("deskewing", false)) {

imu_sub = nh.subscribe("/imu/data", 1, &PrefilteringNodelet::imu_callback, this);

}

points_sub = nh.subscribe("/velodyne_points", 64, &PrefilteringNodelet::cloud_callback, this);

points_pub = nh.advertise<sensor_msgs::PointCloud2>("/filtered_points", 32);

colored_pub = nh.advertise<sensor_msgs::PointCloud2>("/colored_points", 32);

}读取参数:有降采样方式(体素降采样、近似体素降采样)、降采样栅格大小、离群点去除方法(基于统计StatisticalOutlierRemoval, 基于半径 setRadiusSearch)、是否使用距离滤波,保留1-100m内的点云。

点云去畸变

//点云的去畸变

pcl::PointCloud<PointT>::ConstPtr deskewing(const pcl::PointCloud<PointT>::ConstPtr& cloud) {

ros::Time stamp = pcl_conversions::fromPCL(cloud->header.stamp);//获取时间戳

if(imu_queue.empty()) {

return cloud;

}

// the color encodes the point number in the point sequence

if(colored_pub.getNumSubscribers()) {

pcl::PointCloud<pcl::PointXYZRGB>::Ptr colored(new pcl::PointCloud<pcl::PointXYZRGB>());

colored->header = cloud->header;

colored->is_dense = cloud->is_dense;

colored->width = cloud->width;

colored->height = cloud->height;

colored->resize(cloud->size());

for(int i = 0; i < cloud->size(); i++) {

double t = static_cast<double>(i) / cloud->size();

colored->at(i).getVector4fMap() = cloud->at(i).getVector4fMap();

colored->at(i).r = 255 * t;

colored->at(i).g = 128;

colored->at(i).b = 255 * (1 - t);

}

colored_pub.publish(*colored); //区分帧头、帧尾的点云为不同颜色

}

//从IMU的头开始,一旦满足IMU的时间比点云时间新,就退出

sensor_msgs::ImuConstPtr imu_msg = imu_queue.front();

auto loc = imu_queue.begin();

for(; loc != imu_queue.end(); loc++) {

imu_msg = (*loc);

if((*loc)->header.stamp > stamp) {

break;

}

}

imu_queue.erase(imu_queue.begin(), loc); //删掉不符合要求的IMU数据

Eigen::Vector3f ang_v(imu_msg->angular_velocity.x, imu_msg->angular_velocity.y, imu_msg->angular_velocity.z);

ang_v *= -1; //取反

pcl::PointCloud<PointT>::Ptr deskewed(new pcl::PointCloud<PointT>()); //去畸变

deskewed->header = cloud->header;

deskewed->is_dense = cloud->is_dense;

deskewed->width = cloud->width;

deskewed->height = cloud->height;

deskewed->resize(cloud->size());

double scan_period = private_nh.param<double>("scan_period", 0.1); //读取帧时间差 10Hz,两帧之间差0.1s

for(int i = 0; i < cloud->size(); i++) {

const auto& pt = cloud->at(i);

// TODO: transform IMU data into the LIDAR frame

// 点云时间戳为帧头的时间,IMU数据为最接近帧头时间的数据

double delta_t = scan_period * static_cast<double>(i) / cloud->size(); //还原每一个点

//通过四元数的变化,小范围变化为(1,delta_t / 2.0 * ang_v[0],) 通过轴角的形式表达微小变化

Eigen::Quaternionf delta_q(1, delta_t / 2.0 * ang_v[0], delta_t / 2.0 * ang_v[1], delta_t / 2.0 * ang_v[2]);

Eigen::Vector3f pt_ = delta_q.inverse() * pt.getVector3fMap(); //以匀加速模型,将帧间内的对应点的位置转到帧头

deskewed->at(i) = cloud->at(i);

deskewed->at(i).getVector3fMap() = pt_;

}

return deskewed;

}点云回调

进行点云变化,转到base坐标系,去畸变,滤波、降采样等,发出新的经过处理的点云

void cloud_callback(const pcl::PointCloud<PointT>& src_cloud_r) {

pcl::PointCloud<PointT>::ConstPtr src_cloud = src_cloud_r.makeShared();

if(src_cloud->empty()) {

return;

}

//进行点云的去畸变

src_cloud = deskewing(src_cloud);

// if base_link_frame is defined, transform the input cloud to the frame

//获取base和激光之间的静态TF,通过查询进行调整

if(!base_link_frame.empty()) {

if(!tf_listener.canTransform(base_link_frame, src_cloud->header.frame_id, ros::Time(0))) {

std::cerr << "failed to find transform between " << base_link_frame << " and " << src_cloud->header.frame_id << std::endl;

}

tf::StampedTransform transform;

tf_listener.waitForTransform(base_link_frame, src_cloud->header.frame_id, ros::Time(0), ros::Duration(2.0));

tf_listener.lookupTransform(base_link_frame, src_cloud->header.frame_id, ros::Time(0), transform);

pcl::PointCloud<PointT>::Ptr transformed(new pcl::PointCloud<PointT>());

pcl_ros::transformPointCloud(*src_cloud, *transformed, transform);

transformed->header.frame_id = base_link_frame;

transformed->header.stamp = src_cloud->header.stamp;

src_cloud = transformed; //src_cloud此时已经是基于base坐标系的点云了

}

//距离滤波,去除超过100 及小于0.2的点

pcl::PointCloud<PointT>::ConstPtr filtered = distance_filter(src_cloud);

//根据选择的方法,进行体素降采样

filtered = downsample(filtered);

//离群点去除

filtered = outlier_removal(filtered);

//发布经过处理的点云

points_pub.publish(*filtered); ///filtered_points话题的

}

pcl::PointCloud<PointT>::ConstPtr downsample(const pcl::PointCloud<PointT>::ConstPtr& cloud) const {

if(!downsample_filter) {

return cloud;

}

pcl::PointCloud<PointT>::Ptr filtered(new pcl::PointCloud<PointT>());

downsample_filter->setInputCloud(cloud);

downsample_filter->filter(*filtered);

filtered->header = cloud->header;

return filtered;

}

pcl::PointCloud<PointT>::ConstPtr outlier_removal(const pcl::PointCloud<PointT>::ConstPtr& cloud) const {

if(!outlier_removal_filter) {

return cloud;

}

pcl::PointCloud<PointT>::Ptr filtered(new pcl::PointCloud<PointT>());

outlier_removal_filter->setInputCloud(cloud);

outlier_removal_filter->filter(*filtered);

filtered->header = cloud->header;

return filtered;

}

pcl::PointCloud<PointT>::ConstPtr distance_filter(const pcl::PointCloud<PointT>::ConstPtr& cloud) const {

pcl::PointCloud<PointT>::Ptr filtered(new pcl::PointCloud<PointT>());

filtered->reserve(cloud->size());

std::copy_if(cloud->begin(), cloud->end(), std::back_inserter(filtered->points), [&](const PointT& p) {

double d = p.getVector3fMap().norm();

return d > distance_near_thresh && d < distance_far_thresh;

});

filtered->width = filtered->size();

filtered->height = 1;

filtered->is_dense = false;

filtered->header = cloud->header;

return filtered;

}帧间匹配

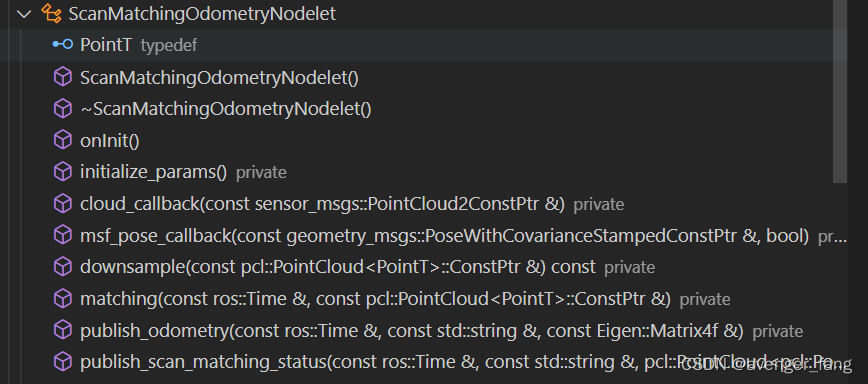

ScanMatchingOdometryNodelet.cpp

同样看包括的头文件,可以发现该节点只依赖ros,pcl, tf以及

#include <hdl_graph_slam/ros_utils.hpp>

#include <hdl_graph_slam/registrations.hpp>

#include <hdl_graph_slam/ScanMatchingStatus.h>ros_utils.hpp

matrix2transform(): convert Eigen::Matrix to geometry_msgs::TransformStamped

Eigen::Isometry3d pose2isometry(const geometry_msgs::Pose& pose) 将pose信息转为Eigen::Isometry3d几何信息结构 以及其他的几个格式互转

registrations.hpp

选择某一种配准方式

ScanMatchingStatus.hpp为配准ros消息类型生成的头文件

ScanMatchingOdometryNodelet这个类和之前预处理的类设计方式相似,通过初始化开启

先是获取ros句柄,然后初始化参数,如果使用IMU的前端(默认不使用的),则订阅/msf_core/pose和/msf_core/pose_after_update

否则直接订阅预处理后的/filtered_points,然后发布多个话题

初始化参数:

降采样、特征参数、配准参数

// 关键帧选择的特征阈值

keyframe_delta_trans = pnh.param<double>("keyframe_delta_trans", 0.25);

keyframe_delta_angle = pnh.param<double>("keyframe_delta_angle", 0.15);

keyframe_delta_time = pnh.param<double>("keyframe_delta_time", 1.0);

// Registration validation by thresholding 配准有效性阈值

transform_thresholding = pnh.param<bool>("transform_thresholding", false);

max_acceptable_trans = pnh.param<double>("max_acceptable_trans", 1.0);

max_acceptable_angle = pnh.param<double>("max_acceptable_angle", 1.0);关键函数:帧帧匹配函数

Eigen::Matrix4f matching(const ros::Time& stamp, const pcl::PointCloud<PointT>::ConstPtr& cloud)

输出即为输入点云相对关键帧点云的变换

/**

* @brief estimate the relative pose between an input cloud and a keyframe cloud

* @param stamp the timestamp of the input cloud

* @param cloud the input cloud

* @return the relative pose between the input cloud and the keyframe cloud

* 输出即为输入点云相对关键帧点云的变换

*/

Eigen::Matrix4f matching(const ros::Time& stamp, const pcl::PointCloud<PointT>::ConstPtr& cloud) {

if(!keyframe) { //如果关键帧点云不存在的话

prev_time = ros::Time();

prev_trans.setIdentity();

keyframe_pose.setIdentity();

keyframe_stamp = stamp;

keyframe = downsample(cloud);

registration->setInputTarget(keyframe); //参考帧,相当于地图

return Eigen::Matrix4f::Identity(); //初始时刻

}

//降采样之后

auto filtered = downsample(cloud);

registration->setInputSource(filtered); //插入当前帧

std::string msf_source;

Eigen::Isometry3f msf_delta = Eigen::Isometry3f::Identity();

if(private_nh.param<bool>("enable_imu_frontend", false)) {

if(msf_pose && msf_pose->header.stamp > keyframe_stamp && msf_pose_after_update && msf_pose_after_update->header.stamp > keyframe_stamp) {

Eigen::Isometry3d pose0 = pose2isometry(msf_pose_after_update->pose.pose);

Eigen::Isometry3d pose1 = pose2isometry(msf_pose->pose.pose);

Eigen::Isometry3d delta = pose0.inverse() * pose1;

msf_source = "imu";

msf_delta = delta.cast<float>();

} else {

std::cerr << "msf data is too old" << std::endl;

} //是否使用轮式里程计做初值 找到里程计和雷达之间的TF变换,将

} else if(private_nh.param<bool>("enable_robot_odometry_init_guess", false) && !prev_time.isZero()) {

tf::StampedTransform transform;

if(tf_listener.waitForTransform(cloud->header.frame_id, stamp, cloud->header.frame_id, prev_time, robot_odom_frame_id, ros::Duration(0))) {

tf_listener.lookupTransform(cloud->header.frame_id, stamp, cloud->header.frame_id, prev_time, robot_odom_frame_id, transform);

} else if(tf_listener.waitForTransform(cloud->header.frame_id, ros::Time(0), cloud->header.frame_id, prev_time, robot_odom_frame_id, ros::Duration(0))) {

tf_listener.lookupTransform(cloud->header.frame_id, ros::Time(0), cloud->header.frame_id, prev_time, robot_odom_frame_id, transform);

}

if(transform.stamp_.isZero()) {

NODELET_WARN_STREAM("failed to look up transform between " << cloud->header.frame_id << " and " << robot_odom_frame_id);

} else {

msf_source = "odometry";

msf_delta = tf2isometry(transform).cast<float>(); //里程计的变化只

}

}

pcl::PointCloud<PointT>::Ptr aligned(new pcl::PointCloud<PointT>());

//利用里程计的变化做配准的初值,提高收敛速度

registration->align(*aligned, prev_trans * msf_delta.matrix()); //相对于第一帧的变换,通过prev_trans * msf_delta.matrix()给初值

//

publish_scan_matching_status(stamp, cloud->header.frame_id, aligned, msf_source, msf_delta);

if(!registration->hasConverged()) { //

NODELET_INFO_STREAM("scan matching has not converged!!");

NODELET_INFO_STREAM("ignore this frame(" << stamp << ")");

return keyframe_pose * prev_trans; //如果没有收敛,则返回先前的位置,并提示

}

Eigen::Matrix4f trans = registration->getFinalTransformation();

Eigen::Matrix4f odom = keyframe_pose * trans; //最新的激光里程计 trans为当前帧相对于第一帧的位置

//

if(transform_thresholding) {

Eigen::Matrix4f delta = prev_trans.inverse() * trans; //此次激光配准的距离和角度变化量

double dx = delta.block<3, 1>(0, 3).norm(); //距离变换量

double da = std::acos(Eigen::Quaternionf(delta.block<3, 3>(0, 0)).w()); //角度离变换量

if(dx > max_acceptable_trans || da > max_acceptable_angle) {

NODELET_INFO_STREAM("too large transform!! " << dx << "[m] " << da << "[rad]");

NODELET_INFO_STREAM("ignore this frame(" << stamp << ")");

return keyframe_pose * prev_trans;

} //如果配准算出来的值差异太大,则放弃当前帧的数据,继续使用上一帧数据

}

prev_time = stamp;

prev_trans = trans;

auto keyframe_trans = matrix2transform(stamp, keyframe_pose, odom_frame_id, "keyframe");

keyframe_broadcaster.sendTransform(keyframe_trans);

double delta_trans = trans.block<3, 1>(0, 3).norm();

double delta_angle = std::acos(Eigen::Quaternionf(trans.block<3, 3>(0, 0)).w());

double delta_time = (stamp - keyframe_stamp).toSec();

//满足关键帧条件 keyframe_pose就是里程计的值

if(delta_trans > keyframe_delta_trans || delta_angle > keyframe_delta_angle || delta_time > keyframe_delta_time) {

keyframe = filtered;

registration->setInputTarget(keyframe);

keyframe_pose = odom;

keyframe_stamp = stamp;

prev_time = stamp;

prev_trans.setIdentity(); //prev_trans即为单位阵

}

//有订阅配准帧才会发布配准帧点云出来

if (aligned_points_pub.getNumSubscribers() > 0)

{

pcl::transformPointCloud (*cloud, *aligned, odom);

aligned->header.frame_id=odom_frame_id;

aligned_points_pub.publish(*aligned);

}

return odom;

}点云回调:

void cloud_callback(const sensor_msgs::PointCloud2ConstPtr& cloud_msg) {

if(!ros::ok()) {

return;

}

pcl::PointCloud<PointT>::Ptr cloud(new pcl::PointCloud<PointT>());

pcl::fromROSMsg(*cloud_msg, *cloud);

Eigen::Matrix4f pose = matching(cloud_msg->header.stamp, cloud);//得到相对关键帧的变换

//发布激光里程计和TF

publish_odometry(cloud_msg->header.stamp, cloud_msg->header.frame_id, pose);

// In offline estimation, point clouds until the published time will be supplied

//离线估计时

std_msgs::HeaderPtr read_until(new std_msgs::Header());

read_until->frame_id = points_topic;

read_until->stamp = cloud_msg->header.stamp + ros::Duration(1, 0);

read_until_pub.publish(read_until);

read_until->frame_id = "/filtered_points";

read_until_pub.publish(read_until);

}

发布帧匹配的状态:

包括有:帧匹配的frame_id、时间戳、配准得分、是否收敛

ScanMatchingStatus status;

status.header.frame_id = frame_id;

status.header.stamp = stamp;

status.has_converged = registration->hasConverged();

status.matching_error = registration->getFitnessScore();

获取内点得分:

int num_inliers = 0;

std::vector<int> k_indices;

std::vector<float> k_sq_dists;

for(int i=0; i<aligned->size(); i++) {

const auto& pt = aligned->at(i);

registration->getSearchMethodTarget()->nearestKSearch(pt, 1, k_indices, k_sq_dists);

if(k_sq_dists[0] < max_correspondence_dist * max_correspondence_dist) {

num_inliers++;

}

}地面检测

floor_detection_nodelet.cpp

该程序只依赖ros、pcl、boost

初始化:

virtual void onInit() {

NODELET_DEBUG("initializing floor_detection_nodelet...");

nh = getNodeHandle();

private_nh = getPrivateNodeHandle();

initialize_params();

//订阅/filtered_points点, 发布地板系数

points_sub = nh.subscribe("/filtered_points", 256, &FloorDetectionNodelet::cloud_callback, this);

floor_pub = nh.advertise<hdl_graph_slam::FloorCoeffs>("/floor_detection/floor_coeffs", 32);

read_until_pub = nh.advertise<std_msgs::Header>("/floor_detection/read_until", 32);

floor_filtered_pub = nh.advertise<sensor_msgs::PointCloud2>("/floor_detection/floor_filtered_points", 32);

floor_points_pub = nh.advertise<sensor_msgs::PointCloud2>("/floor_detection/floor_points", 32);

}

初始化参数:

void initialize_params() {

// approximate sensor tilt angle [deg] 传感器与水平面的近似倾角

tilt_deg = private_nh.param<double>("tilt_deg", 0.0);

// approximate sensor height [m] 雷达高度

sensor_height = private_nh.param<double>("sensor_height", 2.0);

// points with heights in [sensor_height - height_clip_range, sensor_height + height_clip_range] will be used for floor detection

//截取的高度范围

height_clip_range = private_nh.param<double>("height_clip_range", 1.0);

// minimum number of support points of RANSAC to accept a detected floor plane

//支持RANSAC提取平面所需要的最少的点数

floor_pts_thresh = private_nh.param<int>("floor_pts_thresh", 512);

// verticality check thresold for the detected floor plane [deg]

//检测地平面的水平角度阈值

floor_normal_thresh = private_nh.param<double>("floor_normal_thresh", 10.0);

// if true, points with "non-"vertical normals will be filtered before RANSAC

// 使用法线进行过滤

use_normal_filtering = private_nh.param<bool>("use_normal_filtering", true);

// "non-"verticality check threshold [deg]

//使用法线的阈值

normal_filter_thresh = private_nh.param<double>("normal_filter_thresh", 20.0);

points_topic = private_nh.param<std::string>("points_topic", "/velodyne_points");

}重要函数

boost::optional<Eigen::Vector4f> detect(const pcl::PointCloud<PointT>::Ptr& cloud) const()

boost::optional<Eigen::Vector4f> detect(const pcl::PointCloud<PointT>::Ptr& cloud) const {

// compensate the tilt rotation

Eigen::Matrix4f tilt_matrix = Eigen::Matrix4f::Identity();

//倾角补偿

tilt_matrix.topLeftCorner(3, 3) = Eigen::AngleAxisf(tilt_deg * M_PI / 180.0f, Eigen::Vector3f::UnitY()).toRotationMatrix();

// filtering before RANSAC (height and normal filtering)

pcl::PointCloud<PointT>::Ptr filtered(new pcl::PointCloud<PointT>);

pcl::transformPointCloud(*cloud, *filtered, tilt_matrix);

//将滤波处理后的点云转到倾角平面存放到filtered中,然后

//plane_clip 是通过点云索引提取点云子集

filtered = plane_clip(filtered, Eigen::Vector4f(0.0f, 0.0f, 1.0f, sensor_height + height_clip_range), false);

filtered = plane_clip(filtered, Eigen::Vector4f(0.0f, 0.0f, 1.0f, sensor_height - height_clip_range), true);

if(use_normal_filtering) { //使用法线滤波 滤除法线不垂直的点

filtered = normal_filtering(filtered);

}

pcl::transformPointCloud(*filtered, *filtered, static_cast<Eigen::Matrix4f>(tilt_matrix.inverse()));

if(floor_filtered_pub.getNumSubscribers()) { //讲过地面滤波之后的点云发布

filtered->header = cloud->header;

floor_filtered_pub.publish(*filtered);

}

// too few points for RANSAC 判断滤除的点是否有500个,如果少于这个数据就不做平面提取

if(filtered->size() < floor_pts_thresh) {

return boost::none;

}

// RANSAC 平面提取

pcl::SampleConsensusModelPlane<PointT>::Ptr model_p(new pcl::SampleConsensusModelPlane<PointT>(filtered));

pcl::RandomSampleConsensus<PointT> ransac(model_p);

ransac.setDistanceThreshold(0.1);

ransac.computeModel();

pcl::PointIndices::Ptr inliers(new pcl::PointIndices);

ransac.getInliers(inliers->indices);

// too few inliers 提取处理的点太少,则

if(inliers->indices.size() < floor_pts_thresh) {

return boost::none;

}

// verticality check of the detected floor's normal //参考轴为z方向经过倾角变换之后

Eigen::Vector4f reference = tilt_matrix.inverse() * Eigen::Vector4f::UnitZ();

Eigen::VectorXf coeffs;

ransac.getModelCoefficients(coeffs); //获取RANSAC平面的方向系数

double dot = coeffs.head<3>().dot(reference.head<3>());

//两个内积,即垂直投影的,因为其为单位向量,也就是两个向量的夹角

if(std::abs(dot) < std::cos(floor_normal_thresh * M_PI / 180.0)) {

// the normal is not vertical

return boost::none;

}

// make the normal upward

if(coeffs.head<3>().dot(Eigen::Vector3f::UnitZ()) < 0.0f) {

coeffs *= -1.0f; //如果算出来的向量方向与Z值正方向相反则将系数方向取反

}

if(floor_points_pub.getNumSubscribers()) {

pcl::PointCloud<PointT>::Ptr inlier_cloud(new pcl::PointCloud<PointT>);

pcl::ExtractIndices<PointT> extract;

extract.setInputCloud(filtered);

extract.setIndices(inliers);

extract.filter(*inlier_cloud);

inlier_cloud->header = cloud->header;

floor_points_pub.publish(*inlier_cloud);

}

return Eigen::Vector4f(coeffs);

}