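Background: I searched through a lot of material, but every source covered only bits and pieces and I still could not get it working, so I finally decided to follow the official documentation.

Official documentation: Apache Kafka

Prerequisites: keytool and openssl.

keytool reference: "keytool的使用" (CSDN blog)

openssl reference: "openssl常用命令大全_openssl命令参数大全" (CSDN blog)

For now, this post only covers the SSL security mechanism.

Apache Kafka allows clients to connect over SSL. SSL is disabled by default, but it can be turned on as needed.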

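- 1. Generate an SSL key and certificate for each Kafka broker

The first step in deploying one or more SSL-enabled brokers is to generate a key and certificate for every machine in the cluster. Java's keytool utility can do this. The key is first generated into a temporary keystore so that it can later be exported and signed with the CA.

keytool -keystore server.keystore.jks -alias localhost -validity 700 -genkey -keyalg RSA

You need to specify two parameters in the command above:

- keystore: the keystore file that stores the certificate. It contains the certificate's private key, so it must be kept safe. Here it is server.keystore.jks.

- validity: how long the certificate is valid, in days. Here it is 700 days.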
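The keytool command above prompts interactively for the passwords and the certificate's distinguished name. A non-interactive variant might look like the sketch below. The password, the scratch directory and the `HOST` value are placeholders of mine, not values from the original setup. The name that keytool otherwise asks for as "first and last name" becomes the certificate CN, and that name (or a SAN entry, when one is present) is what a client compares against the broker host name when host name verification is on.

```shell
# Sketch only: STOREPASS and HOST are assumed placeholder values.
STOREPASS=test1234
HOST=localhost

# Work in a scratch directory so that no existing keystore is touched.
cd "$(mktemp -d)" || exit 1

# -genkeypair is the current name of -genkey; -dname sets the subject without
# prompting, and -ext adds a SubjectAlternativeName entry for the host.
keytool -genkeypair -keystore server.keystore.jks -alias localhost \
  -validity 700 -keyalg RSA -keysize 2048 \
  -dname "CN=${HOST}" -ext "SAN=dns:${HOST}" \
  -storepass "$STOREPASS" -keypass "$STOREPASS"

# List the entry to confirm the subject that ended up in the certificate.
keytool -list -v -keystore server.keystore.jks -storepass "$STOREPASS"
```

Passing the passwords on the command line is convenient for a local test but leaves them in the shell history, so it is probably not what you want on a shared machine.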

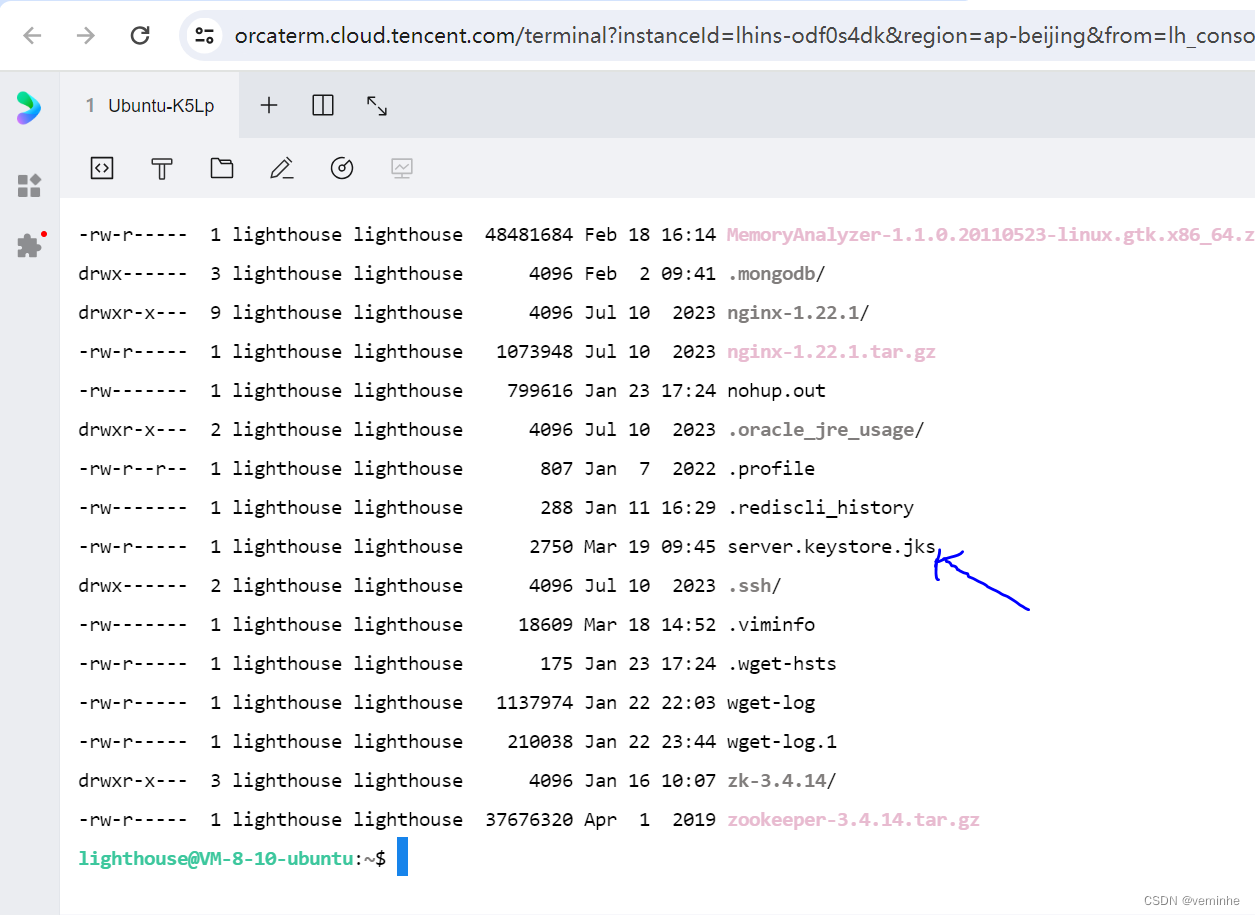

The corresponding file now exists in the directory:

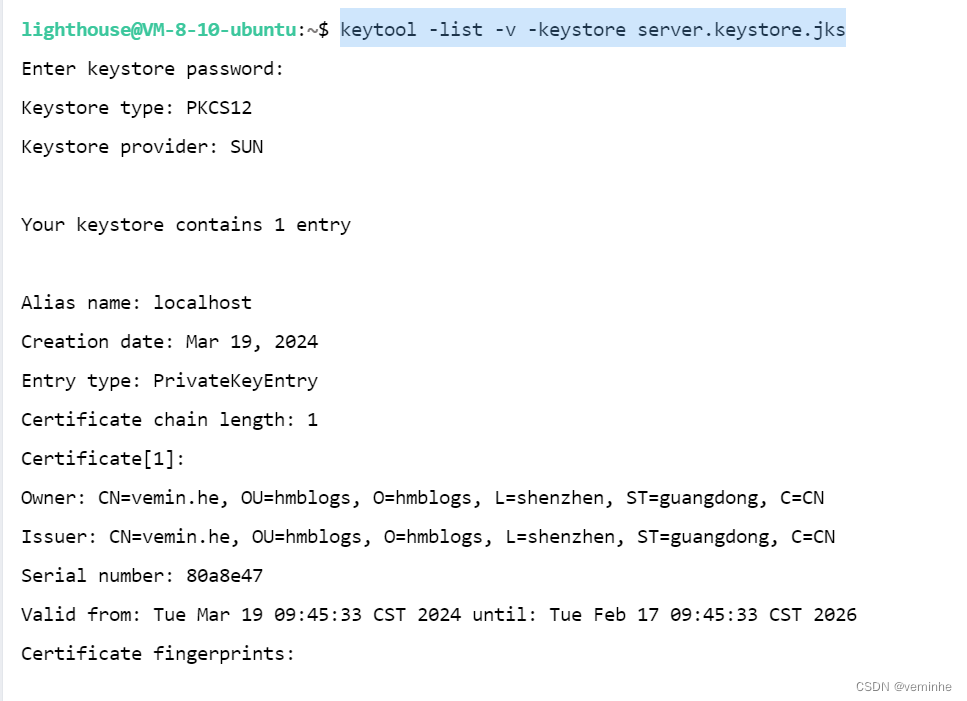

You can then run the following command to inspect the contents of the generated certificate:

keytool -list -v -keystore server.keystore.jks

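- 2. Create your own CA

After the first step, each machine in the cluster has a public/private key pair and a certificate that identifies the machine. The certificate is unsigned, however, which means an attacker could create such a certificate and pretend to be any machine.

It is therefore important to prevent forged certificates by signing the certificate of each machine in the cluster. A certificate authority (CA) is responsible for signing certificates. A CA works like a government that issues passports: the government stamps (signs) each passport so that it becomes difficult to forge, and other governments verify the stamp to make sure the passport is authentic. Similarly, the CA signs the certificates, and the cryptography guarantees that a signed certificate is computationally difficult to forge. As long as the CA is a genuine and trusted authority, clients have a high assurance that they are connecting to the authentic machines.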

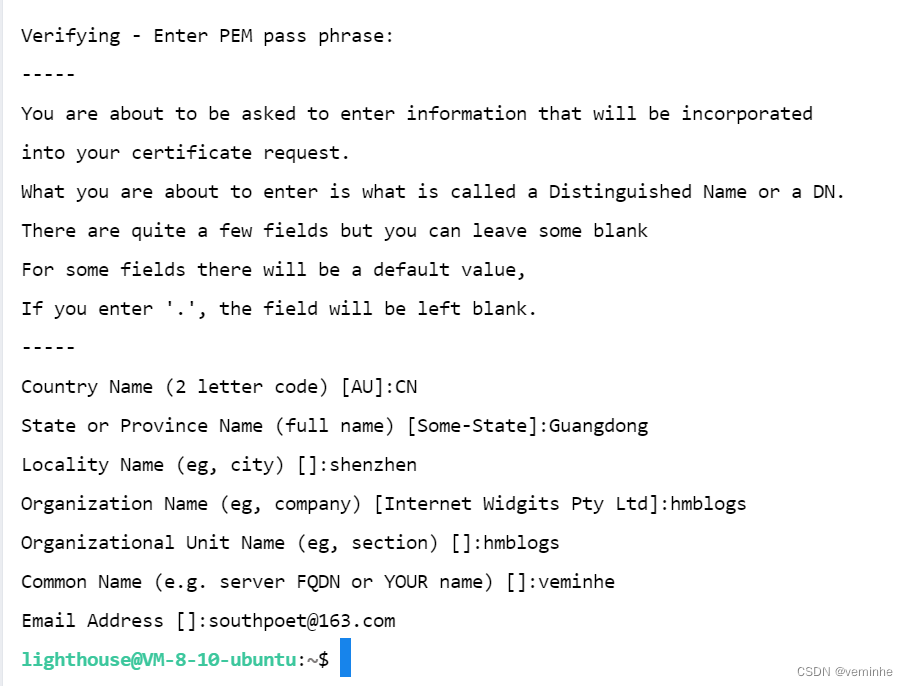

openssl req -new -x509 -keyout ca-key -out ca-cert -days 365

The generated CA is simply a public/private key pair and a certificate, and it is intended to sign other certificates.

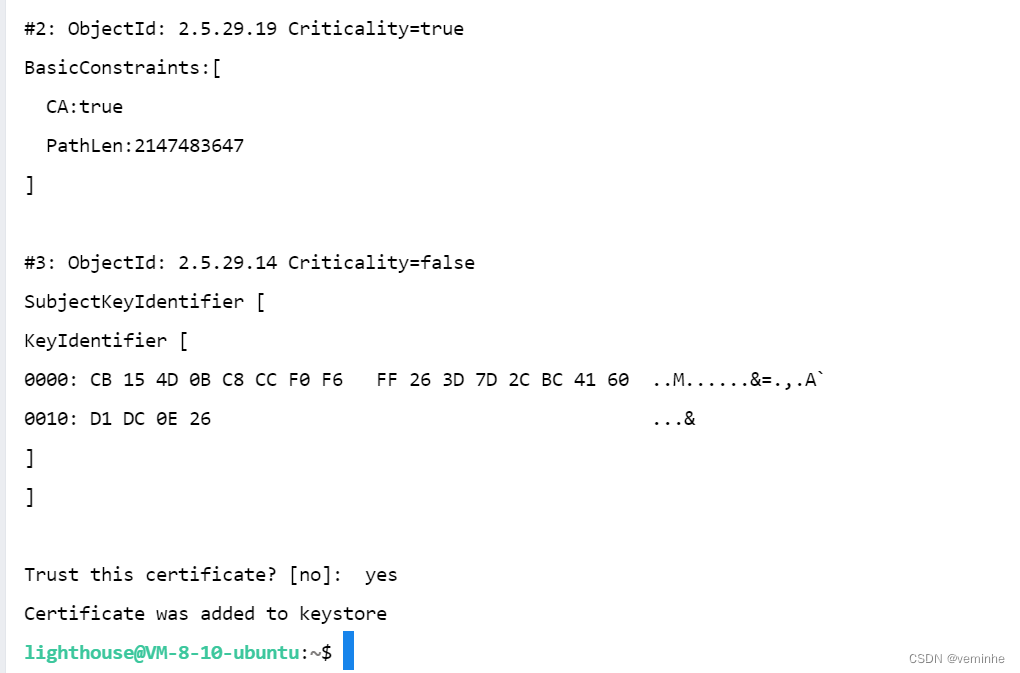

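The next step is to add the generated CA to the clients' truststore so that the clients can trust this CA:

keytool -keystore server.truststore.jks -alias CARoot -import -file ca-cert

In contrast to the keystore in step 1, which stores each machine's own identity, the truststore of a client stores all the certificates that the client should trust. Importing a certificate into a truststore also means trusting all certificates signed by that certificate. As in the analogy above, trusting the government (CA) also means trusting all the passports (certificates) it has issued. This property is called the chain of trust, and it is particularly useful when deploying SSL on a large Kafka cluster: you can sign all certificates in the cluster with a single CA and have all machines share the same truststore that trusts that CA. That way every machine can authenticate every other machine.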
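Both the openssl and the keytool command in this step ask questions interactively. For repeatable runs, a prompt-free version could look roughly like this. The CA subject `Demo-Kafka-CA`, both passwords and the scratch directory are assumptions for illustration only.

```shell
# Sketch only: the CA subject and both passwords are assumed placeholders.
CA_PASS=ca-secret
STOREPASS=test1234

cd "$(mktemp -d)" || exit 1

# Create the CA key and self-signed certificate without prompts.
openssl req -new -x509 -keyout ca-key -out ca-cert -days 365 \
  -subj "/CN=Demo-Kafka-CA" -passout "pass:${CA_PASS}"

# Import the CA certificate into a truststore; -noprompt skips the
# "Trust this certificate?" question.
keytool -importcert -keystore server.truststore.jks -alias CARoot \
  -file ca-cert -storepass "$STOREPASS" -noprompt

# The listing should show the CARoot entry as a trusted certificate.
keytool -list -keystore server.truststore.jks -storepass "$STOREPASS"
```

The same import can be repeated with client.truststore.jks as the target so that brokers and clients trust the same CA.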

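- 3. Sign the certificates

The next step is to sign all certificates generated in step 1 with the CA generated in step 2. The client truststore also needs the CA certificate, in the same way as the server truststore in step 2:

keytool -keystore client.truststore.jks -alias CARoot -import -file ca-cert

Then export a certificate signing request from the keystore: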

keytool -keystore server.keystore.jks -alias localhost -certreq -file cert-file

Then sign it with the CA:

openssl x509 -req -CA ca-cert -CAkey ca-key -in cert-file -out cert-signed -days 700 -CAcreateserial -passin pass:{ca-password}

Finally, import both the CA certificate and the signed certificate into the keystore:

keytool -keystore server.keystore.jks -alias CARoot -import -file ca-cert

keytool -keystore server.keystore.jks -alias localhost -import -file cert-signed

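The parameters are defined as follows:

- keystore: the location of the keystore

- ca-cert: the certificate of the CA

- ca-key: the private key of the CA

- ca-password: the passphrase of the CA

- cert-file: the exported, unsigned certificate of the server

- cert-signed: the signed certificate of the server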
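To see what the signing step produces, the sketch below runs it end to end with throwaway files and then checks the result. To keep it self-contained, the key and the request (`cert-file`) are created with openssl here rather than with `keytool -certreq`; the subjects, the password-less keys (`-nodes`) and the `san.ext` file are my own assumptions. As far as I know, `openssl x509 -req` does not carry over extensions requested in the CSR by default, which is why the SAN is passed through `-extfile`.

```shell
# Sketch only: file names mirror the parameter list above; the subjects,
# the password-less keys (-nodes) and san.ext are assumptions for the demo.
cd "$(mktemp -d)" || exit 1

# Stand-in CA (ca-key, ca-cert).
openssl req -new -x509 -nodes -keyout ca-key -out ca-cert -days 365 \
  -subj "/CN=Demo-Kafka-CA"

# Stand-in for the keytool -certreq output (cert-file).
openssl req -new -nodes -newkey rsa:2048 -keyout server-key -out cert-file \
  -subj "/CN=localhost"

# Extensions to put into the signed certificate.
printf 'subjectAltName=DNS:localhost\n' > san.ext

# Sign the request; -extfile supplies the extensions for the new certificate.
openssl x509 -req -CA ca-cert -CAkey ca-key -in cert-file -out cert-signed \
  -days 700 -CAcreateserial -extfile san.ext

# Check that cert-signed chains to ca-cert and show who issued it to whom.
openssl verify -CAfile ca-cert cert-signed
openssl x509 -in cert-signed -noout -subject -issuer
```

If the subject printed at the end is not the host name that clients use to reach the broker, host name verification is likely to fail later even though the chain itself verifies.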

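- 4. Configure the Kafka brokers

Kafka brokers can listen for connections on multiple ports. The following property must be set in server.properties; it takes one or more comma-separated values:

If SSL is not enabled for inter-broker communication (see below for how to enable it), both a PLAINTEXT and an SSL port are required.

listeners=PLAINTEXT://localhost:9092,SSL://localhost:9092

Note that Kafka requires each listener to use a different port, so the two listeners above cannot both stay on 9092; one of them has to move to another port.

The following SSL settings are needed on the broker side: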

ssl.keystore.location=/home/lighthouse/server.keystore.jks

ssl.keystore.password=test1234

ssl.key.password=test1234

ssl.truststore.location=/home/lighthouse/server.truststore.jks

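ssl.truststore.password=test1234

Note: ssl.truststore.password is technically optional but highly recommended. If no password is set, the truststore can still be accessed, but integrity checking is disabled.

Optional settings worth considering: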

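- ssl.client.auth=none ("required" means client authentication is required; "requested" means client authentication is requested, but a client without a certificate can still connect. Using "requested" is discouraged because it gives a false sense of security, and misconfigured clients will still connect successfully.)

- ssl.cipher.suites (optional). A cipher suite is a named combination of authentication, encryption, MAC and key exchange algorithms used to negotiate the security settings for a network connection using the TLS or SSL network protocol. (The default is an empty list.)

- ssl.enabled.protocols=TLSv1.2,TLSv1.1,TLSv1 (the SSL protocols to accept from clients. Note that SSL is deprecated in favor of TLS, and using SSL in production is not recommended.)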

- ssl.keystore.type=JKS

- ssl.truststore.type=JKS

- ssl.secure.random.implementation=SHA1PRNG
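Since every listener needs its own port, a quick sanity check on the `listeners` value before starting the broker can save a restart. The helper below, `duplicate_listener_ports`, is a hypothetical name of mine; it only splits the value on commas and reports any port that appears more than once.

```shell
# Hypothetical helper: print every port that occurs more than once in a
# listeners= value such as "PLAINTEXT://localhost:9092,SSL://localhost:9093".
duplicate_listener_ports() {
  # One listener per line, keep only the text after the last colon (the port),
  # then report ports that occur more than once.
  printf '%s\n' "$1" | tr ',' '\n' | sed 's/.*://' | sort | uniq -d
}

duplicate_listener_ports "PLAINTEXT://localhost:9092,SSL://localhost:9092"   # prints 9092
duplicate_listener_ports "PLAINTEXT://localhost:9092,SSL://localhost:9093"   # prints nothing
```

To run it against a real file, the value can be pulled out first, for example with `grep '^listeners=' config/server.properties | cut -d= -f2-`, assuming the property is set on a single uncommented line.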

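To enable SSL for inter-broker communication, add the following to server.properties (the default is PLAINTEXT):

security.inter.broker.protocol=SSL

- 5. Configure Kafka clients

SSL is supported only by the new Kafka producer and consumer; the older APIs are not supported. The SSL configuration is the same for the producer and the consumer.

If client authentication is not required by the broker, the following is a minimal configuration example:

security.protocol=SSL

ssl.truststore.location=/var/private/ssl/client.truststore.jks

ssl.truststore.password=test1234

Note: ssl.truststore.password is technically optional but highly recommended. If no password is set, the truststore can still be accessed, but integrity checking is disabled.

If client authentication is required, a keystore must be created as in step 1, and the following must also be configured: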

ssl.keystore.location=/var/private/ssl/client.keystore.jks

ssl.keystore.password=test1234

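ssl.key.password=test1234

Depending on your requirements and the broker configuration, other settings may also be needed:

- ssl.provider (optional). The name of the security provider used for SSL connections. The default is the default security provider of the JVM.

- ssl.cipher.suites (optional). A cipher suite is a named combination of authentication, encryption, MAC and key exchange algorithms used to negotiate the security settings for a network connection using the TLS or SSL network protocol.

- ssl.enabled.protocols=TLSv1.2,TLSv1.1,TLSv1. It should list at least one of the protocols configured on the broker side.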

- ssl.truststore.type=JKS

- ssl.keystore.type=JKS

The client-ssl.properties file shared by the producer and the consumer contains the following:
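The file contents did not survive in this post. Based on the minimal client settings listed above, it would look roughly like the sketch below. The truststore path is taken from the error output further down and the password from the sample values in this post, so treat both as assumptions rather than the exact file I used.

```shell
# Sketch only: the path and the password are assumptions taken from the
# error output later in this post and the sample values above.
mkdir -p config
cat > config/client-ssl.properties <<'EOF'
security.protocol=SSL
ssl.truststore.location=/home/lighthouse/client.truststore.jks
ssl.truststore.password=test1234
EOF

# Needed only when the broker sets ssl.client.auth=required:
# ssl.keystore.location=/home/lighthouse/client.keystore.jks
# ssl.keystore.password=test1234
# ssl.key.password=test1234
```

The passwords here must match the ones given to keytool when the stores were created; a mismatch shows up as a "keystore password was incorrect" error when the client starts.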

Example using console-producer and console-consumer:

./bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test --producer.config ./config/client-ssl.properties

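./bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test --consumer.config ./config/client-ssl.properties

Running these commands failed with an error, so I also had to run the following commands in my home directory so that the client trusts the CA:

keytool -keystore client.truststore.jks -alias CARoot -import -file ca-cert

keytool -keystore client.keystore.jks -alias CARoot -import -file ca-cert

If the password is wrong, the following error is reported as well: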

lighthouse@VM-8-10-ubuntu:~/kafkaWithZk/kafka_2.12-2.2.1$ ./bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test --producer.config ./config/client-ssl.properties

org.apache.kafka.common.KafkaException: Failed to construct kafka producer

at org.apache.kafka.clients.producer.KafkaProducer.<init>(KafkaProducer.java:431)

at org.apache.kafka.clients.producer.KafkaProducer.<init>(KafkaProducer.java:299)

at kafka.tools.ConsoleProducer$.main(ConsoleProducer.scala:44)

at kafka.tools.ConsoleProducer.main(ConsoleProducer.scala)

Caused by: org.apache.kafka.common.KafkaException: org.apache.kafka.common.KafkaException: org.apache.kafka.common.KafkaException: Failed to load SSL keystore /home/lighthouse/client.truststore.jks of type JKS

at org.apache.kafka.common.network.SslChannelBuilder.configure(SslChannelBuilder.java:73)

at org.apache.kafka.common.network.ChannelBuilders.create(ChannelBuilders.java:146)

at org.apache.kafka.common.network.ChannelBuilders.clientChannelBuilder(ChannelBuilders.java:67)

at org.apache.kafka.clients.ClientUtils.createChannelBuilder(ClientUtils.java:99)

at org.apache.kafka.clients.producer.KafkaProducer.newSender(KafkaProducer.java:439)

at org.apache.kafka.clients.producer.KafkaProducer.<init>(KafkaProducer.java:420)

... 3 more

Caused by: org.apache.kafka.common.KafkaException: org.apache.kafka.common.KafkaException: Failed to load SSL keystore /home/lighthouse/client.truststore.jks of type JKS

at org.apache.kafka.common.security.ssl.SslFactory.configure(SslFactory.java:144)

at org.apache.kafka.common.network.SslChannelBuilder.configure(SslChannelBuilder.java:71)

... 8 more

Caused by: org.apache.kafka.common.KafkaException: Failed to load SSL keystore /home/lighthouse/client.truststore.jks of type JKS

at org.apache.kafka.common.security.ssl.SslFactory$SecurityStore.load(SslFactory.java:357)

at org.apache.kafka.common.security.ssl.SslFactory.createSSLContext(SslFactory.java:248)

at org.apache.kafka.common.security.ssl.SslFactory.configure(SslFactory.java:141)

... 9 more

Caused by: java.io.IOException: keystore password was incorrect

at java.base/sun.security.pkcs12.PKCS12KeyStore.engineLoad(PKCS12KeyStore.java:2092)

at java.base/sun.security.util.KeyStoreDelegator.engineLoad(KeyStoreDelegator.java:243)

at java.base/java.security.KeyStore.load(KeyStore.java:1479)

at org.apache.kafka.common.security.ssl.SslFactory$SecurityStore.load(SslFactory.java:354)

... 11 more

Caused by: java.security.UnrecoverableKeyException: failed to decrypt safe contents entry: javax.crypto.BadPaddingException: Given final block not properly padded. Such issues can arise if a bad key is used during decryption.

... 15 more

I then double-checked the settings in client-ssl.properties (including the passwords) and started the producer again, which produced the following error:

lighthouse@VM-8-10-ubuntu:~/kafkaWithZk/kafka_2.12-2.2.1$ ./bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test --producer.config ./config/client-ssl.properties

>[2024-03-19 13:42:49,783] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:49,835] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:49,937] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:50,140] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:50,543] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:51,298] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:52,203] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:53,158] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:54,264] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:55,220] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 13:42:56,376] WARN [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

^C^Clighthouse@VM-8-10-ubuntu:~/kafkaWithZk/kafka_2.12-2.2.1$

After checking the passwords in server.properties, starting Kafka still failed. The key part of the error output was:

[2024-03-19 14:34:31,955] INFO [SocketServer brokerId=0] Failed authentication with /127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 14:34:31,957] WARN SSL handshake failed (kafka.utils.CoreUtils$)

org.apache.kafka.common.errors.SslAuthenticationException: SSL handshake failed

Caused by: javax.net.ssl.SSLHandshakeException: No name matching localhost found

at java.base/sun.security.ssl.Alert.createSSLException(Alert.java:131)

at java.base/sun.security.ssl.TransportContext.fatal(TransportContext.java:360)

at java.base/sun.security.ssl.TransportContext.fatal(TransportContext.java:303)

at java.base/sun.security.ssl.TransportContext.fatal(TransportContext.java:298)

at java.base/sun.security.ssl.CertificateMessage$T12CertificateConsumer.checkServerCerts(CertificateMessage.java:654)

at java.base/sun.security.ssl.CertificateMessage$T12CertificateConsumer.onCertificate(CertificateMessage.java:473)

at java.base/sun.security.ssl.CertificateMessage$T12CertificateConsumer.consume(CertificateMessage.java:369)

at java.base/sun.security.ssl.SSLHandshake.consume(SSLHandshake.java:392)

at java.base/sun.security.ssl.HandshakeContext.dispatch(HandshakeContext.java:443)

at java.base/sun.security.ssl.SSLEngineImpl$DelegatedTask$DelegatedAction.run(SSLEngineImpl.java:1076)

at java.base/sun.security.ssl.SSLEngineImpl$DelegatedTask$DelegatedAction.run(SSLEngineImpl.java:1063)

at java.base/java.security.AccessController.doPrivileged(Native Method)

at java.base/sun.security.ssl.SSLEngineImpl$DelegatedTask.run(SSLEngineImpl.java:1010)

at org.apache.kafka.common.network.SslTransportLayer.runDelegatedTasks(SslTransportLayer.java:402)

at org.apache.kafka.common.network.SslTransportLayer.handshakeUnwrap(SslTransportLayer.java:484)

at org.apache.kafka.common.network.SslTransportLayer.doHandshake(SslTransportLayer.java:340)

at org.apache.kafka.common.network.SslTransportLayer.handshake(SslTransportLayer.java:265)

at org.apache.kafka.common.network.KafkaChannel.prepare(KafkaChannel.java:170)

at org.apache.kafka.common.network.Selector.pollSelectionKeys(Selector.java:547)

at org.apache.kafka.common.network.Selector.poll(Selector.java:483)

at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:535)

at org.apache.kafka.clients.NetworkClientUtils.awaitReady(NetworkClientUtils.java:74)

at kafka.server.KafkaServer.doControlledShutdown$1(KafkaServer.scala:510)

at kafka.server.KafkaServer.controlledShutdown(KafkaServer.scala:563)

at kafka.server.KafkaServer.$anonfun$shutdown$2(KafkaServer.scala:585)

at kafka.utils.CoreUtils$.swallow(CoreUtils.scala:86)

at kafka.server.KafkaServer.shutdown(KafkaServer.scala:585)

at kafka.server.KafkaServerStartable.shutdown(KafkaServerStartable.scala:48)

at kafka.Kafka$$anon$1.run(Kafka.scala:72)

Caused by: java.security.cert.CertificateException: No name matching localhost found

at java.base/sun.security.util.HostnameChecker.matchDNS(HostnameChecker.java:234)

at java.base/sun.security.util.HostnameChecker.match(HostnameChecker.java:103)

at java.base/sun.security.ssl.X509TrustManagerImpl.checkIdentity(X509TrustManagerImpl.java:461)

at java.base/sun.security.ssl.X509TrustManagerImpl.checkIdentity(X509TrustManagerImpl.java:435)

at java.base/sun.security.ssl.X509TrustManagerImpl.checkTrusted(X509TrustManagerImpl.java:283)

at java.base/sun.security.ssl.X509TrustManagerImpl.checkServerTrusted(X509TrustManagerImpl.java:141)

at java.base/sun.security.ssl.CertificateMessage$T12CertificateConsumer.checkServerCerts(CertificateMessage.java:632)

... 24 more

[2024-03-19 14:34:31,957] ERROR [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) failed authentication due to: SSL handshake failed (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:31,960] INFO [/config/changes-event-process-thread]: Shutting down (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2024-03-19 14:34:31,961] INFO [/config/changes-event-process-thread]: Shutdown completed (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2024-03-19 14:34:31,961] INFO [/config/changes-event-process-thread]: Stopped (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2024-03-19 14:34:31,962] INFO [SocketServer brokerId=0] Stopping socket server request processors (kafka.network.SocketServer)

[2024-03-19 14:34:31,979] INFO [SocketServer brokerId=0] Stopped socket server request processors (kafka.network.SocketServer)

[2024-03-19 14:34:31,980] INFO [data-plane Kafka Request Handler on Broker 0], shutting down (kafka.server.KafkaRequestHandlerPool)

[2024-03-19 14:34:31,988] INFO [data-plane Kafka Request Handler on Broker 0], shut down completely (kafka.server.KafkaRequestHandlerPool)

[2024-03-19 14:34:31,995] INFO [KafkaApi-0] Shutdown complete. (kafka.server.KafkaApis)

[2024-03-19 14:34:31,997] INFO [ExpirationReaper-0-topic]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,059] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

^C[2024-03-19 14:34:32,114] INFO Terminating process due to signal SIGINT (org.apache.kafka.common.utils.LoggingSignalHandler)

[2024-03-19 14:34:32,132] INFO [ExpirationReaper-0-topic]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,132] INFO [ExpirationReaper-0-topic]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,134] INFO [TransactionCoordinator id=0] Shutting down. (kafka.coordinator.transaction.TransactionCoordinator)

[2024-03-19 14:34:32,135] INFO [ProducerId Manager 0]: Shutdown complete: last producerId assigned 1000 (kafka.coordinator.transaction.ProducerIdManager)

[2024-03-19 14:34:32,136] INFO [Transaction State Manager 0]: Shutdown complete (kafka.coordinator.transaction.TransactionStateManager)

[2024-03-19 14:34:32,136] INFO [Transaction Marker Channel Manager 0]: Shutting down (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2024-03-19 14:34:32,139] INFO [Transaction Marker Channel Manager 0]: Stopped (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2024-03-19 14:34:32,140] INFO [Transaction Marker Channel Manager 0]: Shutdown completed (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2024-03-19 14:34:32,141] INFO [TransactionCoordinator id=0] Shutdown complete. (kafka.coordinator.transaction.TransactionCoordinator)

[2024-03-19 14:34:32,141] INFO [GroupCoordinator 0]: Shutting down. (kafka.coordinator.group.GroupCoordinator)

[2024-03-19 14:34:32,144] INFO [ExpirationReaper-0-Heartbeat]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,160] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,261] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,344] INFO [ExpirationReaper-0-Heartbeat]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,344] INFO [ExpirationReaper-0-Heartbeat]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,344] INFO [ExpirationReaper-0-Rebalance]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,362] INFO [ExpirationReaper-0-Rebalance]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,362] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,363] INFO [ExpirationReaper-0-Rebalance]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,363] INFO [GroupCoordinator 0]: Shutdown complete. (kafka.coordinator.group.GroupCoordinator)

[2024-03-19 14:34:32,364] INFO [ReplicaManager broker=0] Shutting down (kafka.server.ReplicaManager)

[2024-03-19 14:34:32,364] INFO [LogDirFailureHandler]: Shutting down (kafka.server.ReplicaManager$LogDirFailureHandler)

[2024-03-19 14:34:32,366] INFO [LogDirFailureHandler]: Stopped (kafka.server.ReplicaManager$LogDirFailureHandler)

[2024-03-19 14:34:32,366] INFO [LogDirFailureHandler]: Shutdown completed (kafka.server.ReplicaManager$LogDirFailureHandler)

[2024-03-19 14:34:32,368] INFO [ReplicaFetcherManager on broker 0] shutting down (kafka.server.ReplicaFetcherManager)

[2024-03-19 14:34:32,369] INFO [ReplicaFetcherManager on broker 0] shutdown completed (kafka.server.ReplicaFetcherManager)

[2024-03-19 14:34:32,370] INFO [ReplicaAlterLogDirsManager on broker 0] shutting down (kafka.server.ReplicaAlterLogDirsManager)

[2024-03-19 14:34:32,370] INFO [ReplicaAlterLogDirsManager on broker 0] shutdown completed (kafka.server.ReplicaAlterLogDirsManager)

[2024-03-19 14:34:32,370] INFO [ExpirationReaper-0-Fetch]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,463] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,492] INFO [ExpirationReaper-0-Fetch]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,492] INFO [ExpirationReaper-0-Fetch]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,492] INFO [ExpirationReaper-0-Produce]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,564] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,666] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,674] INFO [ExpirationReaper-0-Produce]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,674] INFO [ExpirationReaper-0-Produce]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,674] INFO [ExpirationReaper-0-DeleteRecords]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,692] INFO [ExpirationReaper-0-DeleteRecords]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,692] INFO [ExpirationReaper-0-DeleteRecords]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,693] INFO [ExpirationReaper-0-ElectPreferredLeader]: Shutting down (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,768] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,870] WARN [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2024-03-19 14:34:32,893] INFO [ExpirationReaper-0-ElectPreferredLeader]: Shutdown completed (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,893] INFO [ExpirationReaper-0-ElectPreferredLeader]: Stopped (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2024-03-19 14:34:32,897] INFO [ReplicaManager broker=0] Shut down completely (kafka.server.ReplicaManager)

[2024-03-19 14:34:32,898] INFO Shutting down. (kafka.log.LogManager)

[2024-03-19 14:34:32,934] INFO Shutdown complete. (kafka.log.LogManager)

[2024-03-19 14:34:32,960] INFO [ZooKeeperClient] Closing. (kafka.zookeeper.ZooKeeperClient)

[2024-03-19 14:34:32,964] INFO Session: 0x100cd6124170002 closed (org.apache.zookeeper.ZooKeeper)

[2024-03-19 14:34:32,966] INFO EventThread shut down for session: 0x100cd6124170002 (org.apache.zookeeper.ClientCnxn)

[2024-03-19 14:34:32,966] INFO [ZooKeeperClient] Closed. (kafka.zookeeper.ZooKeeperClient)

[2024-03-19 14:34:32,968] INFO [ThrottledChannelReaper-Fetch]: Shutting down (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,168] INFO [ThrottledChannelReaper-Fetch]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,168] INFO [ThrottledChannelReaper-Fetch]: Shutdown completed (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,168] INFO [ThrottledChannelReaper-Produce]: Shutting down (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,170] INFO [ThrottledChannelReaper-Produce]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,170] INFO [ThrottledChannelReaper-Produce]: Shutdown completed (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:33,170] INFO [ThrottledChannelReaper-Request]: Shutting down (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

^C[2024-03-19 14:34:33,740] INFO Terminating process due to signal SIGINT (org.apache.kafka.common.utils.LoggingSignalHandler)

^C[2024-03-19 14:34:33,972] INFO Terminating process due to signal SIGINT (org.apache.kafka.common.utils.LoggingSignalHandler)

[2024-03-19 14:34:34,170] INFO [ThrottledChannelReaper-Request]: Stopped (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:34,170] INFO [ThrottledChannelReaper-Request]: Shutdown completed (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2024-03-19 14:34:34,172] INFO [SocketServer brokerId=0] Shutting down socket server (kafka.network.SocketServer)

^C[2024-03-19 14:34:34,204] INFO [SocketServer brokerId=0] Shutdown completed (kafka.network.SocketServer)

[2024-03-19 14:34:34,204] INFO Terminating process due to signal SIGINT (org.apache.kafka.common.utils.LoggingSignalHandler)

[2024-03-19 14:34:34,206] INFO [KafkaServer id=0] shut down completed (kafka.server.KafkaServer)

I then cleaned up the ca-*, cert-*, client-* and server-* files and regenerated the keys, certificates, CA and signatures as follows:

1. Generate the SSL keys and certificates

keytool -keystore server.keystore.jks -alias localhost -validity 700 -genkey -keyalg RSA

keytool -keystore server.truststore.jks -alias localhost -validity 700 -genkey -keyalg RSA

keytool -keystore client.keystore.jks -alias localhost -validity 700 -genkey -keyalg RSA

keytool -keystore client.truststore.jks -alias localhost -validity 700 -genkey -keyalg RSA

2. Create my own CA

openssl req -new -x509 -keyout ca-key -out ca-cert -days 700

keytool -keystore server.keystore.jks -alias localhost -certreq -file cert-file

openssl x509 -req -CA ca-cert -CAkey ca-key -in cert-file -out cert-signed -days 700 -CAcreateserial -passin pass:123456

3. Sign the certificates

keytool -keystore server.keystore.jks -alias CARoot -import -file ca-cert

keytool -keystore server.keystore.jks -alias localhost -import -file cert-signed

keytool -keystore server.truststore.jks -alias CARoot -import -file ca-cert

keytool -keystore client.keystore.jks -alias CARoot -import -file ca-cert

keytool -keystore client.truststore.jks -alias CARoot -import -file ca-cert

I started ZooKeeper and Kafka again and ran the producer command, but it still failed:

[2024-03-19 17:52:38,773] INFO [SocketServer brokerId=0] Failed authentication with /127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:38,876] INFO [Controller id=0, targetBrokerId=0] Failed authentication with localhost/127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:38,876] ERROR [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) failed authentication due to: SSL handshake failed (org.apache.kafka.clients.NetworkClient)

[2024-03-19 17:52:38,876] INFO [SocketServer brokerId=0] Failed authentication with /127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:38,979] INFO [Controller id=0, targetBrokerId=0] Failed authentication with localhost/127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:38,980] ERROR [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) failed authentication due to: SSL handshake failed (org.apache.kafka.clients.NetworkClient)

[2024-03-19 17:52:38,980] INFO [SocketServer brokerId=0] Failed authentication with /127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:39,083] INFO [Controller id=0, targetBrokerId=0] Failed authentication with localhost/127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:39,083] INFO [SocketServer brokerId=0] Failed authentication with /127.0.0.1 (SSL handshake failed) (org.apache.kafka.common.network.Selector)

[2024-03-19 17:52:39,083] ERROR [Controller id=0, targetBrokerId=0] Connection to node 0 (localhost/127.0.0.1:9092) failed authentication due to: SSL handshake failed (org.apache.kafka.clients.NetworkClient)
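This last log no longer shows the underlying cause, but the earlier run failed with "No name matching localhost found", which is what Java's host name check reports when the certificate presented by the broker does not carry the name the client connected to. One thing worth checking (a guess on my side, not a confirmed diagnosis) is the subject of the certificate in server.keystore.jks. The helper below, `owner_cn`, is a hypothetical name; it pulls the CN out of the `Owner:` lines of `keytool -list -v` output and assumes keytool is printing in English.

```shell
# Hypothetical helper: print the CN found on each "Owner:" line of
# keytool -list -v output read from standard input.
owner_cn() {
  sed -n 's/^Owner:.*CN=\([^,]*\).*/\1/p'
}

# Example on a captured line.
printf 'Owner: CN=localhost, OU=dev, O=example\n' | owner_cn   # prints localhost

# Against a real keystore (the password is an assumed placeholder).
if command -v keytool >/dev/null 2>&1 && [ -f server.keystore.jks ]; then
  keytool -list -v -keystore server.keystore.jks -storepass test1234 | owner_cn
fi
```

If none of the printed names is the host used in the listeners (localhost here), regenerating the key pair with a matching name is the cleaner fix. For a throwaway test setup, the Kafka documentation also describes turning host name verification off by setting ssl.endpoint.identification.algorithm to an empty string, in the client configuration and, for inter-broker connections, in server.properties.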