PostgreSQL版本为8.4.1

(本文为《PostgreSQL数据库内核分析》一书的总结笔记,需要电子版的可私信我)

索引篇:

- PostgreSQL索引篇 | BTree

- PostgreSQL索引篇 | GIN索引

- PostgreSQL索引篇 | Gist索引

- PostgreSQL索引篇 | TSearch2 全文搜索

Hash索引

在实际的数据库系统中,除了B-Tree外,还有多种数据结构可做索引,Hash表就是其中的一种。通过Hash表可以把键值“分配”到各个桶中。使用这种结构的索引称为Hash索引,其核心是构建Hash表。

在Hash表中,有一个Hash函数h,它以查找键为参数,并计算出一个介于0到B-1的数值,其中B是桶的数目。如果记录的查找键为K,那么该记录将被放在编号为h(K)的桶中。

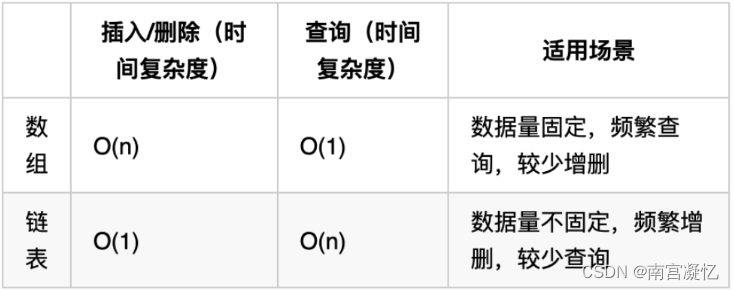

一般有两种方法实现Hash表:

-

静态Hash表:桶数目B不变。

-

动态Hash表:允许B改变,使B近似于记录总数除以一个块中能容纳的记录数所得到的商。也就是说,每个桶大约有一个存储块。

-

动态Hash表又可分为两种:

-

①可扩展Hash表:它在简单的静态Hash表结构上进行了扩充,其特点如下:

- 用一个指向数据块的指针数组来表示桶,而不是用数据块本身组成的数组来表示桶。

- 指针数组能增长,它的长度总是2的幂,因而数组每增长一次,桶的数目就翻倍。但并非每个桶都有数据块;如果某些桶的所有记录都可以放在一个块中,那么这些桶可能共享一个块。

- Hash函数h为每个键计算出一个K位二进制序列,该K值足够大,比如32。但是,无论何时桶的数目都使用从序列第一位开始的若干位来表示,位的数值等于K。比如,使用了r位,则这时桶中将有 2^r 个项。

-

②线性Hash表:它的桶的增长较为缓慢,具有如下特点:

-

桶数目B的选择总是使存储块的平均记录数与每个存储块所能容纳的记录总数保持一个固定的比例,例如80%。

假设每个磁盘块能存放两条记录,r为当前Hash表中总的记录数,B为当前的桶数目,那么r≤1.6B。(块的平均记录数=r/块数,块能容纳的记录总数=2 ,则r/2*B≤80%)

-

由于存储块并不是总能分裂,所以允许有溢出块。

-

用来做桶数组项序号的二进制位数是 [log2 B],其中B是当前的桶数。这些位总是从Hash函数得到的位序列的右(低位)端开始取。

-

假定Hash函数值的i位正在用来给桶数组项编号,且有一个键值为K的记录想要插入到编号为a1a2……ai的桶中,即a1a2…ai是h(K)的后i位。那么,a1a2…ai当作二进制数,设它为m。

- 如果 m<B,那么编号为m的桶存在并把记录存入该桶中。

- 如果 B≤m<2i,那么桶m还不存在,因此把记录存入桶(m-2i-1)中,也就是把a1改为0时对应的桶。

-

-

-

在PostgreSQL系统中使用的是动态Hash表,桶的个数随着分裂次数的增加而增加,但每次分裂后,所有增加的桶并不会立即使用,而只是使用需要存入元组的桶,其他新增加的桶都被保留。这种方式能够有效地减少重新分配元组到桶中的工作量,提高效率。

下面将从Hash索引的页面组织结构和实现流程两方面详细分析。

Hash索引的组织结构

在PostgreSQL 8.4.1的实现中包含两种类型的Hash表:

- 一个是用做索引的外存Hash表

- 另一个是用于内部数据查找的内存Hash表(在第3章中已多次提到)。

本节主要对前者进行分析,其源代码在src/access/hash目录中,它采用的是线性Hash表的一个实现。

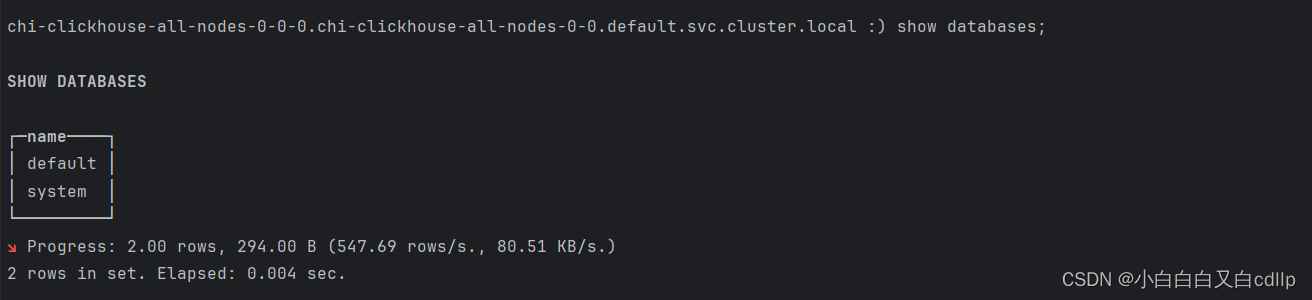

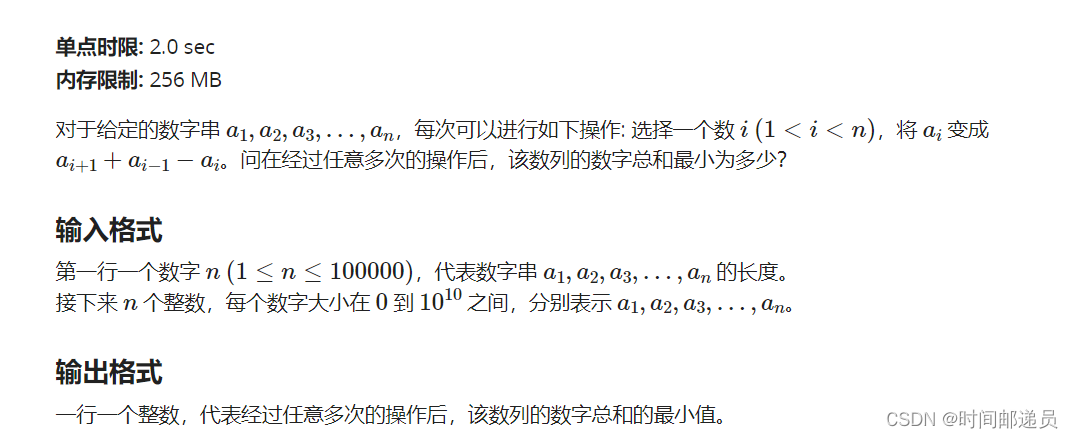

在Hash表中有四种不同类型的页面,分别为元页、桶页、溢出页、位图页,这4种页面的结构如图4-13所示。

Hash索引的页面结构也是按照图3-4所示的结构来组织的,

对于每种页面末尾的**“Special Space”,Hash索引填充的是HashPageOpaqueData结构**。其定义如数据结构4.10所示。

元页

每个Hash索引都有一个元页,它是该索引中的0号页(也就是第1页,这里的编号是C语言的编号习惯),其HashPageOpaqueData.hasho_bucket为-1,表示它不属于任何桶。字段hasho_prevblkno和hasho_nextblkno均置为InvalidBlockNumber。

元页中记录了Hash的版本号、Hash索引记录的索引元组数目、桶的信息、位图等相关信息。

通过元页可以了解该Hash索引在总体上的分配和使用情况,在索引元组的插入、溢出页的分配回收以及Hash表的扩展等过程中,都需要使用元页。

其结构及各数据成员的意义如数据结构HashMetaPageData所示:

其中的字段说明如下:

hashm_bsize:字段实际保存的是一个桶页中用于存放索引元组的空间大小(按字节),由于使用一个磁盘块作为一个桶页,该值为“磁盘块所占空间(8k)- PageHeaderData所占空间- HashPageOpaqueData所占空间”。hashm_firstfree:表示当前可能空闲的最小溢出页号,注意是可能的,并不一定就是空闲的。在查找空闲的溢出页时,还要对该页进行核查。hashm_spares[HASH_MAX_SPLITTPOINTS]:保存了截止到各个分裂点时整个Hash索引分配的溢出页总数。当splitpoint 增加后(即产生了一次新的分裂),在之前保存在这个数组中的值就不能再改变,即不管在该分裂点之前分配的溢出页是否回收都不会修改hashm_spares数组中的值。HASH_MAX_SPLITPOINTS的最大值为32,即最多可分裂32次。hashm_mapp[HASH_MAX_BITMAPS]:记录了各个位图的块号,HASH_MAX_BITMAPS的最大值为128,即最多可分配128块位图。通过这个数组,能够快速找到各个位图的块号。

从上面的结构定义可以看出,元页记录了Hash索引的基本信息,每次对Hash索引进行读写操作时都要将元页读入内存,并对其进行更新。

Hash索引初始化的时候会将已知的关于即将要建立的索引的信息都写到元页中去。

桶页

【Hash表由多个桶组成,每个桶由一个或多个页组成,每个桶的第一页称为桶页,其他页称为溢出页,桶页随着桶的建立而建立】

桶页结构中的HashPageOpaqueData.hasho_bucket为该桶的标识,若该桶有溢出页,字段hasho_nextblkno指向该溢出页,用于构建桶页的链结构,实现快速查找。

桶页是成组分配的,每次分配的数目都是2的幂次。0号和1号桶页是在Hash表初始化的时候分配的,当splitpoint为1时(进行第一次分裂),将会同时分配2号和3号桶页。当需要第5个桶时,也就是splitpoint增加到2时,同时分配4 7号桶页。当splitpoint增加到3时,同时分配815号桶页,以此类推。

每次分配的桶页在磁盘上都是连续存储的,通过这种方式,可以快速了解到各个桶是在哪一次分裂时产生的及其磁盘块号。

之前讲到当splitpoint增加1的时候,并不是立即使用所增加的桶页,而是根据需要分裂一个桶页中的元组到两个桶页(原来的桶页+新增加的桶)中。例如,当splitpoint增加到2时,并不会立即使用4~7号桶页,可能先会使用4号桶,5、6和7号桶现在并没有使用但保留着,当4号桶填满需要增加溢出页时,并不会将溢出页分配在4号桶页之后,而是7号桶页的后面,因为5、6和7号桶的磁盘已经在4号桶之后连续分配。这样一种分配方式可以保证前面提到的**“每次分裂产生的桶页,都是连续存储”**的原则,这也为下面将要介绍的计算桶所对应的磁盘块号的方法提供了基础。

在每一个splitpoint,首先分配(预留)2^splitpoint个桶页,然后在这些桶页的后面,再根据需求分配一定数目的溢出页,这个数目将由数组hashm_spares记录。

当splitpoint 增加1的时候,再分配2^splitpoint个桶页,以此类推,这样的实现机制使得很容易求得页面在磁盘中的块号。

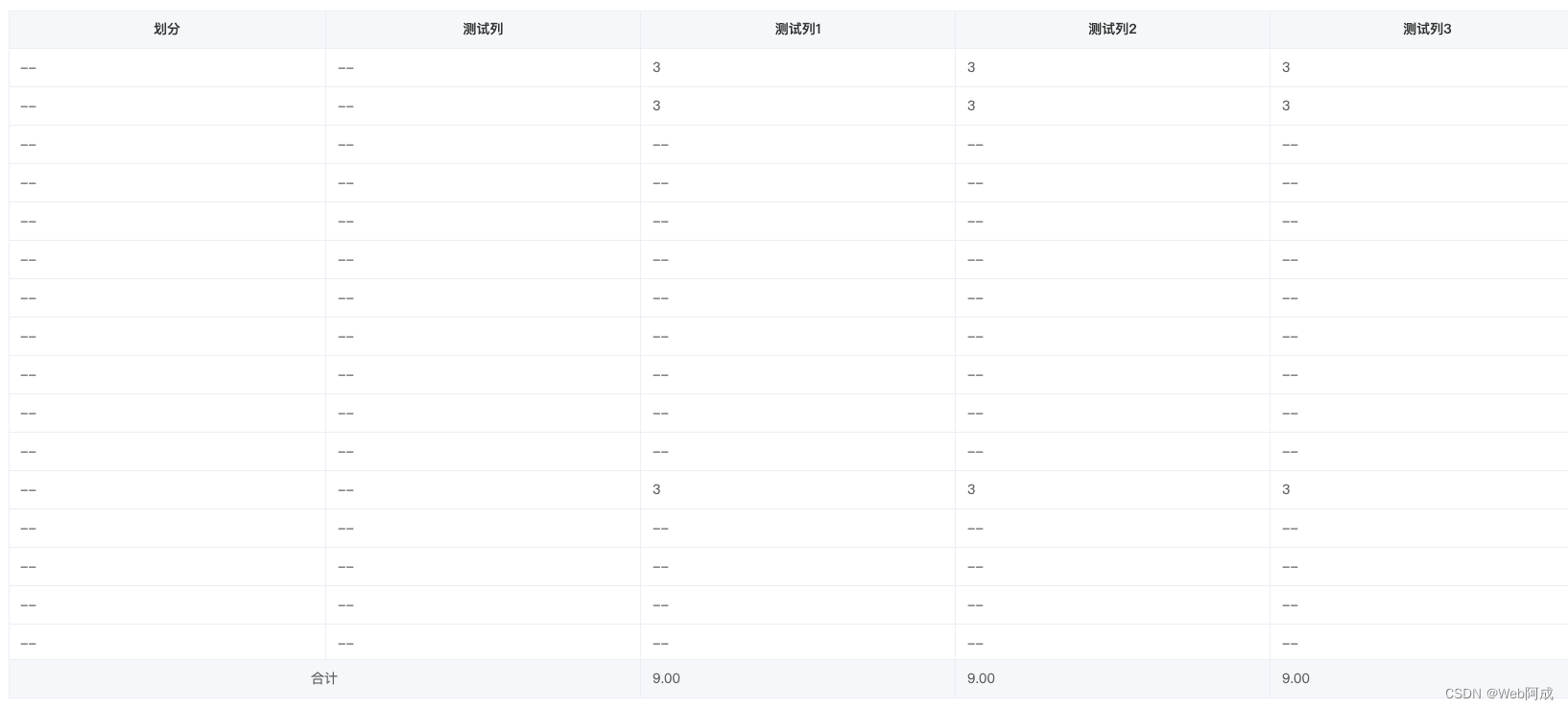

len(hashm_spares)=splitpoint+1; hashm_spares[splitpoint]=n; 从第1次分裂到第splitpoint次分裂后共n个溢出页

下面是书上求块号的公式如下:(有误)

我觉得,

- pagenum=0时,blocknum=1就是因为原先存在一个元页(bloknum=0)

- pagenum≠1时,blocknum=第spiltpoint次分裂后已分配的所有桶的数量下标(2^(spiltpoint+1)-1)+【溢出页的数量(hashm_spares[splitpoint])+位图页的数量(k)】+元页(1)

metap->hashm_spares[splitnum]++;// 计算新增溢出页的blocknum前,先加1

// _hash_getovflpage

/*

* No free pages --- have to extend the relation to add an overflow page.

* First, check to see if we have to add a new bitmap page too.

*/

if (last_bit == (uint32) (BMPGSZ_BIT(metap) - 1))

{

/*

* We create the new bitmap page with all pages marked "in use".

* Actually two pages in the new bitmap's range will exist

* immediately: the bitmap page itself, and the following page which

* is the one we return to the caller. Both of these are correctly

* marked "in use". Subsequent pages do not exist yet, but it is

* convenient to pre-mark them as "in use" too.

*/

bit = metap->hashm_spares[splitnum];

_hash_initbitmap(rel, metap, bitno_to_blkno(metap, bit));

metap->hashm_spares[splitnum]++;// 计算新增溢出页的blocknum前,先加1

}

/* Calculate address of the new overflow page 然后再取计算blocknum*/

bit = metap->hashm_spares[splitnum];

blkno = bitno_to_blkno(metap, bit);

可以由桶页的页号pagenum求出其在在磁盘的块号blocknum。

溢出页

如果某个元组在它所属的桶中放不下的时候,就需要给该桶增加一个溢出页。溢出页和桶页之间是通过双向链链接起来的,即每一页都记录了前一页的块号(prevblkno)和后一页的块号(nextblkno),这个双向链保存在HashPageOpaqueData结构中。当溢出页分配给某个桶页使用时,溢出页的HashPageOpaqueData.hasho_bucket将设置为桶页的块号。

前面说过,在每次扩展Hash表后,都会给该次扩展预留桶的编号(不管是否实际已经分配)。所以,分配的溢出页的磁盘号都是在预留的桶之后的。同时,在元页中使用数组hashm_spares记录了在各次桶的扩展时分配的溢出页数,从而能够快速地计算出各个桶页的块号。当splitpoint 的值由k增加到k +1时,hashm_spares [k]的值就不再允许更改,以保证能够有效地找到每次扩展的桶。也就是说,溢出页一旦分配,便一直存在,即使是被回收也只是标记它为空闲,并没有释放其物理空间。

当该桶存放的记录已经达到饱和时,以后插入到该桶中的记录会添加到该桶的溢出页中,而溢出页通过双向指针与桶页链接,可灵活实现查找、删除等操作。

位图

位图用于管理Hash索引的溢出页和位图页本身的使用情况。如果某个溢出页上的元组都被移除或删除,就要将此溢出页回收,但并不把它还给操作系统,而是继续由PostgreSQL 管理,以便下次需要溢出页的时候使用。因此,需要一种机制来记录每一个溢出页是否可用,这时便用到了位图。

位图数量=溢出页数量/位图大小+(溢出页数量%位图大小)向上取整

所有位图页的HashPaageOpaqueData.hasho_bucket都为-1,HashPageOpaqueData.hasho_prevblkno和hasho_nextblkno均置为InvalidBlockNumber(无效),通过元页中的hashm_mapp数组可以找到位图对应的块号。

splitpoint=找到的m

blocknum=第spiltpoint次分裂后已分配的所有桶的数量(2^(spiltpoint+1)-1)+元页+溢出页的数量(hashm_spares[splitpoint])+位图页的数量(k)+1

Hash表的构建

正常情况下,构建一个Hash索引时,首先要初始化该索引的元页、桶以及位图页。之后,调用扫描函数对待索引的表进行扫描,生成对应的索引元组,最后将这些索引元组插入到Hash表中。

PostgreSQL 8.4.1使用全局变量来记录所创建的Hash索引,它提供了一个外部函数hashbuild来建立Hash索引,函数执行的流程如图4-16所示。

函数hashbuild

Datum

hashbuild(PG_FUNCTION_ARGS)

{

Relation heap = (Relation) PG_GETARG_POINTER(0);

Relation index = (Relation) PG_GETARG_POINTER(1);

IndexInfo *indexInfo = (IndexInfo *) PG_GETARG_POINTER(2);

IndexBuildResult *result;

BlockNumber relpages;

double reltuples;

uint32 num_buckets;

HashBuildState buildstate;

/*

* We expect to be called exactly once for any index relation. If that's

* not the case, big trouble's what we have.

*/

if (RelationGetNumberOfBlocks(index) != 0)

elog(ERROR, "index \"%s\" already contains data",

RelationGetRelationName(index));

/* Estimate the number of rows currently present in the table */

estimate_rel_size(heap, NULL, &relpages, &reltuples);

/*

Initialize the hash index metadata page and initial buckets

初始化元页

*/

num_buckets = _hash_metapinit(index, reltuples);

/*

* If we just insert the tuples into the index in scan order, then

* (assuming their hash codes are pretty random) there will be no locality

* of access to the index, and if the index is bigger than available RAM

* then we'll thrash horribly. To prevent that scenario, we can sort the

* tuples by (expected) bucket number. However, such a sort is useless

* overhead when the index does fit in RAM. We choose to sort if the

* initial index size exceeds NBuffers.

*

* NOTE: this test will need adjustment if a bucket is ever different from

* one page.

*/

if (num_buckets >= (uint32) NBuffers)

buildstate.spool = _h_spoolinit(index, num_buckets);

else

buildstate.spool = NULL;

/* prepare to build the index */

buildstate.indtuples = 0;

/*

do the heap scan

对待建立索引的表进行扫描,生成索引元组

*/

reltuples = IndexBuildHeapScan(heap, index, indexInfo, true,

hashbuildCallback, (void *) &buildstate);

if (buildstate.spool)

{

/* sort the tuples and insert them into the index */

_h_indexbuild(buildstate.spool);

_h_spooldestroy(buildstate.spool);

}

/*

* Return statistics

*/

result = (IndexBuildResult *) palloc(sizeof(IndexBuildResult));

result->heap_tuples = reltuples;

result->index_tuples = buildstate.indtuples;

PG_RETURN_POINTER(result);

}

下面将对hashbuild运行时所调用的几个子函数进行分析。

函数_hash_metapinit

该函数初始化hash表,其中包含一个元页(即图4-7中的metapage)、N个桶以及一个位图,最后返回初始化桶的数目N,其中N为2的k次方。

其执行流程如图:

uint32 _hash_metapinit(Relation rel, double num_tuples)

{

HashMetaPage metap;

HashPageOpaque pageopaque;

Buffer metabuf;

Buffer buf;

Page pg;

int32 data_width;

int32 item_width;

int32 ffactor;

double dnumbuckets;

uint32 num_buckets;

uint32 log2_num_buckets;

uint32 i;

/* safety check 安全机制检查,确定入参正确*/

if (RelationGetNumberOfBlocks(rel) != 0)

elog(ERROR, "cannot initialize non-empty hash index \"%s\"",

RelationGetRelationName(rel));

/*

* Determine the target fill factor (in tuples per bucket) for this index.

* The idea is to make the fill factor correspond to pages about as full

* as the user-settable fillfactor parameter says. We can compute it

* exactly since the index datatype (i.e. uint32 hash key) is fixed-width.

确定装填因子的大小,当Hash表的充满程度达到了fillfactor时,就增加新的桶

*/

data_width = sizeof(uint32);

item_width = MAXALIGN(sizeof(IndexTupleData)) + MAXALIGN(data_width) +

sizeof(ItemIdData); /* include the line pointer */

ffactor = RelationGetTargetPageUsage(rel, HASH_DEFAULT_FILLFACTOR) / item_width;

// desired bytes/page / bytes/item

/* keep to a sane range */

if (ffactor < 10)

ffactor = 10;

/*

* Choose the number of initial bucket pages to match the fill factor

* given the estimated number of tuples. We round up the result to the

* next power of 2, however, and always force at least 2 bucket pages. The

* upper limit is determined by considerations explained in

* _hash_expandtable().

*/

dnumbuckets = num_tuples / ffactor;

if (dnumbuckets <= 2.0)// 初始化桶的数量

num_buckets = 2;

else if (dnumbuckets >= (double) 0x40000000)

num_buckets = 0x40000000;

else

num_buckets = ((uint32) 1) << _hash_log2((uint32) dnumbuckets);

log2_num_buckets = _hash_log2(num_buckets);// <--<--

Assert(num_buckets == (((uint32) 1) << log2_num_buckets));

Assert(log2_num_buckets < HASH_MAX_SPLITPOINTS);

/*

* We initialize the metapage, the first N bucket pages, and the first

* bitmap page in sequence, using _hash_getnewbuf to cause smgrextend()

* calls to occur. This ensures that the smgr level has the right idea of

* the physical index length.

*/

metabuf = _hash_getnewbuf(rel, HASH_METAPAGE);

pg = BufferGetPage(metabuf);

pageopaque = (HashPageOpaque) PageGetSpecialPointer(pg);

pageopaque->hasho_prevblkno = InvalidBlockNumber;

pageopaque->hasho_nextblkno = InvalidBlockNumber;

pageopaque->hasho_bucket = -1;

pageopaque->hasho_flag = LH_META_PAGE;

pageopaque->hasho_page_id = HASHO_PAGE_ID;

// 将metapage读入内存缓冲区,取得metapage在内存中的相应页号

metap = HashPageGetMeta(pg);

metap->hashm_magic = HASH_MAGIC;

metap->hashm_version = HASH_VERSION;

metap->hashm_ntuples = 0;

metap->hashm_nmaps = 0;

metap->hashm_ffactor = ffactor;

metap->hashm_bsize = HashGetMaxBitmapSize(pg);

/* find largest bitmap array size that will fit in page size */

for (i = _hash_log2(metap->hashm_bsize); i > 0; --i)

{

if ((1 << i) <= metap->hashm_bsize)

break;

}

Assert(i > 0);

metap->hashm_bmsize = 1 << i;

metap->hashm_bmshift = i + BYTE_TO_BIT;

Assert((1 << BMPG_SHIFT(metap)) == (BMPG_MASK(metap) + 1));

/*

* Label the index with its primary hash support function's OID. This is

* pretty useless for normal operation (in fact, hashm_procid is not used

* anywhere), but it might be handy for forensic purposes so we keep it.

*/

metap->hashm_procid = index_getprocid(rel, 1, HASHPROC);

/*

* We initialize the index with N buckets, 0 .. N-1, occupying physical

* blocks 1 to N. The first freespace bitmap page is in block N+1. Since

* N is a power of 2, we can set the masks this way:

*/

metap->hashm_maxbucket = metap->hashm_lowmask = num_buckets - 1;

metap->hashm_highmask = (num_buckets << 1) - 1;

MemSet(metap->hashm_spares, 0, sizeof(metap->hashm_spares));

MemSet(metap->hashm_mapp, 0, sizeof(metap->hashm_mapp));

/* Set up mapping for one spare page after the initial splitpoints */

metap->hashm_spares[log2_num_buckets] = 1;// <--<--

metap->hashm_ovflpoint = log2_num_buckets;// 当前分裂次数

metap->hashm_firstfree = 0;

/*

* Release buffer lock on the metapage while we initialize buckets.

* Otherwise, we'll be in interrupt holdoff and the CHECK_FOR_INTERRUPTS

* won't accomplish anything. It's a bad idea to hold buffer locks for

* long intervals in any case, since that can block the bgwriter.

*/

_hash_chgbufaccess(rel, metabuf, HASH_WRITE, HASH_NOLOCK);

/*

* Initialize the first N buckets

初始化桶

*/

for (i = 0; i < num_buckets; i++)

{

/* Allow interrupts, in case N is huge */

CHECK_FOR_INTERRUPTS();

buf = _hash_getnewbuf(rel, BUCKET_TO_BLKNO(metap, i));

pg = BufferGetPage(buf);

pageopaque = (HashPageOpaque) PageGetSpecialPointer(pg);

pageopaque->hasho_prevblkno = InvalidBlockNumber;

pageopaque->hasho_nextblkno = InvalidBlockNumber;

pageopaque->hasho_bucket = i;

pageopaque->hasho_flag = LH_BUCKET_PAGE;

pageopaque->hasho_page_id = HASHO_PAGE_ID;

_hash_wrtbuf(rel, buf);

}

/* Now reacquire buffer lock on metapage */

_hash_chgbufaccess(rel, metabuf, HASH_NOLOCK, HASH_WRITE);

/*

* Initialize first bitmap page

初始化元页的位图信息

*/

_hash_initbitmap(rel, metap, num_buckets + 1);

/* all done 初始化完毕,写回磁盘*/

_hash_wrtbuf(rel, metabuf);

return num_buckets;

}

与其他程序相比,本程序并没有严格的锁的机制。在初始化一个Hash表时,此Hash表还并没有建成,所以不会有程序对此Hash表进行访问,当然也不会有死锁的情况发生,因此本程序并没有严格的加锁与释放锁机制。

函数IndexBuildHeapScan

该函数扫描整个基表,根据查找键对其中的每一个元组都生成一个Hash索引元组,并插入到Hash表中。在其中还将调用函数hashbuildCallback 。

函数hashbuildCallback

该函数根据表中的元组生成Hash索引元组,并插入到Hash表中,其执行流程如图4-18所示。

static void

hashbuildCallback(Relation index,

HeapTuple htup,

Datum *values,

bool *isnull,

bool tupleIsAlive,

void *state)

{

HashBuildState *buildstate = (HashBuildState *) state;

IndexTuple itup;

/* form an index tuple and point it at the heap tuple */

itup = _hash_form_tuple(index, values, isnull);

itup->t_tid = htup->t_self;// 索引元组指向堆元组

/* Hash indexes don't index nulls, see notes in hashinsert */

if (IndexTupleHasNulls(itup))

{

pfree(itup);

return;

}

/* Either spool the tuple for sorting, or just put it into the index */

if (buildstate->spool)

_h_spool(itup, buildstate->spool);

else

_hash_doinsert(index, itup);// <--<--

buildstate->indtuples += 1;

pfree(itup);

}

元组的插入

完成了元页的初始化,根据查找键生成索引元组后,即可调用函数将该索引元组插入到Hash表中,这是创建Hash索引关键的一步。

系统首先会读取元页中的相关信息,计算出该索引元组应插入的桶号

- 若该桶有足够的空间,则将元组插入到该桶中

- 否则申请溢出页并插入到溢出页中。

完成插入后,系统会再次根据元页的信息来判断是否需要增加新的桶。

函数_hash_doinsert

执行流程如图

void

_hash_doinsert(Relation rel, IndexTuple itup)

{

Buffer buf;

Buffer metabuf;

HashMetaPage metap;

BlockNumber blkno;

Page page;

HashPageOpaque pageopaque;

Size itemsz;

bool do_expand;

uint32 hashkey;

Bucket bucket;

/*

* Get the hash key for the item (it's stored in the index tuple itself).

*/

hashkey = _hash_get_indextuple_hashkey(itup);

/* compute item size too */

itemsz = IndexTupleDSize(*itup);

itemsz = MAXALIGN(itemsz); /* be safe, PageAddItem will do this but we

* need to be consistent */

/*

* Acquire shared split lock so we can compute the target bucket safely

* (see README).

*/

_hash_getlock(rel, 0, HASH_SHARE);

/* Read the metapage */

metabuf = _hash_getbuf(rel, HASH_METAPAGE, HASH_READ, LH_META_PAGE);

metap = HashPageGetMeta(BufferGetPage(metabuf));

/*

* Check whether the item can fit on a hash page at all. (Eventually, we

* ought to try to apply TOAST methods if not.) Note that at this point,

* itemsz doesn't include the ItemId.

*

* XXX this is useless code if we are only storing hash keys.

*/

if (itemsz > HashMaxItemSize((Page) metap))

ereport(ERROR,

(errcode(ERRCODE_PROGRAM_LIMIT_EXCEEDED),

errmsg("index row size %lu exceeds hash maximum %lu",

(unsigned long) itemsz,

(unsigned long) HashMaxItemSize((Page) metap)),

errhint("Values larger than a buffer page cannot be indexed.")));

/*

* Compute the target bucket number, and convert to block number.

计算出待插入的桶

*/

bucket = _hash_hashkey2bucket(hashkey,

metap->hashm_maxbucket,

metap->hashm_highmask,

metap->hashm_lowmask);

blkno = BUCKET_TO_BLKNO(metap, bucket);

/* release lock on metapage, but keep pin since we'll need it again */

_hash_chgbufaccess(rel, metabuf, HASH_READ, HASH_NOLOCK);

/*

* Acquire share lock on target bucket; then we can release split lock.

*/

_hash_getlock(rel, blkno, HASH_SHARE);

_hash_droplock(rel, 0, HASH_SHARE);

/* Fetch the primary bucket page for the bucket 进入桶页*/

buf = _hash_getbuf(rel, blkno, HASH_WRITE, LH_BUCKET_PAGE);

page = BufferGetPage(buf);

pageopaque = (HashPageOpaque) PageGetSpecialPointer(page);

Assert(pageopaque->hasho_bucket == bucket);

/* Do the insertion */

while (PageGetFreeSpace(page) < itemsz)// 空闲空间不够

{

/*

* no space on this page; check for an overflow page

*/

BlockNumber nextblkno = pageopaque->hasho_nextblkno;

if (BlockNumberIsValid(nextblkno))// 存在溢出页

{

/*

* ovfl page exists; go get it. if it doesn't have room, we'll

* find out next pass through the loop test above.

*/

_hash_relbuf(rel, buf);

buf = _hash_getbuf(rel, nextblkno, HASH_WRITE, LH_OVERFLOW_PAGE);

page = BufferGetPage(buf);

}

else

{

/*

* we're at the end of the bucket chain and we haven't found a

* page with enough room. allocate a new overflow page.

*/

/* release our write lock without modifying buffer */

_hash_chgbufaccess(rel, buf, HASH_READ, HASH_NOLOCK);

/* chain to a new overflow page 申请溢出页*/

buf = _hash_addovflpage(rel, metabuf, buf);

page = BufferGetPage(buf);

/* should fit now, given test above */

Assert(PageGetFreeSpace(page) >= itemsz);

}

pageopaque = (HashPageOpaque) PageGetSpecialPointer(page);

Assert(pageopaque->hasho_flag == LH_OVERFLOW_PAGE);

Assert(pageopaque->hasho_bucket == bucket);

}

/* found page with enough space, so add the item here 插入操作*/

(void) _hash_pgaddtup(rel, buf, itemsz, itup);

/* write and release the modified page */

_hash_wrtbuf(rel, buf);

/* We can drop the bucket lock now */

_hash_droplock(rel, blkno, HASH_SHARE);

/*

* Write-lock the metapage so we can increment the tuple count. After

* incrementing it, check to see if it's time for a split.

*/

_hash_chgbufaccess(rel, metabuf, HASH_NOLOCK, HASH_WRITE);

metap->hashm_ntuples += 1;

/* Make sure this stays in sync with _hash_expandtable() */

do_expand = metap->hashm_ntuples >

(double) metap->hashm_ffactor * (metap->hashm_maxbucket + 1);

/* Write out the metapage and drop lock, but keep pin */

_hash_chgbufaccess(rel, metabuf, HASH_WRITE, HASH_NOLOCK);

/* Attempt to split if a split is needed */

if (do_expand)

_hash_expandtable(rel, metabuf);

/* Finally drop our pin on the metapage */

_hash_dropbuf(rel, metabuf);

}

因为插入操作并不会改变桶中已经存在的元组的位置,所以插入操作可以和扫描操作并发进行。

溢出页的分配与回收

分配:在索引元组的插入过程中,若待插入的桶中没有空间,就需要创建一个溢出页,并把它链接到该桶上,然后将索引元组插入到分配的溢出页中。回收:当对Hash表进行扩展之后,原来存放在溢出页中的索引元组可能就会移到新增加的桶中,这时就需要对溢出页进行回收。

下面将针对溢出页的分配和回收过程,讲解其中重要的函数和流程。

函数_hash_getovflpage

该函数获取一个溢出页,并返回它在磁盘中的块号。

基本执行过程如下:寻找溢出页时,首先通过位图查找可用的溢出页,若找到则将其分配,并修改位图中相应的位﹔若位图中没有可用的溢出页,即分配给该Hash表的溢出页都在使用,则从磁盘上申请一个块作为溢出页,并且在位图中增加该溢出页的记录。

static Buffer

_hash_getovflpage(Relation rel, Buffer metabuf)

{

HashMetaPage metap;

Buffer mapbuf = 0;

Buffer newbuf;

BlockNumber blkno;

uint32 orig_firstfree;

uint32 splitnum;

uint32 *freep = NULL;

uint32 max_ovflpg;

uint32 bit;

uint32 first_page;

uint32 last_bit;

uint32 last_page;

uint32 i,

j;

/* Get exclusive lock on the meta page */

_hash_chgbufaccess(rel, metabuf, HASH_NOLOCK, HASH_WRITE);

_hash_checkpage(rel, metabuf, LH_META_PAGE);

metap = HashPageGetMeta(BufferGetPage(metabuf));

/* start search at hashm_firstfree */

orig_firstfree = metap->hashm_firstfree;// 当前可能空闲的最小溢出页号

first_page = orig_firstfree >> BMPG_SHIFT(metap);

bit = orig_firstfree & BMPG_MASK(metap);

i = first_page;

j = bit / BITS_PER_MAP;

bit &= ~(BITS_PER_MAP - 1);

/* outer loop iterates once per bitmap page */

for (;;)

{

BlockNumber mapblkno;

Page mappage;

uint32 last_inpage;

/* want to end search with the last existing overflow page

获取分配给Hash表的总溢出页数、最后一个位图的位置以及标记第一个空闲的

溢出页的位图

*/

splitnum = metap->hashm_ovflpoint;

max_ovflpg = metap->hashm_spares[splitnum] - 1;

last_page = max_ovflpg >> BMPG_SHIFT(metap);

last_bit = max_ovflpg & BMPG_MASK(metap);

if (i > last_page)

break;

Assert(i < metap->hashm_nmaps);

mapblkno = metap->hashm_mapp[i];

if (i == last_page)

last_inpage = last_bit;

else

last_inpage = BMPGSZ_BIT(metap) - 1;

/* Release exclusive lock on metapage while reading bitmap page */

_hash_chgbufaccess(rel, metabuf, HASH_READ, HASH_NOLOCK);

// 给溢出页加写锁

mapbuf = _hash_getbuf(rel, mapblkno, HASH_WRITE, LH_BITMAP_PAGE);

mappage = BufferGetPage(mapbuf);

freep = HashPageGetBitmap(mappage);

// 在位图中查找空闲的溢出页

for (; bit <= last_inpage; j++, bit += BITS_PER_MAP)

{

if (freep[j] != ALL_SET)// ALL_SET为0

goto found;

}

/* No free space here, try to advance to next map page

找不到,释放buffer的写锁*/

_hash_relbuf(rel, mapbuf);

i++;

j = 0; /* scan from start of next map page */

bit = 0;

/* Reacquire exclusive lock on the meta page */

_hash_chgbufaccess(rel, metabuf, HASH_NOLOCK, HASH_WRITE);

}

/*

* No free pages --- have to extend the relation to add an overflow page.

* First, check to see if we have to add a new bitmap page too.

申请一个溢出页,并在位图中增加该溢出页的记录

*/

if (last_bit == (uint32) (BMPGSZ_BIT(metap) - 1))

{

/*

* We create the new bitmap page with all pages marked "in use".

* Actually two pages in the new bitmap's range will exist

* immediately: the bitmap page itself, and the following page which

* is the one we return to the caller. Both of these are correctly

* marked "in use". Subsequent pages do not exist yet, but it is

* convenient to pre-mark them as "in use" too.

*/

bit = metap->hashm_spares[splitnum];

_hash_initbitmap(rel, metap, bitno_to_blkno(metap, bit));

metap->hashm_spares[splitnum]++;

}

else

{

/*

* Nothing to do here; since the page will be past the last used page,

* we know its bitmap bit was preinitialized to "in use".

*/

}

/* Calculate address of the new overflow page */

bit = metap->hashm_spares[splitnum];

blkno = bitno_to_blkno(metap, bit);

/*

* Fetch the page with _hash_getnewbuf to ensure smgr's idea of the

* relation length stays in sync with ours. XXX It's annoying to do this

* with metapage write lock held; would be better to use a lock that

* doesn't block incoming searches.

*/

newbuf = _hash_getnewbuf(rel, blkno);

metap->hashm_spares[splitnum]++;

/*

* Adjust hashm_firstfree to avoid redundant searches. But don't risk

* changing it if someone moved it while we were searching bitmap pages.

*/

if (metap->hashm_firstfree == orig_firstfree)

metap->hashm_firstfree = bit + 1;

/* Write updated metapage and release lock, but not pin */

_hash_chgbufaccess(rel, metabuf, HASH_WRITE, HASH_NOLOCK);

return newbuf;

found:// 找到位图标记为0的溢出页,将其标记修改为1

/* convert bit to bit number within page */

bit += _hash_firstfreebit(freep[j]);

/* mark page "in use" in the bitmap */

SETBIT(freep, bit);

_hash_wrtbuf(rel, mapbuf);

/* Reacquire exclusive lock on the meta page */

_hash_chgbufaccess(rel, metabuf, HASH_NOLOCK, HASH_WRITE);

/* convert bit to absolute bit number */

bit += (i << BMPG_SHIFT(metap));

/* Calculate address of the recycled overflow page */

blkno = bitno_to_blkno(metap, bit);

/*

* Adjust hashm_firstfree to avoid redundant searches. But don't risk

* changing it if someone moved it while we were searching bitmap pages.

修改metapage中相关值

*/

if (metap->hashm_firstfree == orig_firstfree)

{

metap->hashm_firstfree = bit + 1;

/* Write updated metapage and release lock, but not pin */

_hash_chgbufaccess(rel, metabuf, HASH_WRITE, HASH_NOLOCK);

}

else

{

/* We didn't change the metapage, so no need to write */

_hash_chgbufaccess(rel, metabuf, HASH_READ, HASH_NOLOCK);

}

/* Fetch, init, and return the recycled page */

return _hash_getinitbuf(rel, blkno);

}

LockBuffer

/*

* Acquire or release the content_lock for the buffer.

*/

void

LockBuffer(Buffer buffer, int mode)

{

volatile BufferDesc *buf;

Assert(BufferIsValid(buffer));

if (BufferIsLocal(buffer))

return; /* local buffers need no lock */

buf = &(BufferDescriptors[buffer - 1]);

if (mode == BUFFER_LOCK_UNLOCK)

LWLockRelease(buf->content_lock);

else if (mode == BUFFER_LOCK_SHARE)

LWLockAcquire(buf->content_lock, LW_SHARED);

else if (mode == BUFFER_LOCK_EXCLUSIVE)

LWLockAcquire(buf->content_lock, LW_EXCLUSIVE);

else

elog(ERROR, "unrecognized buffer lock mode: %d", mode);

}

函数_hash_freeovflpage

该函数将断开桶链,释放某个溢出页,并在位图中标识其为可用。

参数ovflbuf为此溢出页所在的缓冲区号,进入函数前该缓冲区置有写锁,退出函数时释放该写锁。函数的返回值是桶链中此溢出页的下一页的块号,若下一页不存在,则返回InvalidBlockNumber。此外,在整个函数的执行过程中,调用者始终拥有此溢出页所在的桶上的排他lmgr锁。

BlockNumber

_hash_freeovflpage(Relation rel, Buffer ovflbuf,

BufferAccessStrategy bstrategy)

{

HashMetaPage metap;

Buffer metabuf;

Buffer mapbuf;

BlockNumber ovflblkno;

BlockNumber prevblkno;

BlockNumber blkno;

BlockNumber nextblkno;

HashPageOpaque ovflopaque;

Page ovflpage;

Page mappage;

uint32 *freep;

uint32 ovflbitno;

int32 bitmappage,

bitmapbit;

Bucket bucket;

/* Get information from the doomed page */

_hash_checkpage(rel, ovflbuf, LH_OVERFLOW_PAGE);

ovflblkno = BufferGetBlockNumber(ovflbuf);

ovflpage = BufferGetPage(ovflbuf);

ovflopaque = (HashPageOpaque) PageGetSpecialPointer(ovflpage);

nextblkno = ovflopaque->hasho_nextblkno;

prevblkno = ovflopaque->hasho_prevblkno;

bucket = ovflopaque->hasho_bucket;

/* 将该溢出页的所有位 置0

* Zero the page for debugging's sake; then write and release it. (Note:

* if we failed to zero the page here, we'd have problems with the Assert

* in _hash_pageinit() when the page is reused.)

*/

MemSet(ovflpage, 0, BufferGetPageSize(ovflbuf));

_hash_wrtbuf(rel, ovflbuf);

/* 将溢出页从桶链中断开

* Fix up the bucket chain. this is a doubly-linked list, so we must fix

* up the bucket chain members behind and ahead of the overflow page being

* deleted. No concurrency issues since we hold exclusive lock on the

* entire bucket.

*/

if (BlockNumberIsValid(prevblkno))

{

Buffer prevbuf = _hash_getbuf_with_strategy(rel,

prevblkno,

HASH_WRITE,

LH_BUCKET_PAGE | LH_OVERFLOW_PAGE,

bstrategy);

Page prevpage = BufferGetPage(prevbuf);

HashPageOpaque prevopaque = (HashPageOpaque) PageGetSpecialPointer(prevpage);

Assert(prevopaque->hasho_bucket == bucket);

prevopaque->hasho_nextblkno = nextblkno;

_hash_wrtbuf(rel, prevbuf);

}

if (BlockNumberIsValid(nextblkno))

{

Buffer nextbuf = _hash_getbuf_with_strategy(rel,

nextblkno,

HASH_WRITE,

LH_OVERFLOW_PAGE,

bstrategy);

Page nextpage = BufferGetPage(nextbuf);

HashPageOpaque nextopaque = (HashPageOpaque) PageGetSpecialPointer(nextpage);

Assert(nextopaque->hasho_bucket == bucket);

nextopaque->hasho_prevblkno = prevblkno;

_hash_wrtbuf(rel, nextbuf);

}

/* Note: bstrategy is intentionally not used for metapage and bitmap */

// 下面是修改位图上的信息,通过元页找到该对应位图后,将此溢出页对应的bit置0

/* Read the metapage so we can determine which bitmap page to use */

metabuf = _hash_getbuf(rel, HASH_METAPAGE, HASH_READ, LH_META_PAGE);

metap = HashPageGetMeta(BufferGetPage(metabuf));

/* Identify which bit to set */

ovflbitno = blkno_to_bitno(metap, ovflblkno);

bitmappage = ovflbitno >> BMPG_SHIFT(metap);

bitmapbit = ovflbitno & BMPG_MASK(metap);

if (bitmappage >= metap->hashm_nmaps)

elog(ERROR, "invalid overflow bit number %u", ovflbitno);

blkno = metap->hashm_mapp[bitmappage];

/* Release metapage lock while we access the bitmap page */

_hash_chgbufaccess(rel, metabuf, HASH_READ, HASH_NOLOCK);

/* Clear the bitmap bit to indicate that this overflow page is free */

mapbuf = _hash_getbuf(rel, blkno, HASH_WRITE, LH_BITMAP_PAGE);

mappage = BufferGetPage(mapbuf);

freep = HashPageGetBitmap(mappage);

Assert(ISSET(freep, bitmapbit));

CLRBIT(freep, bitmapbit);

_hash_wrtbuf(rel, mapbuf);

/* Get write-lock on metapage to update firstfree */

_hash_chgbufaccess(rel, metabuf, HASH_NOLOCK, HASH_WRITE);

/* if this is now the first free page, update hashm_firstfree

判断是否需要修改元页的hashm_firstfree字段*/

if (ovflbitno < metap->hashm_firstfree)

{

metap->hashm_firstfree = ovflbitno;

_hash_wrtbuf(rel, metabuf);

}

else

{

/* no need to change metapage */

_hash_relbuf(rel, metabuf);

}

return nextblkno;

}

Hash表的扩展

每次插入后,都用当前的记录总数r和当前的桶数目n相除计算r/n,若比率太大,就对Hash表进行扩展,即增加一个桶到Hash表中,新增加的桶和发生插入的桶之间没有任何必然的联系。

如果新加入的桶号的二进制表示为1a2a3……ai,那么就试图分裂桶号为0a2a3……ai的桶中的元组。

函数_hash_expandtable

该函数试图增加一个新桶来扩展Hash表。

其执行流程如图所示。

-

在修改完metapage的信息之后,进行桶的分裂之前,程序释放了metapage 上的“lmgr lock”,这使得桶的分裂操作可以和其他操作并发执行,特别是多个对桶的分裂操作可以并发执行。

函数_hash_splitbucket:该函数将桶号为obucket的旧桶中的一部分元组分裂到桶号为nbucket的新桶里。

/* Write out the metapage and drop lock, but keep pin */ _hash_chgbufaccess(rel, metabuf, HASH_WRITE, HASH_NOLOCK); /* Release split lock; okay for other splits to occur now */ _hash_droplock(rel, 0, HASH_EXCLUSIVE); /* Relocate records to the new bucket */ _hash_splitbucket(rel, metabuf, old_bucket, new_bucket, start_oblkno, start_nblkno, maxbucket, highmask, lowmask); /* Release bucket locks, allowing others to access them */ _hash_droplock(rel, start_oblkno, HASH_EXCLUSIVE);// 通过_hash_try_getlock加锁 _hash_droplock(rel, start_nblkno, HASH_EXCLUSIVE);

/* * Fetch the item's hash key (conveniently stored in the item) and * determine which bucket it now belongs in. 计算原itup现在应该分配给新的桶还是旧桶 */ itup = (IndexTuple) PageGetItem(opage, PageGetItemId(opage, ooffnum)); bucket = _hash_hashkey2bucket(_hash_get_indextuple_hashkey(itup), maxbucket, highmask, lowmask); -

如果未能取得分裂桶上的“Imgr lock”,则放弃增加新桶来扩展Hash表的操作。因为如果继续等待分裂桶上的“Imgr lock”,而某个拥有分裂桶上的“lmgr lock”的进程碰巧也在等待本进程拥有的某个锁,这将会导致死锁。

函数_hash_squeezebucket

该函数压缩桶中的元组,使桶中的元组更加紧凑。

其中要注意:

-

在压缩桶中元组时,从桶的最后一页开始顺序向前进行,对扫描到的每一个元组都把它移动到桶的前面。具体来说,有两类页面,分别是

rpage和wpage。开始时,rpage是桶链的最后一页,wpage是桶链的第一页,从rpage 中读取每一个元组,并一次把它们写到wpage中,并将它们在rpage中的记录删除。

-

若rpage中的所有元组都已扫描完毕,则令rpage的前一页为 rpage,并对其继续扫描;

-

若wpage中已经没有足够的空间进行插入,则令wpage的下一页为wpage,并将元组插入其中。

这样,rpage从桶链的最后一页开始向前移,wpage从桶链的第一页开始向后移,直到rpage与 wpage是同一个桶页为止,程序结束。

-

-

当把位于桶链里后面的元组都移到前面后,可能会出现一些不含任何元组的溢出页,把这些溢出页回收,并在位图中标识其可用,这是调用_hash_freeovflpage函数完成回收的。

-

程序结束时,桶链上的所有页都不空,除非一开始整个桶链就是空的。

-

程序的调用者必须持有整个桶链的“lmgr lock”,以防止某些并发执行的进程访问本桶链,造成程序出错。

wblkno = bucket_blkno; wbuf = _hash_getbuf_with_strategy(rel,wblkno,HASH_WRITE, LH_BUCKET_PAGE, bstrategy);

具体实现流程:

如果rpage的前一页就是wpage,那么wpage 上的写锁将会阻止函数_hash_freeovflpage释放 rpage所在的溢出页,因为当对wpage加写锁的时候,进程是不能修改wpage所在页的指向桶链中下一页所在块的指针的(详见对函数_hash _freeovflpage的代码分析),所以在这里必须分情况讨论。