Preface

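For machine-learning beginners, Kaggle is a great platform for practice and learning. Its PLAYGROUND section is a series of "playground" competitions: they provide fun, approachable datasets for practising machine-learning skills, and a new competition runs every month. This makes them a great learning opportunity for newcomers, and many experienced competitors share their code. This issue is the February 2024 competition, "Multi-Class Prediction of Obesity Risk". Here I share the details of my work on it.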

Problem description

Dataset description:

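| Column | Meaning | Details |
|---|---|---|
| ‘id’ | id | Unique identifier of each person |
| ‘Gender’ | Gender | The person's gender |
| ‘Age’ | Age | Between 14 and 61 years |
| ‘Height’ | Height | In metres, between 1.45 m and 1.98 m |
| ‘Weight’ | Weight | In kg, between 39 and 165 |
| ‘family_history_with_overweight’ | Family history of overweight | Yes/no |
| ‘FAVC’ | Frequent consumption of high-calorie food | Yes/no question; presumably "Do you eat high-calorie food?" |
| ‘FCVC’ | Frequency of vegetable consumption | A float between 1 and 3 in this synthetic data |
| ‘NCP’ | Number of main meals | A float between 1 and 4. It should be 1, 2, 3 or 4, but because the data is synthetic the values are floats |
| ‘CAEC’ | Consumption of food between meals | 4 values: Sometimes, Frequently, no (never) and Always |
| ‘SMOKE’ | Smoking | Yes/no question, presumably "Do you smoke?" |
| ‘CH2O’ | Daily water intake | Between 1 and 3; again generated data, so the values are floats |
| ‘SCC’ | Calorie consumption monitoring | Yes/no |
| ‘FAF’ | Physical activity frequency | Between 0 and 3: 0 means no physical activity, 3 means high-intensity exercise |
| ‘TUE’ | Time spent using technology devices | Between 0 and 2 |
| ‘CALC’ | Alcohol consumption | 3 values: Sometimes, no (never) and Frequently |
| ‘MTRANS’ | Mode of transportation | 5 values: public transportation, automobile, walking, motorbike and bike |
| ‘NObeyesdad’ | Target | Our target, with 7 classes. In this competition we must submit the class name rather than the probabilities that most competitions ask for |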

The target classes correspond to the following BMI ranges (BMI = weight in kg / height in m squared):

- Insufficient_Weight: below 18.5

- Normal_Weight: 18.5 to 24.9

- Overweight_Level_I, Overweight_Level_II: 25.0 to 29.9

- Obesity_Type_I: 30.0 to 34.9

- Obesity_Type_II: 35.0 to 39.9

- Obesity_Type_III: 40 and above
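Since the classes are essentially BMI bands, BMI is a natural derived feature (the `extract_features` function later in this article adds it). The sketch below computes BMI and maps it onto the coarse bands listed above. `bmi` and `bmi_band` are hypothetical helper names. The two Overweight levels are merged because the list does not say where they split, and since the competition data is synthetic, the real labels may not always agree with this mapping.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight (kg) divided by height (m) squared."""
    return weight_kg / height_m ** 2


def bmi_band(value: float) -> str:
    """Map a BMI value to the coarse bands above (hypothetical helper).

    Overweight_Level_I and Overweight_Level_II share the 25.0-29.9 range,
    so they are merged into a single "Overweight" band here.
    """
    if value < 18.5:
        return "Insufficient_Weight"
    if value < 25.0:
        return "Normal_Weight"
    if value < 30.0:
        return "Overweight"
    if value < 35.0:
        return "Obesity_Type_I"
    if value < 40.0:
        return "Obesity_Type_II"
    return "Obesity_Type_III"


# Example: 70 kg at 1.75 m
print(round(bmi(70, 1.75), 1), bmi_band(bmi(70, 1.75)))
```

Using strict `<` comparisons closes the small gaps between the published ranges (e.g. 24.95 falls into Normal_Weight).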

Loading libraries

(omitted)

Loading data

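```python
import os

import pandas as pd

# Load all data; FILE_PATH is defined in the omitted setup code
train = pd.read_csv(os.path.join(FILE_PATH, "train.csv"))
test = pd.read_csv(os.path.join(FILE_PATH, "test.csv"))
# train_org (the original, non-synthetic obesity dataset) is concatenated with train later.
# The file name below is a placeholder: point it at your own copy of the original data.
train_org = pd.read_csv(os.path.join(FILE_PATH, "ObesityDataSet.csv"))
```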

Exploring the data

Train Data

Total number of rows: 20758

Total number of columns: 18

Test Data

Total number of rows: 13840

Total number of columns: 17

- Summary statistics of the training data (FHWO stands for family_history_with_overweight):

| Column Name | count | dtype | nunique | %nunique | %null | min | max |
|---|---|---|---|---|---|---|---|
| id | 20758 | int64 | 20758 | 100.0 | 0.0 | 0 | 20757 |
| Gender | 20758 | object | 2 | 0.01 | 0.0 | Female | Male |
| Age | 20758 | float64 | 1703 | 8.204 | 0.0 | 14.0 | 61.0 |
| Height | 20758 | float64 | 1833 | 8.83 | 0.0 | 1.45 | 1.975663 |
| Weight | 20758 | float64 | 1979 | 9.534 | 0.0 | 39.0 | 165.057269 |
| FHWO | 20758 | object | 2 | 0.01 | 0.0 | no | yes |
| FAVC | 20758 | object | 2 | 0.01 | 0.0 | no | yes |
| FCVC | 20758 | float64 | 934 | 4.499 | 0.0 | 1.0 | 3.0 |
| NCP | 20758 | float64 | 689 | 3.319 | 0.0 | 1.0 | 4.0 |
| CAEC | 20758 | object | 4 | 0.019 | 0.0 | Always | no |
| SMOKE | 20758 | object | 2 | 0.01 | 0.0 | no | yes |
| CH2O | 20758 | float64 | 1506 | 7.255 | 0.0 | 1.0 | 3.0 |
| SCC | 20758 | object | 2 | 0.01 | 0.0 | no | yes |
| FAF | 20758 | float64 | 1360 | 6.552 | 0.0 | 0.0 | 3.0 |
| TUE | 20758 | float64 | 1297 | 6.248 | 0.0 | 0.0 | 2.0 |
| CALC | 20758 | object | 3 | 0.014 | 0.0 | Frequently | no |
| MTRANS | 20758 | object | 5 | 0.024 | 0.0 | Automobile | Walking |
| NObeyesdad | 20758 | object | 7 | 0.034 | 0.0 | Insufficient_Weight | Overweight_Level_II |

- Target classes broken down by gender

| NObeyesdad | Gender | gender_count | %gender_count | target_class_count | %target_class_count |
|---|---|---|---|---|---|
| Insufficient_Weight | Female | 1621 | 0.64 | 2523 | 0.12 |
| | Male | 902 | 0.36 | 2523 | 0.12 |
| Normal_Weight | Female | 1660 | 0.54 | 3082 | 0.15 |
| | Male | 1422 | 0.46 | 3082 | 0.15 |
| Obesity_Type_I | Female | 1267 | 0.44 | 2910 | 0.14 |
| | Male | 1643 | 0.56 | 2910 | 0.14 |
| Obesity_Type_II | Female | 8 | 0.00 | 3248 | 0.16 |
| | Male | 3240 | 1.00 | 3248 | 0.16 |
| Obesity_Type_III | Female | 4041 | 1.00 | 4046 | 0.19 |
| | Male | 5 | 0.00 | 4046 | 0.19 |
| Overweight_Level_I | Female | 1070 | 0.44 | 2427 | 0.12 |
| | Male | 1357 | 0.56 | 2427 | 0.12 |
| Overweight_Level_II | Female | 755 | 0.30 | 2522 | 0.12 |
| | Male | 1767 | 0.70 | 2522 | 0.12 |

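From the table above we can see that:

- Obesity_Type_II is almost entirely male (3240 of 3248 people), and Obesity_Type_III is almost entirely female (4041 of 4046);

- Overweight_Level_II is 70% male, and Insufficient_Weight is more than 60% female.

So gender is an important feature for predicting obesity.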
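The article does not show the code behind the table above. One way to compute columns like these with pandas is sketched below; the toy frame is made up and only stands in for `train[["NObeyesdad", "Gender"]]`.

```python
import pandas as pd

# Made-up rows standing in for train[["NObeyesdad", "Gender"]]
df = pd.DataFrame({
    "NObeyesdad": ["Obesity_Type_II"] * 4 + ["Obesity_Type_III"] * 4,
    "Gender": ["Male", "Male", "Male", "Female", "Female", "Female", "Female", "Male"],
})

# Number of rows for each (class, gender) pair
summary = df.groupby(["NObeyesdad", "Gender"]).size().to_frame("gender_count")
# Size of each class, repeated on every row of that class
summary["target_class_count"] = summary.groupby(level=0)["gender_count"].transform("sum")
# Share of each gender within its class, and share of each class in the whole dataset
summary["%gender_count"] = summary["gender_count"] / summary["target_class_count"]
summary["%target_class_count"] = summary["target_class_count"] / len(df)
print(summary.round(2))
```

`groupby(level=0)` groups on the first index level (the class), so `transform("sum")` broadcasts the class total back onto both gender rows.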

Data visualization

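In this section we will look at:

- individual numerical plots

- individual categorical plots

- a numerical correlation plot

- combined numerical plots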

Target distribution and gender

```python
fig, axs = plt.subplots(1, 2, figsize=(12, 5))
plt.suptitle("Target Distribution")
# Left: count of each target class, split by gender
sns.histplot(binwidth=0.5, x=TARGET, data=train, hue="Gender", palette="dark", ax=axs[0], discrete=True)
axs[0].tick_params(axis="x", rotation=60)
# Right: share of each target class, every slice pulled out slightly
axs[1].pie(
    train[TARGET].value_counts(),
    shadow=True,
    explode=[.1 for i in range(train[TARGET].nunique())],
    labels=train[TARGET].value_counts().index,
    autopct="%1.f%%",
)
plt.tight_layout()
plt.show()
```

Individual numerical plots

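```python
# One violin plot per numerical column, by target class and gender
fig, axs = plt.subplots(len(raw_num_cols), 1, figsize=(12, len(raw_num_cols) * 2.5), sharex=False)
for i, col in enumerate(raw_num_cols):
    sns.violinplot(x=TARGET, y=col, hue="Gender", data=train, ax=axs[i], split=False)
    # full_form (defined in the omitted setup code) holds readable column names
    if col in full_form.keys():
        axs[i].set_ylabel(full_form[col])
plt.tight_layout()
plt.show()
```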

Individual categorical plots

```python
# Left column: overall counts per category; right column: counts per category split by target class
_, axs = plt.subplots(len(raw_cat_cols) - 1, 2, figsize=(12, len(raw_cat_cols) * 3), width_ratios=[1, 4])
for i, col in enumerate(raw_cat_cols[1:]):
    sns.countplot(y=col, data=train, palette="bright", ax=axs[i, 0])
    sns.countplot(x=col, data=train, hue=TARGET, palette="bright", ax=axs[i, 1])
    if col in full_form.keys():
        axs[i, 0].set_ylabel(full_form[col])
plt.tight_layout()
plt.show()
```

Numerical correlation plot

```python
# Pearson correlation between the numerical columns
tmp = train[raw_num_cols].corr("pearson")
sns.heatmap(tmp, annot=True, cmap="crest")
```

Combined numerical plots

- Height vs. weight

```python
sns.jointplot(data=train, x="Height", y="Weight", hue=TARGET, height=6)
```

- Age vs. height

Principal component analysis (PCA) and KMeans

```python
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA

# PCA: project the numerical columns onto their top 2 principal components.
# The columns are not scaled first, so high-variance columns such as Weight dominate.
pca = PCA(n_components=2)
pca_top_2 = pca.fit_transform(train[raw_num_cols])
tmp = pd.DataFrame(data=pca_top_2, columns=["pca_1", "pca_2"])
tmp["TARGET"] = train[TARGET]

fig, axs = plt.subplots(2, 1, figsize=(12, 6))
sns.scatterplot(data=tmp, y="pca_1", x="pca_2", hue="TARGET", ax=axs[0])
axs[0].set_title("Top 2 Principal Components")

# KMeans: 7 clusters (one per target class) fitted on the 2 components
kmeans = KMeans(7, random_state=RANDOM_SEED)
kmeans.fit(tmp[["pca_1", "pca_2"]])
# Colour points by cluster label via seaborn's hue/palette rather than matplotlib's c/cmap
sns.scatterplot(y=tmp["pca_1"], x=tmp["pca_2"], hue=kmeans.labels_, palette="viridis",
                marker="o", edgecolor="k", s=50, alpha=0.8, ax=axs[1])
axs[1].set_title("KMeans Clustering on First 2 Principal Components")
plt.tight_layout()
plt.show()
```

Feature engineering and preprocessing

```python
import numpy as np
from sklearn.preprocessing import FunctionTransformer

# age_rounder and height_rounder multiply the values by 100 and cast them to integers
# (truncating the rest); this sometimes improves the model's CV score.
# extract_features combines existing columns into new features (here: BMI).

def age_rounder(x):
    x_copy = x.copy()
    x_copy["Age"] = (x_copy["Age"] * 100).astype(np.uint16)  # at most 61 * 100, fits in uint16
    return x_copy

def height_rounder(x):
    x_copy = x.copy()
    x_copy["Height"] = (x_copy["Height"] * 100).astype(np.uint16)  # metres -> centimetres
    return x_copy

def extract_features(x):
    x_copy = x.copy()
    x_copy["BMI"] = x_copy["Weight"] / x_copy["Height"] ** 2
    # x_copy['PseudoTarget'] = pd.cut(x_copy['BMI'],bins = [0,18.4,24.9,29,34.9,39.9,100],labels = [0,1,2,3,4,5],)
    return x_copy

def col_rounder(x):
    x_copy = x.copy()
    cols_to_round = ["FCVC", "NCP", "CH2O", "FAF", "TUE"]
    for col in cols_to_round:
        # these answers became floats in the synthetic data; round them back to integers
        x_copy[col] = x_copy[col].round().astype(int)
    return x_copy

# Wrap the functions so they can be used as pipeline steps
AgeRounder = FunctionTransformer(age_rounder)
HeightRounder = FunctionTransformer(height_rounder)
ExtractFeatures = FunctionTransformer(extract_features)
ColumnRounder = FunctionTransformer(col_rounder)
```
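To see what a `FunctionTransformer` step does inside a pipeline, here is a small self-contained check on a made-up two-row frame. It redefines a BMI function in the same spirit as `extract_features` above. `FunctionTransformer` learns nothing during `fit`; it just calls the wrapped function, and with the default settings a DataFrame goes in and a DataFrame comes out.

```python
import pandas as pd
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import FunctionTransformer


def extract_features(x: pd.DataFrame) -> pd.DataFrame:
    # Add a BMI column without modifying the caller's DataFrame
    x_copy = x.copy()
    x_copy["BMI"] = x_copy["Weight"] / x_copy["Height"] ** 2
    return x_copy


# Made-up rows: 64 kg at 1.60 m and 97.2 kg at 1.80 m
toy = pd.DataFrame({"Height": [1.60, 1.80], "Weight": [64.0, 97.2]})
pipe = make_pipeline(FunctionTransformer(extract_features))
out = pipe.fit_transform(toy)
print(out)
```

Because the function works on a copy, the input frame keeps its original columns, so the same raw `train` can be passed to several different pipelines.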

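```python
from sklearn.base import BaseEstimator, TransformerMixin

# FeatureDropper removes the given columns. This matters when we want
# to pass a different feature set to each model.
class FeatureDropper(BaseEstimator, TransformerMixin):
    def __init__(self, cols):
        self.cols = cols

    def fit(self, x, y=None):
        # nothing to learn; y=None follows the scikit-learn transformer convention
        return self

    def transform(self, x):
        return x.drop(self.cols, axis=1)
```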

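Next we define `cross_val_model`, which is used to train and validate every model in this article.

`cross_val_model` returns three things: `val_scores`, `valid_predictions` and `test_predictions`.

- val_scores: the accuracy on the validation fold, one score per fold.

- valid_predictions: an array holding the model's out-of-fold predicted probabilities on the validation folds.

- test_predictions: the predicted probabilities on the test set, averaged over the number of splits.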
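The idea behind these three outputs can be shown on synthetic data in a few lines. In this sketch `LogisticRegression` and `make_classification` stand in for the article's pipelines and data, purely to keep it small and fast: every training row is predicted exactly once by the fold model that did not see it, while every fold model predicts the whole test set and the results are averaged.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold

# Synthetic 3-class data (stand-in for the competition data)
X, y = make_classification(n_samples=300, n_features=6, n_informative=4,
                           n_classes=3, random_state=0)
X_train, y_train, X_test = X[:240], y[:240], X[240:]

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
valid_predictions = np.zeros((len(X_train), 3))  # out-of-fold probabilities
test_predictions = np.zeros((len(X_test), 3))    # fold-averaged test probabilities
val_scores = []

for train_ind, valid_ind in cv.split(X_train, y_train):
    model = LogisticRegression(max_iter=1000)
    model.fit(X_train[train_ind], y_train[train_ind])
    # rows of this validation fold are predicted by a model that never saw them
    valid_predictions[valid_ind] = model.predict_proba(X_train[valid_ind])
    val_scores.append(model.score(X_train[valid_ind], y_train[valid_ind]))
    # each fold model contributes 1/n_splits of the final test probabilities
    test_predictions += model.predict_proba(X_test) / cv.get_n_splits()

print(np.mean(val_scores))
```

The out-of-fold matrix is what later makes it possible to choose blend weights honestly, since none of its rows were predicted by a model trained on them.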

```python
import numpy as np
from sklearn.base import clone
from sklearn.metrics import accuracy_score
from sklearn.model_selection import StratifiedKFold

# Cross-validate the models with stratified K-fold.
# Encode the target classes as integers
target_mapping = {
    "Insufficient_Weight": 0,
    "Normal_Weight": 1,
    "Overweight_Level_I": 2,
    "Overweight_Level_II": 3,
    "Obesity_Type_I": 4,
    "Obesity_Type_II": 5,
    "Obesity_Type_III": 6,
}

# Stratified K-fold cross-validation (n_splits comes from the omitted setup code; the runs below use 10)
skf = StratifiedKFold(n_splits=n_splits)

def cross_val_model(estimators, cv=skf, verbose=True):
    '''
    estimators : pipeline consisting of preprocessing, encoder & model
    cv : cross-validation splitter (default: StratifiedKFold)
    verbose : print train/valid scores (yes/no)
    '''
    X = train.copy()
    y = X.pop(TARGET)
    y = y.map(target_mapping)
    test_predictions = np.zeros((len(test), 7))
    valid_predictions = np.zeros((len(X), 7))
    val_scores, train_scores = [], []
    # use the splitter that was passed in, not the global skf
    for fold, (train_ind, valid_ind) in enumerate(cv.split(X, y)):
        model = clone(estimators)
        # define train set
        X_train = X.iloc[train_ind]
        y_train = y.iloc[train_ind]
        # define valid set
        X_valid = X.iloc[valid_ind]
        y_valid = y.iloc[valid_ind]
        model.fit(X_train, y_train)
        if verbose:
            print("-" * 100)
            print(f"Fold: {fold}")
            print(f"Train Accuracy Score:-{accuracy_score(y_true=y_train, y_pred=model.predict(X_train))}")
            print(f"Valid Accuracy Score:-{accuracy_score(y_true=y_valid, y_pred=model.predict(X_valid))}")
            print("-" * 100)
        # test probabilities averaged over the folds
        test_predictions += model.predict_proba(test) / cv.get_n_splits()
        # out-of-fold probabilities for this fold's validation rows
        valid_predictions[valid_ind] = model.predict_proba(X_valid)
        val_scores.append(accuracy_score(y_true=y_valid, y_pred=model.predict(X_valid)))
    if verbose:
        print(f"Average Mean Accuracy Score:- {np.array(val_scores).mean()}")
    return val_scores, valid_predictions, test_predictions
```

```python
# Merge the original data with the generated (competition) data
train.drop(["id"], axis=1, inplace=True)
test_ids = test["id"]
test.drop(["id"], axis=1, inplace=True)
train = pd.concat([train, train_org], axis=0)
train = train.drop_duplicates()
train.reset_index(drop=True, inplace=True)

# Empty DataFrames for the scores, the out-of-fold predictions and the test predictions
score_list, oof_list, predict_list = pd.DataFrame(), pd.DataFrame(), pd.DataFrame()
```

Modeling

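In this competition, rather than focusing on a single model, it pays to combine the predictions of several strong models. In this article we train four different types of model and combine their predictions to produce the final submission: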

- Random forest model

- LGBM (LightGBM) model

- XGB (XGBoost) model

- CatBoost model

Random forest model

```python
# Define the random forest pipeline: BMI feature, M-estimate encoding of the categorical columns, model
RFC = make_pipeline(
    ExtractFeatures,
    MEstimateEncoder(cols=["Gender", "family_history_with_overweight", "FAVC", "CAEC",
                           "SMOKE", "SCC", "CALC", "MTRANS"]),
    RandomForestClassifier(random_state=RANDOM_SEED),
)

# Cross-validate the random forest
val_scores, val_predictions, test_predictions = cross_val_model(RFC)

# Store the out-of-fold and test probabilities, one column per class
for k, v in target_mapping.items():
    oof_list[f"rfc_{k}"] = val_predictions[:, v]
for k, v in target_mapping.items():
    predict_list[f"rfc_{k}"] = test_predictions[:, v]

# 0.8975337326149792
# 0.9049682643904575
```

Training log (10 folds):

| Fold | Train accuracy | Valid accuracy |
|---|---|---|
| 0 | 0.9999027237354086 | 0.8954048140043763 |
| 1 | 0.9999513618677043 | 0.9010940919037199 |
| 2 | 0.9999513618677043 | 0.8940919037199124 |
| 3 | 0.9999027237354086 | 0.8905908096280087 |
| 4 | 0.9998540856031128 | 0.9102844638949672 |
| 5 | 0.9999027284665143 | 0.8975481611208407 |
| 6 | 0.9998054569330286 | 0.8966725043782837 |
| 7 | 0.9998540926997714 | 0.9080560420315237 |
| 8 | 0.9998540926997714 | 0.9063047285464098 |
| 9 | 0.9999513642332571 | 0.9610332749562172 |

Average Mean Accuracy Score: 0.9061080794184259

LGBM model

LightGBM has a large number of parameters, many of them hyperparameters, so this article tunes them with Optuna.

```python
# Optuna objective for tuning the LGBM model: returns the mean CV accuracy
def lgbm_objective(trial):
    params = {
        "learning_rate": trial.suggest_float("learning_rate", .001, .1, log=True),
        "max_depth": trial.suggest_int("max_depth", 2, 20),
        # note: in LightGBM, subsample only takes effect when subsample_freq > 0
        "subsample": trial.suggest_float("subsample", .5, 1),
        "min_child_weight": trial.suggest_float("min_child_weight", .1, 15, log=True),
        "reg_lambda": trial.suggest_float("reg_lambda", .1, 20, log=True),
        "reg_alpha": trial.suggest_float("reg_alpha", .1, 10, log=True),
        "n_estimators": 1000,
        "random_state": RANDOM_SEED,
        "device_type": "gpu",
        "num_leaves": trial.suggest_int("num_leaves", 10, 1000),
        # "boosting_type": "dart",
    }
    optuna_model = make_pipeline(
        ExtractFeatures,
        MEstimateEncoder(cols=["Gender", "family_history_with_overweight", "FAVC", "CAEC",
                               "SMOKE", "SCC", "CALC", "MTRANS"]),
        LGBMClassifier(**params, verbose=-1),
    )
    val_scores, _, _ = cross_val_model(optuna_model, verbose=False)
    return np.array(val_scores).mean()

lgbm_study = optuna.create_study(direction="maximize", study_name="LGBM")
```

Turning the tuning switch on makes this step run for a long time, so use it with care.

```python
# Tuning switch
TUNE = False
warnings.filterwarnings("ignore")
if TUNE:
    lgbm_study.optimize(lgbm_objective, 50)  # 50 trials
```

Split the columns into numerical and categorical lists, for use in the steps below:

```python
numerical_columns = train.select_dtypes(include=["int64", "float64"]).columns.tolist()
categorical_columns = train.select_dtypes(include=["object"]).columns.tolist()
categorical_columns.remove("NObeyesdad")  # the target is not a feature
```

The following parameters are the result of my tuning:

```python
best_params = {
    "objective": "multiclass",               # Objective function for the model
    "metric": "multi_logloss",               # Evaluation metric
    "verbosity": -1,                         # Verbosity level (-1 for silent)
    "boosting_type": "gbdt",                 # Gradient boosting type
    "random_state": 42,                      # Random state for reproducibility
    "num_class": 7,                          # Number of classes in the dataset
    "learning_rate": 0.030962211546832760,   # Learning rate for gradient boosting
    "n_estimators": 500,                     # Number of boosting iterations
    "lambda_l1": 0.009667446568254372,       # L1 regularization term
    "lambda_l2": 0.04018641437301800,        # L2 regularization term
    "max_depth": 10,                         # Maximum depth of the trees
    "colsample_bytree": 0.40977129346872643, # Fraction of features to consider for each tree
    "subsample": 0.9535797422450176,         # Fraction of samples to consider for each boosting iteration
    "min_child_samples": 26,                 # Minimum number of data needed in a leaf
}
```

Then cross-validate it the same way as the random forest:

```python
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# LGBM pipeline: scale the numerical columns, one-hot encode the categorical ones
lgbm = make_pipeline(
    ColumnTransformer(
        transformers=[("num", StandardScaler(), numerical_columns),
                      ("cat", OneHotEncoder(handle_unknown="ignore"), categorical_columns)]),
    LGBMClassifier(**best_params, verbose=-1),
)

# Train the LGBM model
val_scores, val_predictions, test_predictions = cross_val_model(lgbm)
for k, v in target_mapping.items():
    oof_list[f"lgbm_{k}"] = val_predictions[:, v]
for k, v in target_mapping.items():
    predict_list[f"lgbm_{k}"] = test_predictions[:, v]
```

Training log (10 folds):

| Fold | Train accuracy | Valid accuracy |
|---|---|---|
| 0 | 0.9771400778210116 | 0.9089715536105033 |
| 1 | 0.9767509727626459 | 0.9076586433260394 |
| 2 | 0.9776264591439688 | 0.9059080962800875 |
| 3 | 0.9775291828793774 | 0.9089715536105033 |
| 4 | 0.9770428015564202 | 0.9164113785557987 |
| 5 | 0.9779679976654831 | 0.9076182136602452 |
| 6 | 0.9779193618987403 | 0.9058669001751314 |
| 7 | 0.9779193618987403 | 0.9194395796847635 |
| 8 | 0.977676183065026 | 0.908493870402802 |
| 9 | 0.9742230436262828 | 0.9527145359019265 |

Average Mean Accuracy Score: 0.91420543252078

XGB model

Apply the same procedure as for LGBM to the XGB model:

```python
# Optuna objective for the XGB model: returns the mean CV accuracy
def xgb_objective(trial):
    params = {
        "grow_policy": trial.suggest_categorical("grow_policy", ["depthwise", "lossguide"]),
        "n_estimators": trial.suggest_int("n_estimators", 100, 2000),
        "learning_rate": trial.suggest_float("learning_rate", 0.01, 1.0),
        "gamma": trial.suggest_float("gamma", 1e-9, 1.0),
        "subsample": trial.suggest_float("subsample", 0.25, 1.0),
        "colsample_bytree": trial.suggest_float("colsample_bytree", 0.25, 1.0),
        "max_depth": trial.suggest_int("max_depth", 0, 24),
        "min_child_weight": trial.suggest_int("min_child_weight", 1, 30),
        "reg_lambda": trial.suggest_float("reg_lambda", 1e-9, 10.0, log=True),
        "reg_alpha": trial.suggest_float("reg_alpha", 1e-9, 10.0, log=True),
    }
    params["booster"] = "gbtree"
    params["objective"] = "multi:softmax"
    params["device"] = "cuda"
    params["verbosity"] = 0
    # XGBoost >= 2.0 deprecates "gpu_hist"; "hist" together with device="cuda" trains on the GPU
    params["tree_method"] = "hist"
    optuna_model = make_pipeline(
        # ExtractFeatures,
        MEstimateEncoder(cols=["Gender", "family_history_with_overweight", "FAVC", "CAEC",
                               "SMOKE", "SCC", "CALC", "MTRANS"]),
        XGBClassifier(**params, seed=RANDOM_SEED),
    )
    val_scores, _, _ = cross_val_model(optuna_model, verbose=False)
    return np.array(val_scores).mean()

xgb_study = optuna.create_study(direction="maximize")

# Optuna tuning switch
TUNE = False
if TUNE:
    xgb_study.optimize(xgb_objective, 50)  # 50 trials
```

```python
# XGB pipeline
# Earlier candidate parameter sets, kept for reference; the pipeline below uses best_params
params = {
    'n_estimators': 1312,
    'learning_rate': 0.018279520260162645,
    'gamma': 0.0024196354156454324,
    'reg_alpha': 0.9025931173755949,
    'reg_lambda': 0.06835667255875388,
    'max_depth': 5,
    'min_child_weight': 5,
    'subsample': 0.883274050086088,
    'colsample_bytree': 0.6579828557036317
}
# {'eta': 0.018387615982905264, 'max_depth': 29, 'subsample': 0.8149303101087905, 'colsample_bytree': 0.26750463604831476, 'min_child_weight': 0.5292380065098192, 'reg_lambda': 0.18952063379457604, 'reg_alpha': 0.7201451827004944}
params = {'grow_policy': 'depthwise', 'n_estimators': 690,
          'learning_rate': 0.31829021594473056, 'gamma': 0.6061120644431842,
          'subsample': 0.9032243794829076, 'colsample_bytree': 0.44474031945048287,
          'max_depth': 10, 'min_child_weight': 22, 'reg_lambda': 4.42638097284094,
          'reg_alpha': 5.927900973354344e-07, 'seed': RANDOM_SEED}

best_params = {'grow_policy': 'depthwise', 'n_estimators': 982,
               'learning_rate': 0.050053726931263504, 'gamma': 0.5354391952653927,
               'subsample': 0.7060590452456204, 'colsample_bytree': 0.37939433412123275,
               'max_depth': 23, 'min_child_weight': 21, 'reg_lambda': 9.150224029846654e-08,
               'reg_alpha': 5.671063656994295e-08}
best_params["booster"] = "gbtree"
best_params["objective"] = "multi:softmax"
best_params["device"] = "cuda"
best_params["verbosity"] = 0
best_params["tree_method"] = "hist"  # replaces the deprecated "gpu_hist" in XGBoost >= 2.0

XGB = make_pipeline(
    # ExtractFeatures,
    # MEstimateEncoder(cols=['Gender','family_history_with_overweight','FAVC','CAEC',
    #                        'SMOKE','SCC','CALC','MTRANS']),
    # FeatureDropper(['FAVC','FCVC']),
    # ColumnRounder,
    # ColumnTransformer(
    #     transformers=[('num', StandardScaler(), numerical_columns),
    #                   ('cat', OneHotEncoder(handle_unknown="ignore"), categorical_columns)]),
    MEstimateEncoder(cols=["Gender", "family_history_with_overweight", "FAVC", "CAEC",
                           "SMOKE", "SCC", "CALC", "MTRANS"]),
    XGBClassifier(**best_params, seed=RANDOM_SEED),
)

# The different parameter sets above give different results
val_scores, val_predictions, test_predictions = cross_val_model(XGB)
for k, v in target_mapping.items():
    oof_list[f"xgb_{k}"] = val_predictions[:, v]
for k, v in target_mapping.items():
    predict_list[f"xgb_{k}"] = test_predictions[:, v]

# 0.90634942296329
# 0.9117093455898445 with rounder
# 0.9163506382522121
```

Training log (10 folds):

| Fold | Train accuracy | Valid accuracy |
|---|---|---|
| 0 | 0.9452821011673151 | 0.9111597374179431 |
| 1 | 0.945136186770428 | 0.9063457330415755 |
| 2 | 0.9449902723735408 | 0.9080962800875274 |
| 3 | 0.9454280155642023 | 0.9059080962800875 |
| 4 | 0.9432392996108949 | 0.9199124726477024 |
| 5 | 0.9460629346821653 | 0.9128721541155866 |
| 6 | 0.946160206215651 | 0.9106830122591943 |
| 7 | 0.9456252127814795 | 0.9168126094570929 |
| 8 | 0.9446524974466223 | 0.9106830122591943 |
| 9 | 0.9407130003404504 | 0.9610332749562172 |

Average Mean Accuracy Score: 0.9163506382522121

CatBoost model

Tune the parameters with Optuna:

```python
# Optuna objective for the CatBoost model: returns the mean CV accuracy
def cat_objective(trial):
    params = {
        "iterations": 1000,  # high number of estimators
        "learning_rate": trial.suggest_float("learning_rate", 0.01, 0.3),
        "depth": trial.suggest_int("depth", 3, 10),
        "l2_leaf_reg": trial.suggest_float("l2_leaf_reg", 0.01, 10.0),
        "bagging_temperature": trial.suggest_float("bagging_temperature", 0.0, 1.0),
        "random_seed": RANDOM_SEED,
        "verbose": False,
        "task_type": "GPU",
    }
    cat_features = ["Gender", "family_history_with_overweight", "FAVC", "FCVC", "NCP",
                    "CAEC", "SMOKE", "CH2O", "SCC", "FAF", "TUE", "CALC", "MTRANS"]
    optuna_model = make_pipeline(
        ExtractFeatures,
        # AgeRounder,
        # HeightRounder,
        # MEstimateEncoder(cols = raw_cat_cols),
        # CatBoost only accepts int or str values in categorical features, so the float
        # columns FCVC, NCP, CH2O, FAF and TUE are rounded to int first
        ColumnRounder,
        CatBoostClassifier(**params, cat_features=cat_features),
    )
    val_scores, _, _ = cross_val_model(optuna_model, verbose=False)
    return np.array(val_scores).mean()

cat_study = optuna.create_study(direction="maximize")
```

The tuned parameters are:

```python
params = {'learning_rate': 0.13762007048684638, 'depth': 5,
          'l2_leaf_reg': 5.285199432056192, 'bagging_temperature': 0.6029582154263095,
          'random_seed': RANDOM_SEED,
          'verbose': False,
          'task_type': "GPU",
          'iterations': 1000}

CB = make_pipeline(
    # ExtractFeatures,
    # AgeRounder,
    # HeightRounder,
    # MEstimateEncoder(cols = raw_cat_cols),
    # CatBoostEncoder(cols = cat_features),
    # only the object-dtype columns are declared as categorical here
    CatBoostClassifier(**params, cat_features=categorical_columns),
)
```

Train the model with the parameters above:

```python
# Train the CatBoost model
val_scores, val_predictions, test_predictions = cross_val_model(CB)
for k, v in target_mapping.items():
    oof_list[f"cat_{k}"] = val_predictions[:, v]
for k, v in target_mapping.items():
    predict_list[f"cat_{k}"] = test_predictions[:, v]

# best 0.91179835368868 with extract features, n_splits = 10
# best 0.9121046227778054 without extract features, n_splits = 10
```

Training log (10 folds):

| Fold | Train accuracy | Valid accuracy |
|---|---|---|
| 0 | 0.9478599221789883 | 0.9050328227571116 |
| 1 | 0.9498540856031128 | 0.9054704595185996 |
| 2 | 0.9500972762645914 | 0.9024070021881838 |
| 3 | 0.949124513618677 | 0.9050328227571116 |
| 4 | 0.9482976653696498 | 0.912472647702407 |
| 5 | 0.9502456106220515 | 0.9089316987740805 |
| 6 | 0.950780604056223 | 0.9045534150612959 |
| 7 | 0.95073196828948 | 0.9098073555166375 |
| 8 | 0.9513155974903944 | 0.9111208406304728 |
| 9 | 0.9446524974466223 | 0.957968476357268 |

Average Mean Accuracy Score: 0.9122797541263168

Model ensembling and evaluation

Blend the predicted probabilities of the four models above with different weights:

```python
from sklearn.metrics import ConfusionMatrixDisplay, accuracy_score, confusion_matrix

# skf = StratifiedKFold(n_splits=5)
# Blend weight per model (a weight of 0 leaves that model out)
weights = {"rfc_": 0,
           "lgbm_": 3,
           "xgb_": 1,
           "cat_": 0}

# Weighted sum of the out-of-fold class probabilities
tmp = oof_list.copy()
for k, v in target_mapping.items():
    tmp[f"{k}"] = (weights["rfc_"] * tmp[f"rfc_{k}"] +
                   weights["lgbm_"] * tmp[f"lgbm_{k}"] +
                   weights["xgb_"] * tmp[f"xgb_{k}"] +
                   weights["cat_"] * tmp[f"cat_{k}"])
# The predicted class is the column with the largest blended score
tmp["pred"] = tmp[list(target_mapping)].idxmax(axis=1)
tmp["label"] = train[TARGET]
print(f"Ensemble Accuracy Score: {accuracy_score(train[TARGET], tmp['pred'])}")

# Row-normalised confusion matrix: each row sums to 1, so the diagonal is per-class recall
cm = confusion_matrix(y_true=tmp["label"].map(target_mapping),
                      y_pred=tmp["pred"].map(target_mapping),
                      normalize="true")
cm = cm.round(2)
# Pass an explicit Axes; otherwise disp.plot() opens a second, differently sized figure
fig, ax = plt.subplots(figsize=(8, 8))
disp = ConfusionMatrixDisplay(confusion_matrix=cm,
                              display_labels=list(target_mapping))
disp.plot(ax=ax, xticks_rotation=50)
plt.tight_layout()
plt.show()

# BEST
# Best LB [0,1,0,0]
# Average Train Score:0.9142044335854003
# Average Valid Score:0.91420543252078
# Best CV [1,3,1,1]
# Average Train Score:0.9168308163711971
# Average Valid Score:0.9168308163711971
# adding original data improves score
```
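The blend above is a weighted soft vote: weighted class probabilities are summed and the class with the largest total wins. The toy sketch below uses made-up probabilities for three classes and two models with the 3:1 LGBM/XGB weights from above. Dividing by the total weight (which the article's code skips) keeps each row a probability distribution, but does not change which class wins.

```python
import numpy as np
import pandas as pd

classes = ["Normal_Weight", "Obesity_Type_I", "Obesity_Type_II"]
# Made-up class probabilities from two models for two samples
probs = {
    "lgbm": np.array([[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]]),
    "xgb": np.array([[0.2, 0.7, 0.1], [0.1, 0.2, 0.7]]),
}
weights = {"lgbm": 3, "xgb": 1}

# Weighted average of the probability matrices
blend = sum(weights[m] * p for m, p in probs.items()) / sum(weights.values())
# Column with the largest blended probability = predicted class name
pred = pd.DataFrame(blend, columns=classes).idxmax(axis=1)
print(pred.tolist())
```

On its own, XGB would pick Obesity_Type_I and Obesity_Type_II for these two rows; with weight 3 on LGBM, the blend follows LGBM on both.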

Final submission

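```python
# Apply the same weights to the fold-averaged test probabilities
for k, v in target_mapping.items():
    predict_list[f"{k}"] = (weights["rfc_"] * predict_list[f"rfc_{k}"] +
                            weights["lgbm_"] * predict_list[f"lgbm_{k}"] +
                            weights["xgb_"] * predict_list[f"xgb_{k}"] +
                            weights["cat_"] * predict_list[f"cat_{k}"])
final_pred = predict_list[list(target_mapping)].idxmax(axis=1)

# Build the submission from the test ids saved before the id column was dropped
sample_sub = pd.DataFrame({"id": test_ids, TARGET: final_pred})
sample_sub.to_csv("submission.csv", index=False)
sample_sub
```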

| | id | NObeyesdad |
|---|---|---|
| 0 | 20758 | Obesity_Type_II |
| 1 | 20759 | Overweight_Level_I |
| 2 | 20760 | Obesity_Type_III |
| 3 | 20761 | Obesity_Type_I |
| 4 | 20762 | Obesity_Type_III |
| … | … | … |
| 13835 | 34593 | Overweight_Level_II |
| 13836 | 34594 | Normal_Weight |
| 13837 | 34595 | Insufficient_Weight |
| 13838 | 34596 | Normal_Weight |
| 13839 | 34597 | Obesity_Type_II |

13840 rows × 2 columns

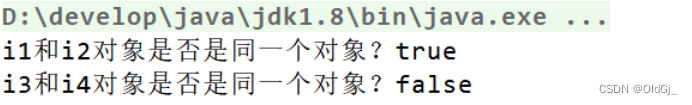

Conclusion

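- This article records the whole process, from exploratory data analysis (EDA), visualization (VIS), feature engineering (FE), cross-validation (CV) and modeling (MOD) to model evaluation (EV) and the final submission (SUB), giving machine-learning beginners a standard template;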

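- The task here is multi-class classification. Apart from accuracy, the only evaluation tool used in this article is the confusion matrix; in practice several others are available, such as precision, recall, the F1 score and the AUC score;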

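- The ensemble in this article uses weighted averaging. Other options include stacking, blending and voting (hard or soft voting); their principles are covered in my earlier articles;

- The score at submission time was 0.92+ (public leaderboard). The final result, shown in the screenshot above, ranked 34th, within the top 1%.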
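As a pointer for the metrics mentioned in the conclusion, here is a minimal scikit-learn sketch on made-up labels. It shows accuracy, macro-averaged F1, a row-normalised confusion matrix like the one used above, and a per-class report. AUC is not included because it needs predicted probabilities rather than class labels (scikit-learn's `roc_auc_score` with `multi_class="ovr"` covers the multi-class case).

```python
import numpy as np
from sklearn.metrics import (accuracy_score, classification_report,
                             confusion_matrix, f1_score)

# Made-up true and predicted class names
y_true = ["Normal_Weight", "Normal_Weight", "Obesity_Type_I", "Obesity_Type_I", "Obesity_Type_II"]
y_pred = ["Normal_Weight", "Obesity_Type_I", "Obesity_Type_I", "Obesity_Type_I", "Obesity_Type_II"]

acc = accuracy_score(y_true, y_pred)
# Macro F1: unweighted mean of the per-class F1 scores
macro_f1 = f1_score(y_true, y_pred, average="macro")
# normalize="true" divides each row by that class's true count, so the diagonal is per-class recall
cm = confusion_matrix(y_true, y_pred, normalize="true")

print(acc, round(macro_f1, 4))
print(np.round(cm, 2))
# Per-class precision, recall, F1 and support
print(classification_report(y_true, y_pred))
```

Here one Normal_Weight sample is misclassified, so accuracy is 0.8, but Normal_Weight recall drops to 0.5. Per-class metrics surface this kind of weakness, which a single accuracy figure hides.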