Week 03 of Advanced Learning Algorithms

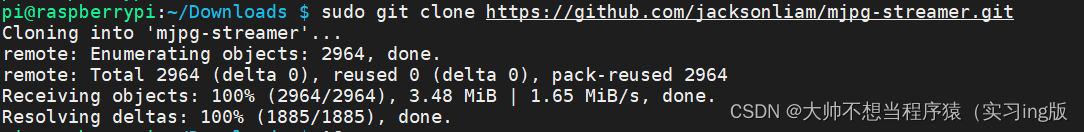

笔者在2022年7月份取得这门课的证书,现在(2024年2月25日)才想起来将笔记发布到博客上。

Website: https://www.coursera.org/learn/advanced-learning-algorithms?specialization=machine-learning-introduction

Offered by: DeepLearning.AI and Stanford

课程地址:https://www.coursera.org/learn/machine-learning

本笔记包含字幕,quiz的答案以及作业的代码,仅供个人学习使用,如有侵权,请联系删除。

文章目录

- Week 03 of Advanced Learning Algorithms

- Learning Objectives

- [1] Advice for applying machine learning

- Deciding what to try next

- Evaluating a model

- Model selection and training/cross validation/test sets

- [2] Practice quiz: Advice for applying machine learning

- Question 3

- [3] Bias and variance

- Diagnosing bias and variance

- Regularization and bias/variance

- Establishing a baseline level of performance

- Learning curves

- Deciding what to try next: revisited

- Bias/variance and neural networks

- [4] Practice quiz: Bias and variance

- [5] Machine learning development process

- Iterative loop of ML development

- Error analysis

- Adding data

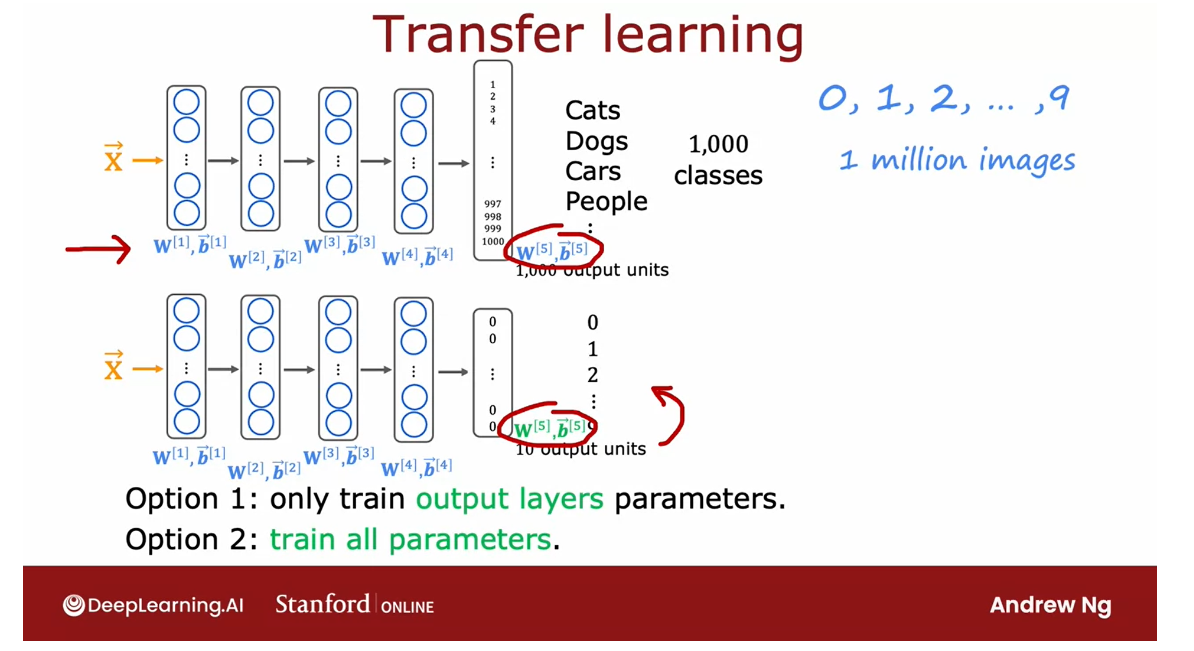

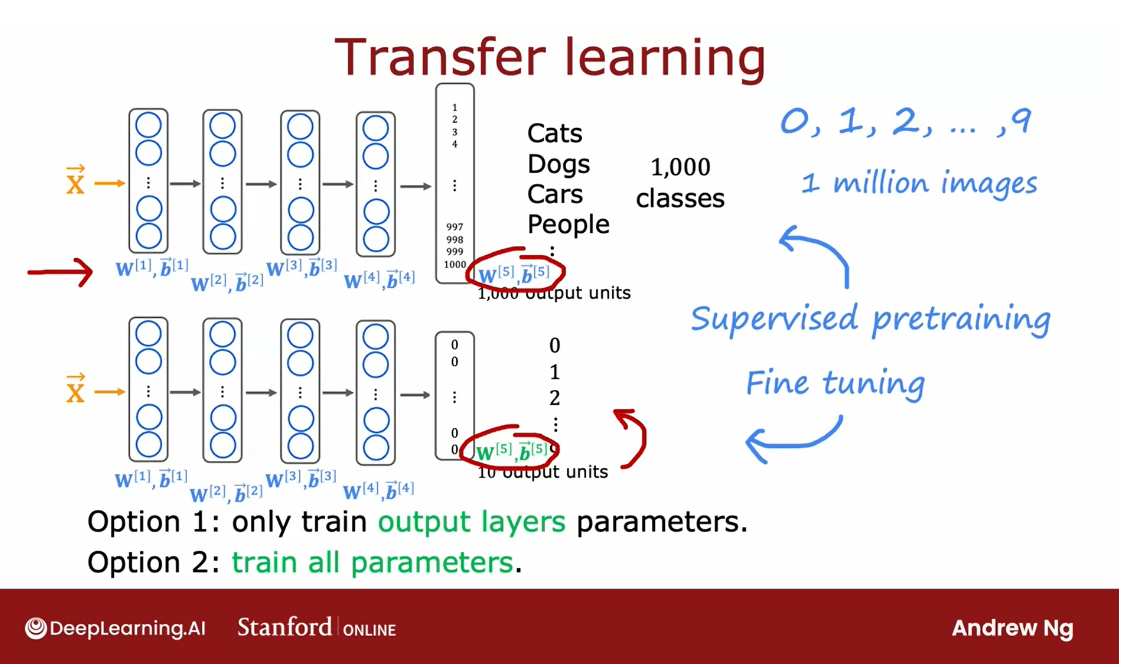

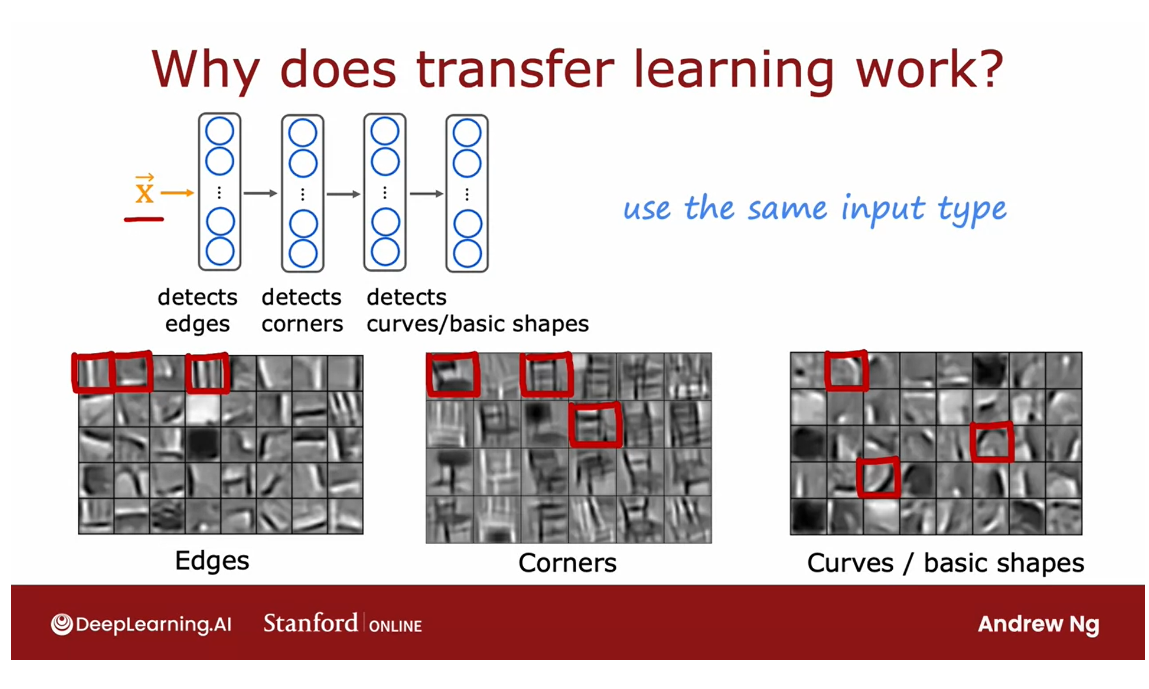

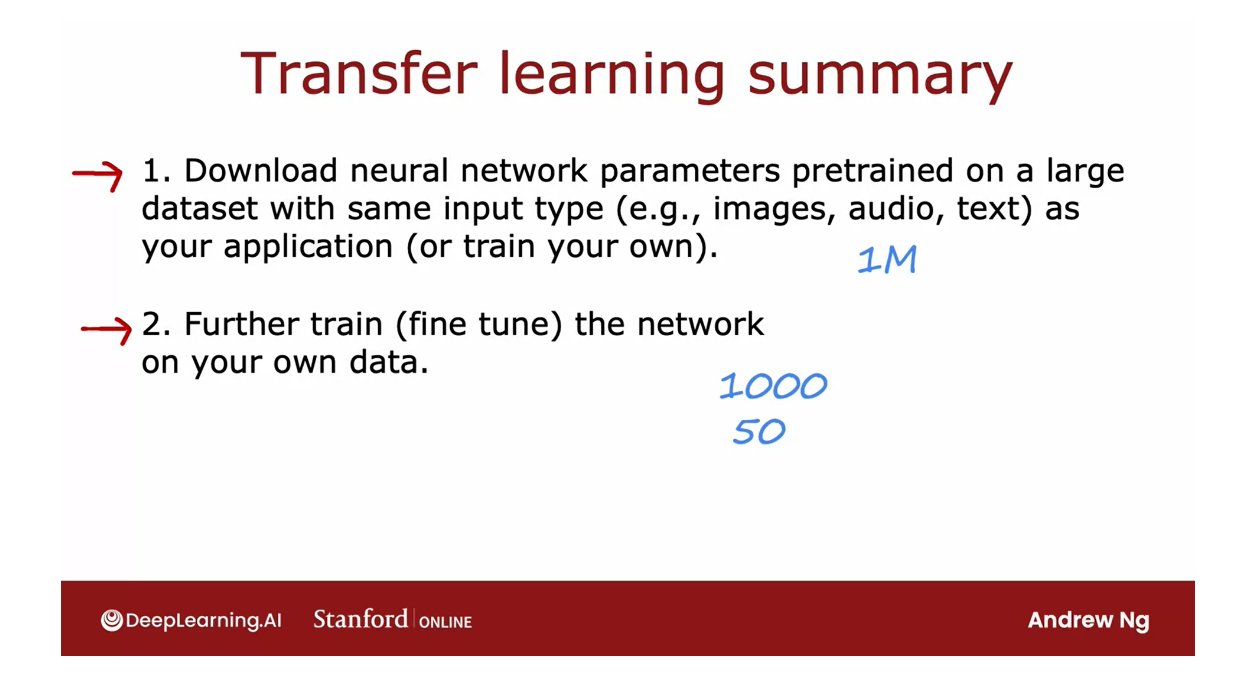

- Transfer learning: using data from a different task

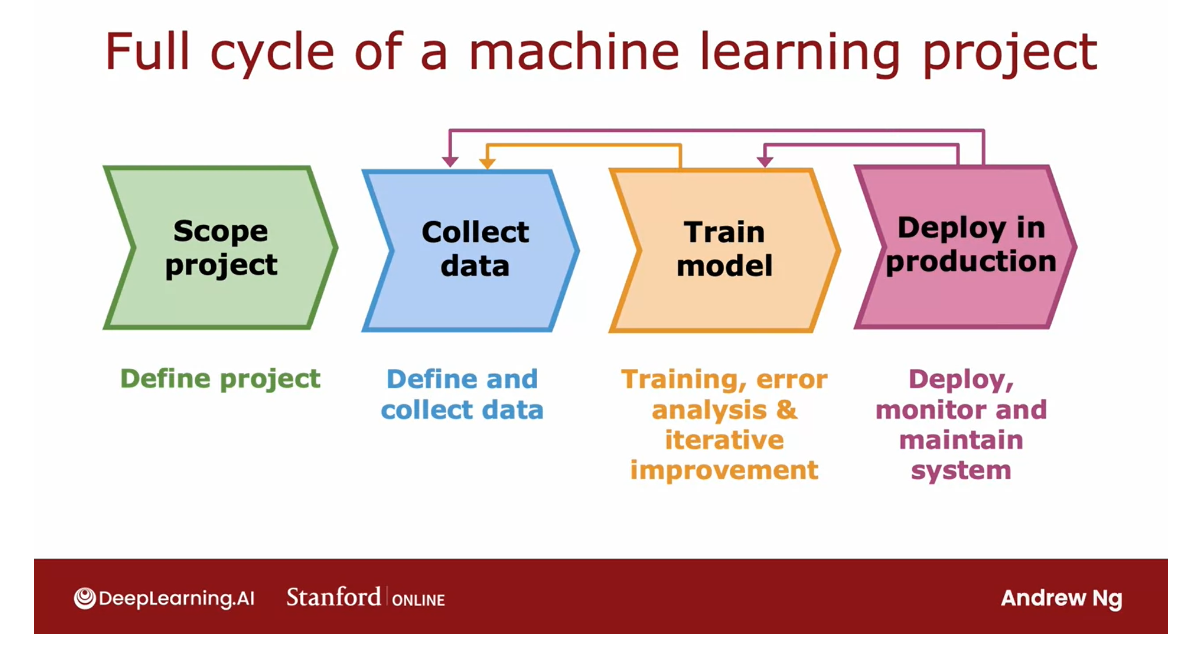

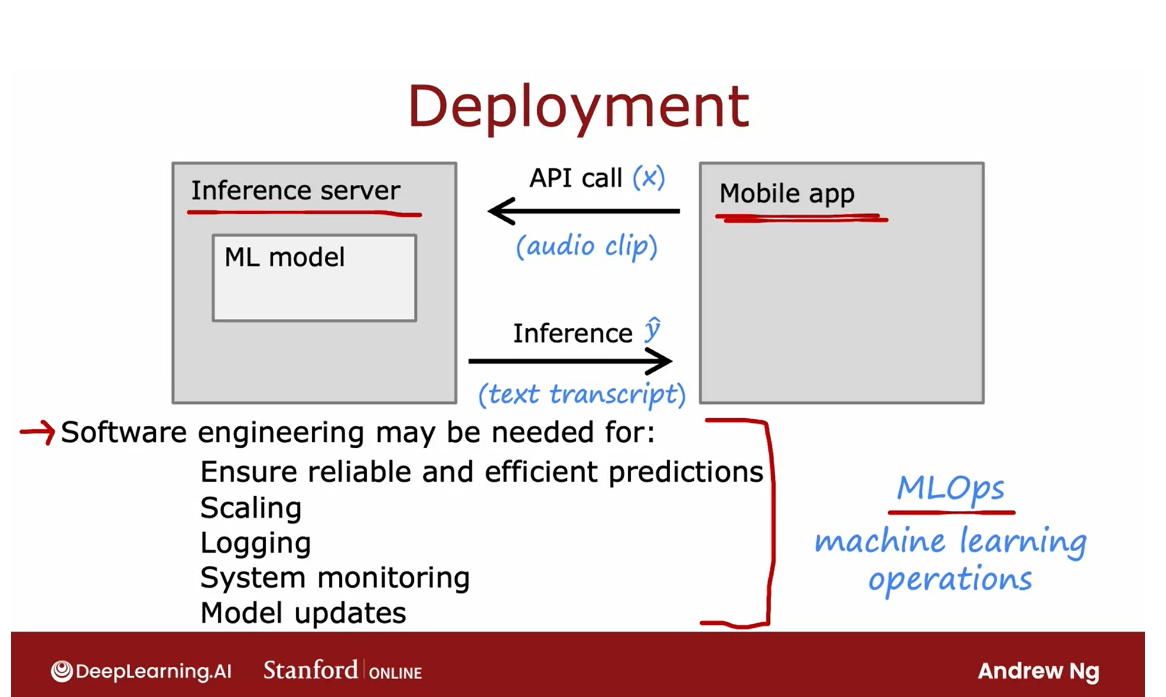

- Full cycle of a machine learning project

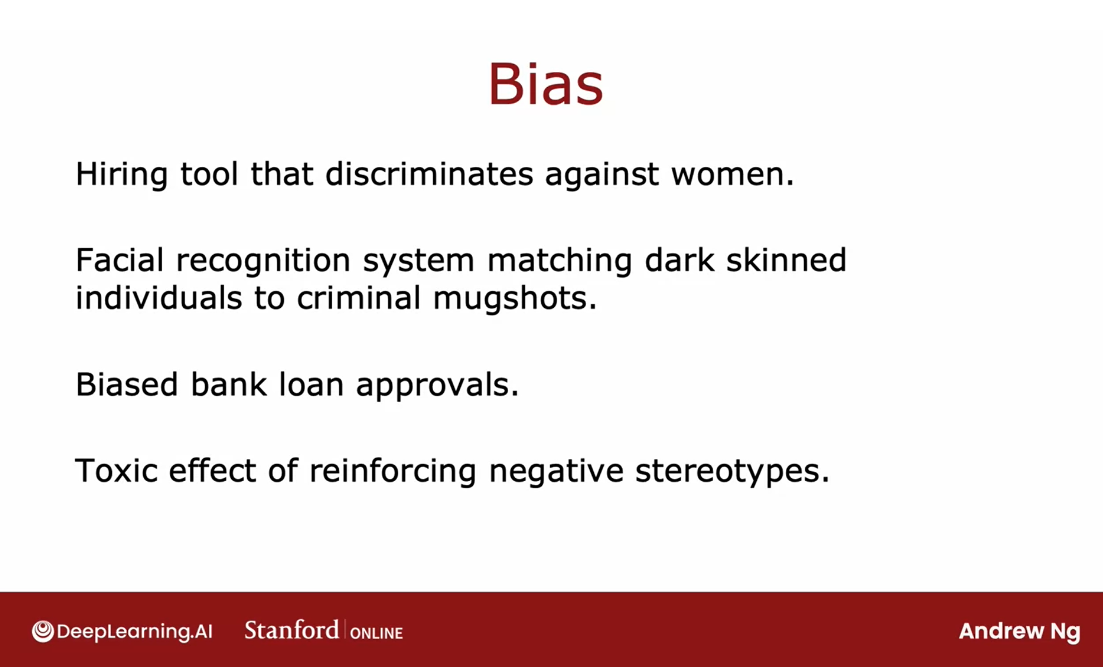

- Fairness, bias, and ethics

- [6] Practice quiz: Machine learning development process

- [7] Skewed datasets (optional)

- Error metrics for skewed datasets

- Trading off precision and recall

- [8] Practice Lab: Advice for applying machine learning

- 1 - Packages

- 2 - Evaluating a Learning Algorithm (Polynomial Regression)

- 2.1 Splitting your data set

- 2.1.1 Plot Train, Test sets

- 2.2 Error calculation for model evaluation, linear regression

- Exercise 1

- 2.3 Compare performance on training and test data

- 3 - Bias and Variance

- 3.1 Plot Train, Cross-Validation, Test

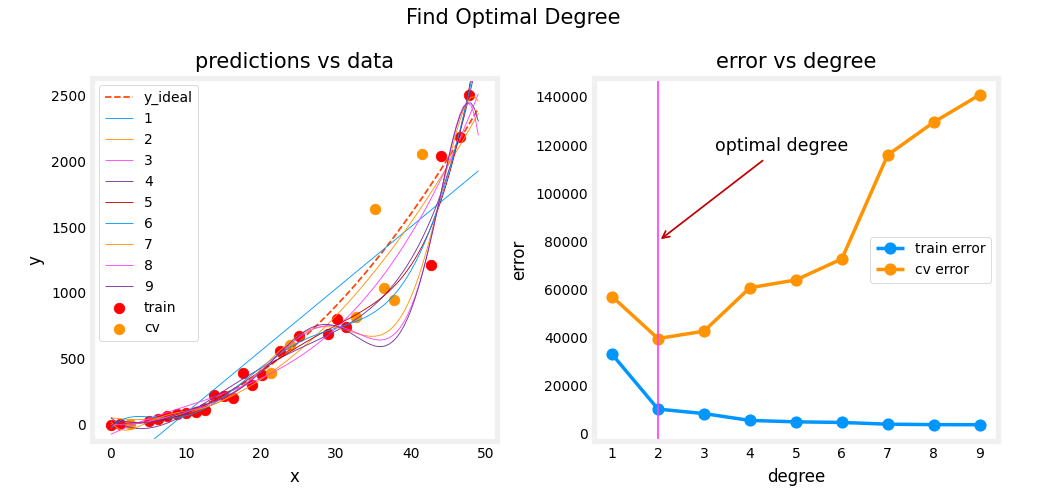

- 3.2 Finding the optimal degree

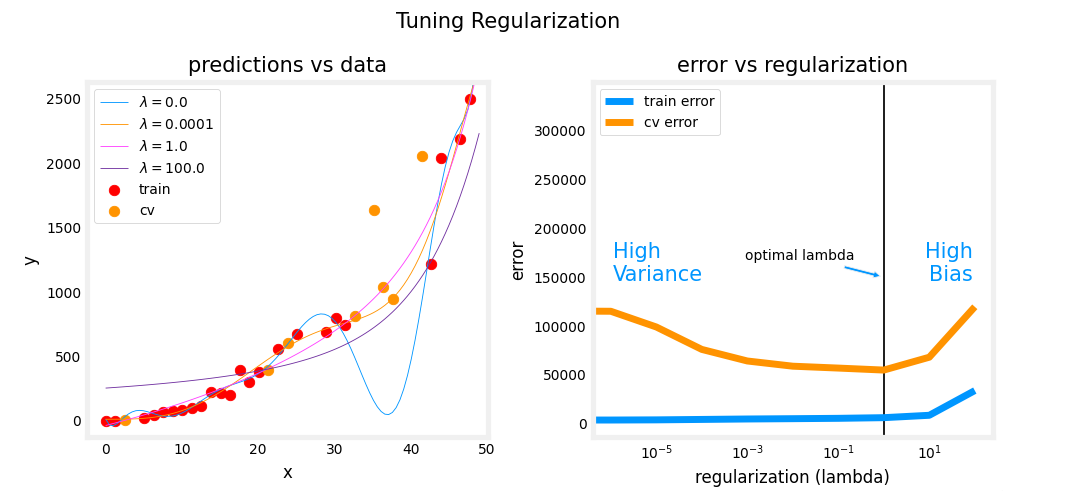

- 3.3 Tuning Regularization.

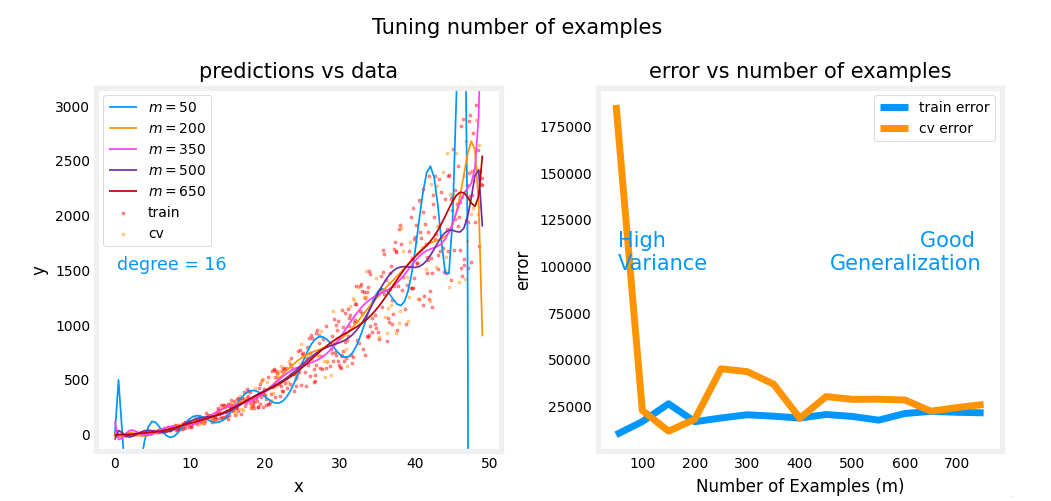

- 3.4 Getting more data: Increasing Training Set Size (m)

- 4 - Evaluating a Learning Algorithm (Neural Network)

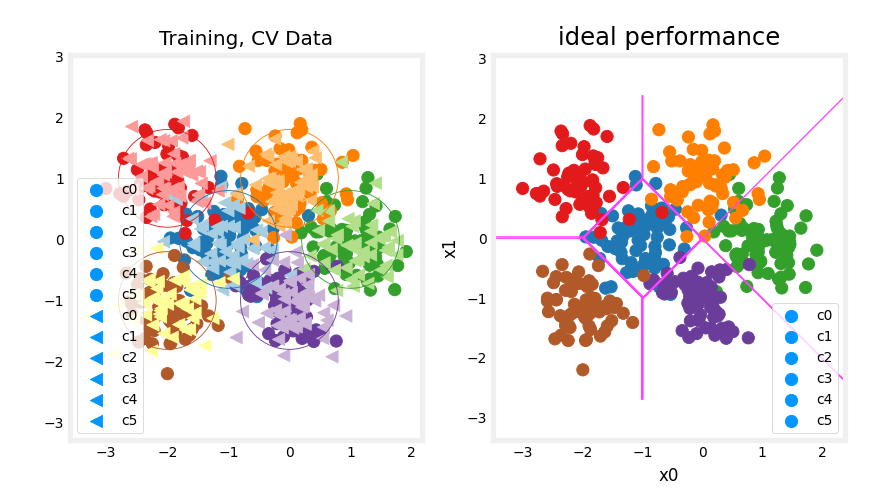

- 4.1 Data Set

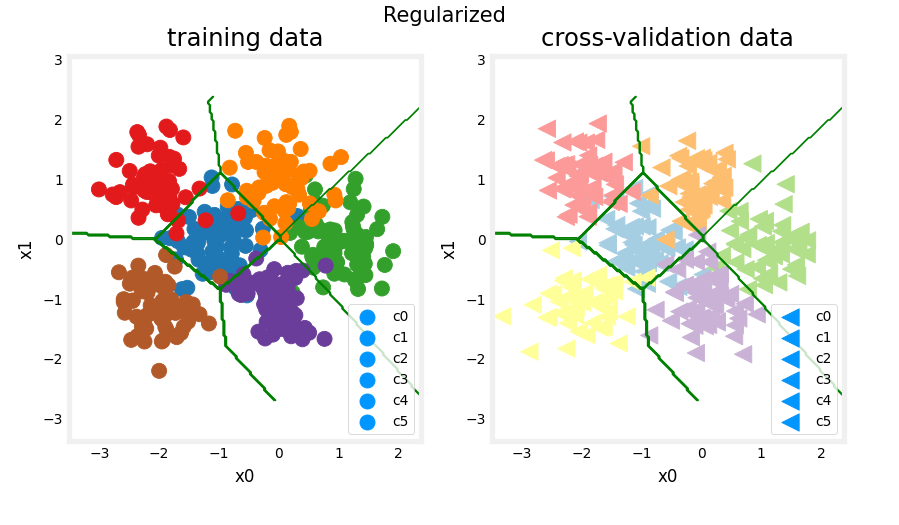

- 4.2 Evaluating categorical model by calculating classification error

- Exercise 2

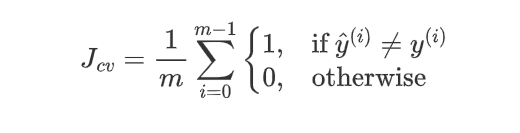

- 5 - Model Complexity

- 5.1 Complex model

- Exercise 3

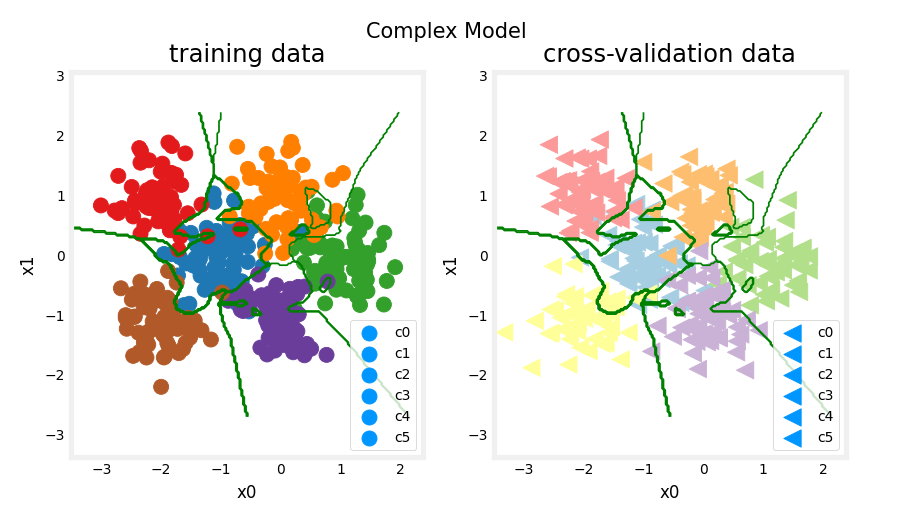

- 5.1 Simple model

- Exercise 4

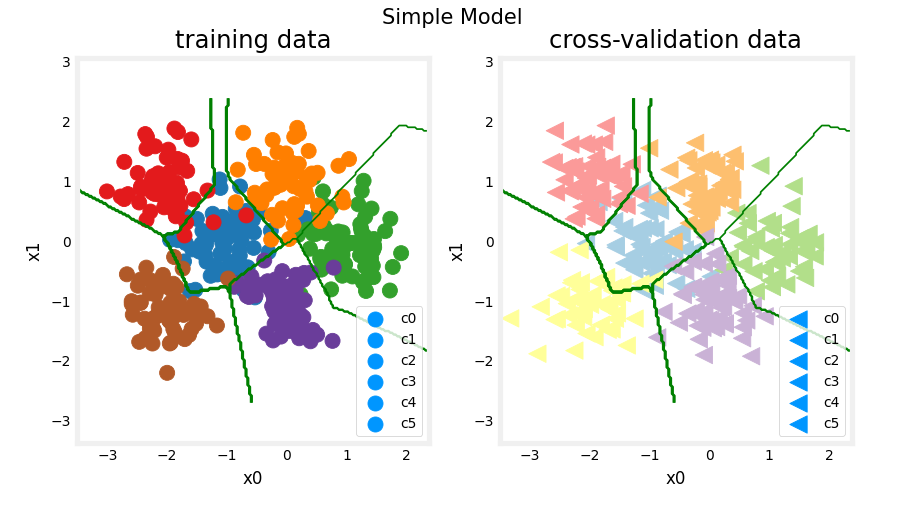

- 6 - Regularization

- Exercise 5

- 7 - Iterate to find optimal regularization value

- 7.1 Test

- Congratulations!

- 其他

- 英文发音

This week you’ll learn best practices for training and evaluating your learning algorithms to improve performance. This will cover a wide range of useful advice about the machine learning lifecycle, tuning your model, and also improving your training data.

Learning Objectives

- Evaluate and then modify your learning algorithm or data to improve your model’s performance

- Evaluate your learning algorithm using cross validation and test datasets.

- Diagnose bias and variance in your learning algorithm

- Use regularization to adjust bias and variance in your learning algorithm

- Identify a baseline level of performance for your learning algorithm

- Understand how bias and variance apply to neural networks

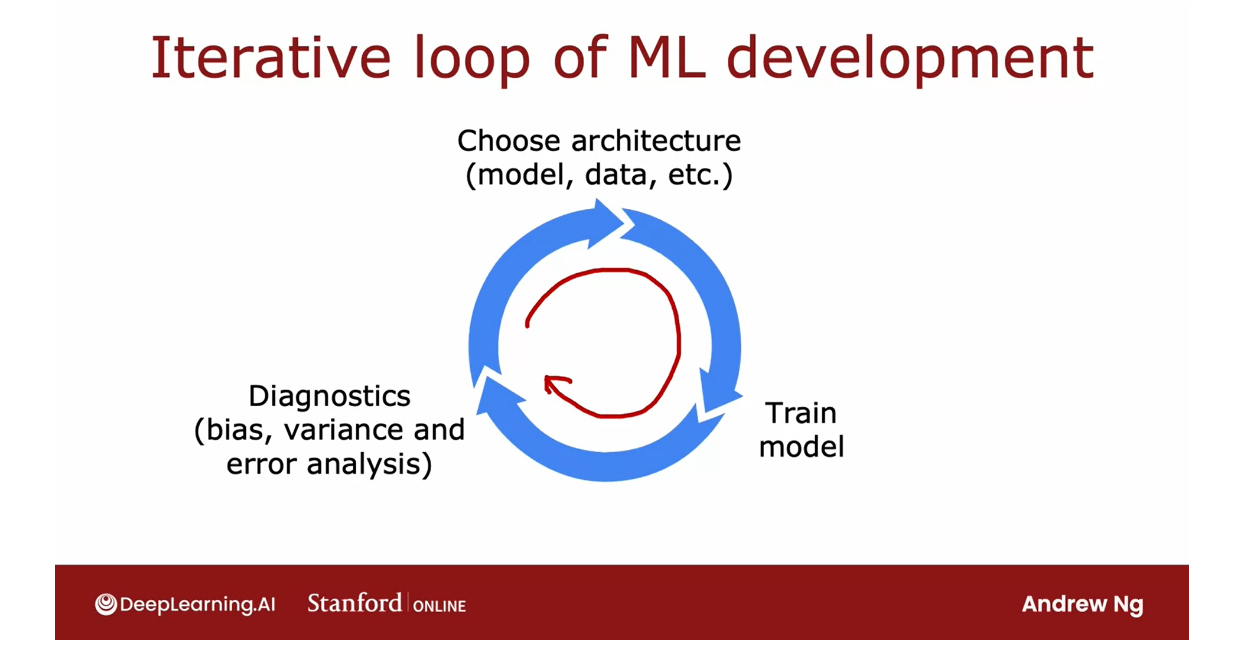

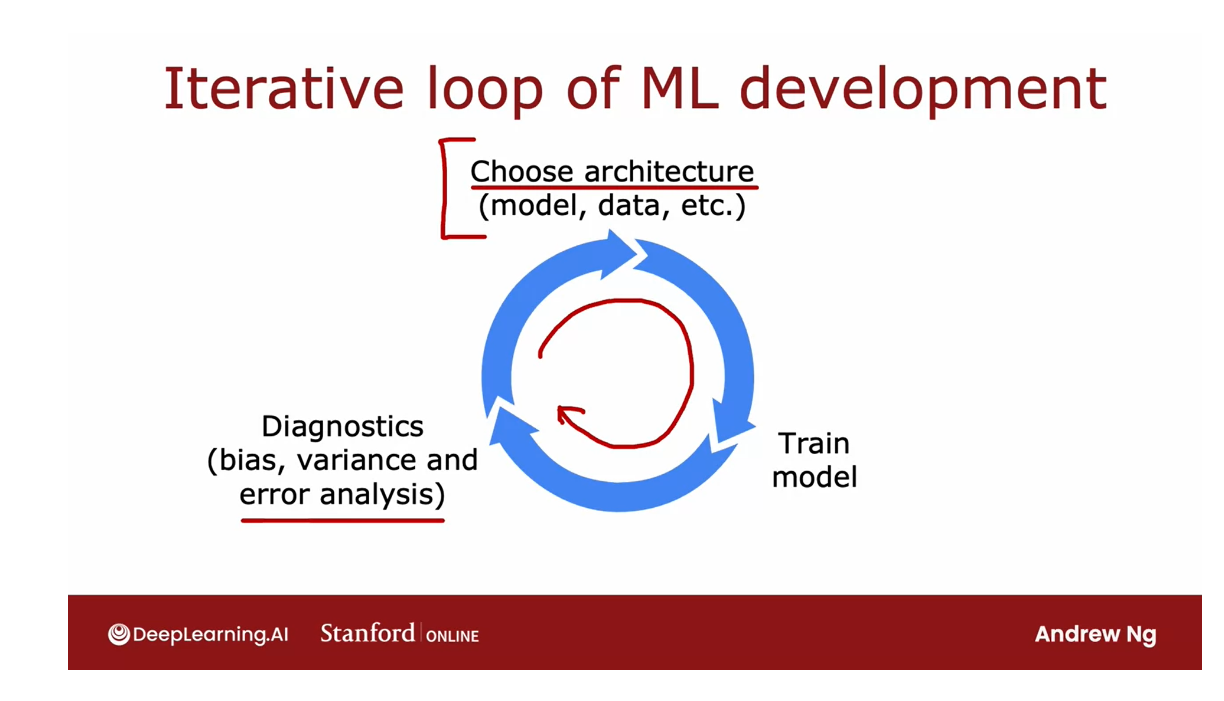

- Learn about the iterative loop of Machine Learning Development that’s used to update and improve a machine learning model

- Learn to use error analysis to identify the types of errors that a learning algorithm is making

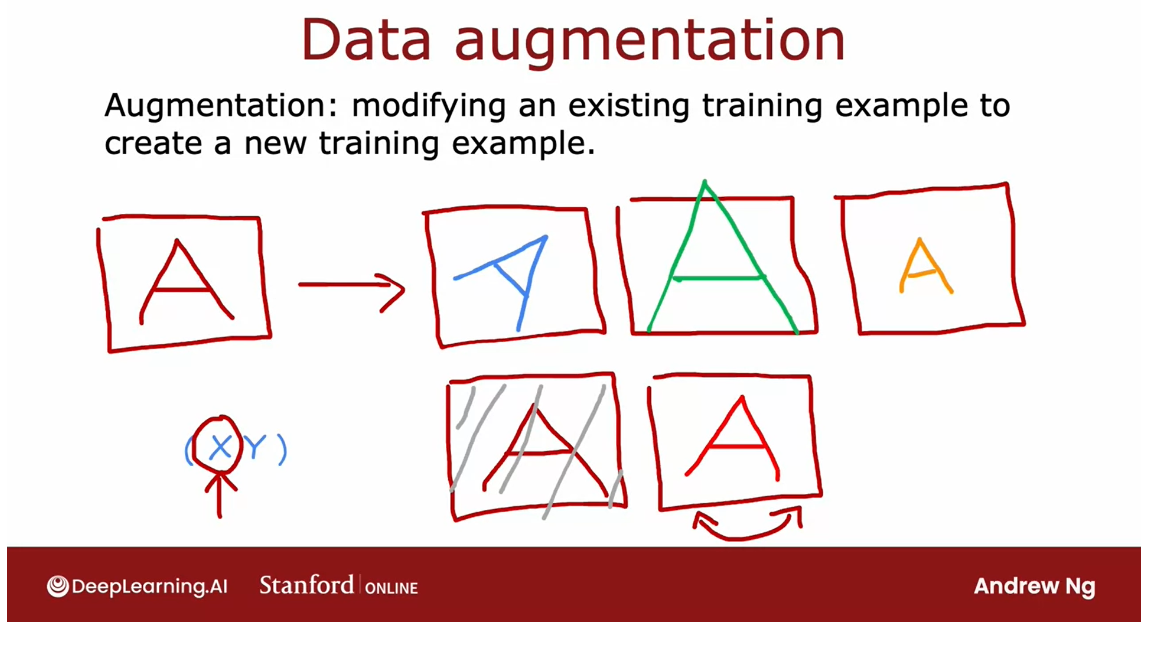

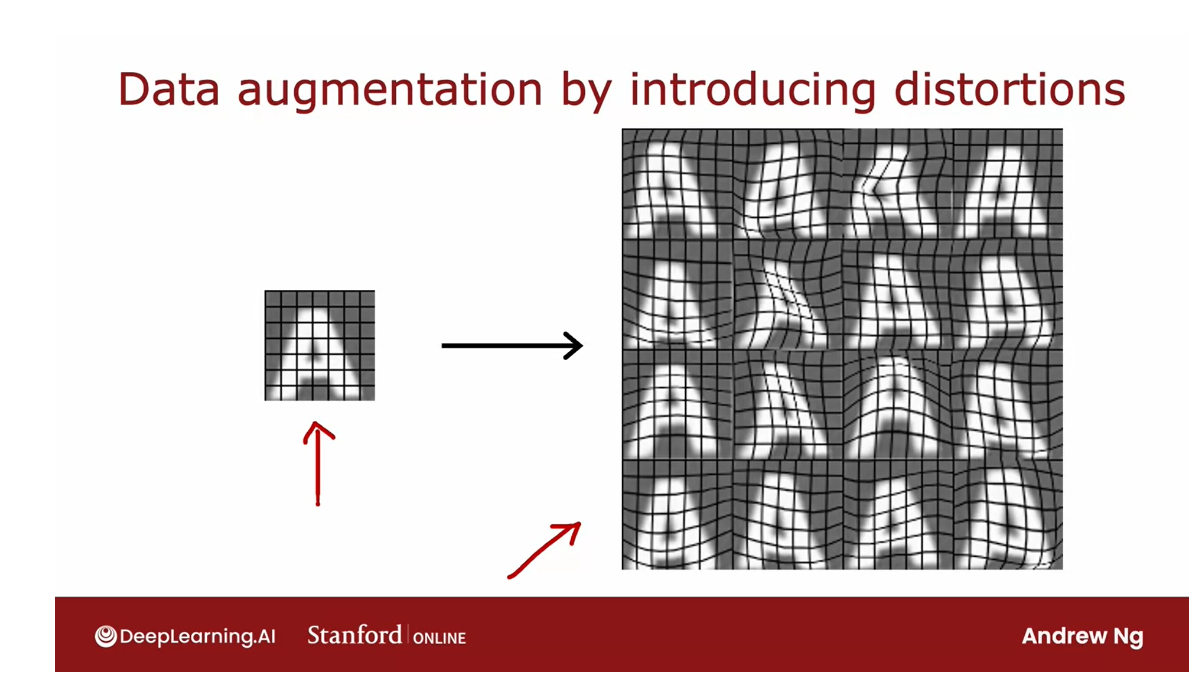

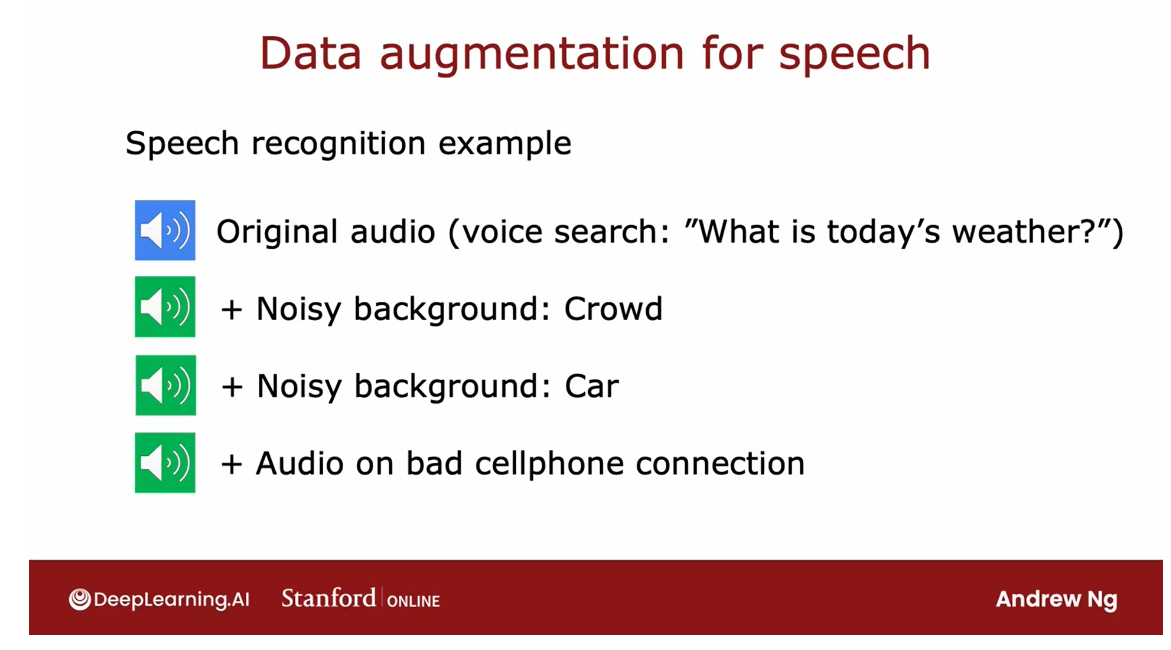

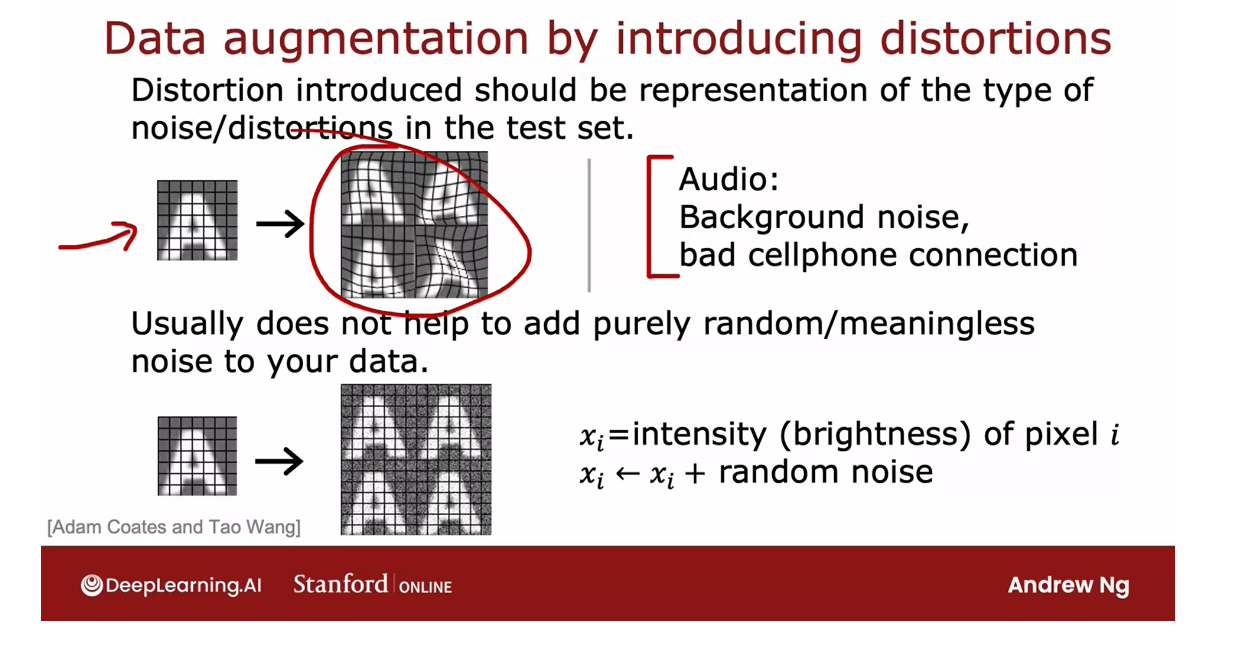

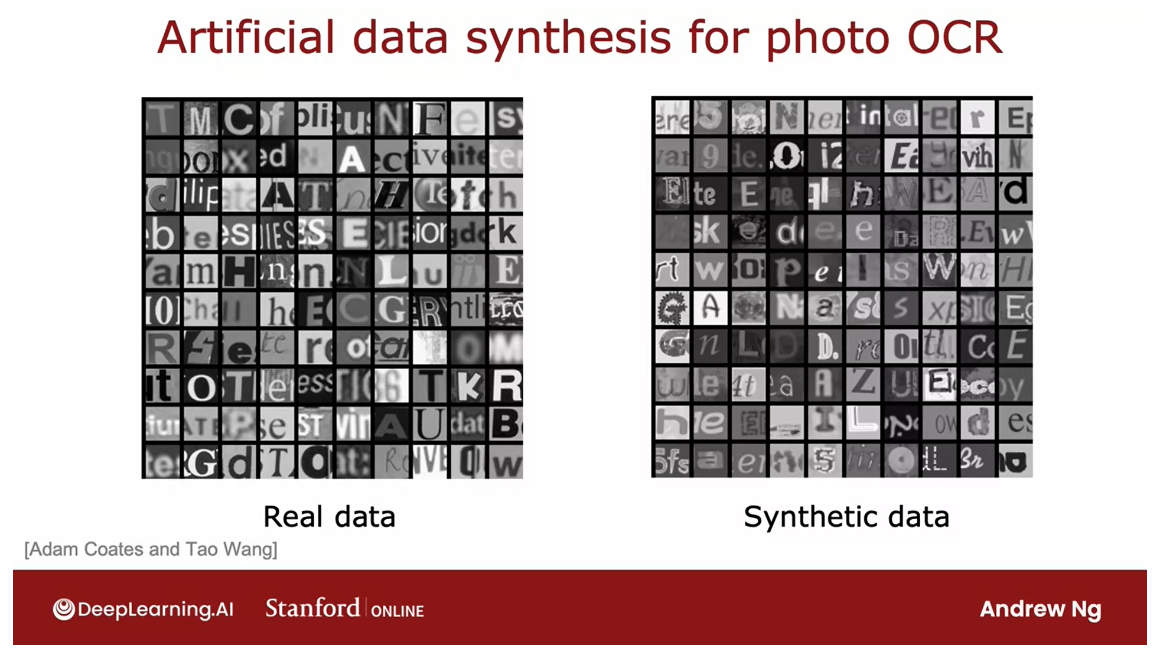

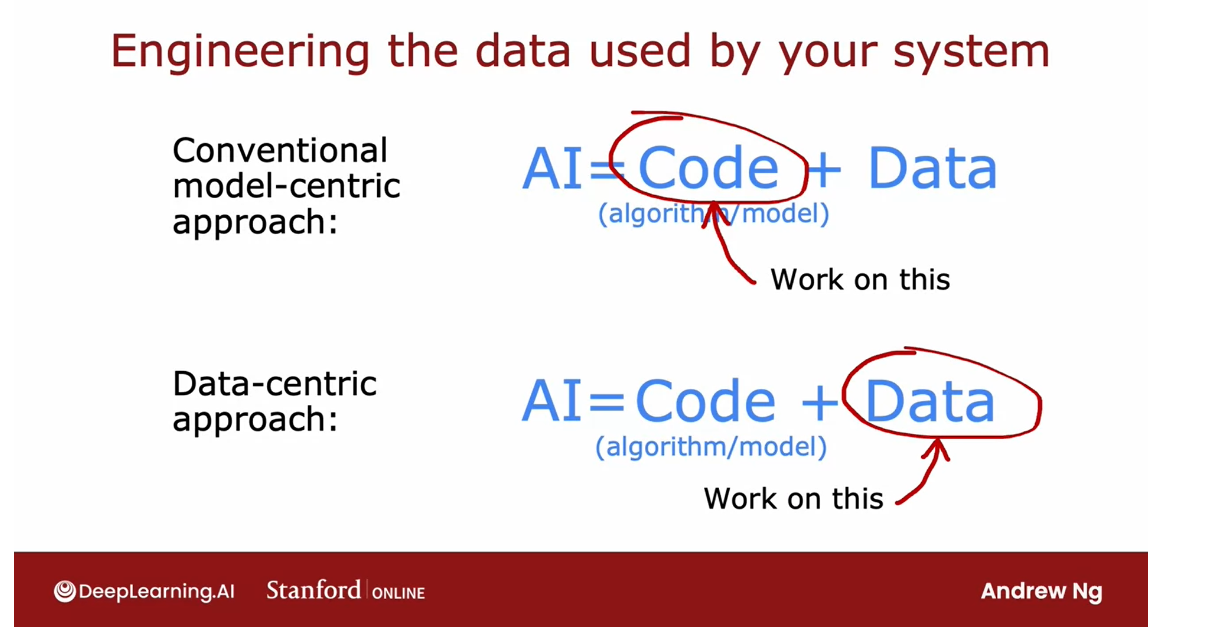

- Learn how to add more training data to improve your model, including data augmentation and data synthesis

- Use transfer learning to improve your model’s performance.

- Learn to include fairness and ethics in your machine learning model development

- Measure precision and recall to work with skewed (imbalanced) datasets

[1] Advice for applying machine learning

Deciding what to try next

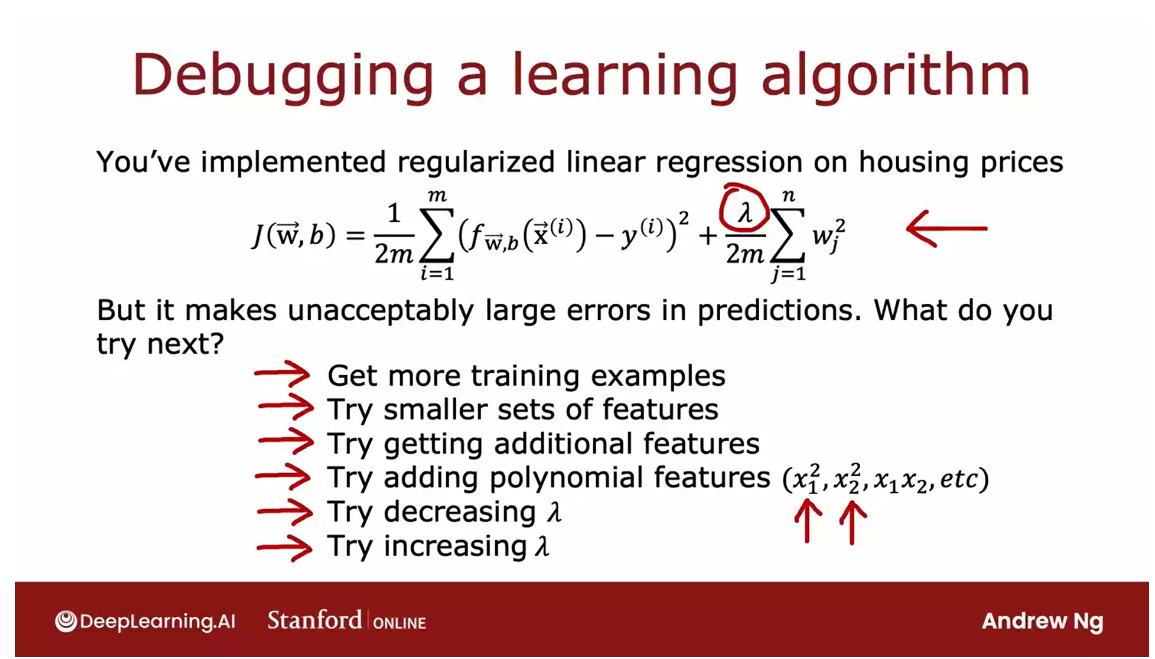

Debugging a learning algorithm

How do you use learning algorithms effectively?

Hi, and welcome back. By now you’ve seen a lot of different learning algorithms, including linear regression, logistic regression,

even deep learning, or neural networks, and next week, you’ll see

decision trees as well.You now have a lot of powerful tools of

machine learning, but how do you use these

tools effectively?

Depend to a large part on how well you can repeatedly make good decisions

I’ve seen teams sometimes, say six months to build a

machine learning system, that I think a more

skilled team could have taken or done in just

a couple of weeks.The efficiency of

how quickly you can get a machine learning

system to work well, will depend to a large part on how well you can

repeatedly make good decisions about

what to do next in the course of a

machine learning project.

In this week, I hope to share with you a

number of tips on how to make decisions

about what to do next in machine

learning project, that I hope will end up

saving you a lot of time.

Let’s take a look at some advice on how to build

machine learning systems.

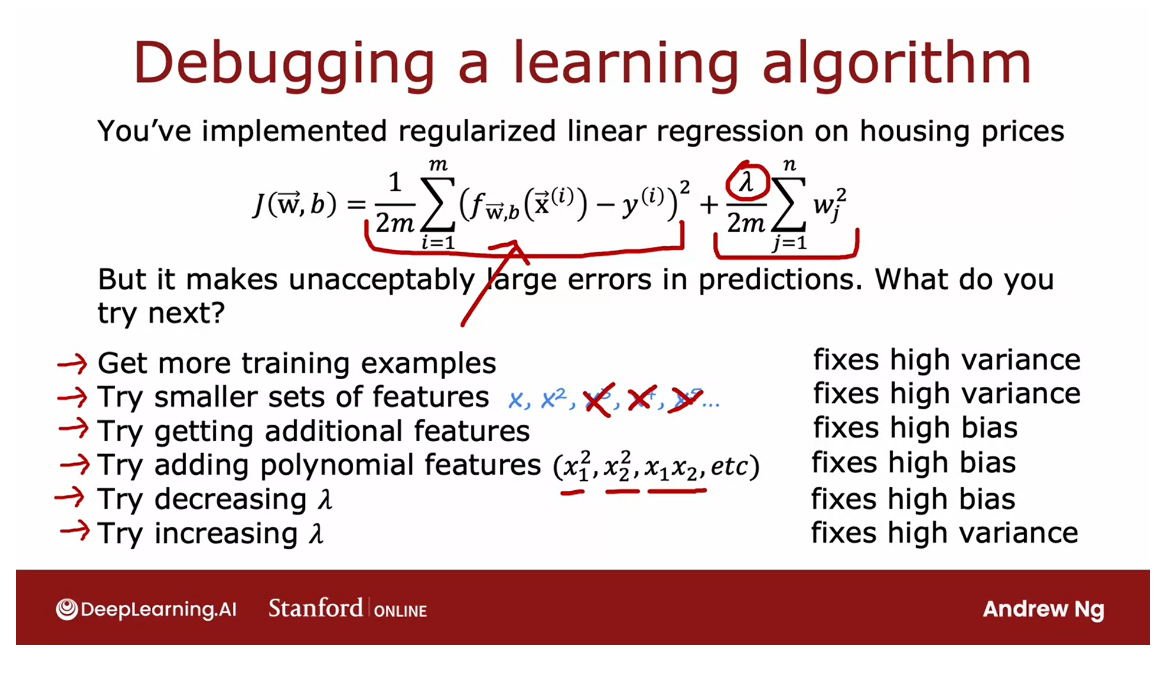

Let’s start with an example, say you’ve implemented

regularized linear regression to predict housing prices, so you have the

usual cost function for your learning algorithm, squared error plus this

regularization term.

Example: unacceptably large errors in prediction

But if you train the model, and find that it makes unacceptably large errors

in it’s predictions, what do you try next?

When you’re building a

machine learning algorithm, there are usually a lot of different things

you could try.

For example, you

could decide to get more training examples since it seems having more

data should help, or maybe you think maybe

you have too many features, so you could try a

smaller set of features.Or maybe you want to get

additional features, such as finally

additional properties of the houses to

toss into your data, and maybe that’ll help

you to do better.

Or you might take the

existing features x_1, x_2, and so on, and try adding polynomial

features x_1 squared, x_2 squared, x_1,

x_2, and so on.

Or you might wonder if the value of Lambda is chosen well, and you might say, maybe it’s too big, I want

to decrease it. Or you may say, maybe

it’s too small, I want to try increasing.On any given machine

learning application, it will often turn

out that some of these things could be fruitful, and some of these

things not fruitful.

Find a way to make good choices

The key to being effective

at how you build a machine learning algorithm

will be if you can find a way to make good choices about where

to invest your time.

For example, I have

seen teams spend literally many months collecting

more training examples, thinking that more training

data is going to help, but it turns out

sometimes it helps a lot, and sometimes it doesn’t.

Machine learning diagnostic

In this week, you’ll

learn about how to carry out a set of diagnostic.

Diagnostic: a test that you can run to gain insight into what is or isn’t working with learning algorithm to gain guidance into improving its performance.

By diagnostic, I mean

a test that you can run to gain insight into what is or isn’t working

with learning algorithm to gain guidance into improving its performance.

Some of these diagnostics

will tell you things like, is it worth weeks,

or even months collecting more training

data, because if it is, then you can then

go ahead and make the investment to get more data, which will hopefully lead

to improved performance, or if it isn’t then running that diagnostic could have

saved you months of time.

One thing you see

this week as well, is that diagnostics can

take time to implement, but running them can be a

very good use of your time.

This week we’ll spend

a lot of time talking about different

diagnostics you can use, to give you guidance on how to improve your learning

algorithm’s performance. But first, let’s take

a look at how to evaluate the performance of

your learning algorithm. Let’s go do that

in the next video.

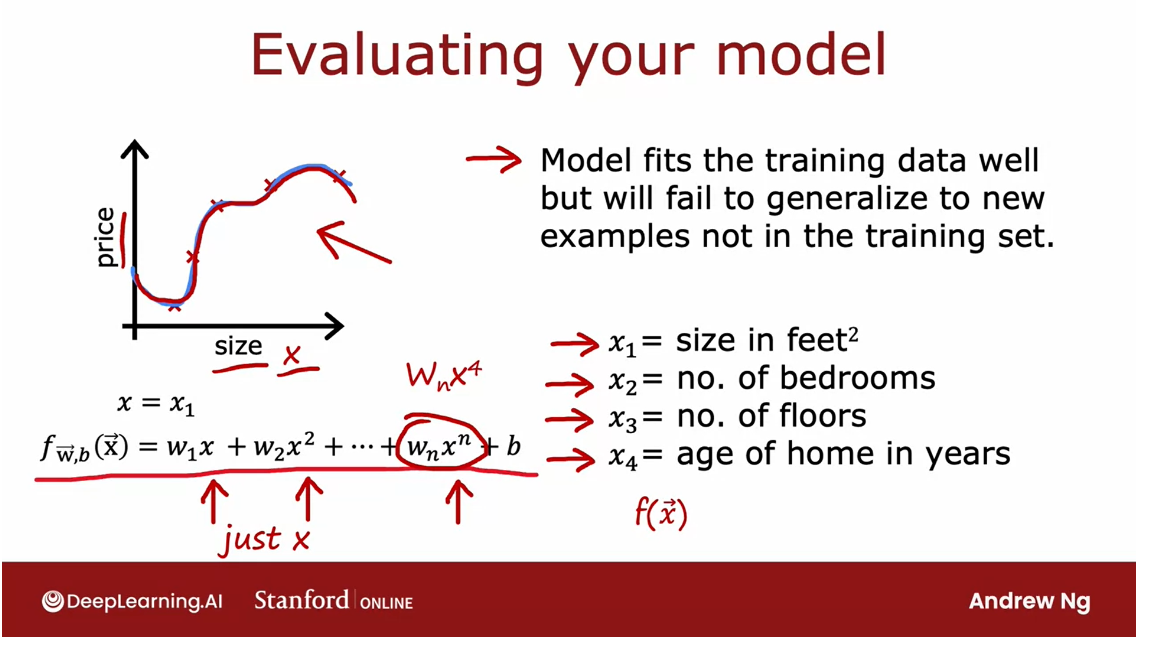

Evaluating a model

Let’s see,

you’ve trained a machine learning model. How do you evaluate that

model’s performance?

You find that having a systematic way to

evaluate performance will also hope paint a clearer path for

how to improve his performance. So let’s take a look at

how to evaluate the model.

Let’s take the example of learning

to predict housing prices as a function of the size. Let’s say you’ve trained the model

to predict housing prices as a function of the size x. And for the model that is

a fourth order polynomial.So features x, x squared,

execute and x to the 4. Because we fit 1/4 order polynomial to

a training set with five data points, this fits the training data really well.

But, we don’t like this model

very much because even though the model fits the training data well, we think it will fail to generalize to new

examples that aren’t in the training set.So, when you are predicting prices, just

a single feature at the size of the house, you could plot the model like this and we

could see that the curve is very weakly so we know this parody isn’t a good model.

But if you were fitting this

model with even more features, say we had x1 the size of house,

number of bedrooms, the number of floors of the house,

also the age of the home in years, then it becomes much harder to plot f because

f is now a function of x1 through x4.And how do you plot a four

dimensional function?

So in order to tell if your model is doing

well, especially for applications where you have more than one or two features,

which makes it difficult to plot f of x.We need some more systematic way to

evaluate how well your model is doing.

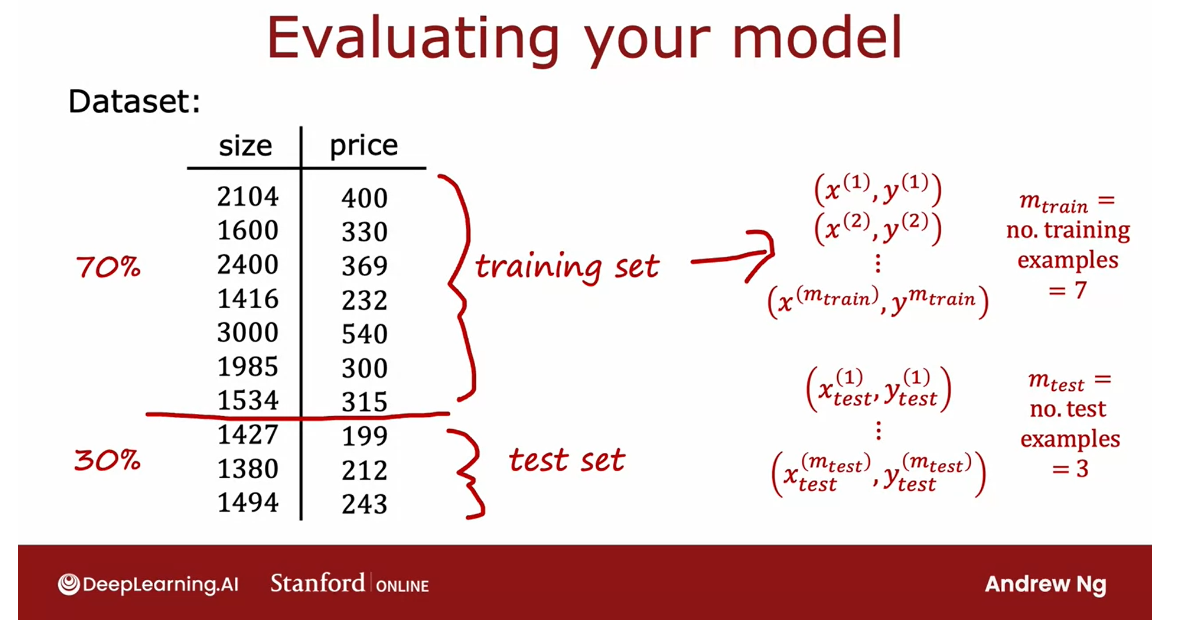

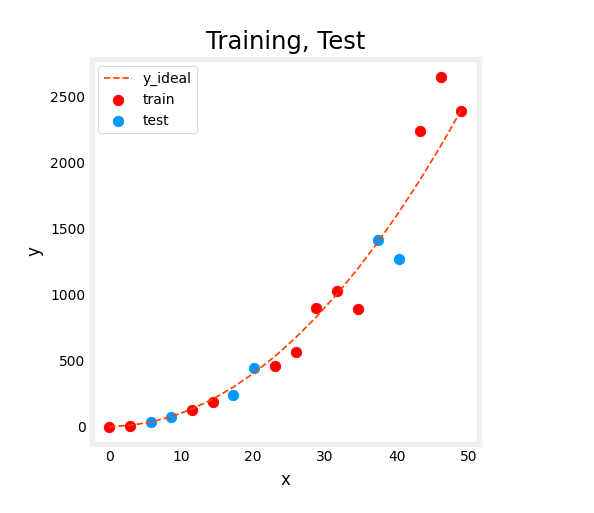

Here’s a technique that you can use. If you have a training set and this is

a small training set with just 10 examples listed here, rather than taking all

your data to train the parameters w and p of the model, you can instead split

the training set into two subsets.

I’m going to draw a line here, and

let’s put 70% of the data into the first part and

I’m going to call that the training set.And the second part of the data,

let’s say 30% of the data, I’m going to put into it has set.And what we’re going to

do is train the models, parameters on the training set on

this first 70% or so of the data, and then we’ll test his

performance on this test set.In notation, I’m going to use x1, why 1? Same as before, to denote

the training examples through xm, ym, except that now to make explicit.So in this little example we would

have seven training example.

And to introduce one

new piece of notation, I’m going to use m subscript train. M train is a number of training examples

which in this small dataset is 7. So the subscript train just

emphasizes if we’re looking at the training set portion of the data.

And for the test set, I’m going to use

the notation x1 subscript test comma y1, subscript test to denote

the first test example, and this goes all the way to

x m test subscript tests. Why m test?Subscript tests and m tests is the number

of test examples, which in this case is 3.

And it’s not uncommon to split your

dataset according to maybe a 70, 30 split or 80, 20 split with most of

your data going into the training set, and then a smaller fraction

going into the test set.

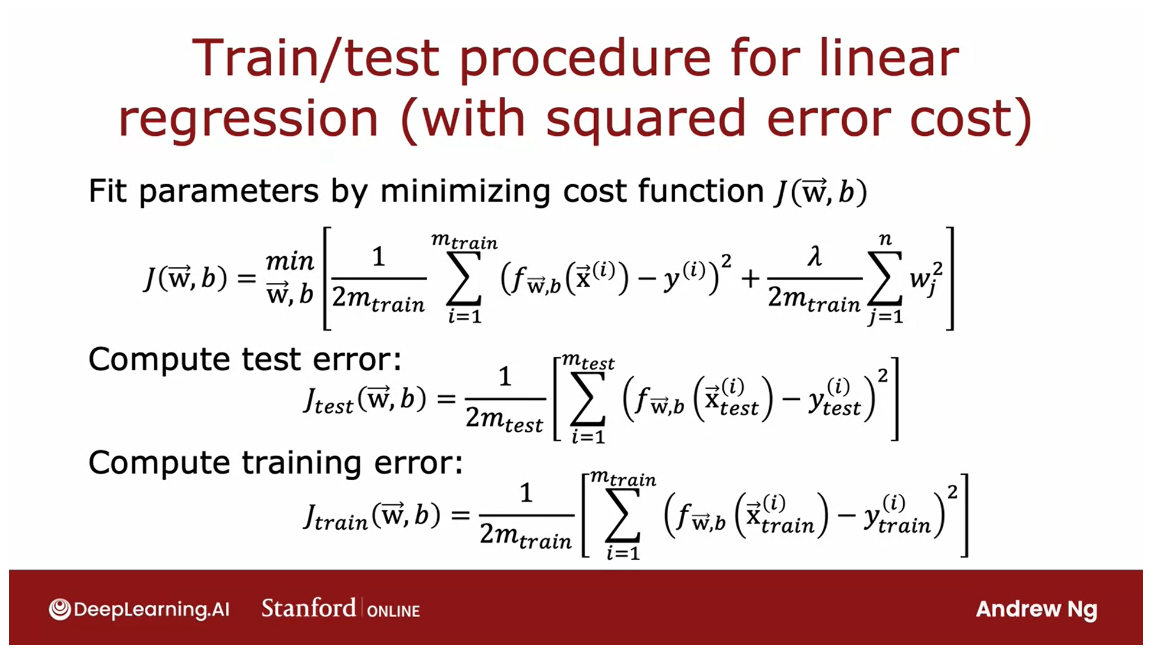

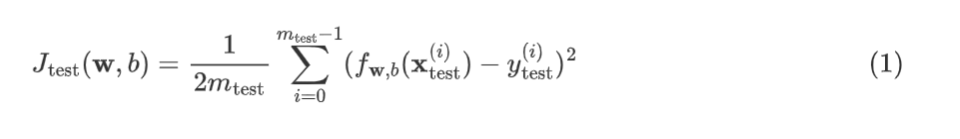

So, in order to train a model and

evaluated it, this is what it would look like if you’re using linear

regression with a squared error cost.

Start off by fitting the parameters by

minimizing the cost function j of w,b. So this is the usual cost

function minimize over w,b of this square error cost,

plus regularization term longer over 2m times

some of the w,j squared.And then to tell how well this model is

doing, you would compute J test of w,b, which is equal to the average

error on the test set, and that’s just equal to 1/2 times m test. That’s the number of test examples.

And then of some overall the examples

from r equals 1, to the number of test examples of the squared era

on each of the test examples like so.So it’s a prediction on the if

test example input minus the actual price of the house

on the test example squared. And notice that the test

error formula J test, it does not include that

regularization term.

And this will give you a sense of how

well your learning algorithm is doing.One of the quantity that’s often

useful to computer as well as the training error,

which is a measure of how well you’re learning algorithm is doing

on the training set.So let me define J train of w,b

to be equal to the average over the training set. 1 over to 2m, or 1/2 m subscript train of some over your training set

of this squared error term.

And once again, this does not include

the regularization term unlike the cost function that you are minimizing

to fit the parameters.

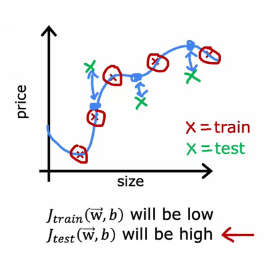

So, in the model like what we

saw earlier in this video, J train of w,b will be low

because the average era on your training examples will be zero or

very close to zero.So J train will be very close to zero. But if you have a few additional examples

in your test set that the algorithm had not trained on, then those test examples,

my love life these.And there’s a large gap between what

the algorithm is predicting as the estimated housing price, and

the actual value of those housing prices.

And so, J tests will be high. So seeing that J test is high on this

model, gives you a way to realize that even though it does great on the training

set, is actually not so good at generalizing to new examples to new data

points that were not in the training set.So, that was regression

with squared error cost.

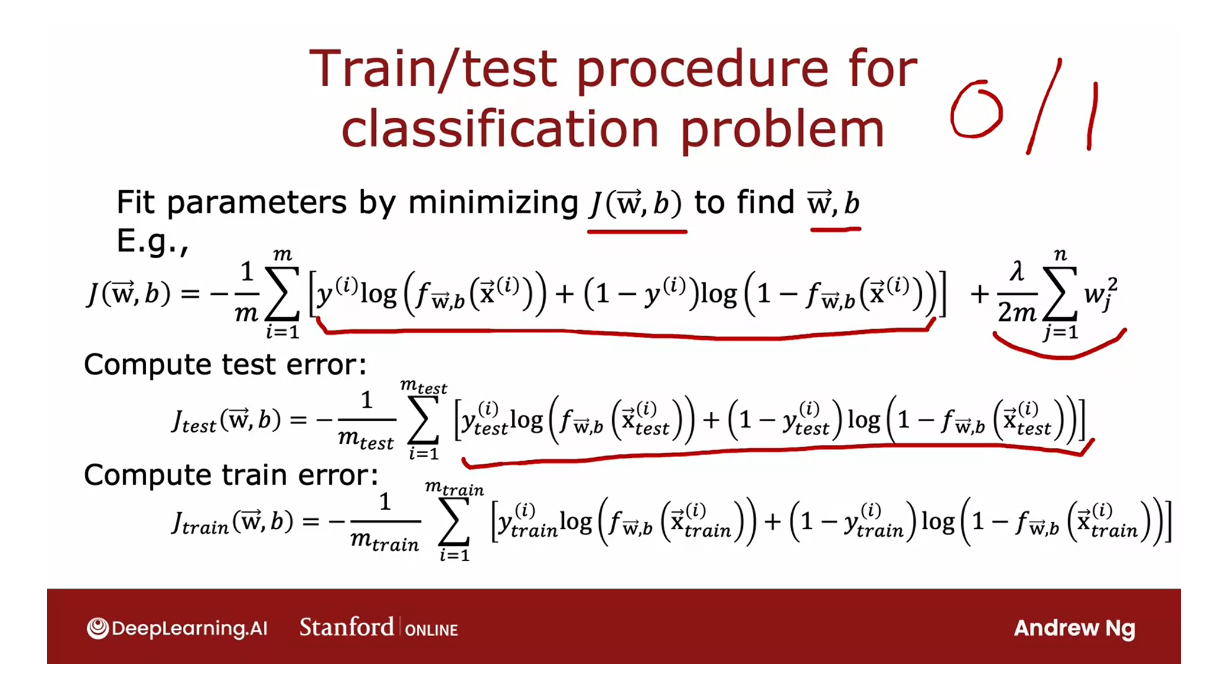

Procedure of classification problem

Now, let’s take a look at how you apply

this procedure to a classification problem.

For example, if you are classifying

between handwritten digits that are either 0 or

1, so same as before, you fit the parameters by minimizing the

cost function to find the parameters w,b.For example,

if you were training logistic regression, then this would be the cost function

J of w,b, where this is the usual logistic loss function, and

then plus also the regularization term.

And to compute the test error,

J test is then the average over your test examples,

that’s that 30% of your data that wasn’t in the training set of the logistic

loss on your test set.And the training error you can

also compute using this formula, is the average logistic loss

on your training data that the algorithm was using to minimize

the cost function J of w, b.

Well, when I described here will work,

okay, for figuring out if your learning algorithm is doing well, by seeing how

I was doing in terms of test error.When applying machine learning

to classification problems, there’s actually one other

definition of J tests and J train that is maybe

even more commonly used.

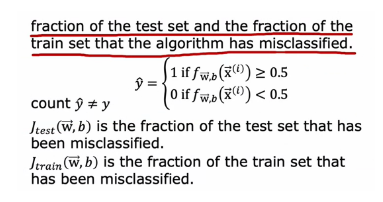

Which is instead of using the logistic

loss to compute the test error and the training error to instead measure

what the fraction of the test set, and the fraction of the training set

that the algorithm has misclassified.So specifically on the chess set,

you can have the algorithm make a prediction 1 or

0 on every test example.

So, recall why hat we would predict us

1 if f of x is greater than equal 4.5, and zero if it’s less than 0.5.And you can then count up in the test

set the fraction of examples where why hat is not equal to the actual

ground truth label while in the test set.So concretely, if you are classifying

handwritten digits 0, 1 by new classification toss, then J tests

would be the fraction of that test set, where 0 was classified as 1 of 1,

classified as 0. And similarly, J train is a fraction of the training

set that has been misclassified.

Taking a dataset and splitting it into a

training set and a separate test set gives you a way to systematically evaluate

how well your learning outcomes doing.By computing both J tests and J train, you can now measure how was doing on

the test set and on the training set.

This procedure is one step to what you’ll

be able to automatically choose what model to use for

a given machine learning application.For example, if you’re trying

to predict housing prices, should you fit a straight

line to your data, or fit a second order polynomial, or

third order fourth order polynomial?

It turns out that with one further

refinement to the idea you saw in this video, you’ll be able to have an algorithm

help you to automatically make that type of decision well. Let’s take a look at how to

do that in the next video.

Model selection and training/cross validation/test sets

Automatically choose a good model for the machine learning algorithm

In the last video,

you saw how to use the test set to evaluate

the performance of a model.Let’s make one

further refinement to that idea in this video, which allow you to

use the technique, to automatically

choose a good model for your machine

learning algorithm.

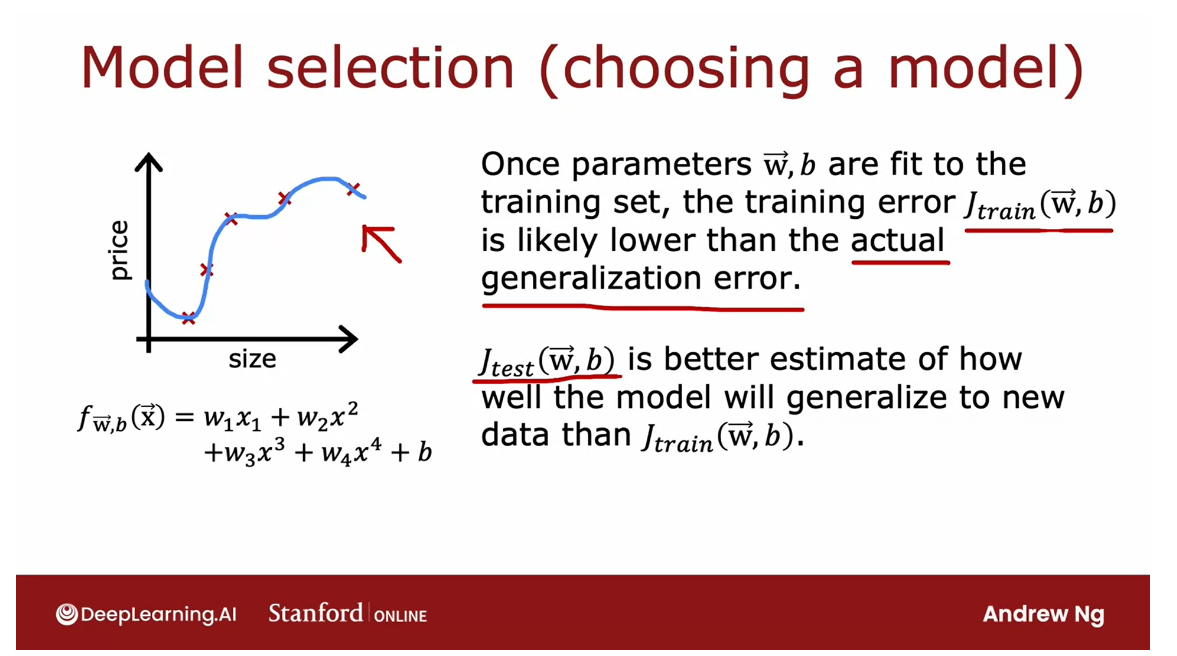

One thing we’ve

seen is that once the model’s parameters w and b have been fit to

the training set.The training error may not be a good indicator of how

well the algorithm will do or how well it

will generalize to new examples that were

not in the training set, and in particular,

for this example, the training error will

be pretty much zero.

That’s likely much lower than the actual generalization error, and by that I mean

the average error on new examples that were

not in the training set.What you saw on the

last video is that J test the performance of

the algorithm on examples, is not trained on, that will be a

better indicator of how well the model will

likely do on new data.

Let’s take a look at how to use a test set to choose a model for a given machine learning application

By that I mean other data

that’s not in the training set. Let’s take a look at

how this affects, how we might use a test set to choose a model for a given

machine learning application.

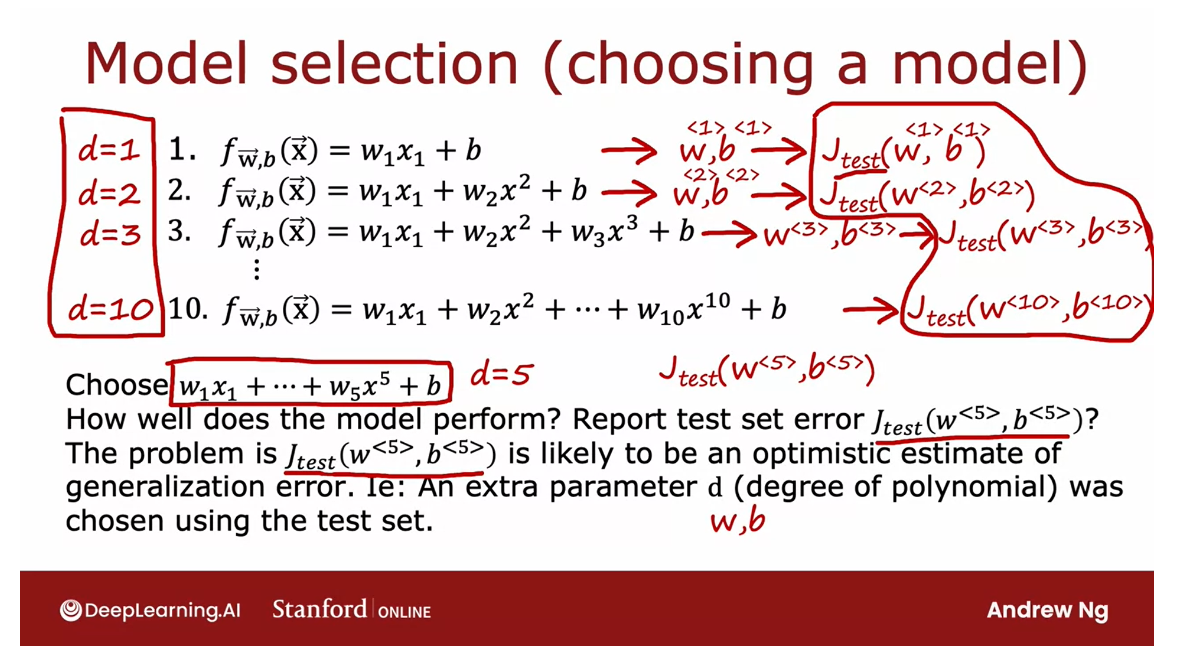

If a fitting a function to predict housing prices or some

other regression problem, one model you might consider is to fit a linear

model like this.This is a first-order

polynomial and we’re going to use d equals 1 on this slide to denote fitting a one or

first-order polynomial.If you were to fit a model like this to

your training set, you get some

parameters, w and b, and you can then compute J tests to estimate how well does this

generalize to new data?

On this slide, I’m

going to use w^1, b^1 to denote that these

are the parameters you get if you were to fit a

first order polynomial, a degree one, d

equals 1 polynomial.

Now, you might also consider fitting a second-order

polynomial or quadratic model, so this is the model. If you were to fit this

to your training set, you would get some

parameters, w^2, b^2, and you can then

similarly evaluate those parameters on your

test set and get J test w^2, b^2, and this will give

you a sense of how well the second-order

polynomial does.

You can go on to try d equals 3, that’s a third order or a degree three polynomial

that looks like this, and fit parameters and

similarly get J test.You might keep doing this until, say you try up to a 10th

order polynomial and you end up with J test

of w^10, b^10.That gives you a

sense of how well the 10th order

polynomial is doing.

One procedure you could try, this turns out not to

be the best procedure, but one thing you could try is, look at all of these J tests, and see which one gives

you the lowest value.

If J t e s t J_{test} Jtest is the lowest => it does best

Say, you find that, J test for the fifth

order polynomial for w^5, b^5 turns out to be the lowest. If that’s the case, then

you might decide that the fifth order polynomial

d equals 5 does best, and choose that model

for your application.

To report the test test error

If you want to estimate how

well this model performs, one thing you could do, but this turns out to be a

slightly flawed procedure, is to report the test set error, J test w^5, b^5.The reason this procedure

is flawed is J test of w^5, b^5 is likely to be an optimistic estimate of

the generalization error.

J t e s t J_{test} Jtest is likely to be an optimistic estimate of the generalization error

In other words, it

is likely to be lower than the actual

generalization error, and the reason is, in the procedure we talked

about on this slide with basic fits, one

extra parameter, which is d, the

degree of polynomial, and we chose this parameter

using the test set.

On the previous slide, we saw

that if you were to fit w, b to the training data, then the training data would be an overly optimistic estimate

of generalization error.It turns out too, that if

you want to choose the parameter d using the test set, then the test set J test is

now an overly optimistic, that is lower than

actual estimate of the generalization error.

Cross validation

validation set = development set = dev set

The procedure on this

particular slide is flawed and I don’t

recommend using this.

Instead, if you want to

automatically choose a model, such as decide what

degree polynomial to use.Here’s how you modify

the training and testing procedure in order to

carry out model selection.

Whereby model selection, I mean choosing amongst

different models, such as these 10 different

models that you might contemplate using for your

machine learning application.

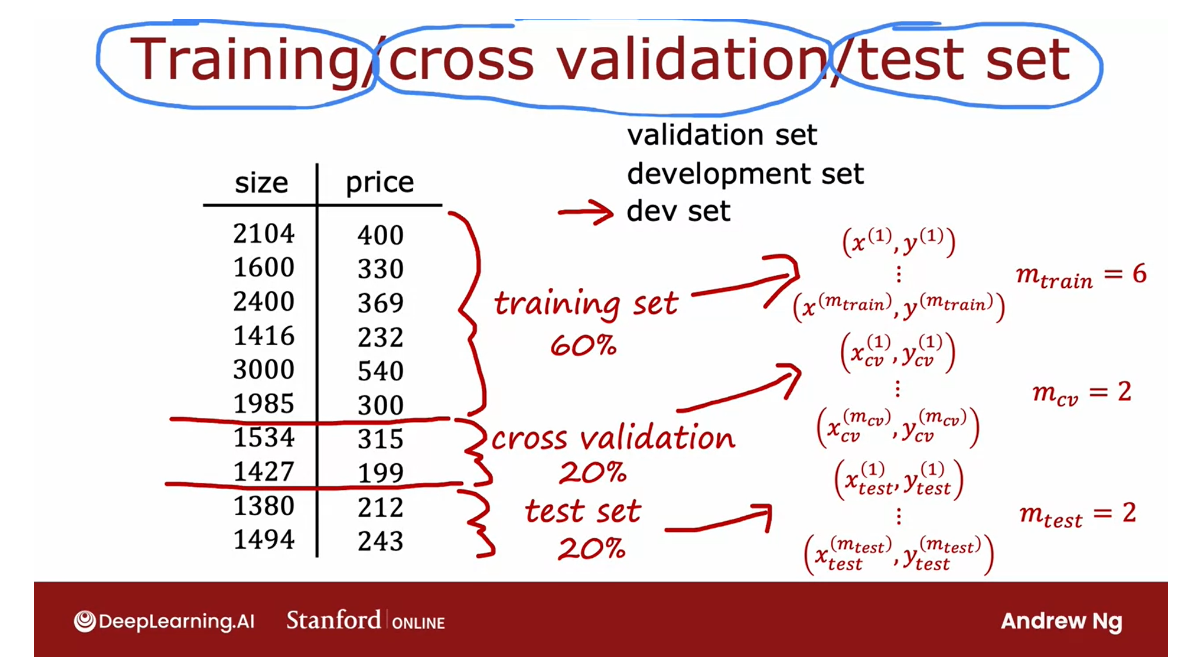

Splitting our data into three different subsets

The way we’ll modify the

procedure is instead of splitting your data

into just two subsets, the training set

and the test set, we’re going to split your data into three different subsets, which we’re going to

call the training set, the cross-validation set,

and then also the test set.

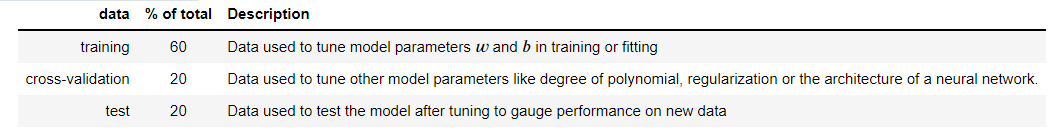

Using our example from before of these 10 training examples, we might split it into putting 60 percent

of the data into the training set and so the notation we’ll use for the training set portion

will be the same as before, except that now M train, the number of training

examples will be six and we might put 20

percent of the data into the cross-validation set and a notation I’m going

to use is x_cv of one, y_cv of one for the first

cross-validation example.

So cv stands for

cross-validation, all the way down to x_cv

of m_cv and y_cv of m_cv.Where here, m_cv equals

2 in this example, is the number of

cross-validation examples.Then finally we have the

test set same as before, so x1 through x m tests

and y1 through y m, where m tests equal to 2. This is the number

of test examples.

We’ll see you on the

next slide how to use the cross-validation set.The way we’ll modify

the procedure is you’ve already seen

the training set and the test set and we’re

going to introduce a new subset of the data called

the cross-validation set.

Cross-validation: an extra dataset used to check the validity or the accuracy of different models

The name cross-validation

refers to that this is an extra dataset

that we’re going to use to check or trust check the validity or really the

accuracy of different models.I don t think it’s a great name, but that is what people

in machine learning have gotten to call

this extra dataset.You may also hear people call this the validation

set for short, it’s just fewer syllables than cross-validation or

in some applications, people also call this

the development set.

Means basically the same

thing or for short.Sometimes you hear people

call this the dev set, but all of these terms mean the same thing as

cross-validation set.I personally use the term dev set the most often because

it’s the shortest, fastest way to say it but

cross-validation is pretty used a little bit more often by machine learning

practitioners.

Onto these three subsets

of the data training set, cross-validation

set, and test set, you can then compute the training error, the

cross-validation error, and the test error using

these three formulas.

None of these three terms include the regularization term

The regularization term is only included in the training objective.

Whereas usual, none of

these terms include the regularization term that is included in the

training objective, and this new term in the middle, the cross-validation error

is just the average over your m_cv

cross-validation examples of the average say,

squared error.

This term, in addition to being called

cross-validation error, is also commonly called the

validation error for short, or even the development set

error, or the dev error.

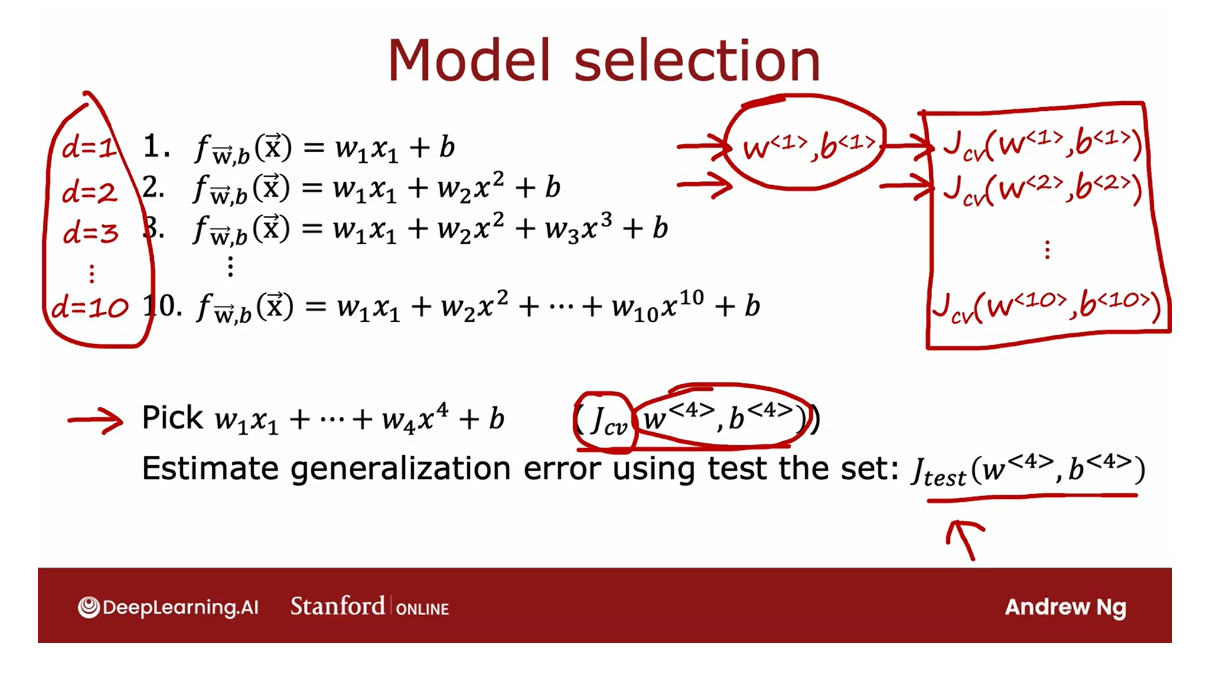

Armed with these three measures of learning algorithm

performance, this is how you can then go about carrying out

model selection.You can, with the 10 models, same as earlier on this slide, with d equals 1, d equals 2, all the way up to a 10th degree or the

10th order polynomial, you can then fit the

parameters w_1, b_1.

But instead of evaluating

this on your test set, you will instead evaluate

these parameters on your cross-validation sets

and compute J_cv of w1, b1, and similarly, for the second model, we get J_cv of w2, v2, and all the way down

to J_cv of w10, b10.

Then, in order to

choose a model, you will look at which model has the lowest

cross-validation error, and concretely, let’s

say that J_cv of w4, b4 as low as, then what that means is you pick this fourth-order polynomial as the model you will use

for this application.

Finally, if you want to

report out an estimate of the generalization error of how well this model will

do on new data.You will do so using that

third subset of your data, the test set and you

report out Jtest of w4,b4. You notice that throughout

this entire procedure, you had fit these parameters

using the training set.

You then chose the parameter

d or chose the degree of polynomial using the

cross-validation set and so up until this point, you’ve not fit any parameters, either w or b or d to the test set and that’s why

Jtest in this example will be fair estimate of the generalization error of this model thus

parameters w4,b4.

This gives a better procedure for model selection

and it lets you automatically make a

decision like what order polynomial to choose for your

linear regression model.

This model selection

procedure also works for choosing among

other types of models.

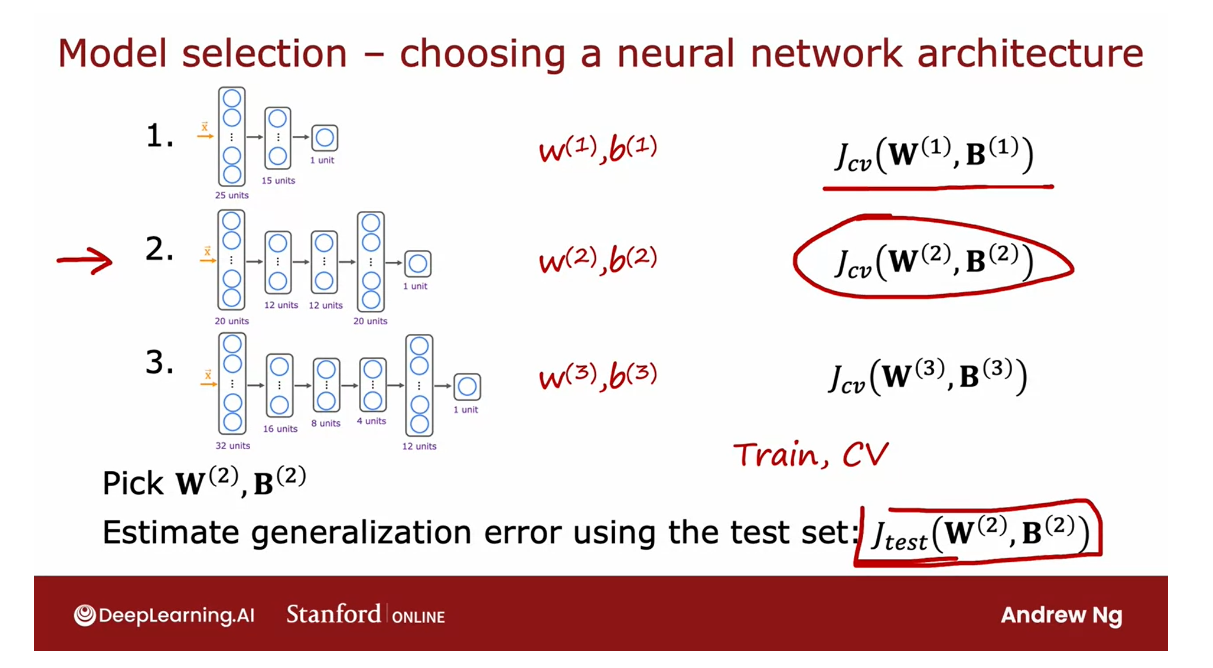

For example, choosing a

neural network architecture.If you are fitting a model for handwritten

digit recognition, you might consider

three models like this, maybe even a larger set of

models than just me but here are a few different

neural networks of small, somewhat larger, and

then even larger.

To help you decide

how many layers do the neural network have and how many hidden units per

layer should you have, you can then train all

three of these models and end up with parameters w1, b1 for the first model, w2, b2 for the second model, and w3,b3 for the third model.

You can then evaluate the neural networks

performance using Jcv, using your cross-validation set and where the

classification problem, Jcv can be the

percentage of examples.

Since this is a

classification problem, Jcv the most common choice

would be to compute this as the fraction of

cross-validation examples that the algorithm

has misclassified.

Pick a model? with the lowest cross validation error

Report out an estimate of the generalization error: use the test set to estimate

You would compute this using all three models and then pick the model with the lowest

cross validation error.If in this example, this has the lowest

cross validation error, you will then pick the second

neural network and use parameters trained on

this model and finally, if you want to report out an estimate of the

generalization error, you then use the test set to estimate how well

the neural network that you just chose will do.

Make decisions only looking at the training set and cross-validation set

After all the decisions, evaluate them on the test set.

In machine learning practice

is considered best practice to make all the decisions you want to make regarding

your learning algorithm, such as how to

choose parameters, what degree polynomial to use, but make decisions

only looking at the training set and

cross-validation set and to not use the test set at all

to make decisions about your model and only after you’ve made

all those decisions,then finally, tick

them all though you have designed and evaluated

on your test set.

That procedure ensures

that you haven’t accidentally fit anything

to the test set so that your test set becomes so unfair and not overly

optimistic estimate of generalization error

of your algorithm.

It’s considered best

practice in machine learning that if you have to make

decisions about your model, such as fitting parameters or choosing the model architecture, such as neural network

architecture or degree of polynomial if you’re fitting

a linear regression, to make all those

decisions only using your training set and your

cross-validation set, and to not look at the test

set at all while you’re still making decisions regarding

your learning algorithm.

It’s only after

you’ve come up with one model as your final

model to only then evaluate it on the test set and because you haven’t made any decisions using the test set, that ensures that

your test set is a fair and not overly

optimistic estimate of how well your model will

generalize to new data.

That’s model

selection and this is actually a very widely

used procedure. I use this all the

time to automatically choose what model to use for a given machine

learning application.

Earlier this week, I mentioned

running diagnostics to decide how to improve the performance of a

learning algorithm.Now that you have

a way to evaluate learning algorithms and even automatically choose a model, let’s dive more deeply into

examples of some diagnostics.The most powerful diagnostic that I know of and that

I used for a lot of machine learning

applications is one called bias and variance. Let’s take a look at what

that means in the next video.

[2] Practice quiz: Advice for applying machine learning

Practice quiz: Advice for applying machine learning

Latest Submission Grade 100%

Actually, if a model overfits the training set, it may not generalize well to new data.

Question 3

For a classification task; suppose you train three different models using three different neural network architectures. Which data do you use to evaluate the three models in order to choose the best one?

Incorrect. You’ll only use the test set after choosing the best model based on the cross validation set. You want to avoid using the test set while you are still selecting model options, because the test set is meant to serve as an estimate for how the model will generalize to new examples that it has never seen before.

Correct. Use the cross validation set to calculate the cross validation error on all three models in order to compare which of the three models is best.

[3] Bias and variance

Diagnosing bias and variance

The typical workflow

of developing a machine learning

system is that you have an idea and

you train the model, and you almost

always find that it doesn’t work as well

as you wish yet.When I’m training a

machine learning model, it pretty much never works

that well the first time.

Bias and variance of a learning algorithm gives you very good guidance on what to try next

Key to the process of building machine learning

system is how to decide what to do next in order to improve

his performance.I’ve found across many

different applications that looking at the bias and variance of a learning

algorithm gives you very good guidance

on what to try next.

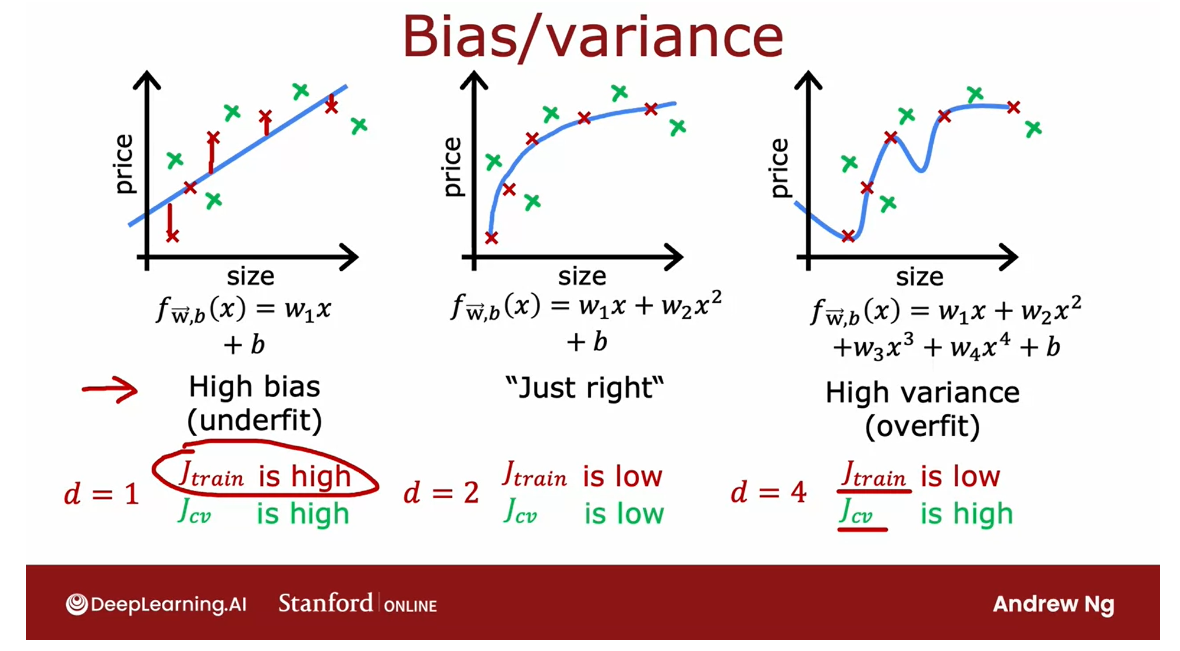

Let’s take a look

at what this means.You might remember

this example from the first course on

linear regression.

Where given this dataset, if you were to fit a

straight line to it, it doesn’t do that well. We said that this algorithm has high bias or that it

underfits this dataset.

If you were to fit a

fourth-order polynomial, then it has high-variance

or it overfits.

In the middle if you fit

a quadratic polynomial, then it looks pretty good. Then I said that was just right. Because this is a problem

with just a single feature x, we could plot the function

f and look at it like this.

If more features, we can’t plot and visualize whether it’s doing well as easily.

Diagnose: look at bias and variance on the training set and on the cross validation set

But if you had more features, you can’t plot f and visualize whether it’s

doing well as easily.Instead of trying to

look at plots like this, a more systematic way to diagnose or to find out if your algorithm

has high bias or high variance will be to

look at the performance of your algorithm on

the training set and on the cross validation set.

In particular, let’s look

at the example on the left.

If you were to compute J_train, how well does the algorithm

do on the training set? Not that well.I’d say J train here would be high

because there are actually pretty large errors between the examples and the actual

predictions of the model.

How about J_cv? J_cv would be if we had

a few new examples, maybe examples like that, that the algorithm had

not previously seen.Here the algorithm

also doesn’t do that well on examples that it

had not previously seen, so J_cv will also be high.

Underfit: high bias

It’s not even doing well on the training set

One characteristic of an

algorithm with high bias, something that is under fitting, is that it’s not even doing that well on

the training set.When J_train is high, that is your strong indicator that this algorithm

has high bias.

Let’s now look at the

example on the right. If you were to compute J_train, how well is this doing

on the training set?Well, it’s actually doing

great on the training set. Fits the training

data really well. J_train here will be low.But if you were to evaluate this model on other houses

not in the training set, then you find that J_cv, the cross-validation

error, will be quite high.

Overfit: high variance

It does much better on data it has seen than on data it has not seen.

A characteristic signature or a characteristic Q that

your algorithm has high variance will be of J_cv is much higher

than J_train.In other words, it does

much better on data it has seen than on data

it has not seen.This turns out to be a strong indicator that your

algorithm has high variance.

Again, the point of

what we’re doing is that I’m computing J_train and J_cv and seeing if J _train is high or if J_cv is

much higher than J_train.This gives you a sense, even if you can’t

plot to function f, of whether your algorithm has

high bias or high variance.

Just right: doesn’t have a high bias problem or high variance problem.

Finally, the chase

in the middle. If you look at J_train, it’s pretty low,

so this is doing quite well on the training set.If you were to look at

a few new examples, like those from, say, your cross-validation set, you find that J_cv is

also a pretty low.J_train not being too high indicates this doesn’t have

a high bias problem and J_cv not being much worse than J_train this indicates that it doesn’t have a high

variance problem either.

Which is why the quadratic model seems to be a pretty good

one for this application.

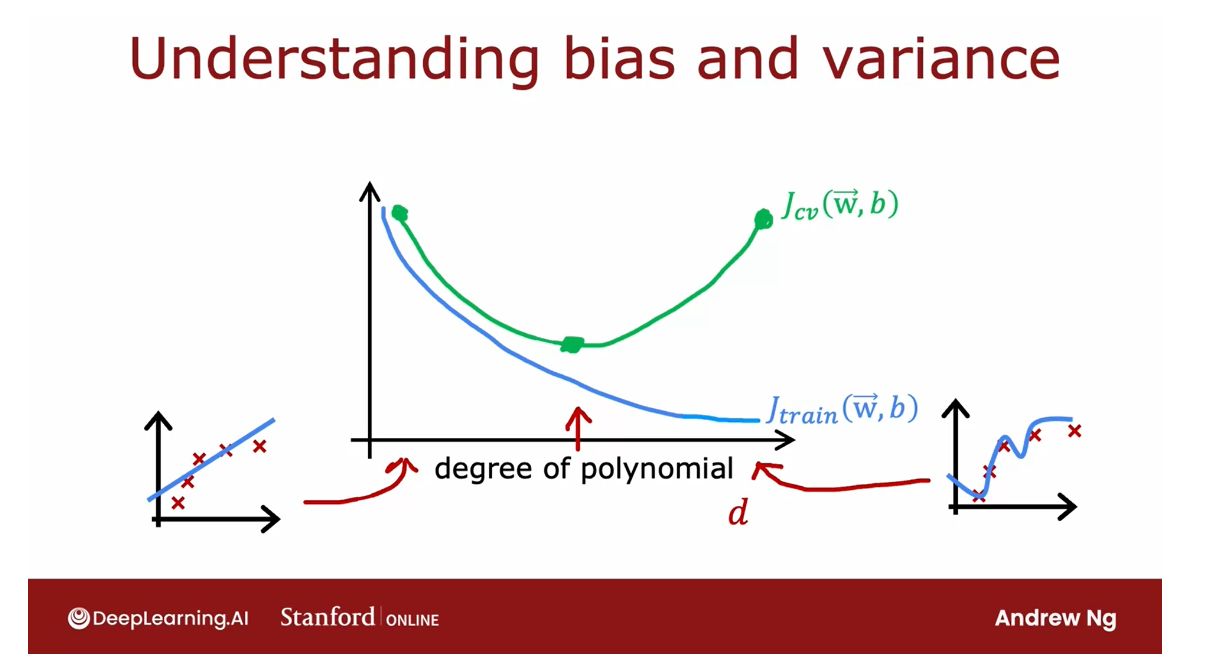

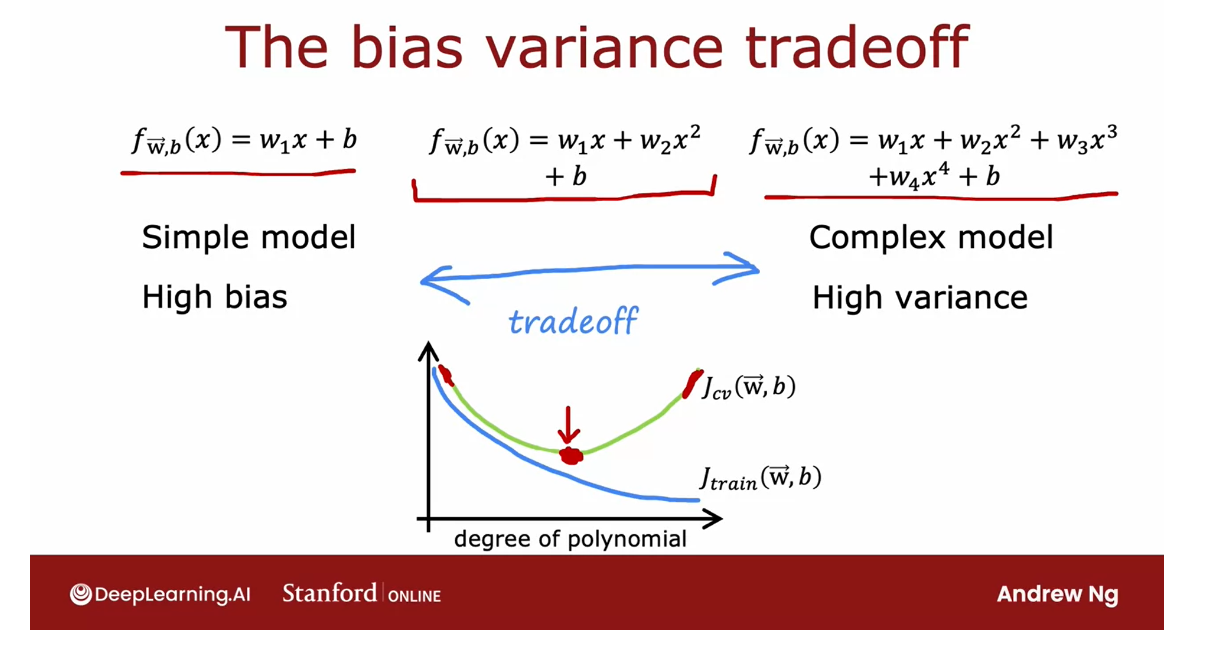

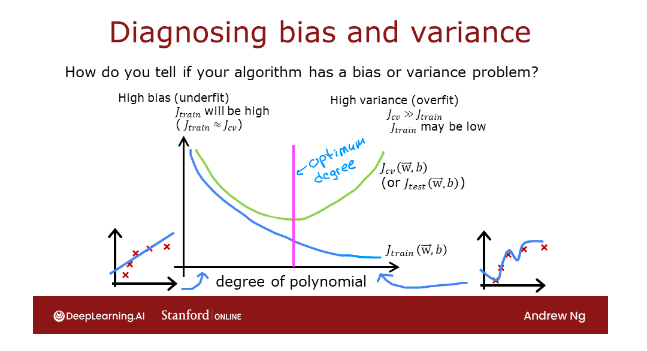

Let me share with you another

view of bias and variance.To summarize, when d equals

1 for a linear polynomial, J_train was high

and J_cv was high. When d equals 4, J train was low, but J_cv is high.When d equals 2, both were pretty low. Let’s now take a different

view on bias and variance. In particular, on

the next slide I’d like to show you how J_train and J_cv variance as a function of the degree of the

polynomial you’re fitting.

Plot a function of the degree of polynomial

Let me draw a figure where the horizontal

axis, this d here, will be the degree of polynomial that we’re fitting to the data.Over on the left we’ll correspond

to a small value of d, like d equals 1, which corresponds to

fitting straight line.Over to the right we’ll

correspond to, say, d equals 4 or even

higher values of d. We’re fitting this

high order polynomial.

So if you were to

plot J train or W, B as a function of the

degree of polynomial, what you find is that as you fit a higher and higher

degree polynomial, here I’m assuming we’re

not using regularization, but as you fit a higher and

higher order polynomial, the training error

will tend to go down because when you have a very

simple linear function, it doesn’t fit the

training data that well, when you fit a

quadratic function or third order polynomial or

fourth-order polynomial, it fits the training

data better and better.

As the degree of

polynomial increases, J train will typically go down.

Next, let’s look at J_cv, which is how well does it do on data that it did

not get to fit to?What we saw was

when d equals one, when the degree of

polynomial was very low, J_cv was pretty high

because it underfits, so it didn’t do well on

the cross validation set.

Here on the right as well, when the degree of polynomial

is very large, say four, it doesn’t do well on the

cross-validation set either, and so it’s also high.

Vary the degree of polynomial, get a curve

But if d was in-between say, a second-order polynomial, then it actually

did much better. If you were to vary the

degree of polynomial, you’d actually get a curve

that looks like this, which comes down and

then goes back up.

Where if the degree of

polynomial is too low, it underfits and so doesn’t

do the cross validation set, if it is too high, it overfits and also doesn’t do well on the cross

validation set.

Is only if it’s

somewhere in the middle, that is just right, which is why the

second-order polynomial in our example ends up with a lower cross-validation

error and neither high bias

nor high-variance.

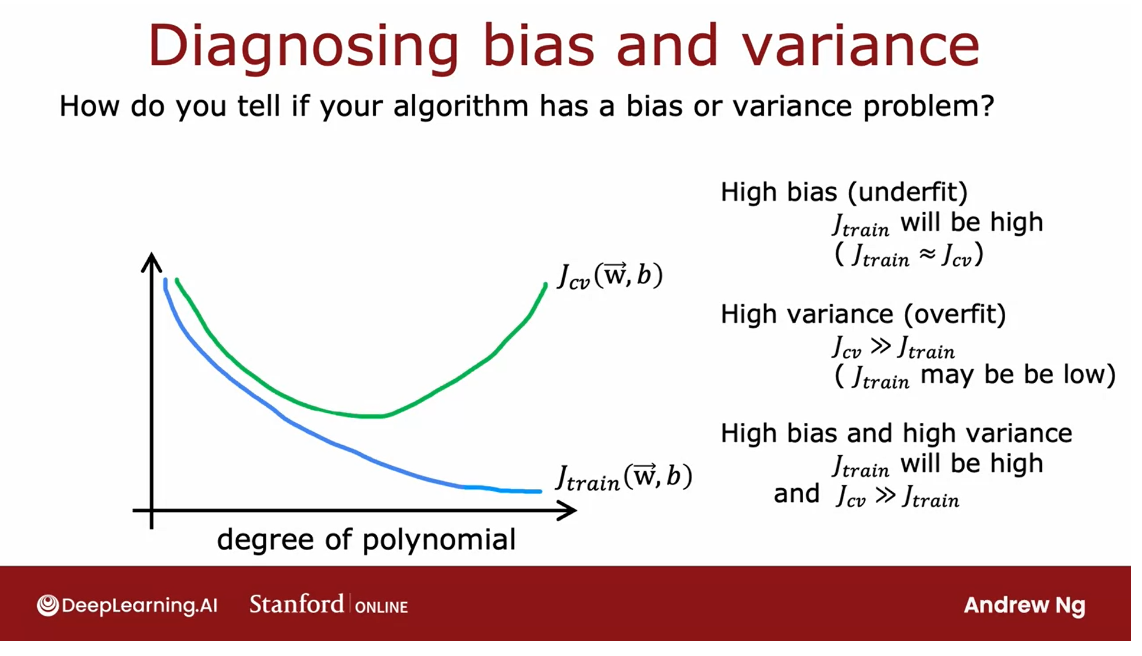

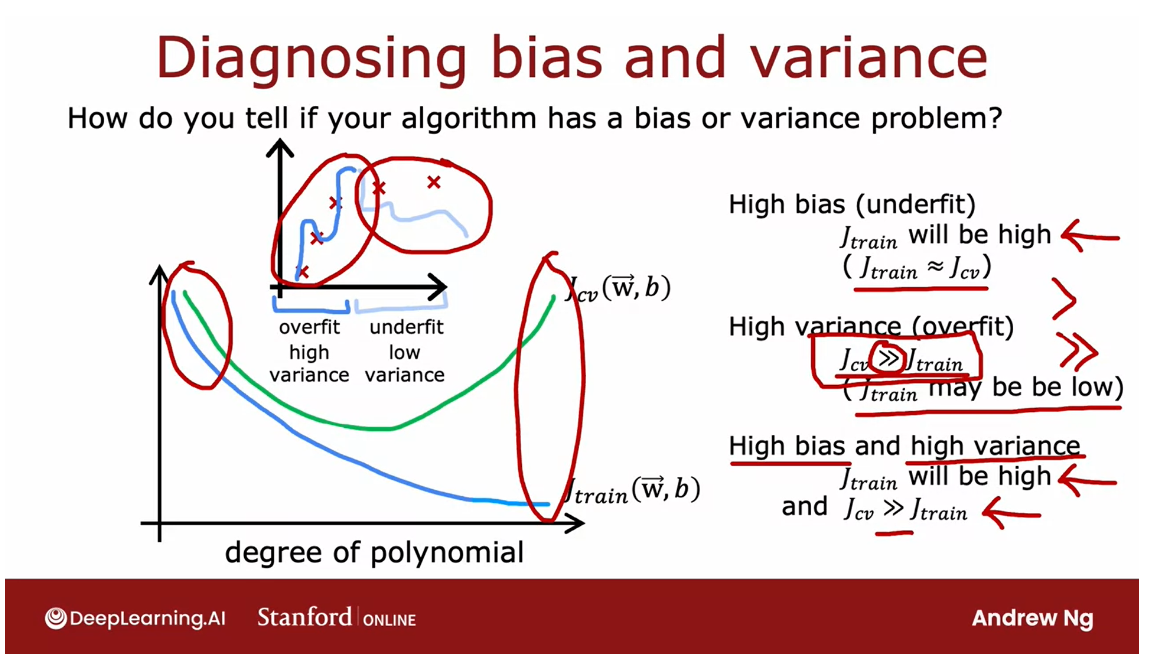

How to diagnose bias and variance in the learning algorithm?

To summarize, how

do you diagnose bias and variance in

your learning algorithm?

If your learning algorithm has high bias or it has

undefeated data, the key indicator will

be if J train is high.That corresponds to this

leftmost portion of the curve, which is where J train as high.Usually you have J train and J_cv will be close

to each other.

How do you diagnose if

you have high variance?While the key indicator

for high-variance will be if J_cv is much greater than J train does double greater than sign in math

refers to a much greater than, so this is greater, and this means much greater.This rightmost portion

of the plot is where J_cv is much greater

than J train.

Usually J train

will be pretty low, but the key indicator is whether J_cv is much greater

than J train. That’s what happens when we had fit a very high order polynomial

to this small dataset.

High bias and high variance

Even though we’ve just

seen bias in the areas, it turns out, in some cases, is possible to simultaneously have high bias and

have high-variance.

You won’t see this happen that much for linear regression, but it turns out that if you’re training

a neural network, there are some

applications where unfortunately you have high

bias and high variance.

One way to recognize

that situation will be if J train is high, so you’re not doing that well on the training set,

but even worse, the cross-validation

error is again, even much larger than

the training set.

The notion of high bias

and high variance, it doesn’t really happen for linear models

applied to one deep.

Intuition about both high bias and high variance.

But to give intuition

about what it looks like, it would be as if for

part of the input, you had a very complicated

model that overfit, so it overfits to

part of the inputs.

But then for some reason, for other parts of the input, it doesn’t even fit the

training data well, and so it underfits

for part of the input.

In this example, which

looks artificial because it’s a single

feature input, we fit the training

set really well and we overfit in

part of the input, and we don’t even fit

the training data well, and we underfit the

part of the input.That’s how in some

applications you can unfortunate end up with both

high bias and high variance.

The indicator for

that will be if the algorithm does poorly

on the training set, and it even does much worse

than on the training set.

For most learning applications, you probably have

primarily a high bias or high variance problem rather than both

at the same time.But it is possible sometimes they’re both

at the same time.

The key takeaways

I know that there’s

a lot of process, there are a lot of

concepts on the slides, but the key takeaways are, high bias means is not even doing well on

the training set, and high variance means, it does much worse on the cross validation set

and the training set.

Whenever I’m training a

machine learning algorithm, I will almost always

try to figure out to what extent

the algorithm has a high bias or underfitting versus a high-variance

when overfitting problem.

This will give good guidance, as we’ll see later this week, on how you can improve the

performance of the algorithm.

But first, let’s take a look at how regularization

effects the bias and variance of a learning algorithm

because that will help you better understand when you

should use regularization. Let’s take a look at

that in the next video.

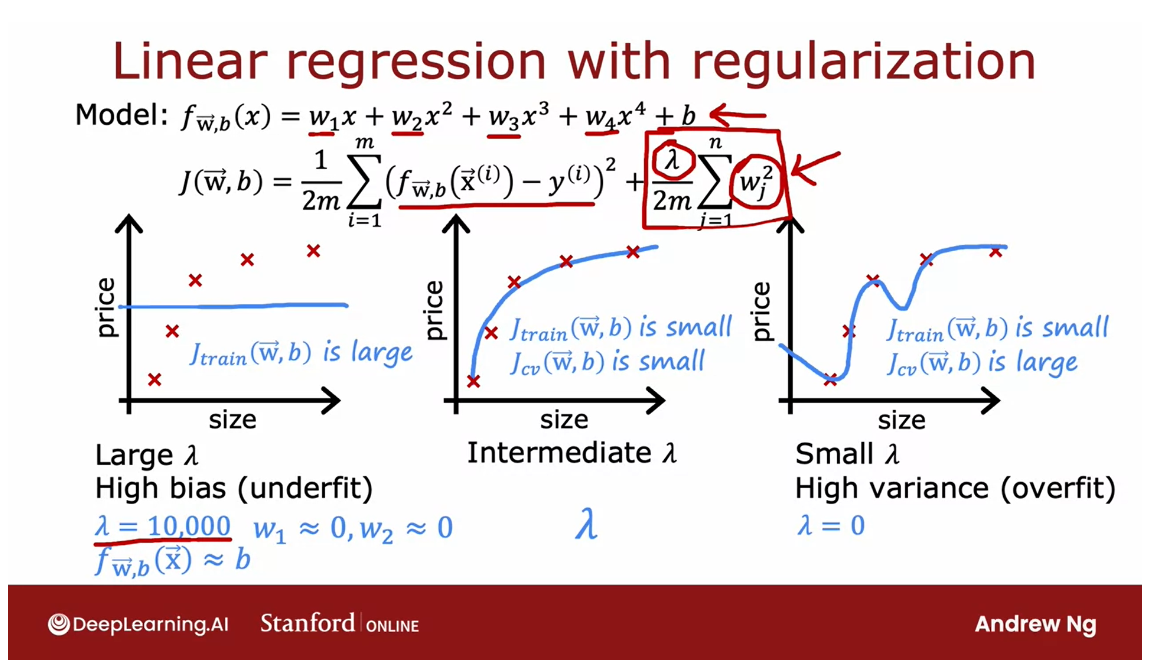

Regularization and bias/variance

You saw in the last video how different choices

of the degree of polynomial D affects the bias and variance of your

learning algorithm and therefore its

overall performance.

In this video, let’s take a

look at how regularization, specifically the choice of the regularization

parameter Lambda affects the bias

and variance and therefore the overall

performance of the algorithm.

This, it turns out, will be helpful

for when you want to choose a good value of Lambda of the

regularization parameter for your algorithm.

Let’s take a look.

In this example, I’m going to use a fourth-order polynomial, but we’re going to fit this

model using regularization.Where here the value of Lambda is the regularization

parameter that controls how much you trade-off

keeping the parameters w small versus fitting

the training data well.

Let’s start with the

example of setting Lambda to be a very large value.Say Lambda is equal to 10,000. If you were to do so, you would end up fitting a model that looks

roughly like this.Because if Lambda

were very large, then the algorithm is

highly motivated to keep these parameters w very small and so you

end up with w_1, w_2, really all of these parameters will

be very close to zero.

The model ends up

being f of x is just approximately

b a constant value, which is why you end up

with a model like this.This model clearly has

high bias and it underfits the training data because

it doesn’t even do well on the training set and

J_train is large.

Let’s take a look at

the other extreme. Let’s say you set Lambda

to be a very small value.

With a small value of Lambda, in fact, let’s go to extreme of setting Lambda equals zero.

With that choice of Lambda, there is no regularization, so we’re just fitting a

fourth-order polynomial with no regularization

and you end up with that curve that you saw previously that

overfits the data.What we saw previously was when you have

a model like this, J_train is small, but J_cv is much larger than

J_train or J_cv is large.This indicates we

have high variance and it overfits this data.

It would be if you have some intermediate

value of Lambda, not really largely 10,000, but not so small as zero that hopefully you get a model

that looks like this, that is just right and

fits the data well with small J_train

and small J_cv.

If you are trying

to decide what is a good value of Lambda to use for the

regularization parameter, cross-validation gives you

a way to do so as well.

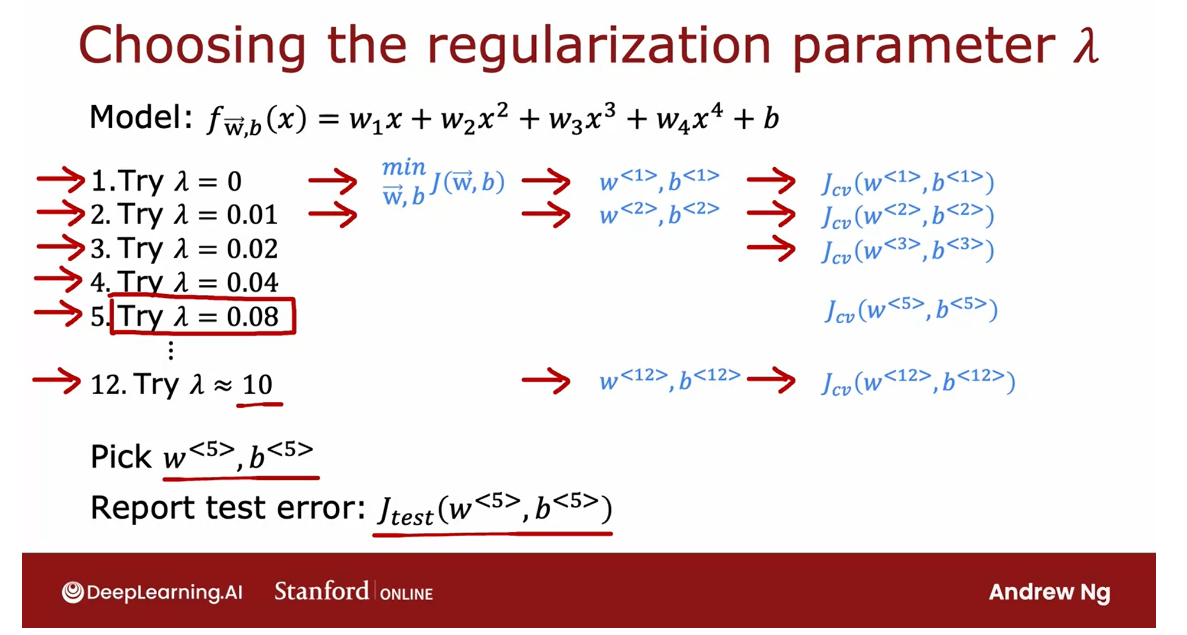

Using regularization, how can you choose a good value of lambda?

Let’s take a look at

how we could do so.Just as a reminder, the problem we’re addressing is if you’re fitting a

fourth-order polynomial, so that’s the model and

you’re using regularization, how can you choose a

good value of Lambda?This would be procedures similar

to what you had seen for choosing the degree of polynomial D using

cross-validation.

Specifically, let’s

say we try to fit a model using

Lambda equals 0.We would minimize

the cost function using Lambda equals 0 and end

up with some parameters w1, b1 and you can then compute

the cross-validation error, J_cv of w1, b1.

Now let’s try a different

value of Lambda. Let’s say you try

Lambda equals 0.01.Then again, minimizing

the cost function gives you a second set

of parameters, w2, b2 and you can also see how well that does on the

cross-validation set, and so on.

Let’s keep trying

other values of Lambda and in this example, I’m going to try doubling

it to Lambda equals 0.02 and so that will

give you J_cv of w3, b3, and so on. Then let’s double again

and double again.

After doubling a

number of times, you end up with Lambda

approximately equal to 10, and that will give

you parameters w12, b12, and J_cv w12 of b12.

By trying out a large range of possible values for Lambda, fitting parameters using those different

regularization parameters, and then evaluating

the performance on the cross-validation set, you can then try to pick what is the best value for the

regularization parameter.

Quickly. If in this example, you find that J_cv of W5, B5 has the lowest value of all of these different

cross-validation errors, you might then decide to

pick this value for Lambda, and so use W5, B5 as to chosen parameters.

Finally, if you want to report out an estimate of the

generalization error, you would then report

out the test set error, J tests of W5, B5.

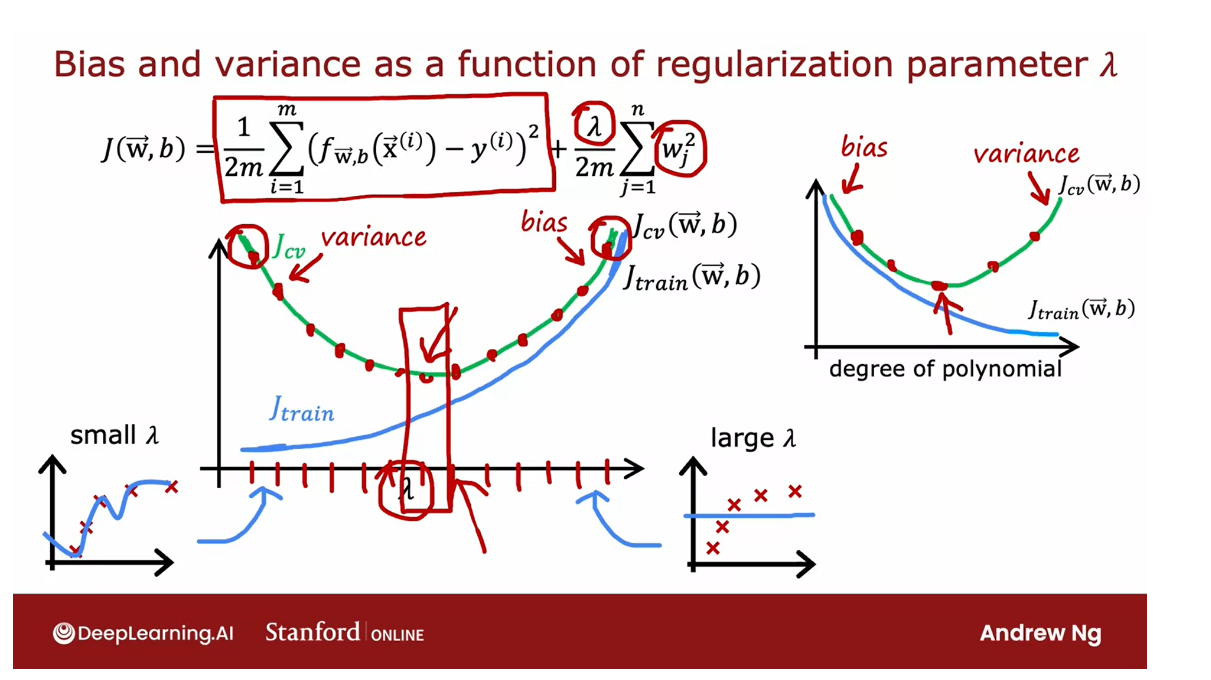

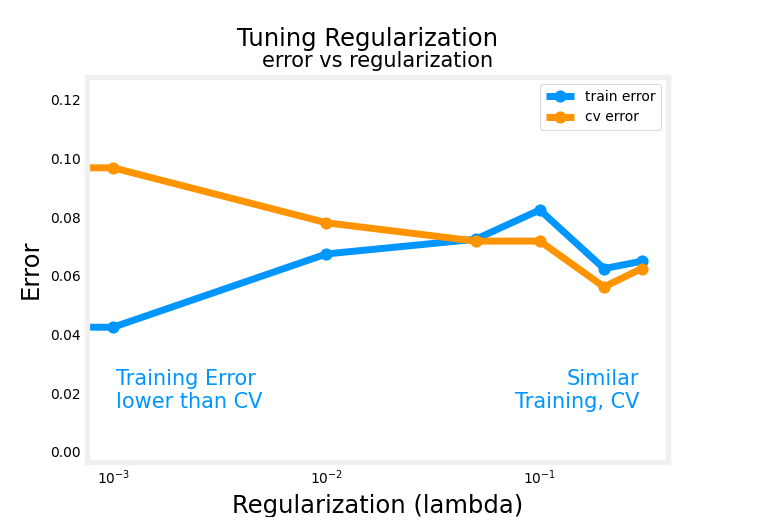

How training error and cross validation error vary as a function of the parameter lambda

To further hone intuition about what this

algorithm is doing, let’s take a look at how training error and

cross validation error vary as a function of

the parameter Lambda.

In this figure, I’ve

changed the x-axis again.Notice that the x-axis here is annotated with the value of the regularization

parameter Lambda, and if we look at the extreme of Lambda equals zero

here on the left, that corresponds to not

using any regularization, and so that’s where we wound up with this very wiggly curve.

If Lambda was small

or it was even zero, and in that case, we have a high variance model, and so J train is going to be small and J_cv is going to be large because it does great on the training data but does much worse on the cross

validation data.

Large lambda: High bias, underfit the data

as Lambda increases, the training error J train will tend to increase

This extreme on the right were very large

values of Lambda. Say Lambda equals 10,000 ends up with fitting a model

that looks like that.This has high bias, it underfits the data, and it turns out J

train will be high and J_cv will be high as well.In fact, if you

were to look at how J train varies as a

function of Lambda, you find that J train

will go up like this because in the

optimization cost function, the larger Lambda is, the more the algorithm is

trying to keep W squared small.That is, the more weight is given to this

regularization term, and thus the less

attention is paid to actually do well

on the training set.

This term on the

left is J train, so the most trying to keep

the parameters small, the less good a job it does on minimizing

the training error.That’s why as Lambda increases, the training error J train

will tend to increase like so.

Now, how about the

cross-validation error?Turns out the cross-validation

error will look like this. Because we’ve seen that if Lambda is too small

or too large, then it doesn’t do well on

the cross-validation set.It either overfits here on the left or underfits

here on the right.

There’ll be some intermediate

value of Lambda that causes the algorithm

to perform best.

What cross-validation

is doing is, it’s trying out a lot of

different values of Lambda.This is what we saw

on the last slide; trial Lambda equals zero, Lambda equals 0.01,

logic is 0,02.

Try different values of lambda and evaluate the cross validation errors

Try a lot of different

values of Lambda and evaluate the

cross-validation error in a lot of these

different points, and then hopefully pick a value that has low cross

validation error, and this will hopefully correspond to a good model

for your application.

If you compare this diagram to the one that we had in

the previous video, where the horizontal axis was

the degree of polynomial, these two diagrams look a little bit not mathematically and

not in any formal way, but they look a little bit like mirror images

of each other, and that’s because when you’re fitting a

degree of polynomial, the left part of this

curve corresponded to overfitting in high bias, the right part corresponded to underfitting in high variance.

Whereas in this one, high-variance was

on the left and high bias was on the right.But that’s why these two

images are a little bit like mirror images

of each other.

You can use cross-validation to make a good choice for the regularization parameter Lambda.

But in both cases,

cross-validation, evaluating different

values can help you choose a good value of degree

or a good value of Lambda.That’s how the choice of regularization parameter

Lambda affects the bias and variance and overall performance

of your algorithm, and you’ve also seen how you

can use cross-validation to make a good choice for the regularization

parameter Lambda.

Now, so far, we’ve talked about how having

a high training set error, high J train is indicative of high bias and how having a high cross-validation

error of J_cv, specifically if it’s much

higher than J train, how that’s indicative

of variance problem.

But what does these

words “high” or “much higher” actually mean?Let’s take a look at that in the next video where we’ll

look at how you can look at the numbers J train and J_cv and judge if

it’s high or low, and it turns out that one further refinement

of these ideas, that is, establishing a baseline level of performance we’re learning algorithm will make it much easier for you to look

at these numbers, J train, J_cv, and judge if they

are high or low. Let’s take a look at what all this means in the next video.

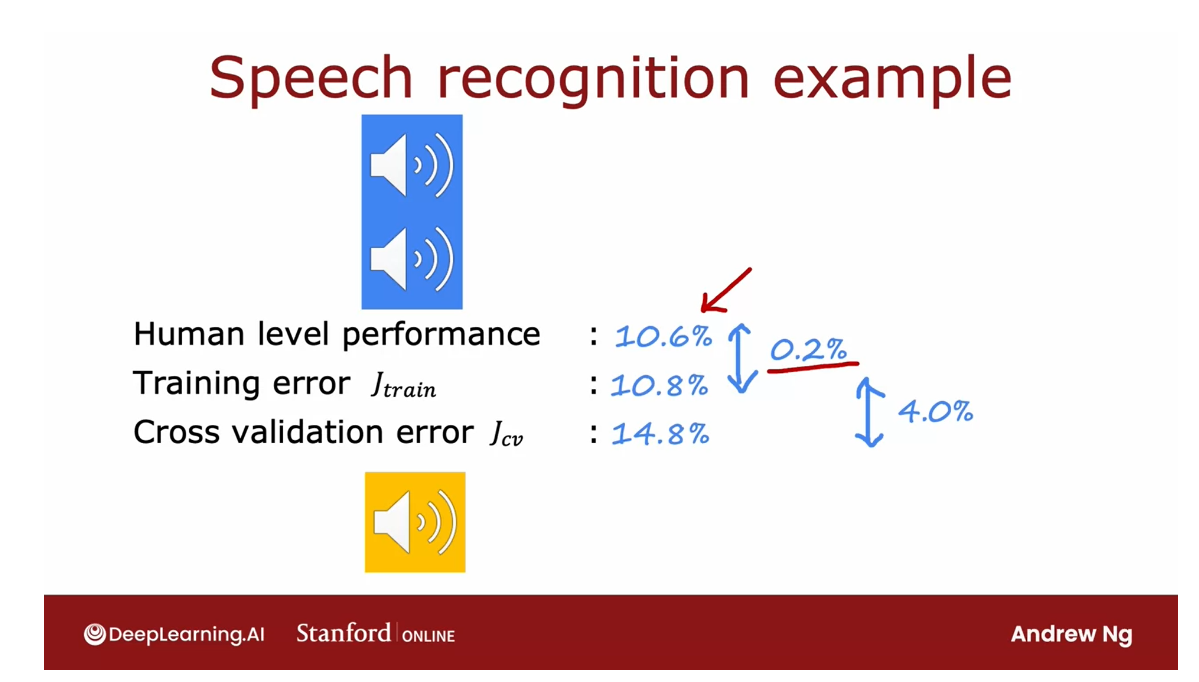

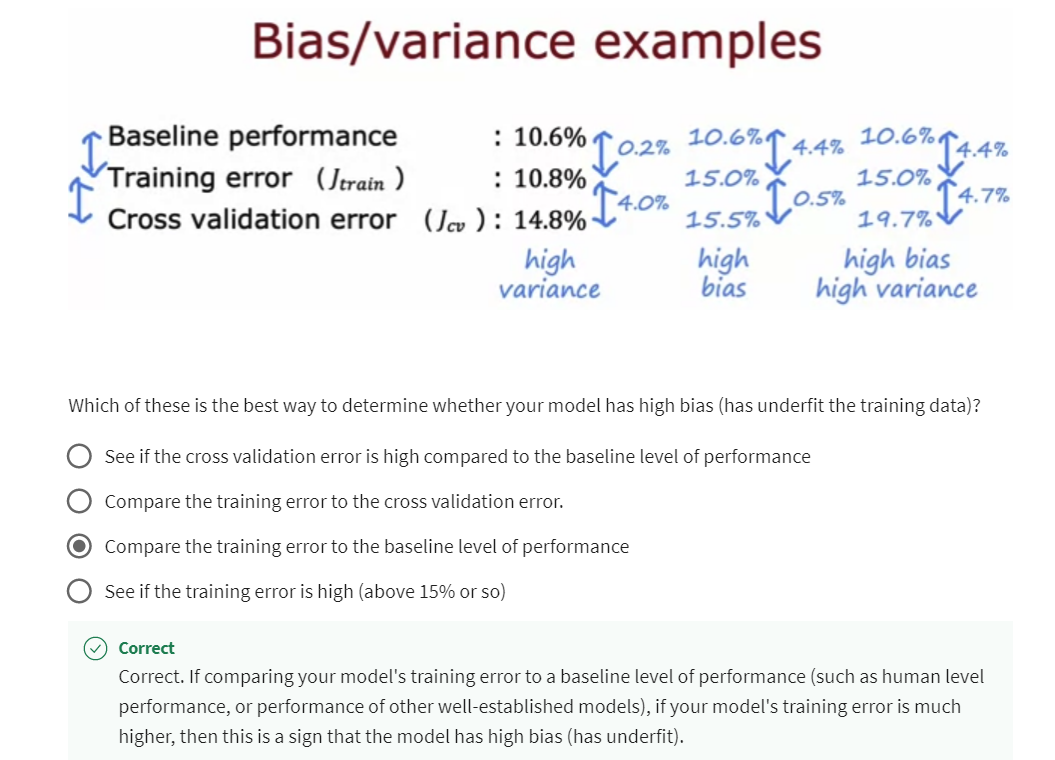

Establishing a baseline level of performance

Let’s look at some

concrete numbers for what J-train and JCV might be, and see how you can judge if a learning algorithm has

high bias or high variance.

Example: application of speech recognition

For the examples in this video, I’m going to use as a running

example the application of speech recognition which is something I’ve worked on

multiple times over the years. Let’s take a look.

A lot of users doing web search on a

mobile phone will use speech recognition

rather than type on the tiny keyboards on

our phones because speaking to a phone is

often faster than typing.

Typical audio that’s

a web search engine we get would be like this, “What is today’s weather?” Or like this, “Coffee shops near me.” It’s the job of the speech recognition algorithms to output the transcripts whether it’s today’s weather or

coffee shops near me.

Now, if you were to train a speech recognition system and measure the training error, and the training

error means what’s the percentage of audio clips in your training set that

the algorithm does not transcribe correctly

in its entirety.

Let’s say the training error for this data-set is

10.8 percent meaning that it transcribes it perfectly for 89.2 percent of

your training set, but makes some mistake in 10.8 percent of

your training set.

If you were to also measure your speech recognition

algorithm’s performance on a separate

cross-validation set, let’s say it gets

14.8 percent error.If you were to look at these numbers it looks like the training error

is really high, it got 10 percent wrong, and then the cross-validation

error is higher but getting 10 percent of even

your training set wrong that seems pretty high.

It seems like that 10 percent

error would lead you to conclude it has high bias because it’s not doing

well on your training set, but it turns out

that when analyzing speech recognition

it’s useful to also measure one other

thing which is what is the human level of performance?

what is the human level of performance

In other words, how

well can even humans transcribe speech accurately

from these audio clips?Concretely, let’s

say that you measure how well fluent speakers can transcribe audio

clips and you find that it is 10.6 percent, and you find that human

level performance achieves 10.6 percent error.

Why is human level

error so high? It turns out that

for web search, there are a lot of audio

clips that sound like this, “I’m going to navigate

to [inaudible].”

There’s a lot of

noisy audio where really no one can accurately transcribe what was said because of the

noise in the audio.

If even a human makes

10.6 percent error, then it seems difficult to expect a learning algorithm

to do much better.

In order to judge if the

training error is high, it turns out to be

more useful to see if the training error is much higher than a human

level of performance, and in this example it does just 0.2 percent

worse than humans.

Given that humans are

actually really good at recognizing speech I think if I can build a speech recognition

system that achieves 10.6 percent error matching human performance

I’d be pretty happy, so it’s just doing a little

bit worse than humans.

But in contrast, the

gap or the difference between JCV and J-train

is much larger. There’s actually a four

percent gap there, whereas previously

we had said maybe 10.8 percent error means

this is high bias.

When we benchmark it to

human level performance, we see that the

algorithm is actually doing quite well on

the training set, but the bigger problem is the cross-validation

error is much higher than the

training error which is why I would conclude that this algorithm actually has more of a variance problem

than a bias problem.

by baseline level of performance I mean what is the level of error you can reasonably hope your learning algorithm to eventually get to.

It turns out when judging if the training error is high is often useful to establish a baseline level of performance, and by baseline level

of performance I mean what is the level of error you can

reasonably hope your learning algorithm to

eventually get to.

One common way to establish a baseline level of performance

is to measure how well humans can do on

this task because humans are really good at

understanding speech data, or processing images or

understanding texts.

Human level performance is often a good benchmark when you

are using unstructured data, such as: audio, images, or texts.

Another way to estimate a baseline level

of performance is if there’s some

competing algorithm, maybe a previous implementation that someone else has

implemented or even a competitor’s

algorithm to establish a baseline level of performance

if you can measure that, or sometimes you might guess

based on prior experience.

If you have access to this baseline level of

performance that is, what is the level of error you can reasonably

hope to get to or what is the desired level of performance that you want

your algorithm to get to?

Then when judging if an algorithm has high

bias or variance, you would look at the baseline

level of performance, and the training error, and the cross-validation error.

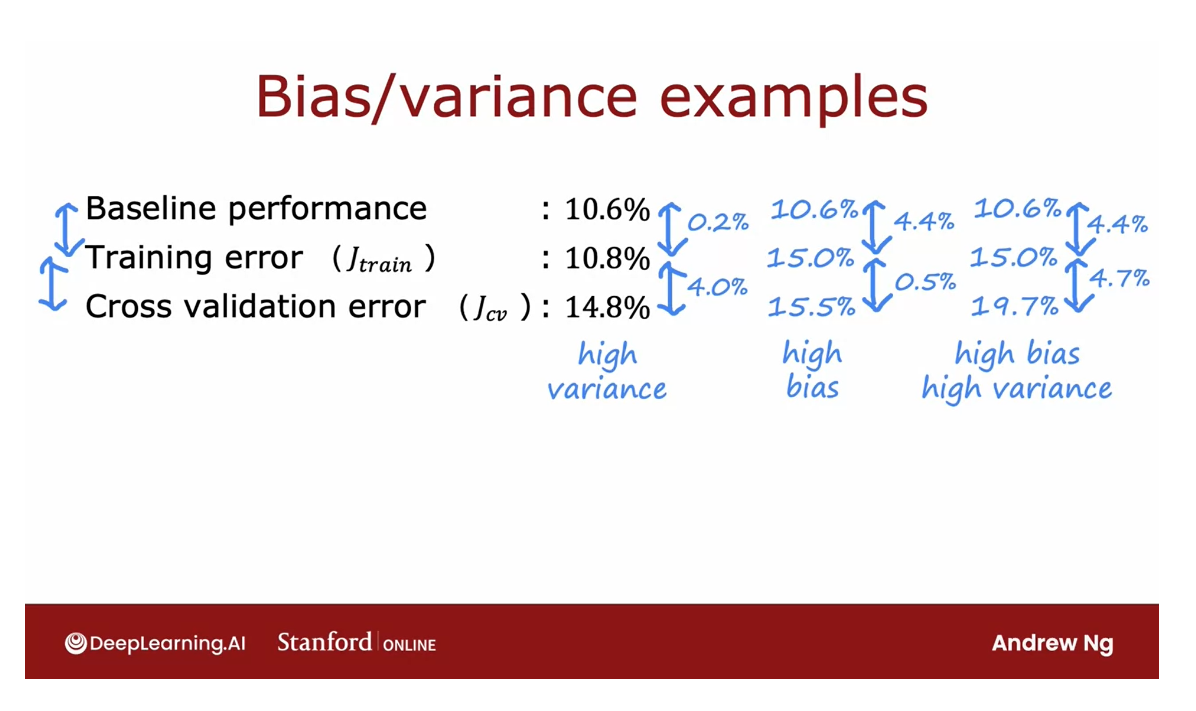

The two key quantities

to measure are then: what is the difference between training error and the baseline level that

you hope to get to.This is 0.2, and if this is large then you would say you have

a high bias problem. You will then also look at this gap between

your training error and your cross-validation error, and if this is high then you will conclude you have a

high variance problem.

That’s why in this example we concluded we have a

high variance problem, whereas let’s look at

the second example. If the baseline level

of performance; that is human level performance,

and training error, and cross validation

error look like this, then this first gap is 4.4 percent and so

there’s actually a big gap.

The training error

is much higher than what humans can

do and what we hope to get to whereas the

cross-validation error is just a little bit bigger

than the training error.If your training error and cross validation

error look like this, I will say this

algorithm has high bias.

By looking at these numbers, training error and

cross validation error, you can get a sense

intuitively or informally of the degree to which

your algorithm has a high bias or

high variance problem.

Just to summarize,

this gap between these first two

numbers gives you a sense of whether you

have a high bias problem, and the gap between these

two numbers gives you a sense of whether you have

a high variance problem.

Sometimes the baseline level of performance could

be zero percent.If your goal is to achieve perfect performance

than the baseline level of performance it

could be zero percent, but for some applications like the speech recognition

application where some audio is just noisy then

the baseline level of a performance could be

much higher than zero.

The method described

on this slide will give you a better read in terms of whether your algorithm suffers from bias or variance.

By the way, it is possible for your algorithms to have high

bias and high variance.Concretely, if you get

numbers like these, then the gap between the baseline and the

training error is large. That would be a 4.6 percent, and the gap between training error and cross

validation error is also large. This is 4.2 percent.

If it looks like this

you will conclude that your algorithm has high

bias and high variance, although hopefully

this won’t happen that often for your

learning applications.

To summarize, we’ve seen that looking at whether

your training error is large is a way to tell if

your algorithm has high bias, but on applications

where the data is sometimes just noisy

and is infeasible or unrealistic to

ever expect to get a zero error then it’s useful to establish this baseline

level of performance.

Rather than just asking is

my training error a lot, you can ask is my training error large relative to what I hope

I can get to eventually, such as, is my training large relative to what

humans can do on the task?

That gives you a more

accurate read on how far away you are in terms of your training error from

where you hope to get to.

Then similarly, looking at whether your

cross-validation error is much larger than

your training error, gives you a sense

of whether or not your algorithm may have a high

variance problem as well.

In practice, this is how I often will look at these

numbers to judge if my learning algorithm has a high bias or high

variance problem.

Now, to further hone our intuition about how a

learning algorithm is doing, there’s one other

thing that I found useful to think about which

is the learning curve. Let’s take a look at what

that means in the next video.

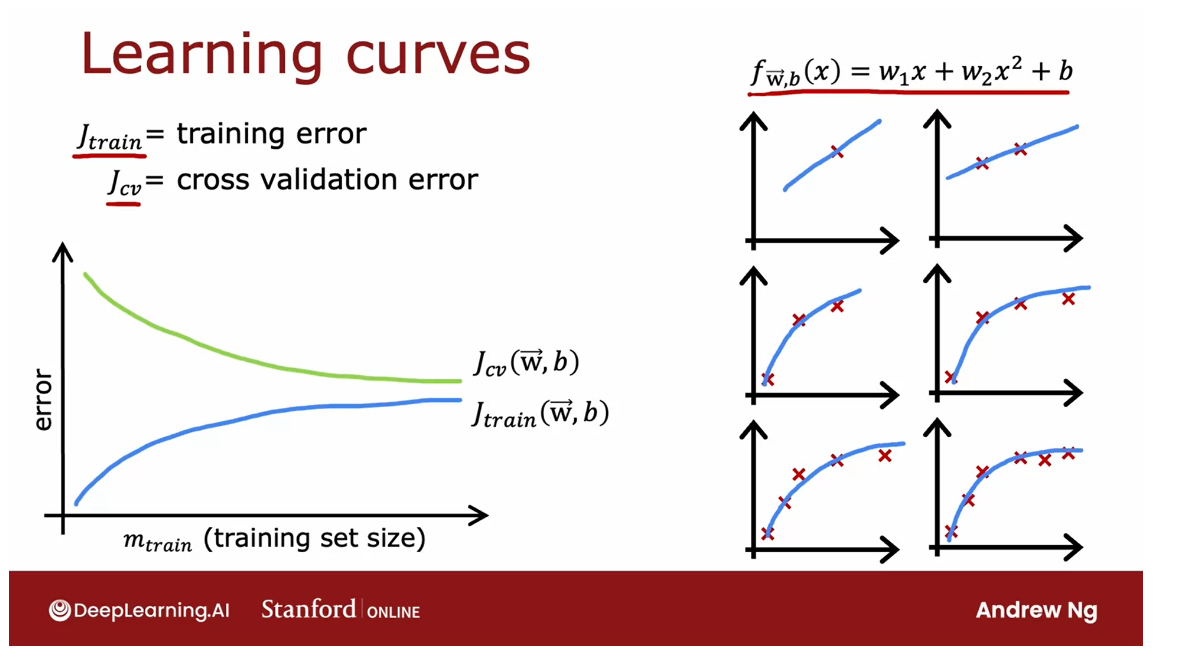

Learning curves

Learning curve: function of the amount of experience (for example, the number of training examples)

Learning curves are a way

to help understand how your learning

algorithm is doing as a function of the amount

of experience it has, whereby experience, I mean, for example, the number of

training examples it has.

Let’s take a look. Let me

plot the learning curves for a model that fits a second-order polynomial

quadratic function like so.I’m going to plot both J_cv, the cross-validation error, as well as J_train

the training error. On this figure, the

horizontal axis is going to be m_train.That is the training set size or the number of examples so the

algorithm can learn from.On the vertical axis, I’m going to plot the error. By error, I mean either

J_cv or J_train.

Let’s start by plotting the

cross-validation error. It will look

something like this. That’s what J_cv of

(w, b) will look like.Is maybe no surprise

that as m_train, the training set

size gets bigger, then you learn a better model and so the cross-validation

error goes down.Now, let’s plot J_train of (w, b) of what the training

error looks like as the training set

size gets bigger.

It turns out that

the training error will actually look like this. That has the training

set size gets bigger, the training set error

actually increases.

Let’s take a look at

why this is the case. We’ll start with an example of when you have just a

single training example.Well, if you were to fit a

quadratic model to this, you can fit easiest

straight line or a curve and your training

error will be zero.How about if you have two

training examples like this? Well, you can again fit a straight line and achieve

zero training error.

In fact, if you have

three training examples, the quadratic function

can still fit this very well and get pretty much

zero training error, but now, if your training set

gets a little bit bigger, say you have four

training examples, then it gets a

little bit harder to fit all four examples perfectly.

You may get a curve

that looks like this, is a pretty well, but you’re a little bit off in a few

places here and there.When you have entries, the training set size to four the training error has

actually gone up a little bit.

How about we have five

training examples. Well again, you can

fit it pretty well, but it gets even a

little bit harder to fit all of them perfectly.We haven’t even larger shading sets it just gets harder and harder to fit every single one of your training

examples perfectly.

To recap, when you have

a very small number of training examples like

one or two or even three, is relatively easy to get zero or very

small training error, but when you have a

larger training set is harder for quadratic function to fit all the training

examples perfectly.

Which is why as the

training set gets bigger, the training error

increases because it’s harder to fit all of the

training examples perfectly.

Notice one other thing

about these curves, which is the

cross-validation error, will be typically higher than the training error because you fit the parameters

to the training set.

You expect to do

at least a little bit better or when m is small, maybe even a lot better on the training set than on

the trans validation set.

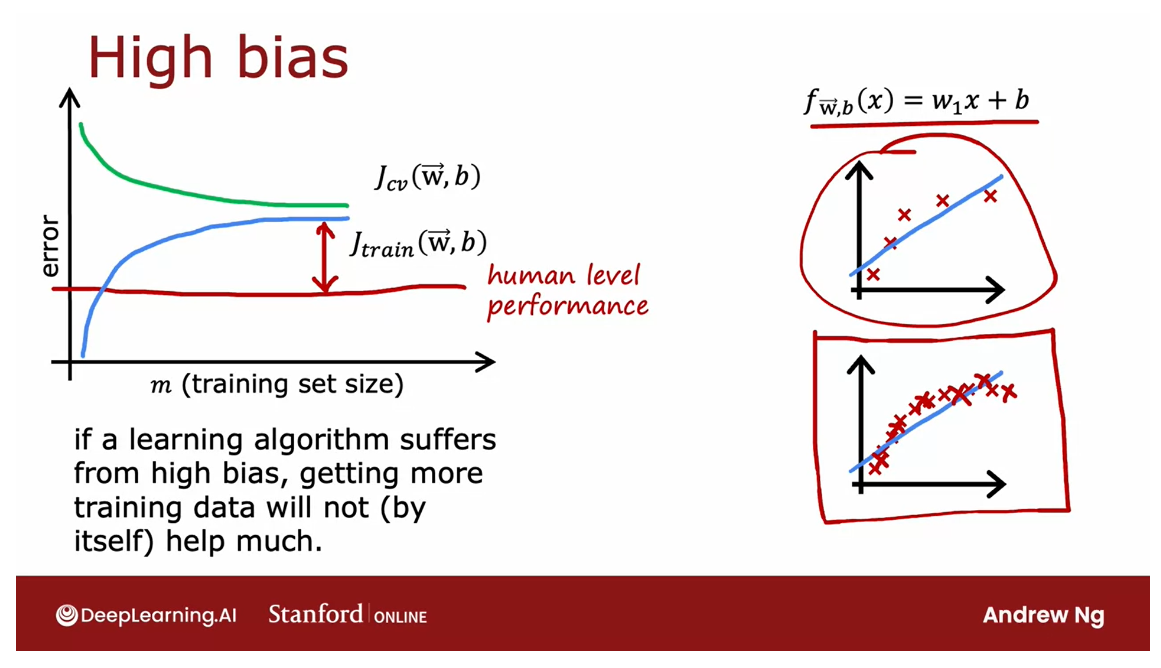

High Bias 欠拟合 underfit

Let’s now take a look at what the learning curves

will look like for an average with high bias versus

one with high variance.

Let’s start at the high bias

or the underfitting case.Recall that an example of high bias would be if you’re

fitting a linear function, so curve that looks like this. If you were to plot

the training error, then the training error will go up like so as you’d expect.

In fact, this curve of training error may

start to flatten out. We call it plateau, meaning flatten

out after a while.

That’s because as you get more and more training examples when you’re fitting the

simple linear function, your model doesn’t actually

change that much more.

Average training error flattens out after a while

Cross validation error come down and also flattened out after a while

It’s fitting a straight

line and even as you get more and more

and more examples, there’s just not that

much more to change, which is why the

average training error flattens out after a while.

Similarly, your cross-validation

error will come down and also flattened

out after a while, which is why J_cv again

is higher than J_train, but J_cv will tend

to look like that.

It’s because be

honest, its endpoints, even as you get more and

more and more examples, not much is going to change about the street

now you’re fitting.It’s just too simple a model to be fitting into

this much data. Which is why both of

these curves, J_cv, and J_train tend to

flatten after a while.

Have a measure of the baseline level of performance

Big gap between the baseline level of performance and J t r a i n J_{train} Jtrain: indicator for this algorithm having high bias

If you had a measure of that baseline

level of performance, such as human-level performance, then they’ll tend to

be a value that is lower than your

J_train and your J_cv.Human-level performance

may look like this.

There’s a big gap between the baseline level of

performance and J_train, which was our indicator for this algorithm having high bias. That is, one could hope to

be doing much better if only we could fit a more complex function

than just a straight line.

Now, one interesting thing about this plot is you can ask, what do you think

will happen if you could have a much

bigger training set?

What would it look

like if we could increase even further than

the right of this plot, you can go further to

the right as follows?

Well, you can imagine if you were to extend both of

these curves to the right, they’ll both flatten out

and both of them will probably just continue

to be flat like that.No matter how far you

extend to the right of this plot, these two curves, they will never somehow

find a way to dip down to this human level

performance or just keep on being flat like this, pretty much forever no matter how large the training set gets.

That gives this conclusion, maybe a little bit surprising, that if a learning

algorithm has high bias, getting more training

data will not by itself hope that much.

High bias: more data will not let you bring down the error rate that much

I know that we’re

used to thinking that having more data is good, but if your algorithm

has high bias, then if the only thing you do is throw more

training data added, that by itself will not ever let you bring down the

error rate that much.

It’s because of this really, no matter how many more examples

you add to this figure, the straight linear

fitting just isn’t going to get that much better.That’s why before

investing a lot of effort into collecting

more training data, it’s worth checking if your learning algorithm has high bias, because if it does, then you probably need to do some other things other than just throw more

training data added.

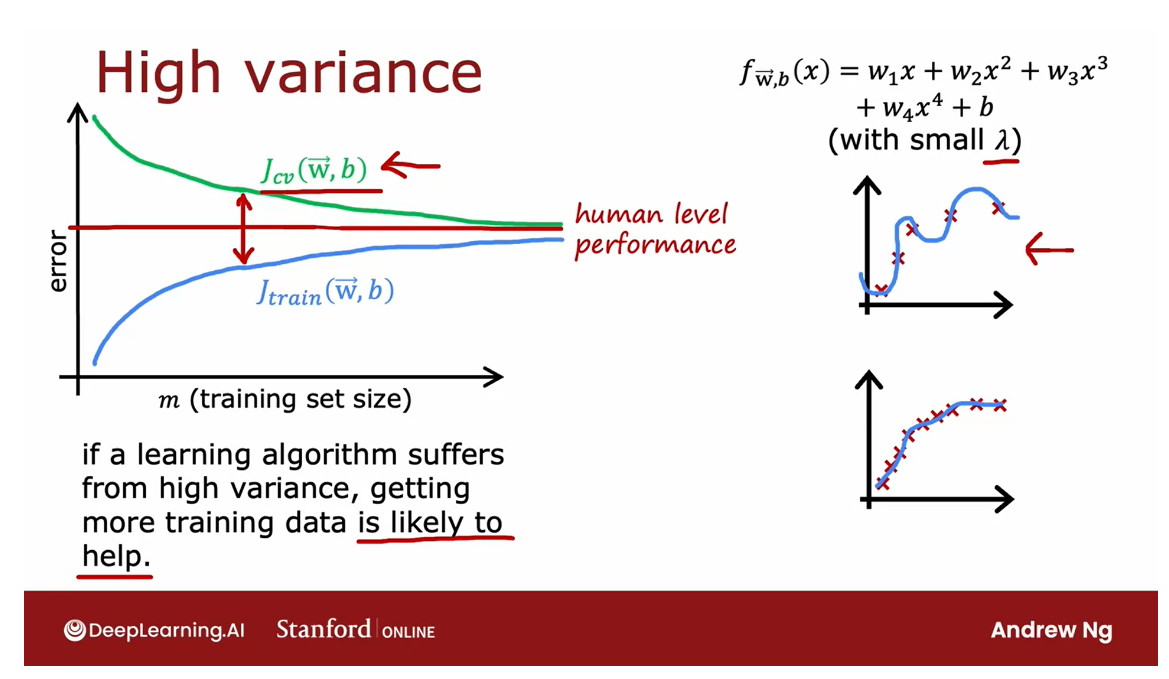

High Variance

Overfit 过拟合的情况

Let’s now take a look at what the learning curve

looks like for learning algorithm

with high variance.

You might remember that

if you were to fit the forefather polynomial

with small lambda, say, or even lambda equals zero, then you get a curve

that looks like this, and even though it

fits the training data very well, it

doesn’t generalize.

Let’s now look at what a learning curve

might look like in this high variance scenario. J train will be going up as the training

set size increases, so you get a curve

that looks like this, and J cv will be much higher, so your cross-validation

error is much higher than

your training error.

The fact there’s a huge gap here is what I can tell you that this high-variance is doing much better on the training set than it’s doing on your

cross-validation set.

If you were to plot a baseline

level of performance, such as human level performance, you may find that it

turns out to be here, that J train can sometimes

be even lower than the human level

performance or maybe human level performance is a

little bit lower than this.

But when you’re over

fitting the training set, you may be able to fit

the training set so well to have an

unrealistically low error, such as zero error in

this example over here, which is actually better

than how well humans will actually be able to predict housing prices or whatever the application

you’re working on.

But again, to signal for

high variance is whether J cv is much higher

than J train.

When you have high variance, then increasing the training

set size could help a lot, and in particular, if we could extrapolate these

curves to the right, increase M train, then the training error

will continue to go up, but then the cross-validation

error hopefully will come down and

approach J train.

High variance: it might be possible just by increasing the training set size to lower the cross-validation error and to get your algorithm to perform better and better

So in this scenario, it might be possible just by increasing the

training set size to lower the cross-validation

error and to get your algorithm to

perform better and better, and this is unlike

the high bias case, where if the only thing you

do is get more training data, that won’t actually help you learn your algorithm

performance much.

Summary

To summarize, if a learning algorithm

suffers from high variance, then getting more training

data is indeed likely to help.Because extrapolating to

the right of this curve, you see that you can expect

J cv to keep on coming down.In this example, just by

getting more training data, allows the algorithm to go from relatively high

cross-validation error to get much closer to human

level performance.

You can see that

if you were to add a lot more training examples and continue to fill the

fourth-order polynomial, then you can just get

a better fourth order polynomial fit to this data than just very weakly

curve up on top.

If you’re building a machine

learning application, you could plot the learning curves if

you want, that is, you can take different subsets

of your training sets, and even if you have, say, 1,000 training examples, you could train a model on just 100 training

examples and look at the training error and

cross-validation error, then train them although

on 200 examples, holding out 800 examples and just not using them for now, and plot J train

and J cv and so on the repeats and plot out what the learning

curve looks like.

If we were to

visualize it that way, then that could be another

way for you to see if your learning

curve looks more like a high bias or

high variance one.

One downside of the

plotting learning curves like this is

something I’ve done, but one downside is, it is computationally

quite expensive to train so many different models using different size subsets of your training set,

so in practice, it isn’t done that

often, but nonetheless, I find that having this mental visual picture in my head of what the

training set looks like, sometimes that helps me to

think through what I think my learning algorithm

is doing and whether it has high bias or high variance.

About the next video

I know we’ve gone through a

lot about bias and variance, let’s go back to our

earlier example of if you’ve trained a model for

housing price prediction, how does bias and variance help you decide what to do next? Let’s go back to that

earlier example, which I hope will now make

a lot more sense to you. Let’s do that in the next video.

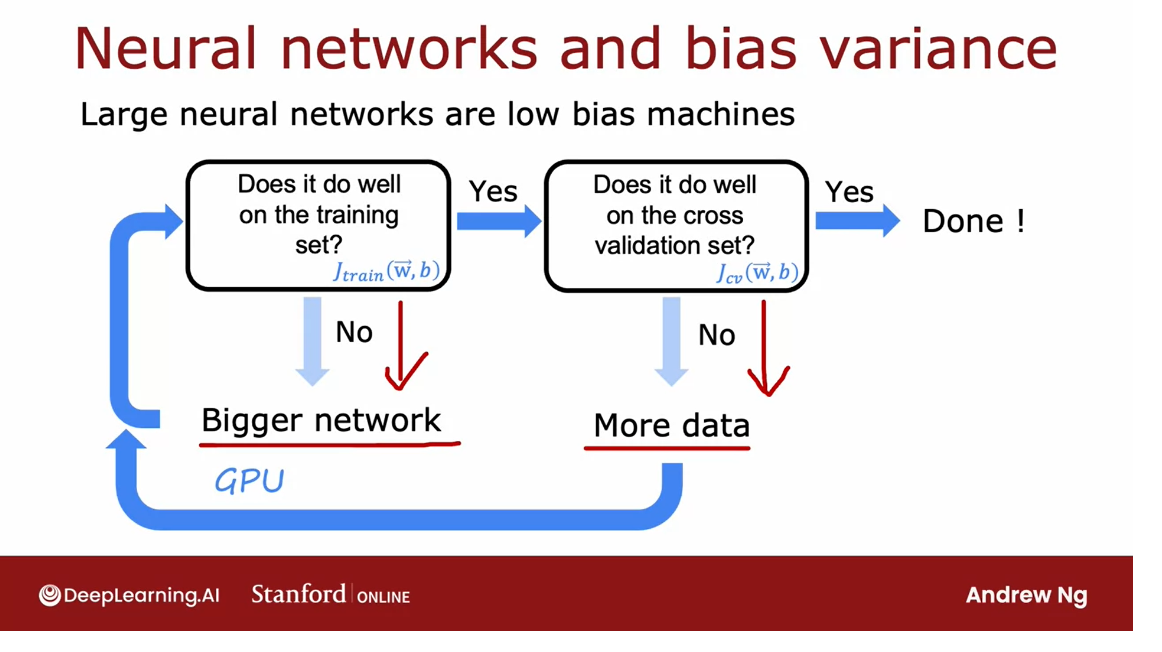

Deciding what to try next: revisited

You’ve seen how by looking

at J train and Jcv, that is the training error

and cross-validation error, or maybe even plotting

a learning curve.

You can try to get

a sense of whether your learning algorithm has

high bias or high variance.This is the procedure

I routinely do when I’m training a

learning algorithm more often look at the training error and cross-validation error to try to decide if my algorithm has

high bias or high variance.

It turns out this

will help you make better decisions about what to try next in order to improve the performance of

your learning algorithm.

Let’s look at an example. This is actually the example

that you have seen earlier.If you’ve implemented

regularized linear regression on predicting housing prices, but your algorithm mix on the set three large

errors since predictions, what do you try next?

These were the six ideas that we had when we had looked

over this slide earlier.

Getting more training examples, try small set of features, additional features, and so on. It turns out that each of

these six items either helps fix a high variance

or a high bias problem.In particular, if your learning

algorithm has high bias, three of these techniques

will be useful.If your learning algorithm

has high variance than a different three of these

techniques will be useful.

Six options here

Let’s see if we can figure

out which is which.

This first option or getting more training examples helps to fix a high variance problem.

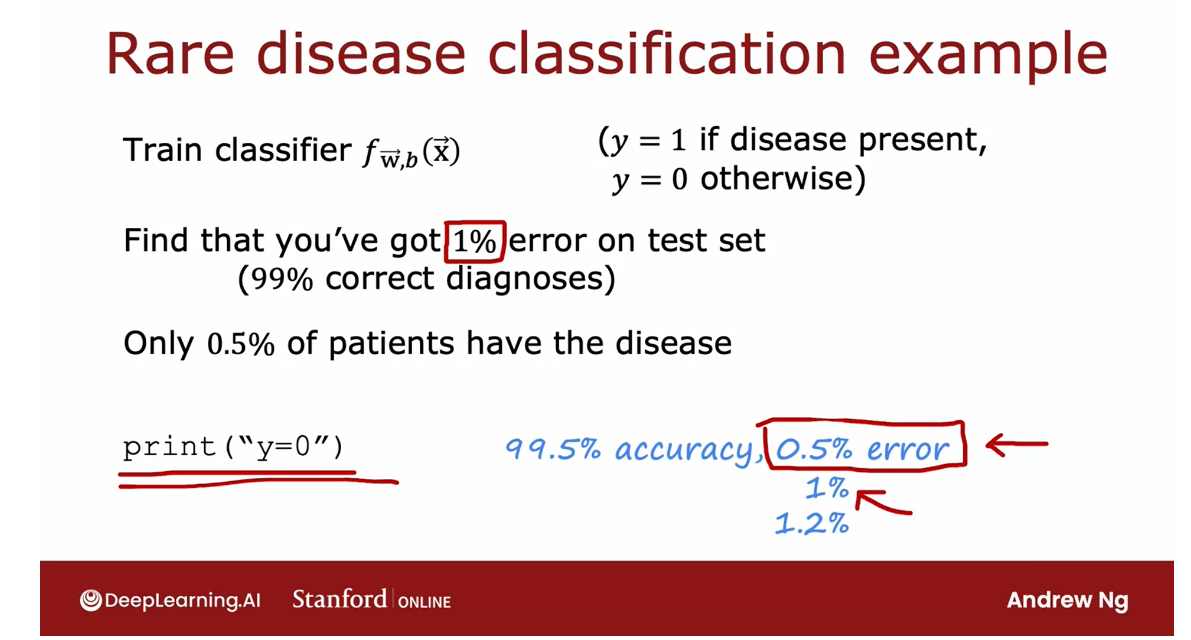

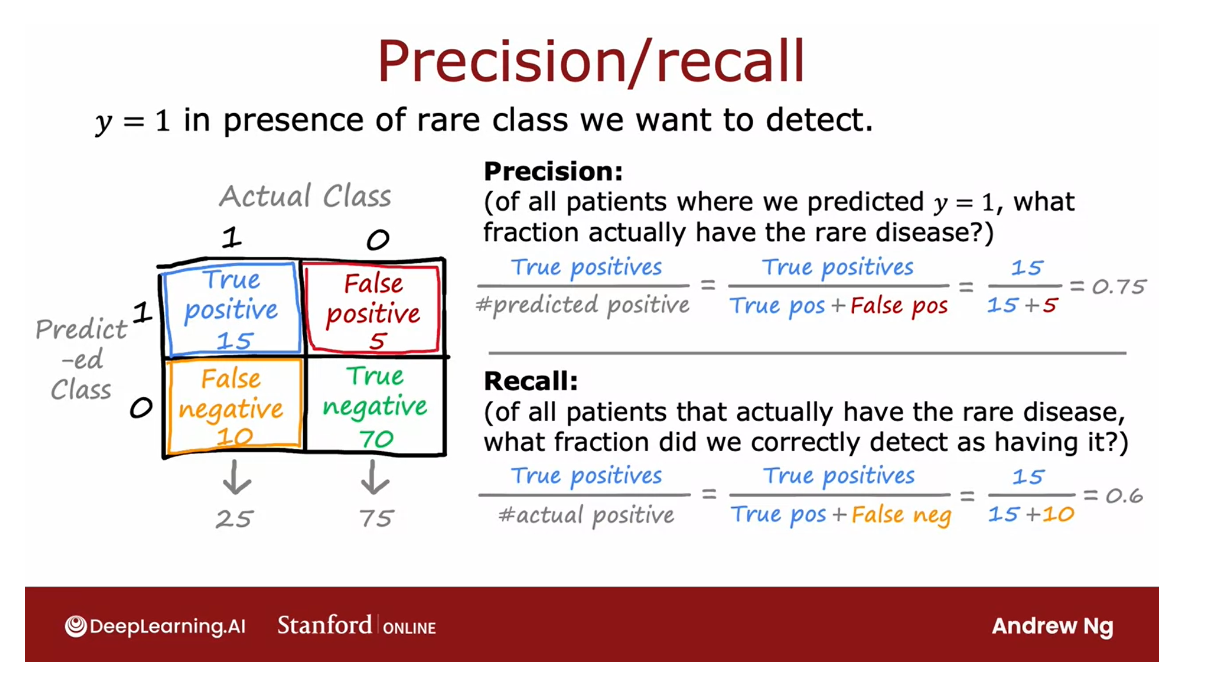

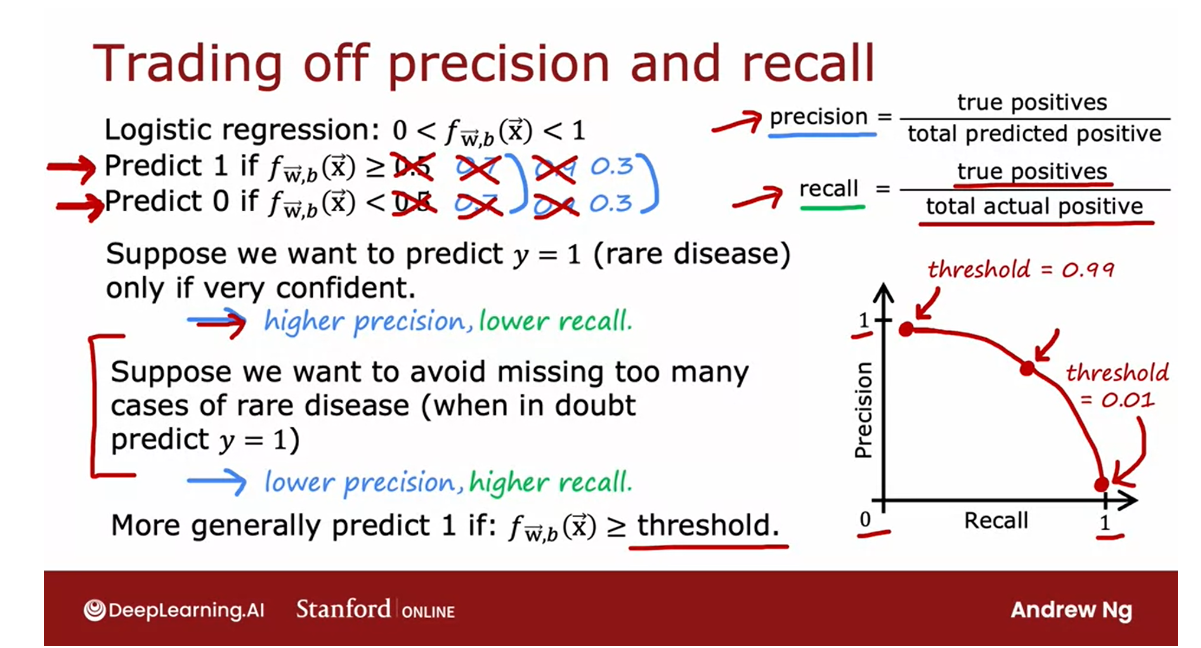

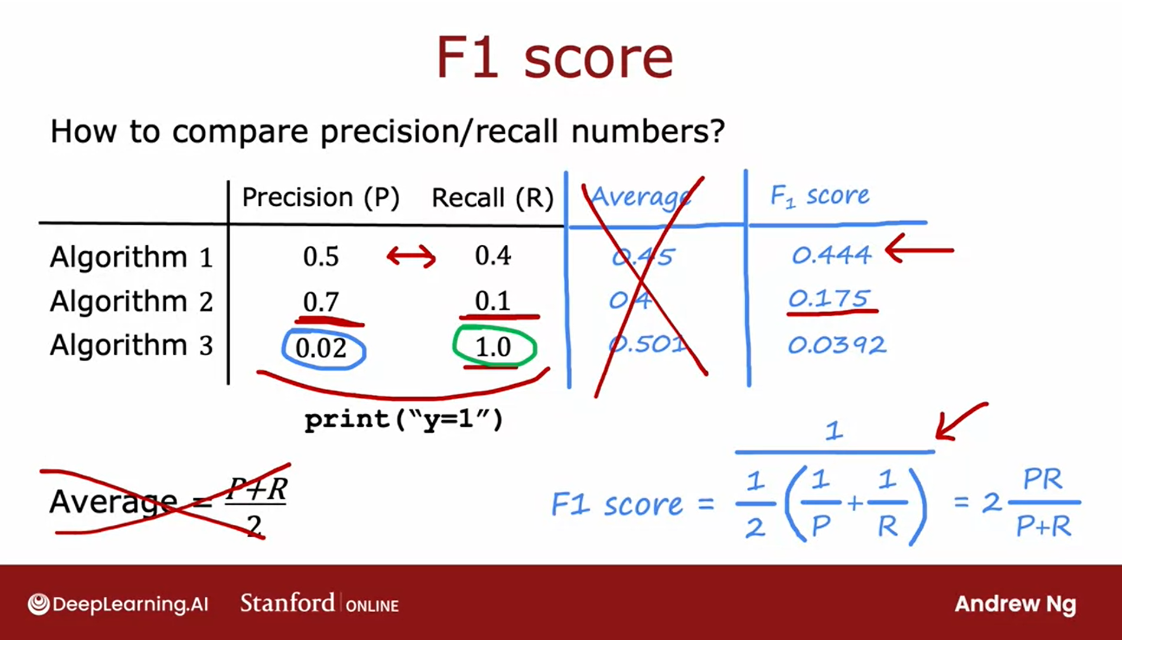

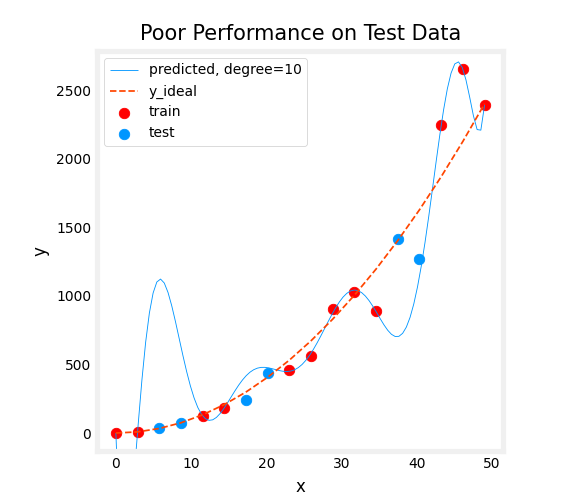

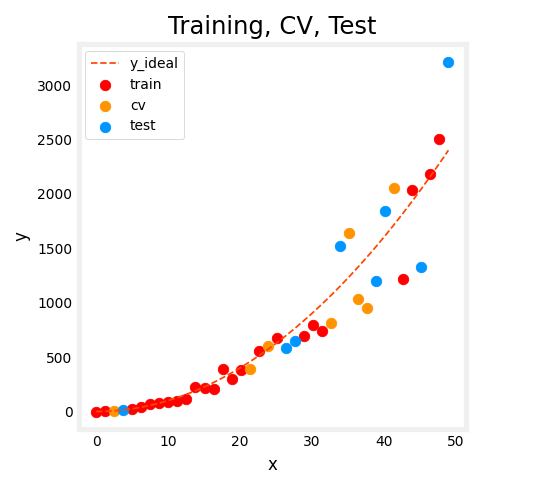

First one is get more