2024 Latest: A Hands-On Tutorial for Calling ChatGPT from Python

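Table of Contents

- 2024 Latest: A Hands-On Tutorial for Calling ChatGPT from Python
  - I. Introduction
  - II. Detailed Walkthrough
    - 1. Minimal Program
    - 2. Multi-turn Conversation
    - 3. Streaming Output
    - 4. Returning Token Usage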

I. Introduction

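I used this often, so here is a brief write-up. Note that the OpenAI Python library has been updated. The code below uses the current v1.x interface (`from openai import OpenAI`). If your installed version is older, please upgrade it first.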

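II. Detailed Walkthrough

Install the openai package (use `pip install --upgrade openai` to upgrade an older installation):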

```shell
pip install openai
```
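Older 0.x releases of openai do not provide the v1.x interface (`from openai import OpenAI`) that this tutorial relies on. Below is a minimal sketch for checking which version is installed. It uses only the standard library's `importlib.metadata`, and the helper name `openai_major_version` is hypothetical:

```python
from importlib.metadata import PackageNotFoundError, version


def openai_major_version(dist_name="openai"):
    """Return the major version of an installed distribution, or None if it is missing."""
    try:
        installed = version(dist_name)  # a version string such as "1.12.0"
    except PackageNotFoundError:
        return None
    return int(installed.split(".")[0])


if __name__ == "__main__":
    major = openai_major_version()
    if major is None:
        print("openai is not installed: run pip install openai")
    elif major < 1:
        print("openai is older than 1.0: run pip install --upgrade openai")
    else:
        print(f"openai {major}.x is installed")
```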

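1. Minimal Program

This version handles only a single round. Each request sends just the current user message, so the model does not remember earlier turns.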

```python
from openai import OpenAI

api_key = 'your apikey'  # replace with your own API key

def openai_reply(content):
    client = OpenAI(api_key=api_key)
    # Send only the current user message; no earlier turns are included
    chat_completion = client.chat.completions.create(
        messages=[
            {
                "role": "user",
                "content": content,
            }
        ],
        model="gpt-4-1106-preview",
    )
    # Return the text of the first choice
    return chat_completion.choices[0].message.content

if __name__ == "__main__":
    while True:
        content = input("Human:")
        text1 = openai_reply(content)
        print("AI:" + text1)
```
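Hard-coding `api_key` in the source makes it easy to leak. A common alternative, sketched here as an assumption rather than the author's setup, is to read the key from the `OPENAI_API_KEY` environment variable. The v1 `OpenAI()` client also falls back to that variable when no `api_key` argument is passed. The helper names `load_api_key` and `make_client` are hypothetical:

```python
import os


def load_api_key(env_var="OPENAI_API_KEY"):
    """Read the API key from an environment variable instead of the source code."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Please set the {env_var} environment variable")
    return key


def make_client():
    """Create the client; openai is imported lazily so load_api_key works without it."""
    from openai import OpenAI
    return OpenAI(api_key=load_api_key())
```

On Linux or macOS, you would run `export OPENAI_API_KEY="your apikey"` before starting the script. Then `client = make_client()` takes the place of `OpenAI(api_key=api_key)`.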

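2. Multi-turn Conversation

This version supports multiple rounds. The key addition is memory: the whole conversation history, including the model's earlier replies, is sent with every request.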

```python
from openai import OpenAI

api_key = 'your apikey'  # replace with your own API key

def openai_replys(memory):
    client = OpenAI(api_key=api_key)
    chat_completion = client.chat.completions.create(
        messages=memory,  # memory: the full conversation so far
        model="gpt-4-1106-preview",
    )
    reply = chat_completion.choices[0].message.content
    # Store the reply so the next round sees it as context
    memory.append({'role': 'assistant', 'content': reply})
    return reply

if __name__ == "__main__":
    memory = []  # memory carried across rounds
    while True:
        content = input("Human:")
        memory.append({'role': 'user', 'content': content})
        text1 = openai_replys(memory)
        print("AI:" + text1)
```

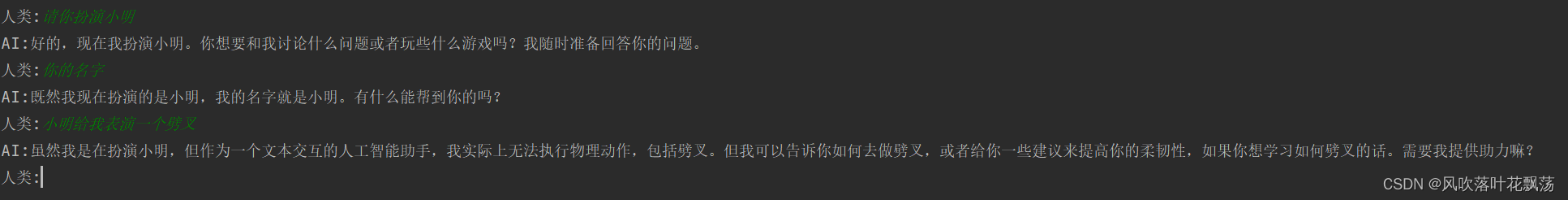

程序输出:
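To see what the memory actually contains, the sketch below replays two turns without an API key. A hypothetical `fake_reply` function stands in for `openai_replys`. It shows that each request receives the whole history, with `user` and `assistant` roles alternating:

```python
def fake_reply(memory):
    """Hypothetical stand-in for openai_replys: echoes the last user message."""
    reply = "You said: " + memory[-1]["content"]
    memory.append({"role": "assistant", "content": reply})  # same bookkeeping as openai_replys
    return reply


memory = []  # conversation history shared by every turn
for content in ["Hi", "What did I just say?"]:
    memory.append({"role": "user", "content": content})
    print("AI:" + fake_reply(memory))

for message in memory:
    print(message["role"], "->", message["content"])
```

Because the list only grows, long conversations send more tokens with every request and can eventually exceed the model's context window.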

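3. Streaming Output

This version adds streaming output. The reply is printed piece by piece as it is generated, so the program no longer looks stuck while waiting for the full answer.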

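```python
from openai import OpenAI

api_key = 'your apikey'  # replace with your own API key

def openai_stream(memory):
    client = OpenAI(api_key=api_key)
    # stream=True returns an iterator of chunks instead of one complete response
    stream = client.chat.completions.create(
        messages=memory,  # memory
        model="gpt-4-1106-preview",
        stream=True,
    )
    return stream

if __name__ == "__main__":
    memory = []
    while True:
        content = input("Human:")
        memory.append({'role': 'user', 'content': content})
        stream = openai_stream(memory)
        print("AI:", end='')
        aitext = ''
        for chunk in stream:
            # Some chunks carry no text (e.g. the final one), so skip them
            if chunk.choices and chunk.choices[0].delta.content is not None:
                # flush=True shows each piece immediately instead of waiting for a newline
                print(chunk.choices[0].delta.content, end="", flush=True)
                aitext += chunk.choices[0].delta.content
        print()  # end the line once the reply is complete
        memory.append({'role': 'assistant', 'content': aitext})
```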
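The streaming loop can be factored into a helper and tested without the network. This is an illustrative sketch under stated assumptions:

- `collect_stream` is a hypothetical name.
- The fake chunks built with `types.SimpleNamespace` only mimic the `chunk.choices[0].delta.content` shape that the loop reads.
- In a real stream, the last chunk usually carries `content=None`.

```python
from types import SimpleNamespace


def collect_stream(stream, on_text=lambda text: print(text, end="", flush=True)):
    """Pass each text delta to on_text as it arrives and return the joined reply."""
    parts = []
    for chunk in stream:
        if not chunk.choices:  # skip chunks that carry no choices at all
            continue
        delta = chunk.choices[0].delta.content
        if delta is not None:  # skip chunks without text, e.g. the final one
            on_text(delta)
            parts.append(delta)
    return "".join(parts)


def fake_chunk(content):
    """Build an object shaped like a streamed chunk: chunk.choices[0].delta.content."""
    return SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content=content))])


fake_stream = [fake_chunk("Hel"), fake_chunk("lo"), fake_chunk("!"), fake_chunk(None)]
aitext = collect_stream(fake_stream)
print()
```

With a real client, `aitext = collect_stream(openai_stream(memory))` would replace the inner `for` loop.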

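4. Returning Token Usage

This version reports the tokens consumed by each request in two ways. It prints `usage` as returned by the API, and it also computes a local estimate with `tiktoken` (install it with `pip install tiktoken`). The fields are: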

| Token type | Meaning |
|---|---|
| completion_tokens | Output tokens (the model's reply) |
| prompt_tokens | Input tokens (the messages sent) |
| total_tokens | Total tokens (prompt + completion) |

```python
from openai import OpenAI
import tiktoken

api_key = 'your apikey'  # replace with your own API key

def get_encoding(model):
    """Return the tiktoken encoding for a model, falling back to cl100k_base."""
    try:
        return tiktoken.encoding_for_model(model)
    except KeyError:
        print("Warning: model not found. Using cl100k_base encoding.")
        return tiktoken.get_encoding("cl100k_base")

def num_tokens_from_messages(messages, model="gpt-3.5-turbo"):
    """Estimate the number of prompt tokens used by a list of messages."""
    encoding = get_encoding(model)
    # Approximate per-message framing overhead, as in the OpenAI Cookbook
    # token-counting example for gpt-3.5/gpt-4 chat models
    tokens_per_message = 3
    num_tokens = 0
    for message in messages:
        num_tokens += tokens_per_message
        for value in message.values():
            num_tokens += len(encoding.encode(value))  # role and content text
    num_tokens += 3  # every reply is primed with <|start|>assistant<|message|>
    return num_tokens

def calToken(memory, aitext, model="gpt-3.5-turbo"):
    """Locally estimate token usage for one request/reply pair."""
    encoding = get_encoding(model)
    completion_tokens = len(encoding.encode(aitext))
    prompt_tokens = num_tokens_from_messages(memory, model=model)
    token_count = completion_tokens + prompt_tokens
    return {"completion_tokens": completion_tokens, "prompt_tokens": prompt_tokens, "total_tokens": token_count}

def openai_chat(memory):
    client = OpenAI(api_key=api_key)
    response = client.chat.completions.create(
        messages=memory,  # memory
        model="gpt-4-1106-preview",
    )
    # usage holds the exact token counts reported by the API
    print('total Token:' + str(response.usage))
    return response.choices[0].message.content

if __name__ == "__main__":
    memory = []  # conversation memory
    while True:
        content = input("Human:")
        memory.append({'role': 'user', 'content': content})  # add the user input to memory
        aitext = openai_chat(memory)
        print("AI:" + aitext)
        # Estimate before the reply is appended, so memory matches what was sent
        cocus = calToken(memory, aitext, model="gpt-4-1106-preview")
        print("Tokens consumed:" + str(cocus))
        memory.append({'role': 'assistant', 'content': aitext})
```
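The API already reports exact counts in `usage`, so the local `calToken` estimate is mainly useful as a cross-check or for budgeting before a request is sent.

Below is a small hypothetical helper, `usage_gap`, that compares the two. It only assumes that the usage object exposes `prompt_tokens`, `completion_tokens` and `total_tokens` attributes, as `response.usage` does in the v1 SDK. The demo uses `SimpleNamespace` with illustrative numbers, not measured results:

```python
from types import SimpleNamespace

FIELDS = ("prompt_tokens", "completion_tokens", "total_tokens")


def usage_gap(estimate, usage):
    """Return (API count - local estimate) for each token field."""
    return {field: getattr(usage, field) - estimate[field] for field in FIELDS}


# Illustrative values only; in practice pass calToken(...) and response.usage
estimate = {"prompt_tokens": 15, "completion_tokens": 9, "total_tokens": 24}
usage = SimpleNamespace(prompt_tokens=15, completion_tokens=10, total_tokens=25)
print(usage_gap(estimate, usage))
```

A non-zero `prompt_tokens` gap usually means that the per-message overhead assumed in `num_tokens_from_messages` does not match the model's actual chat format.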