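Contents

- Overview

- Practice

- Install dependencies

- Install Docker online

- Install containerd

- Binary installation

- yum installation

- Modify the containerd configuration file

- cni

- etcd

- rsync

- go

- Set the Go module proxy

- Install CFSSL

- Download the Kubernetes source

- Build and start a local single-node cluster

- Problems

- What to do when Kubernetes does not start properly

- Log of a normal Kubernetes startup

- Test

- Conclusion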

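Overview

This article walks through compiling the Kubernetes (k8s) source on CentOS 7, running a local single-node cluster from the build, and testing it with an nginx pod.

# If packages such as vim cannot be found in the configured repos, mount the local installation ISO and install from it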

[root@test kubernetes]# mount /dev/cdrom /mnt
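Mounting the ISO only makes its packages visible on disk; yum also needs a repo definition that points at the mount point. The following is a minimal sketch of such a definition. The repo id `local-iso`, the display name, and `gpgcheck=0` are assumptions chosen for illustration, not values taken from this setup.

```shell
# Print a yum repo definition that points at a locally mounted install ISO.
# "local-iso" is a hypothetical repo id; $1 is the mount point (default /mnt).
render_local_repo() {
  local mount_point="${1:-/mnt}"
  cat <<EOF
[local-iso]
name=CentOS 7 local ISO
baseurl=file://${mount_point}
enabled=1
gpgcheck=0
EOF
}

# Example (as root):
#   render_local_repo /mnt > /etc/yum.repos.d/local-iso.repo
```

Writing the output to a file under /etc/yum.repos.d/ should let `yum install` resolve packages from the ISO while it stays mounted.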

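Practice

Install dependencies

Note: several package names below (g++, libacl1-dev, libattr1-dev, libxxhash-dev, libzstd-dev, liblz4-dev, libssl-dev) follow Debian/Ubuntu naming. yum reports such names as unavailable and skips them; on CentOS 7 the equivalents are typically named gcc-c++, libacl-devel, libattr-devel, openssl-devel, and so on.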

yum install -y gcc g++ gawk autoconf automake python3-cmarkgfm

yum install -y acl libacl1-dev

yum install -y attr libattr1-dev

yum install -y libxxhash-dev libzstd-dev liblz4-dev libssl-dev jq python3-pip

yum install -y vim wget

yum install -y ca-certificates curl gnupg

Install Docker online

The steps below follow the official Docker documentation, with the CentOS base repo switched to the Aliyun mirror:

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@test kubernetes]# yum -y install yum-utils

yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine

yum install -y yum-utils

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

[root@test kubernetes]# yum install container-selinux

yum list docker-ce --showduplicates|sort -r

# To install the latest version, run:

yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

systemctl start docker

docker run hello-world

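After a system reboot Docker is not running and has to be started again (running systemctl enable docker would make it start at boot):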

[root@test kubernetes]# systemctl start docker

[root@test kubernetes]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@test kubernetes]# docker -v

Docker version 25.0.2, build 29cf629

Install containerd

This walkthrough uses the yum installation method.

Binary installation

# Either command downloads the release archive

wget https://github.com/containerd/containerd/releases/download/v1.7.13/cri-containerd-cni-1.7.13-linux-amd64.tar.gz

curl -LO https://github.com/containerd/containerd/releases/download/v1.7.13/cri-containerd-cni-1.7.13-linux-amd64.tar.gz

yum installation

# Fetch the yum repo file

[root@test ~]# wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

--2024-02-04 13:52:47-- https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 113.219.178.241, 101.226.26.145, 113.219.178.228, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|113.219.178.241|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2081 (2.0K) [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/docker-ce.repo’

100%[==========================================================================================================================================>] 2,081 --.-K/s in 0s

2024-02-04 13:52:47 (183 MB/s) - ‘/etc/yum.repos.d/docker-ce.repo’ saved [2081/2081]

# Install with yum

[root@test ~]# yum -y install containerd.io

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* elrepo: ftp.yz.yamagata-u.ac.jp

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

file:///mnt/repodata/repomd.xml: [Errno 14] curl#37 - "Couldn't open file /mnt/repodata/repomd.xml"

Trying other mirror.

Package containerd.io-1.6.28-3.1.el7.x86_64 already installed and latest version

Nothing to do

# Use rpm -qa to confirm the package is installed

[root@test ~]# rpm -qa | grep containerd

containerd.io-1.6.28-3.1.el7.x86_64

# Enable the containerd service at boot and start it

[root@test ~]# systemctl enable containerd

Created symlink from /etc/systemd/system/multi-user.target.wants/containerd.service to /usr/lib/systemd/system/containerd.service.

[root@test ~]# systemctl start containerd

# Check the status of the containerd service

[root@test ~]# systemctl status containerd

● containerd.service - containerd container runtime

Loaded: loaded (/usr/lib/systemd/system/containerd.service; enabled; vendor preset: disabled)

Active: active (running) since Sun 2024-02-04 13:23:26 CST; 31min ago

Docs: https://containerd.io

Main PID: 2363 (containerd)

CGroup: /system.slice/containerd.service

└─2363 /usr/bin/containerd

Feb 04 13:23:26 test containerd[2363]: time="2024-02-04T13:23:26.608700310+08:00" level=info msg="loading plugin \"io.containerd.grpc.v1.tasks\"..." type=io.containerd.grpc.v1

Feb 04 13:23:26 test systemd[1]: Started containerd container runtime.

Feb 04 13:23:26 test containerd[2363]: time="2024-02-04T13:23:26.608713874+08:00" level=info msg="loading plugin \"io.containerd.grpc.v1.version\"..." type=io.containerd.grpc.v1

Feb 04 13:23:26 test containerd[2363]: time="2024-02-04T13:23:26.608726440+08:00" level=info msg="loading plugin \"io.containerd.tracing.processor.v1.otlp\"..." type...rocessor.v1

Feb 04 13:23:26 test containerd[2363]: time="2024-02-04T13:23:26.608790190+08:00" level=info msg="skip loading plugin \"io.containerd.tracing.processor.v1.otlp\"..."...rocessor.v1

Feb 04 13:23:26 test containerd[2363]: time="2024-02-04T13:23:26.608815589+08:00" level=info msg="loading plugin \"io.containerd.internal.v1.tracing\"..." type=io.co...internal.v1

Feb 04 13:23:26 test containerd[2363]: time="2024-02-04T13:23:26.609120481+08:00" level=error msg="failed to initialize a tracing processor \"otlp\"" error="no OpenT...kip plugin"

Feb 04 13:23:26 test containerd[2363]: time="2024-02-04T13:23:26.610517213+08:00" level=info msg=serving... address=/run/containerd/containerd.sock.ttrpc

Feb 04 13:23:26 test containerd[2363]: time="2024-02-04T13:23:26.611638647+08:00" level=info msg=serving... address=/run/containerd/containerd.sock

Feb 04 13:23:26 test containerd[2363]: time="2024-02-04T13:23:26.612263169+08:00" level=info msg="containerd successfully booted in 0.053850s"

Hint: Some lines were ellipsized, use -l to show in full.

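# Installing containerd also provides the ctr command, which is mainly used to manage containers and container images.

# Use ctr to show version information for the containerd client and server.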

[root@test ~]# ctr version

Client:

Version: 1.6.28

Revision: ae07eda36dd25f8a1b98dfbf587313b99c0190bb

Go version: go1.20.13

Server:

Version: 1.6.28

Revision: ae07eda36dd25f8a1b98dfbf587313b99c0190bb

UUID: c839d914-41a9-469f-a3ab-590ced3f6acf

[root@test ~]#

Modify the containerd configuration file

# Create the configuration file

containerd config default > /etc/containerd/config.toml

# Apply the settings

sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml

sed -i 's/snapshotter = "overlayfs"/snapshotter = "native"/' /etc/containerd/config.toml

sed -i '/\[plugins\."io\.containerd\.grpc\.v1\.cri"\.registry\.mirrors\]/a\ [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]\n endpoint = ["https://registry.aliyuncs.com" ,"https://registry-1.docker.io"]' /etc/containerd/config.toml

# Remove this setting

[root@test kubernetes]# cat /etc/containerd/config.toml | grep -n "sandbox_image"

61: sandbox_image = "registry.k8s.io/pause:3.6"

systemctl restart containerd

# Do not set this by hand; leave it alone while testing

SystemdCgroup = false

SystemdCgroup = true

# Replaced with:

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://registry.aliyuncs.com" ,"https://registry-1.docker.io"]
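Because the config file contains two similarly named cgroup keys, it can help to check which of them ended up enabled after the edits. The sketch below is a hypothetical helper, not part of containerd. It only greps the file for `SystemdCgroup = true` (the option the sed command above targets) and for the legacy `systemd_cgroup = true`, which the troubleshooting section later identifies as the cause of a kubelet failure.

```shell
# Report how the two cgroup-related keys are set in a containerd config file.
# Prints one line per finding and returns non-zero if something looks off.
check_containerd_cgroup() {
  local cfg="${1:-/etc/containerd/config.toml}"
  local rc=0
  # Option written by the sed command above (matching is case-sensitive).
  if grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$cfg"; then
    echo "ok: SystemdCgroup = true"
  else
    echo "warn: SystemdCgroup is not true"
    rc=1
  fi
  # Legacy key; it should stay false.
  if grep -Eq '^[[:space:]]*systemd_cgroup[[:space:]]*=[[:space:]]*true' "$cfg"; then
    echo "warn: legacy systemd_cgroup = true is set"
    rc=1
  fi
  return "$rc"
}

# Example:
#   check_containerd_cgroup /etc/containerd/config.toml
```

The check is purely textual, so it does not replace restarting containerd and confirming that the service comes up.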

cni

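With a good network connection this step can be skipped; the plugins are installed automatically when the cluster starts.

# The command-line download below did not succeed, so the archive was downloaded manually (the listing below shows v1.1.1 rather than v1.4.0)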

wget https://github.com/containernetworking/plugins/releases/download/v1.4.0/cni-plugins-linux-amd64-v1.4.0.tgz

[root@test ~]# ls

anaconda-ks.cfg cni-plugins-linux-amd64-v1.1.1.tgz default.etcd etcd go go1.20.13.linux-amd64.tar.gz

[root@test ~]# mkdir -p /opt/cni/bin

[root@test ~]# tar -zxf cni-plugins-linux-amd64-v1.1.1.tgz -C /opt/cni/bin
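After extracting the archive, a quick check that the expected plugin binaries are present can save a failed cluster start. This is a small sketch; the plugin names passed in the example (bridge, host-local, loopback) are an assumption about what a basic single-node setup uses, not a list taken from the Kubernetes scripts.

```shell
# List which of the given CNI plugins are missing or not executable in a directory.
# Usage: missing_cni_plugins DIR PLUGIN [PLUGIN...]
missing_cni_plugins() {
  local dir="$1"
  shift
  local name
  for name in "$@"; do
    [ -x "${dir}/${name}" ] || echo "$name"
  done
}

# Example:
#   missing_cni_plugins /opt/cni/bin bridge host-local loopback
```

Empty output means every listed plugin was found.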

etcd

etcd must be installed in advance.

ETCD_VER=v3.5.12

curl -L https://storage.googleapis.com/etcd/${ETCD_VER}/etcd-${ETCD_VER}-linux-amd64.tar.gz -o /tmp/etcd-${ETCD_VER}-linux-amd64.tar.gz

mkdir -p ~/etcd

tar xzvf /tmp/etcd-${ETCD_VER}-linux-amd64.tar.gz -C ~/etcd --strip-components=1

rm -f /tmp/etcd-${ETCD_VER}-linux-amd64.tar.gz

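# Alternative, if the archive was downloaded manually to /data/soft:

tar xzvf /data/soft/etcd-${ETCD_VER}-linux-amd64.tar.gz -C ~/etcd --strip-components=1

# Append this line to the end of ~/.bashrc: export PATH="/root/etcd:${PATH}"

source ~/.bashrc

The etcd command is now available: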

[root@test ~]# etcd

{"level":"info","ts":"2024-02-03T09:30:46.682+0800","caller":"etcdmain/etcd.go:73","msg":"Running: ","args":["etcd"]}

rsync

yum install -y rsync

go

Version: the second-newest release was chosen rather than the latest.

wget https://golang.google.cn/dl/go1.20.13.linux-amd64.tar.gz

rm -rf /usr/local/go && tar -C /usr/local -xzf go1.20.13.linux-amd64.tar.gz

mkdir -p /data/soft/go

mkdir -p /data/soft/go/src

mkdir -p /data/soft/go/bin

export GOPATH="/data/soft/go"

export GOBIN="/data/soft/go/bin"

export PATH="/usr/local/go/bin:$GOPATH/bin:${PATH}"

source ~/.bashrc
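Once the PATH is updated, it is worth confirming that the `go` found on PATH is the version just installed and not an older system copy. The helpers below are a sketch: `version_ge` and `go_version_number` are hypothetical names, and the minimum version in the example (1.19) is an arbitrary placeholder rather than the requirement of any particular Kubernetes release.

```shell
# Return success when version $1 is greater than or equal to version $2.
# Relies on GNU sort -V (version sort).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# Read `go version` output on stdin and print only the number,
# e.g. "go version go1.20.13 linux/amd64" becomes "1.20.13".
go_version_number() {
  awk '{ sub(/^go/, "", $3); print $3 }'
}

# Example:
#   version_ge "$(go version | go_version_number)" 1.19 && echo "go is new enough"
```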

Set the Go module proxy

go env -w GO111MODULE=auto

go env -w GOPROXY="https://goproxy.cn,direct"

Install CFSSL

CFSSL is used to generate the Kubernetes certificates.

go install github.com/cloudflare/cfssl/cmd/...@latest

# Verify

[root@test ~]# cfssl

No command is given.

Usage:

Available commands:

sign

genkey

gencsr

ocspserve

revoke

certinfo

crl

version

gencert

ocspdump

selfsign

ocsprefresh

scan

bundle

serve

gencrl

ocspsign

info

print-defaults

Top-level flags:

[root@test ~]#

Download the Kubernetes source

mkdir -p $GOPATH/src/k8s.io && cd $GOPATH/src/k8s.io

git clone https://github.com/kubernetes/kubernetes.git

# Enter the checkout before switching to the release tag

cd kubernetes

git checkout -b myv1.24.16 v1.24.16

# Build

[root@test kubernetes]# make

+++ [0203 09:03:04] Building go targets for linux/amd64

k8s.io/kubernetes/cmd/kube-proxy (static)

k8s.io/kubernetes/cmd/kube-apiserver (static)

k8s.io/kubernetes/cmd/kube-controller-manager (static)

k8s.io/kubernetes/cmd/kubelet (non-static)

k8s.io/kubernetes/cmd/kubeadm (static)

k8s.io/kubernetes/cmd/kube-scheduler (static)

k8s.io/component-base/logs/kube-log-runner (static)

k8s.io/kube-aggregator (static)

k8s.io/apiextensions-apiserver (static)

k8s.io/kubernetes/cluster/gce/gci/mounter (non-static)

k8s.io/kubernetes/cmd/kubectl (static)

k8s.io/kubernetes/cmd/kubectl-convert (static)

github.com/onsi/ginkgo/v2/ginkgo (non-static)

k8s.io/kubernetes/test/e2e/e2e.test (test)

k8s.io/kubernetes/test/conformance/image/go-runner (non-static)

k8s.io/kubernetes/cmd/kubemark (static)

github.com/onsi/ginkgo/v2/ginkgo (non-static)

k8s.io/kubernetes/test/e2e_node/e2e_node.test (test)

# The build produces the following executables

[root@test bin]# ls

apiextensions-apiserver e2e.test go-runner kube-aggregator kube-controller-manager kubectl-convert kube-log-runner kube-proxy mounter

e2e_node.test ginkgo kubeadm kube-apiserver kubectl kubelet kubemark kube-scheduler

[root@test bin]# pwd

/root/go/src/k8s.io/kubernetes/_output/bin

# Add the output directory to PATH

export PATH="/root/go/src/k8s.io/kubernetes/_output/bin:${PATH}"

[root@test ~]# source .bashrc

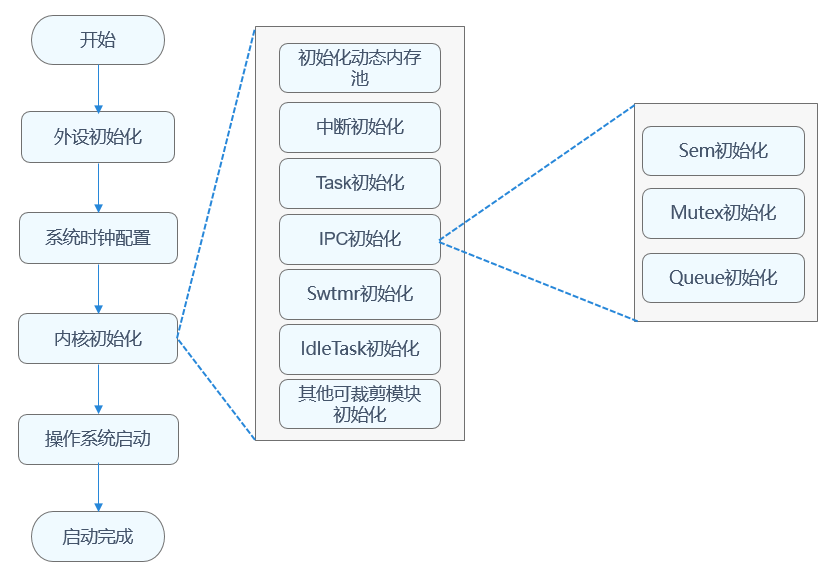

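Build and start a local single-node cluster

First, set the environment variable DBG=1 so that the binaries are built with all optimizations and inlining disabled (they must be disabled).

Setting ENABLE_DAEMON=true keeps the cluster components running as daemons after a successful start; otherwise the services stop once the script exits.

# This script does two things: it builds the binaries and brings up a single-node cluster (first run)

[root@test kubernetes]# /data/soft/go/src/k8s.io/kubernetes/hack/local-up-cluster.sh

make: Entering directory '/root/go/src/k8s.io/kubernetes'

cd /data/soft/go/src/k8s.io/kubernetes

# Second and later runs: -O skips the build and reuses the existing binaries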

ENABLE_DAEMON=true DBG=1 /data/soft/go/src/k8s.io/kubernetes/hack/local-up-cluster.sh -O

export KUBECONFIG=/var/run/kubernetes/admin.kubeconfig
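The difference between the first run and later runs comes down to whether the `-O` flag is passed. A small wrapper can make that choice automatically. This is a sketch under a simplifying assumption: it treats the presence of an executable `kube-apiserver` in the output directory as proof that a full build exists. `local_up_flags` and `K8S_ROOT` are hypothetical names.

```shell
# Decide whether local-up-cluster.sh can skip the build.
# Prints "-O" when a previously built kube-apiserver exists in the output dir,
# and prints nothing otherwise.
local_up_flags() {
  local out_dir="$1"
  if [ -x "${out_dir}/kube-apiserver" ]; then
    echo "-O"
  fi
}

# Example (K8S_ROOT is a hypothetical variable pointing at the source checkout):
#   K8S_ROOT=/data/soft/go/src/k8s.io/kubernetes
#   ENABLE_DAEMON=true DBG=1 "$K8S_ROOT/hack/local-up-cluster.sh" $(local_up_flags "$K8S_ROOT/_output/bin")
```

The command substitution is left unquoted on purpose so that an empty result adds no argument at all.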

Problems

The following problem came up; it was resolved step by step as described below.

[root@test kubernetes]# kubectl describe pod nginx

Name: nginx

Namespace: default

Priority: 0

Node: <none>

Labels: app=nginx

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Containers:

nginx:

Image: nginx

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-6rbkk (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

kube-api-access-6rbkk:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 6m13s default-scheduler no nodes available to schedule pods

Warning FailedScheduling 65s default-scheduler no nodes available to schedule pods

[root@test kubernetes]#

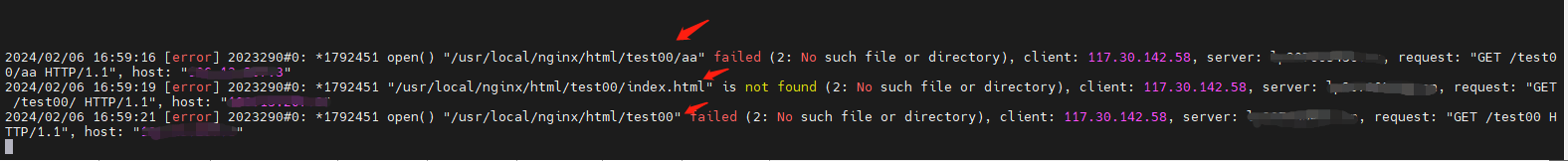

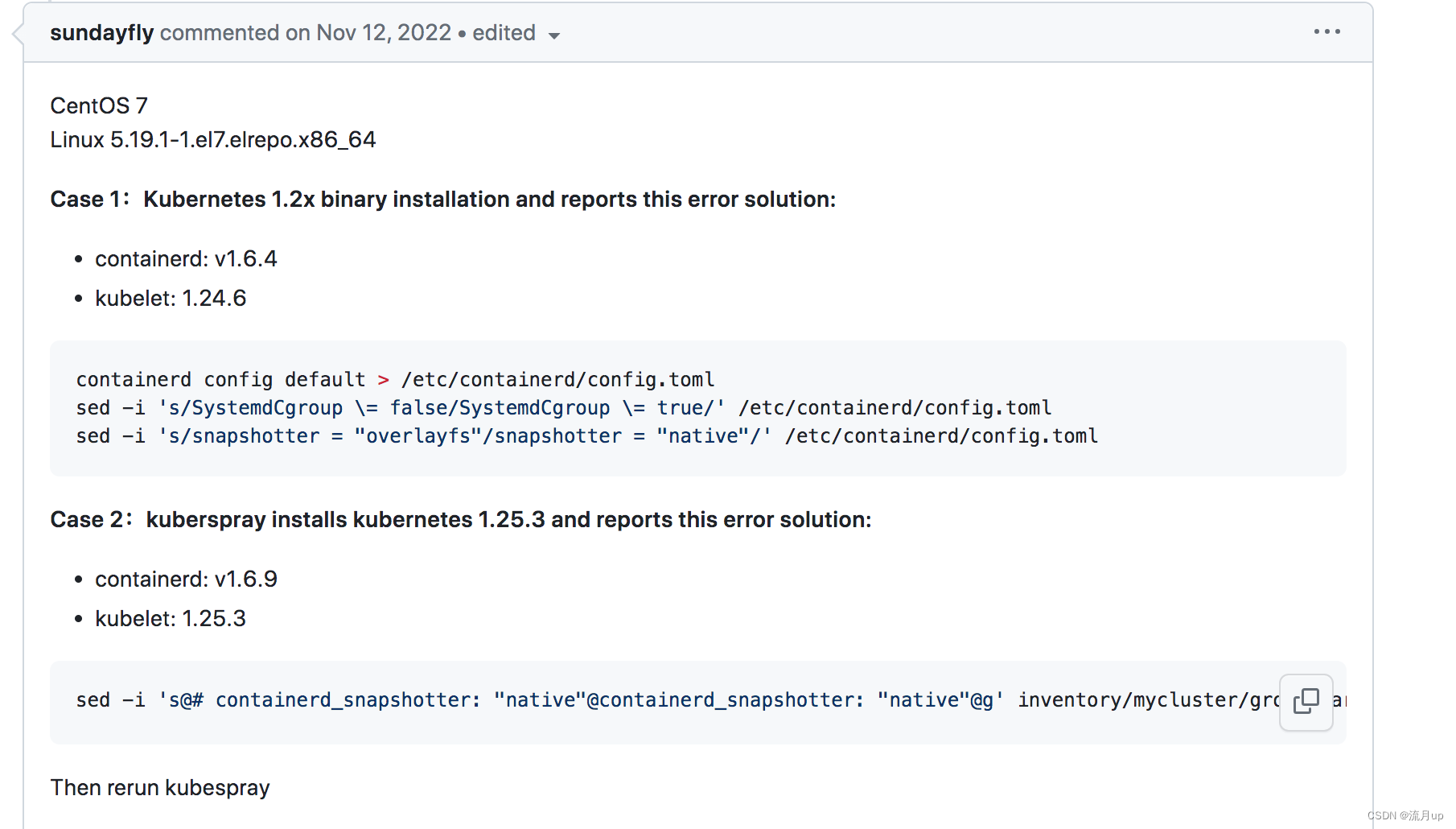

What to do when Kubernetes does not start properly

Check the log: cat /tmp/kubelet.log

--volume-stats-agg-period duration Specifies interval for kubelet to calculate and cache the volume disk usage for all pods and volumes. To disable volume calculations, set to a negative number. (default 1m0s) (DEPRECATED: This parameter should be set via the config file specified by the Kubelet's --config flag. See https://kubernetes.io/docs/tasks/administer-cluster/kubelet-config-file/ for more information.)

Error: failed to run Kubelet: failed to create kubelet: get remote runtime typed version failed: rpc error: code = Unimplemented desc = unknown service runtime.v1alpha2.RuntimeService

The failure was actually caused by a manual edit that set systemd_cgroup = true.

Fix

containerd config default > /etc/containerd/config.toml

sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml

sed -i 's/snapshotter = "overlayfs"/snapshotter = "native"/' /etc/containerd/config.toml

sed -i '/\[plugins\."io\.containerd\.grpc\.v1\.cri"\.registry\.mirrors\]/a\ [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]\n endpoint = ["https://registry.aliyuncs.com" ,"https://registry-1.docker.io"]' /etc/containerd/config.toml

[root@test kubernetes]# cat /etc/containerd/config.toml | grep -n "sandbox_image"

61: sandbox_image = "registry.k8s.io/pause:3.6"

[root@test kubernetes]# vim /etc/containerd/config.toml

systemctl restart containerd
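The kubelet error above says the CRI runtime service is not being served, so after restarting containerd it is reasonable to confirm that the CRI plugin actually loaded. `ctr plugins ls` lists the plugins with their status. The helper below is a sketch that assumes the usual column layout (TYPE, ID, PLATFORMS, STATUS) and pulls out the status of the `cri` plugin; `cri_plugin_status` is a hypothetical name.

```shell
# Read `ctr plugins ls` output on stdin and print the STATUS column
# (the last field) of the CRI plugin row.
cri_plugin_status() {
  awk '$1 == "io.containerd.grpc.v1" && $2 == "cri" { print $NF }'
}

# Example:
#   ctr plugins ls | cri_plugin_status
```

A status other than `ok` suggests the configuration still prevents the plugin from loading.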

# The following is no longer configured

[root@Master ~]# vim /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

[root@Master ~]# docker info|grep Cgroup

Cgroup Driver: systemd

Cgroup Version: 1

[root@Master ~]#

[root@Master ~]# systemctl restart docker

Log of a normal Kubernetes startup

[root@test kubernetes]# ENABLE_DAEMON=true DBG=1 /data/soft/go/src/k8s.io/kubernetes/hack/local-up-cluster.sh -O

skipped the build.

API SERVER secure port is free, proceeding...

Detected host and ready to start services. Doing some housekeeping first...

Using GO_OUT /data/soft/go/src/k8s.io/kubernetes/_output/bin

Starting services now!

Starting etcd

etcd --advertise-client-urls http://127.0.0.1:2379 --data-dir /tmp/tmp.SIy3gjvswf --listen-client-urls http://127.0.0.1:2379 --log-level=warn 2> "/tmp/etcd.log" >/dev/null

Waiting for etcd to come up.

+++ [0204 15:36:47] On try 2, etcd: : {"health":"true","reason":""}

{"header":{"cluster_id":"14841639068965178418","member_id":"10276657743932975437","revision":"2","raft_term":"2"}}Generating a 2048 bit RSA private key

....................................................................................+++

..+++

writing new private key to '/var/run/kubernetes/server-ca.key'

-----

Generating a 2048 bit RSA private key

.................+++

...................+++

writing new private key to '/var/run/kubernetes/client-ca.key'

-----

Generating a 2048 bit RSA private key

...........+++

...........+++

writing new private key to '/var/run/kubernetes/request-header-ca.key'

-----

2024/02/04 15:36:47 [INFO] generate received request

2024/02/04 15:36:47 [INFO] received CSR

2024/02/04 15:36:47 [INFO] generating key: rsa-2048

2024/02/04 15:36:48 [INFO] encoded CSR

2024/02/04 15:36:48 [INFO] signed certificate with serial number 503559090451029160883764206620515450001598207346

2024/02/04 15:36:48 [INFO] generate received request

2024/02/04 15:36:48 [INFO] received CSR

2024/02/04 15:36:48 [INFO] generating key: rsa-2048

2024/02/04 15:36:49 [INFO] encoded CSR

2024/02/04 15:36:49 [INFO] signed certificate with serial number 493741312692019681510347426741341846315781053599

2024/02/04 15:36:49 [INFO] generate received request

2024/02/04 15:36:49 [INFO] received CSR

2024/02/04 15:36:49 [INFO] generating key: rsa-2048

2024/02/04 15:36:49 [INFO] encoded CSR

2024/02/04 15:36:49 [INFO] signed certificate with serial number 152285310549920910920928212323269736605793904352

2024/02/04 15:36:49 [INFO] generate received request

2024/02/04 15:36:49 [INFO] received CSR

2024/02/04 15:36:49 [INFO] generating key: rsa-2048

2024/02/04 15:36:50 [INFO] encoded CSR

2024/02/04 15:36:50 [INFO] signed certificate with serial number 125312127036331176903810097174162604078302808888

2024/02/04 15:36:50 [INFO] generate received request

2024/02/04 15:36:50 [INFO] received CSR

2024/02/04 15:36:50 [INFO] generating key: rsa-2048

2024/02/04 15:36:50 [INFO] encoded CSR

2024/02/04 15:36:50 [INFO] signed certificate with serial number 181140179293506272832484903295356268063767113896

2024/02/04 15:36:50 [INFO] generate received request

2024/02/04 15:36:50 [INFO] received CSR

2024/02/04 15:36:50 [INFO] generating key: rsa-2048

2024/02/04 15:36:50 [INFO] encoded CSR

2024/02/04 15:36:50 [INFO] signed certificate with serial number 103730507459626501912222037902428030421854814351

2024/02/04 15:36:50 [INFO] generate received request

2024/02/04 15:36:50 [INFO] received CSR

2024/02/04 15:36:50 [INFO] generating key: rsa-2048

2024/02/04 15:36:51 [INFO] encoded CSR

2024/02/04 15:36:51 [INFO] signed certificate with serial number 43096772975744811124579756033905702137004279739

2024/02/04 15:36:51 [INFO] generate received request

2024/02/04 15:36:51 [INFO] received CSR

2024/02/04 15:36:51 [INFO] generating key: rsa-2048

2024/02/04 15:36:51 [INFO] encoded CSR

2024/02/04 15:36:51 [INFO] signed certificate with serial number 592246123162051006791059223014302580834797916367

Waiting for apiserver to come up

+++ [0204 15:36:57] On try 5, apiserver: : ok

clusterrolebinding.rbac.authorization.k8s.io/kube-apiserver-kubelet-admin created

clusterrolebinding.rbac.authorization.k8s.io/kubelet-csr created

Cluster "local-up-cluster" set.

use 'kubectl --kubeconfig=/var/run/kubernetes/admin-kube-aggregator.kubeconfig' to use the aggregated API server

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

coredns addon successfully deployed.

Checking CNI Installation at /opt/cni/bin

WARNING : The kubelet is configured to not fail even if swap is enabled; production deployments should disable swap unless testing NodeSwap feature.

2024/02/04 15:36:59 [INFO] generate received request

2024/02/04 15:36:59 [INFO] received CSR

2024/02/04 15:36:59 [INFO] generating key: rsa-2048

2024/02/04 15:37:00 [INFO] encoded CSR

2024/02/04 15:37:00 [INFO] signed certificate with serial number 274826002626477023551022370298818852502781648689

kubelet ( 12990 ) is running.

wait kubelet ready

No resources found

No resources found

No resources found

No resources found

No resources found

No resources found

No resources found

127.0.0.1 NotReady <none> 1s v1.24.16

2024/02/04 15:37:15 [INFO] generate received request

2024/02/04 15:37:15 [INFO] received CSR

2024/02/04 15:37:15 [INFO] generating key: rsa-2048

2024/02/04 15:37:16 [INFO] encoded CSR

2024/02/04 15:37:16 [INFO] signed certificate with serial number 439607026402203447424075767894402727838125873987

Create default storage class for

storageclass.storage.k8s.io/standard created

Local Kubernetes cluster is running.

Logs:

/tmp/kube-apiserver.log

/tmp/kube-controller-manager.log

/tmp/kube-proxy.log

/tmp/kube-scheduler.log

/tmp/kubelet.log

To start using your cluster, run:

export KUBECONFIG=/var/run/kubernetes/admin.kubeconfig

cluster/kubectl.sh

Alternatively, you can write to the default kubeconfig:

export KUBERNETES_PROVIDER=local

cluster/kubectl.sh config set-cluster local --server=https://localhost:6443 --certificate-authority=/var/run/kubernetes/server-ca.crt

cluster/kubectl.sh config set-credentials myself --client-key=/var/run/kubernetes/client-admin.key --client-certificate=/var/run/kubernetes/client-admin.crt

cluster/kubectl.sh config set-context local --cluster=local --user=myself

cluster/kubectl.sh config use-context local

cluster/kubectl.sh

[root@test kubernetes]#

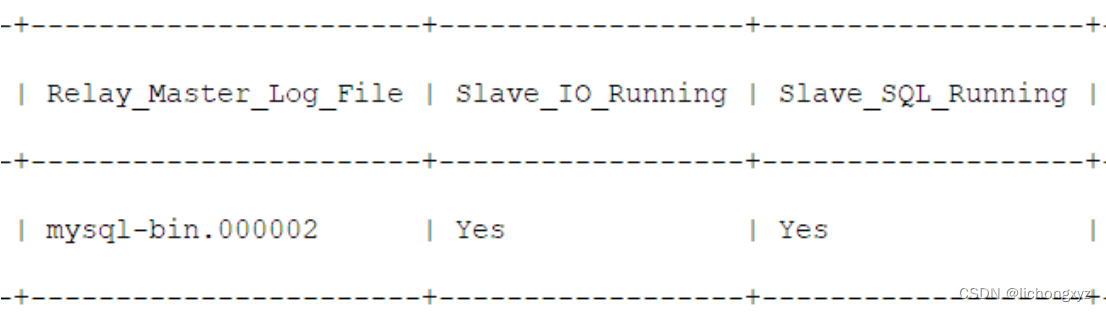

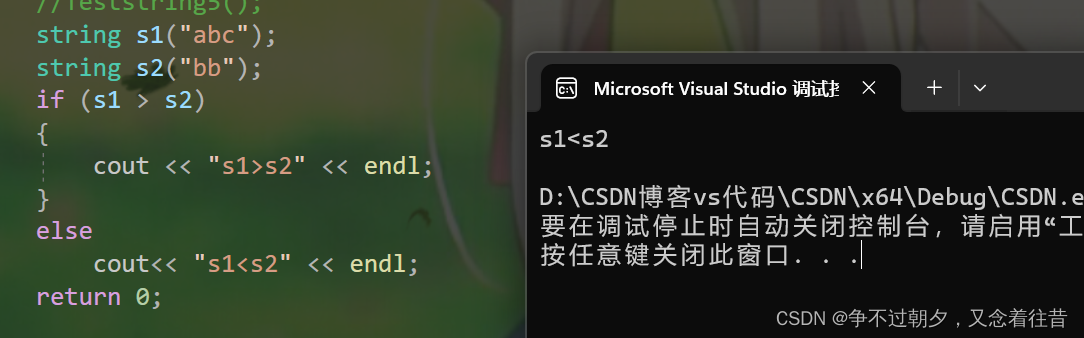

Test

[root@test kubernetes]# export KUBECONFIG=/var/run/kubernetes/admin.kubeconfig

[root@test kubernetes]#

[root@test kubernetes]# ./cluster/kubectl.sh get nodes

NAME STATUS ROLES AGE VERSION

127.0.0.1 Ready <none> 18m v1.24.16

[root@test kubernetes]# ./cluster/kubectl.sh get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 18m

[root@test kubernetes]# ./cluster/kubectl.sh get replicationcontrollers

No resources found in default namespace.

[root@test kubernetes]# ctr --namespace k8s.io image ls

REF TYPE DIGEST SIZE PLATFORMS LABELS

[root@test kubernetes]# ./cluster/kubectl.sh create -f test/fixtures/doc-yaml/user-guide/pod.yaml

pod/nginx created

[root@test kubernetes]# ./cluster/kubectl.sh get pods

NAME READY STATUS RESTARTS AGE

nginx 0/1 ContainerCreating 0 7s

# Use this to check whether anything went wrong

kubectl describe pod nginx

[root@test kubernetes]# ./cluster/kubectl.sh get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 89s

[root@test kubernetes]#
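In the transcript above the pod moves from ContainerCreating to Running after repeated `get pods` calls. The same wait can be scripted with a small polling helper. This is a sketch; `wait_for_output` is a hypothetical name, and the attempt count and delay in the example are arbitrary. It uses kubectl's `-o jsonpath` output to read the pod phase.

```shell
# Poll a command until it prints the wanted value or the attempts run out.
# Usage: wait_for_output WANT ATTEMPTS DELAY_SECONDS CMD [ARGS...]
# Returns 0 as soon as CMD prints WANT, 1 if it never does.
wait_for_output() {
  local want="$1" attempts="$2" delay="$3"
  shift 3
  local i=0
  while [ "$i" -lt "$attempts" ]; do
    if [ "$("$@" 2>/dev/null)" = "$want" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Example:
#   wait_for_output Running 60 2 ./cluster/kubectl.sh get pod nginx -o jsonpath='{.status.phase}'
```

If the helper times out, `kubectl describe pod nginx` usually shows why in its Events section.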

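Conclusion

This concludes the walkthrough of compiling the Kubernetes source on Linux and testing a single-node cluster. If you have questions, feel free to leave a comment.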
