Contents

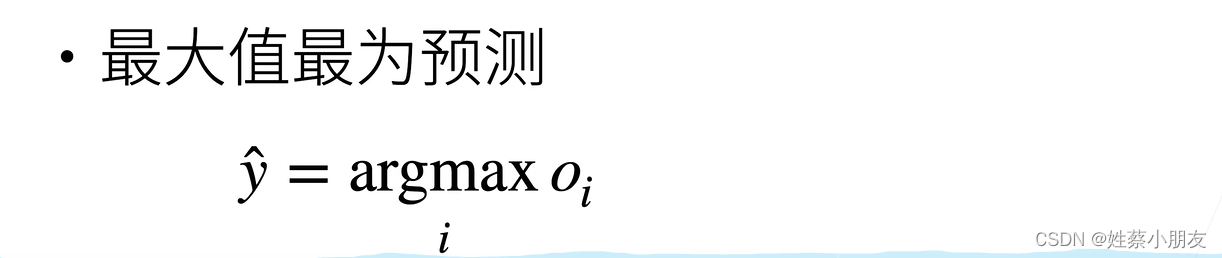

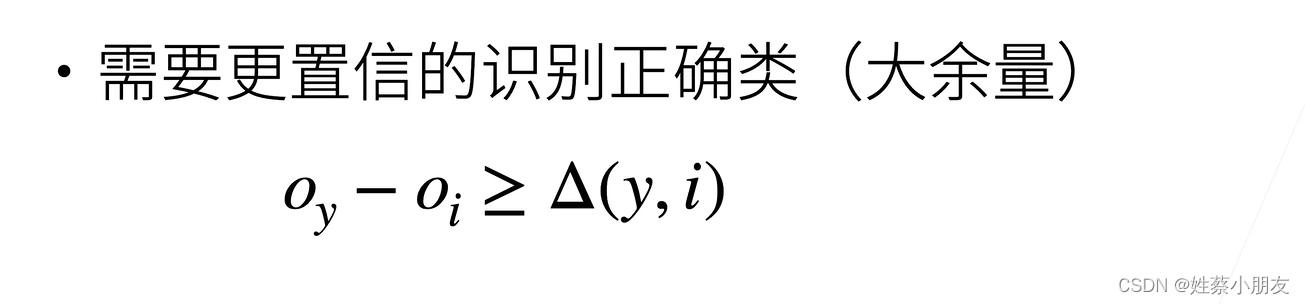

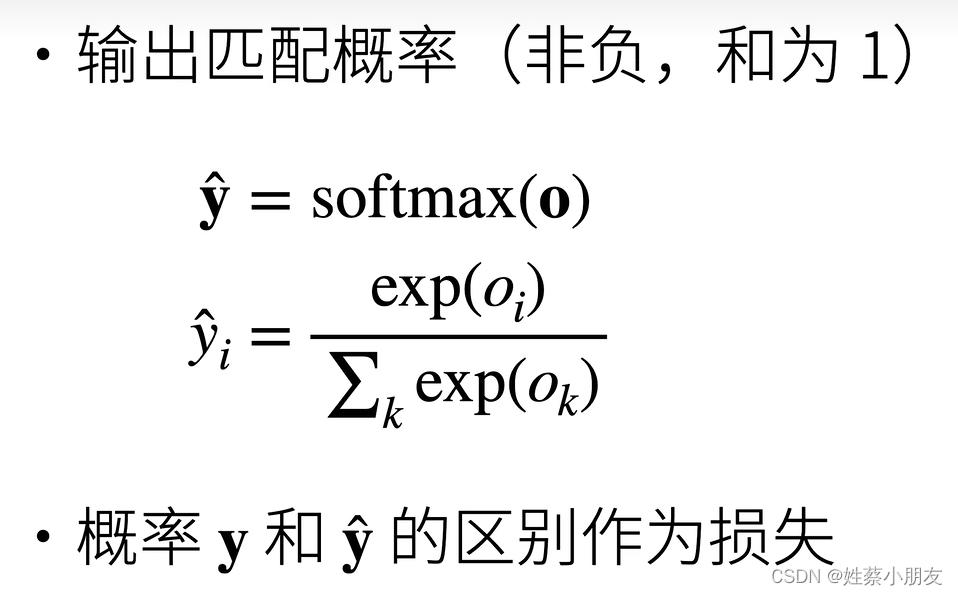

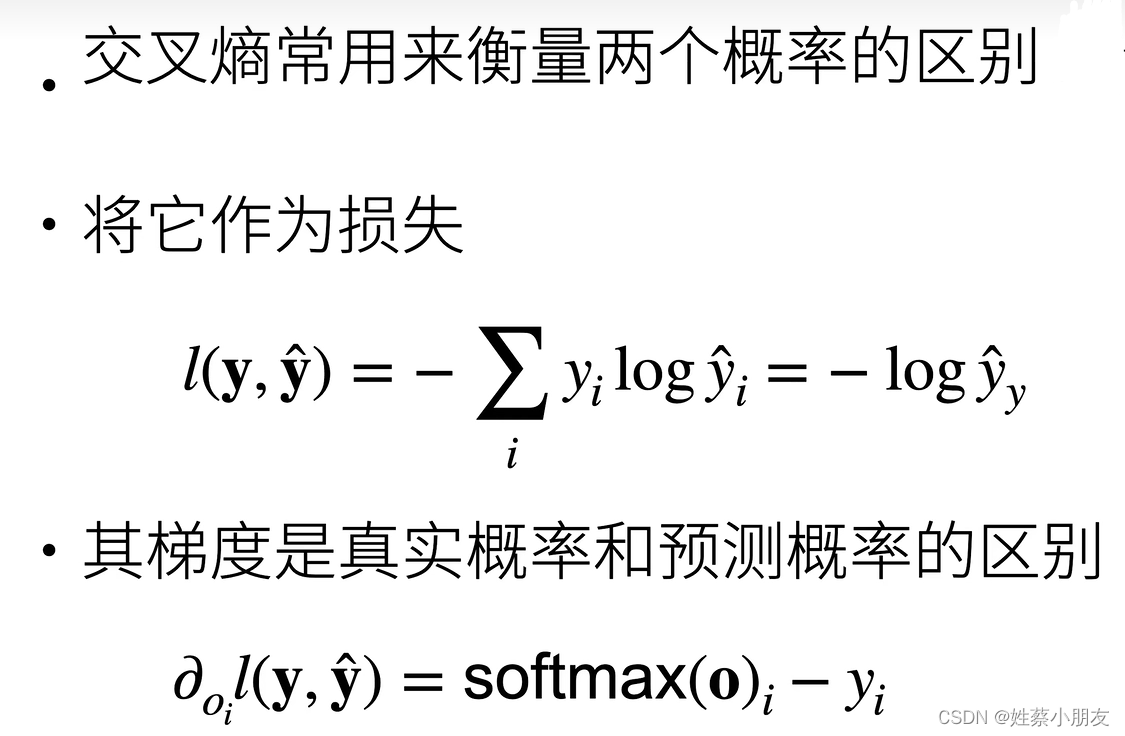

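1. Encoding the true class
2. Predicted values
3. Requirement on the objective
4. Using the Softmax model to convert the output confidences $o_i$ into match probabilities $\hat{y}_i$
5. Using cross-entropy as the loss function
6. Code implementation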

$y$ is the true label vector: exactly one of its positions is 1, and that position is the sample's true class (one-hot encoding).
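As a minimal sketch (assuming PyTorch, which the code section uses), integer class labels can be turned into the one-hot vectors described above with `torch.nn.functional.one_hot`. The labels and the class count are made up for illustration.

```python
import torch
import torch.nn.functional as F

# Hypothetical labels for 3 samples drawn from n = 4 classes
labels = torch.tensor([2, 0, 3])
y = F.one_hot(labels, num_classes=4)

print(y)
# tensor([[0, 0, 1, 0],
#         [1, 0, 0, 0],
#         [0, 0, 0, 1]])
print(y.sum(dim=1))  # each row contains exactly one 1 -> tensor([1, 1, 1])
```

The script in the code section keeps labels as integer indices and uses `gather` instead, which reads the same entry without materializing the one-hot matrix.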

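The confidence $o_y$ of the correct class $y$ must be much larger than the confidences $o_i$ of the incorrect classes, so that the recognized correct class is clearly separated from the wrong ones.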
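A small numeric illustration with invented logits: in both cases below class 0 wins the argmax, but only a large gap between $o_y$ and the other $o_i$ turns into a confident probability once the scores are normalized with softmax (PyTorch's built-in `torch.softmax` is used here for brevity).

```python
import torch

# Confidences (logits) o for 3 classes; the true class is y = 0 in both cases
small_gap = torch.tensor([2.0, 1.9, 0.0])  # o_y barely above the runner-up
large_gap = torch.tensor([5.0, 1.0, 0.0])  # o_y far above every other o_i

p_small = torch.softmax(small_gap, dim=0)
p_large = torch.softmax(large_gap, dim=0)

print(small_gap.argmax().item(), large_gap.argmax().item())  # 0 0
print(round(p_small[0].item(), 3))  # 0.49
print(round(p_large[0].item(), 3))  # 0.976
```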

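$\hat{y}$ is an $n$-dimensional vector whose elements are non-negative and sum to 1; $\hat{y}_i$ is the probability that the sample belongs to class $i$.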
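The standard softmax definition, which the `softmax` function in the code section implements, maps the confidences $o_1, \dots, o_n$ to

$$
\hat{y}_i = \operatorname{softmax}(\mathbf{o})_i = \frac{\exp(o_i)}{\sum_{k=1}^{n} \exp(o_k)}, \qquad i = 1, \dots, n.
$$

Each $\exp(o_i) > 0$, so every $\hat{y}_i$ is positive, and the shared denominator makes $\sum_{i=1}^{n} \hat{y}_i = 1$. Because $\exp$ is increasing, softmax preserves the ordering of the $o_i$, so $\arg\max_i \hat{y}_i = \arg\max_i o_i$.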

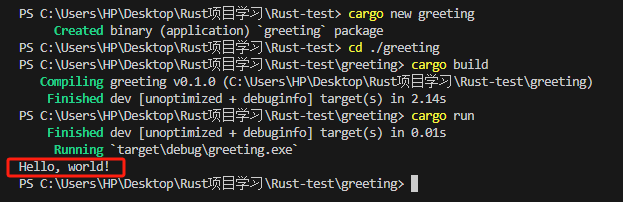

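$L$ measures the gap between the true probability distribution $y$ and the predicted distribution $\hat{y}$. Because $y$ is one-hot, the cross-entropy $L(y, \hat{y}) = -\sum_{i=1}^{n} y_i \log \hat{y}_i$ reduces to $-\log \hat{y}_y$: a classification loss ignores the predicted values of the incorrect classes and depends only on how large the predicted probability of the correct class is.

```python
import sys
import os

import matplotlib.pyplot as plt
import torch
import torchvision
from torchvision import transforms
from torch.utils import data
from d2l import torch as d2l

# Allow duplicate Intel OpenMP runtimes to load (avoids "OMP: Error #15" on some conda/Windows setups)
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

batch_size = 256

# ToTensor turns each 28x28 grayscale PIL image into a float tensor of shape (1, 28, 28) scaled to [0, 1]
trans = transforms.ToTensor()
mnist_train = torchvision.datasets.FashionMNIST(
    root="../data", train=True, transform=trans, download=True)
mnist_test = torchvision.datasets.FashionMNIST(
    root="../data", train=False, transform=trans, download=True)

print(len(mnist_train))  # 60000 training images
```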

```python
def get_fashion_mnist_labels(labels):
    """Return the text labels of the Fashion-MNIST dataset."""
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                   'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]

def show_fashion_mnist(images, labels):
    d2l.use_svg_display()
    # One row of subplots, one per image
    _, figs = plt.subplots(1, len(images), figsize=(12, 12))
    for f, img, lbl in zip(figs, images, labels):
        f.imshow(img.view((28, 28)).numpy())
        f.set_title(lbl)
        f.axes.get_xaxis().set_visible(False)
        f.axes.get_yaxis().set_visible(False)
    plt.show()

# Take one batch of 18 training images and show the first 10 with their text labels
train_data, train_targets = next(iter(data.DataLoader(mnist_train, batch_size=18)))
show_fashion_mnist(train_data[0:10], get_fashion_mnist_labels(train_targets[0:10]))
```

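```python
num_inputs = 784   # each 28x28 image is flattened into a 784-dimensional vector
num_outputs = 10   # 10 clothing classes

# Weights drawn from a normal distribution with mean 0 and std 0.01; bias starts at zero
W = torch.normal(0, 0.01, size=(num_inputs, num_outputs), requires_grad=True)
b = torch.zeros(num_outputs, requires_grad=True)

def softmax(X):
    X_exp = X.exp()
    partition = X_exp.sum(dim=1, keepdim=True)  # row-wise normalizer, shape (batch_size, 1)
    return X_exp / partition  # broadcasting divides each row by its own sum

def net(X):
    # Flatten to (batch_size, 784), compute the confidences O = XW + b, then apply softmax
    return softmax(torch.matmul(X.reshape(-1, num_inputs), W) + b)
```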
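The `softmax` above exponentiates the raw confidences directly, so in float32 any entry above roughly 88.7 overflows to `inf`, and `inf / inf` yields `nan`. A common remedy, sketched here as a hypothetical `stable_softmax` that is not part of the original script, subtracts each row's maximum before exponentiating; the result is mathematically identical because the factor $\exp(-\max_k o_k)$ cancels between numerator and denominator.

```python
import torch

def softmax(X):
    # Same as the script's version: exponentiate, then normalize each row
    X_exp = X.exp()
    return X_exp / X_exp.sum(dim=1, keepdim=True)

def stable_softmax(X):
    # Hypothetical helper: shift each row by its max so the largest exponent is exp(0) = 1
    X_shifted = X - X.max(dim=1, keepdim=True).values
    X_exp = X_shifted.exp()
    return X_exp / X_exp.sum(dim=1, keepdim=True)

O = torch.tensor([[1.0, 2.0, 3.0],
                  [100.0, 1.0, 0.0]])  # exp(100) overflows float32

print(softmax(O))         # the second row contains nan
print(stable_softmax(O))  # every row is finite and sums to 1
print(torch.allclose(stable_softmax(O), torch.softmax(O, dim=1)))  # True
```

For the small confidences produced by this model the two versions agree, which is why the script still trains; the shifted form only matters when logits grow large.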

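```python
# Toy example: 2 samples, 3 classes
y_hat = torch.tensor([[0.1, 0.3, 0.6], [0.3, 0.2, 0.5]])
y = torch.LongTensor([0, 2])
# gather(1, ...) picks, for each row, the predicted probability of that row's true class
print(y_hat.gather(1, y.view(-1, 1)))  # tensor([[0.1000], [0.5000]])

def cross_entropy(y_hat, y):
    return -torch.log(y_hat.gather(1, y.view(-1, 1)))

def accuracy(y_hat, y):
    # Fraction of samples whose highest-probability class equals the true class
    return (y_hat.argmax(dim=1) == y).float().mean().item()
```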
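As a sanity check, a short sketch on random logits compares the gather-based `cross_entropy` with PyTorch's built-in `torch.nn.functional.cross_entropy`. The built-in takes raw logits rather than probabilities, applies log-softmax internally, and averages over the batch by default, so the per-sample losses are averaged before comparing.

```python
import torch
import torch.nn.functional as F

def cross_entropy(y_hat, y):
    # Same as the script: -log of the predicted probability of the true class, one row per sample
    return -torch.log(y_hat.gather(1, y.view(-1, 1)))

torch.manual_seed(0)
logits = torch.randn(4, 10)           # 4 samples, 10 classes (made-up data)
labels = torch.tensor([3, 0, 9, 5])

manual = cross_entropy(torch.softmax(logits, dim=1), labels).mean()
builtin = F.cross_entropy(logits, labels)  # reduction='mean' by default

print(manual.item(), builtin.item())
print(torch.allclose(manual, builtin))  # True
```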

```python
def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    with torch.no_grad():  # evaluation only, so skip building the autograd graph
        for X, y in data_iter:
            acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
            n += y.shape[0]
    return acc_sum / n

num_epochs, lr = 10, 0.1

def train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size,
              params=None, lr=None, optimizer=None):
    for epoch in range(num_epochs):
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            y_hat = net(X)
            l = loss(y_hat, y).sum()  # summed over the batch; d2l.sgd divides by batch_size
            # Clear gradients from the previous step, whichever update path is used
            if optimizer is not None:
                optimizer.zero_grad()
            elif params is not None and params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()
            l.backward()
            if optimizer is not None:
                optimizer.step()
            else:
                d2l.sgd(params, lr, batch_size)  # minibatch SGD on [W, b]
            train_l_sum += l.item()
            train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()
            n += y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net)
        print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f'
              % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))
```
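To see how `evaluate_accuracy` accumulates correct counts across batches of unequal size, here is a self-contained sketch on a tiny synthetic dataset invented for illustration; the hypothetical `toy_net` simply returns its input as class scores.

```python
import torch
from torch.utils import data

def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    with torch.no_grad():
        for X, y in data_iter:
            acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
            n += y.shape[0]
    return acc_sum / n

# 5 samples with 2 class scores each; the argmax of the scores is [1, 0, 1, 0, 0]
X = torch.tensor([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4], [0.9, 0.1]])
y = torch.tensor([1, 0, 0, 0, 1])  # 3 of the 5 predictions match

def toy_net(X):
    return X  # hypothetical "network": the inputs already are class scores

# batch_size=2 splits the 5 samples into batches of 2, 2 and 1
loader = data.DataLoader(data.TensorDataset(X, y), batch_size=2)
print(evaluate_accuracy(loader, toy_net))  # 0.6
```

Dividing by the running sample count `n`, rather than averaging per-batch accuracies, keeps the smaller final batch from being over-weighted.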
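`train_ch3` delegates the parameter update to `d2l.sgd`. A minimal sketch of what a minibatch SGD step does, written as a hypothetical `sgd_step` modeled on the d2l helper rather than imported from it: because the loss is summed over the batch, the gradient is divided by `batch_size` to turn it into an average before it is scaled by the learning rate.

```python
import torch

def sgd_step(params, lr, batch_size):
    # Hypothetical helper: in-place update that is not recorded by autograd
    with torch.no_grad():
        for param in params:
            param -= lr * param.grad / batch_size
            param.grad.zero_()  # reset so the next backward() does not accumulate

# One step on a toy parameter: a "batch" of 4 identical losses w^2, summed
w = torch.tensor([1.0, -2.0], requires_grad=True)
loss = (w ** 2).sum() * 4   # gradient is 4 * 2w = [8, -16]
loss.backward()
sgd_step([w], lr=0.1, batch_size=4)

print(w)       # values are now about [0.8, -1.6]
print(w.grad)  # all zeros after the reset
```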

```python
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)
train_ch3(net, train_iter, test_iter, cross_entropy, num_epochs, batch_size, [W, b], lr)

# Predict on the first test batch; each title shows the true label above the predicted label
X, y = next(iter(test_iter))
true_labels = get_fashion_mnist_labels(y.numpy())
pred_labels = get_fashion_mnist_labels(net(X).argmax(dim=1).numpy())
titles = [true + '\n' + pred for true, pred in zip(true_labels, pred_labels)]
show_fashion_mnist(X[0:9], titles[0:9])
```