- 🍨 This post is a study log from the 🔗365-Day Deep Learning Training Camp

- 🍖 Original author: K同学啊 | tutoring and custom projects available

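Contents

- Preface

- 1 My Environment

- 2 Implementation and Results

- 2.1 Preparation

- 2.1.1 Importing Libraries

- 2.1.2 Setting the Device (use the GPU if available, otherwise the CPU)

- 2.1.3 Loading the Data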

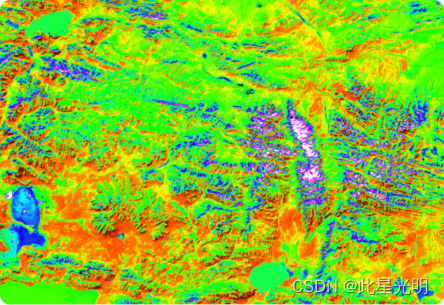

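- 2.1.4 Visualizing the Data

- 2.1.5 Image Transforms

- 2.1.6 Splitting the Dataset

- 2.1.7 Building the DataLoaders

- 2.1.8 Inspecting a Batch

- 2.2 Building the CNN Model

- 2.3 Training the Model

- 2.3.1 Setting Hyperparameters

- 2.3.2 Writing the Training Function

- 2.3.3 Writing the Test Function

- 2.3.4 Training

- 2.4 Visualizing the Results

- 2.5 Predicting a Specific Image

- 2.6 Model Evaluation

- 3 Key Points Explained

- 3.1 Going Further: VGG16 + BatchNormalization + Dropout + Global Average Pooling Instead of Fully Connected Layers (Lightweight Model)

- Summary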

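Preface

This post uses the PyTorch framework to build a CNN (a VGG16-style network) that recognizes coffee beans by roast level. It walks through the code and its output, and briefly discusses the concepts involved.

Keywords: adding a Dropout layer, replacing the fully connected layers with global average pooling (model lightweighting)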

1 My Environment

- OS: Windows 11

- Language: Python 3.8.6

- IDE: PyCharm 2020.2.3

- Deep learning environment:
  - torch == 1.9.1+cu111
  - torchvision == 0.10.1+cu111

- GPU: NVIDIA GeForce RTX 4070

2 Implementation and Results

2.1 Preparation

2.1.1 Importing Libraries

import torch
import torch.nn as nn
from torchvision import transforms, datasets
import time
from pathlib import Path
from PIL import Image
import torchsummary as summary
import torch.nn.functional as F
import copy
import matplotlib.pyplot as plt
plt.rcParams['font.sans-serif'] = ['SimHei']  # a font that can display Chinese labels
plt.rcParams['axes.unicode_minus'] = False  # display minus signs correctly
plt.rcParams['figure.dpi'] = 100  # figure resolution
import warnings
warnings.filterwarnings('ignore')  # suppress warnings that don't need to be printed

2.1.2 Setting the Device (use the GPU if available, otherwise the CPU)

"""Preparation - set the device"""
# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print("Using {} device".format(device))

Output

Using cuda device

2.1.3 Loading the Data

'''Preparation - load the data'''
data_dir = r"D:\DeepLearning\data\CoffeeBean"
data_dir = Path(data_dir)
data_paths = list(data_dir.glob('*'))
classeNames = [path.name for path in data_paths]  # path.name gives the folder name on any OS, no "\\" splitting needed
print(classeNames)

Output

['Dark', 'Green', 'Light', 'Medium']
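`glob('*')` returns entries in filesystem order and would also pick up any stray files in the folder. `ImageFolder`, by contrast, assigns class indices from the sorted names of the sub-directories, which is why `class_to_idx` further down comes out as Dark=0 through Medium=3. A minimal sketch that lists class names the same way, assuming a throwaway directory tree built with `tempfile` (the `make_fake_dataset` and `list_class_names` helpers are hypothetical):

```python
import tempfile
from pathlib import Path


def make_fake_dataset(root: Path) -> None:
    """Hypothetical helper: one folder per class (in scrambled order) plus a stray file."""
    for name in ["Medium", "Dark", "Light", "Green"]:
        (root / name).mkdir()
    (root / "readme.txt").write_text("not a class")


def list_class_names(data_dir: Path) -> list:
    """Sorted sub-directory names, independent of the OS path separator."""
    return sorted(p.name for p in data_dir.iterdir() if p.is_dir())


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    make_fake_dataset(root)
    class_names = list_class_names(root)
    class_to_idx = {name: i for i, name in enumerate(class_names)}
    print(class_names)   # ['Dark', 'Green', 'Light', 'Medium']
    print(class_to_idx)  # {'Dark': 0, 'Green': 1, 'Light': 2, 'Medium': 3}
```

Filtering with `is_dir()` keeps the stray `readme.txt` out of the class list.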

2.1.4 Visualizing the Data

'''Preparation - visualize the data'''
# Show up to 12 images from one class folder (any of Dark/Green/Light/Medium works)
subfolder = Path(data_dir) / "Dark"
image_files = [p.resolve() for p in subfolder.glob('*') if p.suffix.lower() in [".jpg", ".png", ".jpeg"]]
plt.figure(figsize=(10, 6))
for i, image_file in enumerate(image_files[:12]):
    ax = plt.subplot(3, 4, i + 1)
    img = Image.open(str(image_file))
    plt.imshow(img)
    plt.axis("off")
# Display the images
plt.tight_layout()
plt.show()

2.1.5 Image Transforms

'''Preparation - image transforms'''
total_datadir = data_dir
# For more on transforms.Compose, see: https://blog.csdn.net/qq_38251616/article/details/124878863
train_transforms = transforms.Compose([
    transforms.Resize([224, 224]),  # resize every input image to the same size
    transforms.ToTensor(),  # convert a PIL Image or numpy.ndarray to a tensor scaled to [0, 1]
    transforms.Normalize(  # standardize each channel as (x - mean) / std, which helps the model converge
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])  # the standard ImageNet channel statistics, not values computed from this dataset
])
total_data = datasets.ImageFolder(total_datadir, transform=train_transforms)
print(total_data)
print(total_data.class_to_idx)

Output

Dataset ImageFolder

Number of datapoints: 1200

Root location: D:\DeepLearning\data\CoffeeBean

StandardTransform

Transform: Compose(

Resize(size=[224, 224], interpolation=bilinear, max_size=None, antialias=None)

ToTensor()

Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

)

{'Dark': 0, 'Green': 1, 'Light': 2, 'Medium': 3}
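`ToTensor()` maps 8-bit pixel values to [0, 1], and `Normalize` then computes `(x - mean) / std` independently for each channel. A small worked example in plain Python (no torch needed) showing the range each channel ends up in after both steps:

```python
MEAN = [0.485, 0.456, 0.406]  # ImageNet channel means (R, G, B)
STD = [0.229, 0.224, 0.225]   # ImageNet channel standard deviations


def normalize_pixel(value_0_255: int, channel: int) -> float:
    """Mimic ToTensor() followed by Normalize() for a single pixel value."""
    x = value_0_255 / 255.0                    # ToTensor: scale to [0, 1]
    return (x - MEAN[channel]) / STD[channel]  # Normalize: standardize


for c, name in enumerate("RGB"):
    lo, hi = normalize_pixel(0, c), normalize_pixel(255, c)
    print(f"{name}: [{lo:.3f}, {hi:.3f}]")
# R: [-2.118, 2.249]
# G: [-2.036, 2.429]
# B: [-1.804, 2.640]
```

The result is centered near zero with roughly unit scale, but it is not literally a standard normal distribution; it only matches one if the images share ImageNet's statistics.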

2.1.6 Splitting the Dataset

'''Preparation - split the dataset'''
train_size = int(0.8 * len(total_data))  # training set size: 80% of the data, truncated to an integer
test_size = len(total_data) - train_size  # test set size: whatever remains
# torch.utils.data.random_split() randomly splits total_data into subsets of the given
# lengths ([train_size, test_size]) and returns them as train_dataset and test_dataset.
train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])
print("train_dataset={}\ntest_dataset={}".format(train_dataset, test_dataset))
print("train_size={}\ntest_size={}".format(train_size, test_size))

Output

train_dataset=<torch.utils.data.dataset.Subset object at 0x0000021C2423A610>

test_dataset=<torch.utils.data.dataset.Subset object at 0x0000021C2423A5B0>

train_size=960

test_size=240
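`random_split` draws a fresh permutation on every run, so the 960/240 split (and with it the reported test accuracy) changes between runs. A minimal sketch that makes the split reproducible by passing a seeded `torch.Generator`, assuming a seed of 42 chosen purely for illustration; a `range` stands in for the 1,200-image `ImageFolder`:

```python
import torch
from torch.utils.data import random_split

dataset = range(1200)  # stand-in for the 1,200-image ImageFolder
train_size = int(0.8 * len(dataset))
test_size = len(dataset) - train_size

# Two splits made with identically seeded generators produce the same indices
split_a = random_split(dataset, [train_size, test_size], generator=torch.Generator().manual_seed(42))
split_b = random_split(dataset, [train_size, test_size], generator=torch.Generator().manual_seed(42))

print(len(split_a[0]), len(split_a[1]))          # 960 240
print(split_a[0].indices == split_b[0].indices)  # True
```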

2.1.7 Building the DataLoaders

'''Preparation - build the DataLoaders'''
batch_size = 32
train_dl = torch.utils.data.DataLoader(train_dataset,
                                       batch_size=batch_size,
                                       shuffle=True,
                                       num_workers=1)
test_dl = torch.utils.data.DataLoader(test_dataset,
                                      batch_size=batch_size,
                                      shuffle=False,  # shuffling is unnecessary for evaluation
                                      num_workers=1)

2.1.8 Inspecting a Batch

'''Preparation - inspect a batch'''
for X, y in test_dl:
    print("Shape of X [N, C, H, W]: ", X.shape)
    print("Shape of y: ", y.shape, y.dtype)
    break

Output

Shape of X [N, C, H, W]: torch.Size([32, 3, 224, 224])

Shape of y: torch.Size([32]) torch.int64
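With `batch_size = 32` and the default `drop_last=False`, each loader yields `ceil(len(dataset) / batch_size)` batches, and the last one may be smaller. A quick check for this split (the `batches` helper is just for illustration):

```python
import math


def batches(n_samples: int, batch_size: int):
    """Return (number of batches, size of the last batch) for drop_last=False."""
    num_batches = math.ceil(n_samples / batch_size)
    last_batch = n_samples - (num_batches - 1) * batch_size
    return num_batches, last_batch


for name, n in [("train", 960), ("test", 240)]:
    num, last = batches(n, 32)
    print(f"{name}: {num} batches, last batch has {last} images")
# train: 30 batches, last batch has 32 images
# test: 8 batches, last batch has 16 images
```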

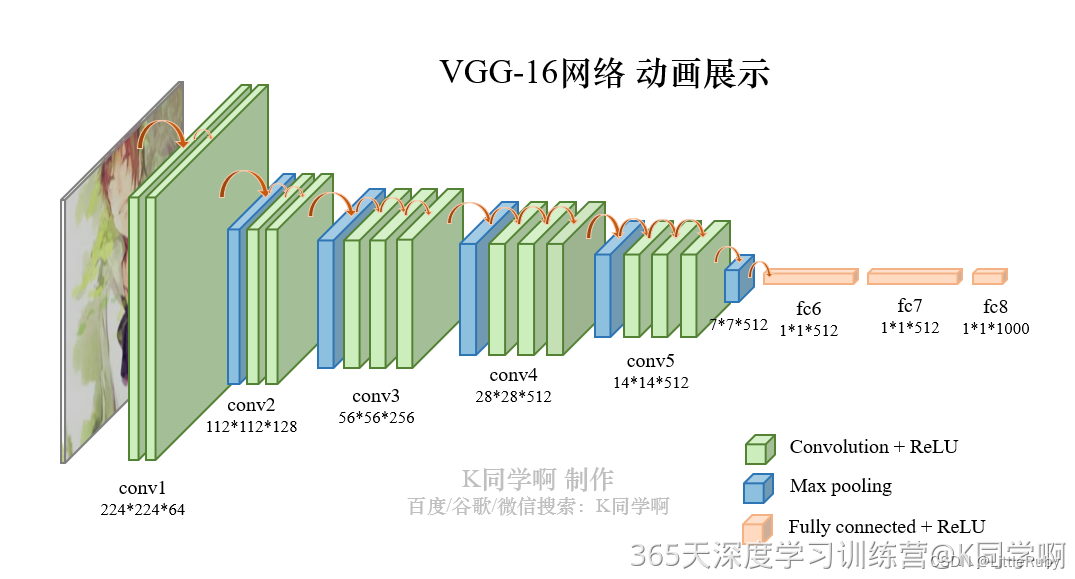

2.2 Building the CNN Model

"""Build the CNN"""
class vgg16net(nn.Module):
    def __init__(self):
        super(vgg16net, self).__init__()
        # Convolutional block 1
        self.block1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
        )
        # Convolutional block 2
        self.block2 = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
        )
        # Convolutional block 3
        self.block3 = nn.Sequential(
            nn.Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
        )
        # Convolutional block 4
        self.block4 = nn.Sequential(
            nn.Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
        )
        # Convolutional block 5
        self.block5 = nn.Sequential(
            nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
        )
        # Fully connected layers for classification
        self.classifier = nn.Sequential(
            nn.Linear(in_features=512 * 7 * 7, out_features=4096),
            nn.ReLU(),
            nn.Linear(in_features=4096, out_features=4096),
            nn.ReLU(),
            nn.Linear(in_features=4096, out_features=4)
        )

    def forward(self, x):
        x = self.block1(x)
        x = self.block2(x)
        x = self.block3(x)
        x = self.block4(x)
        x = self.block5(x)
        x = torch.flatten(x, start_dim=1)
        x = self.classifier(x)
        return x

model = vgg16net().to(device)
print(model)
print(summary.summary(model, (3, 224, 224)))  # show the parameter count and related statistics

Output

vgg16net(

(block1): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU()

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU()

(4): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), padding=0, dilation=1, ceil_mode=False)

)

(block2): Sequential(

(0): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU()

(2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU()

(4): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), padding=0, dilation=1, ceil_mode=False)

)

(block3): Sequential(

(0): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU()

(2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU()

(4): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(5): ReLU()

(6): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), padding=0, dilation=1, ceil_mode=False)

)

(block4): Sequential(

(0): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU()

(2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU()

(4): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(5): ReLU()

(6): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), padding=0, dilation=1, ceil_mode=False)

)

(block5): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU()

(2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU()

(4): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(5): ReLU()

(6): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), padding=0, dilation=1, ceil_mode=False)

)

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU()

(2): Linear(in_features=4096, out_features=4096, bias=True)

(3): ReLU()

(4): Linear(in_features=4096, out_features=4, bias=True)

)

)

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 224, 224] 1,792

ReLU-2 [-1, 64, 224, 224] 0

Conv2d-3 [-1, 64, 224, 224] 36,928

ReLU-4 [-1, 64, 224, 224] 0

MaxPool2d-5 [-1, 64, 112, 112] 0

Conv2d-6 [-1, 128, 112, 112] 73,856

ReLU-7 [-1, 128, 112, 112] 0

Conv2d-8 [-1, 128, 112, 112] 147,584

ReLU-9 [-1, 128, 112, 112] 0

MaxPool2d-10 [-1, 128, 56, 56] 0

Conv2d-11 [-1, 256, 56, 56] 295,168

ReLU-12 [-1, 256, 56, 56] 0

Conv2d-13 [-1, 256, 56, 56] 590,080

ReLU-14 [-1, 256, 56, 56] 0

Conv2d-15 [-1, 256, 56, 56] 590,080

ReLU-16 [-1, 256, 56, 56] 0

MaxPool2d-17 [-1, 256, 28, 28] 0

Conv2d-18 [-1, 512, 28, 28] 1,180,160

ReLU-19 [-1, 512, 28, 28] 0

Conv2d-20 [-1, 512, 28, 28] 2,359,808

ReLU-21 [-1, 512, 28, 28] 0

Conv2d-22 [-1, 512, 28, 28] 2,359,808

ReLU-23 [-1, 512, 28, 28] 0

MaxPool2d-24 [-1, 512, 14, 14] 0

Conv2d-25 [-1, 512, 14, 14] 2,359,808

ReLU-26 [-1, 512, 14, 14] 0

Conv2d-27 [-1, 512, 14, 14] 2,359,808

ReLU-28 [-1, 512, 14, 14] 0

Conv2d-29 [-1, 512, 14, 14] 2,359,808

ReLU-30 [-1, 512, 14, 14] 0

MaxPool2d-31 [-1, 512, 7, 7] 0

Linear-32 [-1, 4096] 102,764,544

ReLU-33 [-1, 4096] 0

Linear-34 [-1, 4096] 16,781,312

ReLU-35 [-1, 4096] 0

Linear-36 [-1, 4] 16,388

================================================================

Total params: 134,276,932

Trainable params: 134,276,932

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 218.52

Params size (MB): 512.23

Estimated Total Size (MB): 731.32

----------------------------------------------------------------

None
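The trailing `None` appears because torchsummary's `summary()` prints the table itself and returns `None`, which the outer `print()` then prints. The numbers in the table can be checked by hand. Each 3×3 convolution with padding 1 keeps the spatial size, and each of the five 2×2 max-pools halves it, so 224 shrinks to 224 / 2^5 = 7 and the flattened feature vector has 512 × 7 × 7 = 25,088 entries. A conv layer has `(k·k·C_in + 1)·C_out` parameters and a linear layer `(in + 1)·out`:

```python
def conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights plus one bias per output channel."""
    return (k * k * c_in + 1) * c_out


def linear_params(n_in: int, n_out: int) -> int:
    """Weights plus one bias per output feature."""
    return (n_in + 1) * n_out


# (in_channels, out_channels) for the 13 conv layers of VGG16
convs = [(3, 64), (64, 64),
         (64, 128), (128, 128),
         (128, 256), (256, 256), (256, 256),
         (256, 512), (512, 512), (512, 512),
         (512, 512), (512, 512), (512, 512)]

spatial = 224 // 2 ** 5  # five 2x2 max-pools: 224 -> 7
flat = 512 * spatial * spatial

conv_total = sum(conv_params(i, o) for i, o in convs)
fc_total = linear_params(flat, 4096) + linear_params(4096, 4096) + linear_params(4096, 4)

print(flat)                   # 25088
print(conv_total)             # 14714688
print(fc_total)               # 119562244
print(conv_total + fc_total)  # 134276932, matching "Total params" above
```

The three fully connected layers hold about 89% of all parameters, which is what section 3.1 targets.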

2.3 Training the Model

2.3.1 Setting Hyperparameters

"""Training - set hyperparameters"""
loss_fn = nn.CrossEntropyLoss()  # loss measuring how far the output is from the true label; cross-entropy is the standard loss for image classification
learn_rate = 1e-4  # learning rate
optimizer1 = torch.optim.SGD(model.parameters(), lr=learn_rate)  # SGD (stochastic gradient descent) optimizer; defined as an alternative, not used below
optimizer2 = torch.optim.Adam(model.parameters(), lr=learn_rate)
lr_opt = optimizer2
model_opt = optimizer2
# Option 2: use PyTorch's built-in learning-rate scheduler
lambda1 = lambda epoch: 0.92 ** (epoch // 4)
# optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)
scheduler = torch.optim.lr_scheduler.LambdaLR(lr_opt, lr_lambda=lambda1)  # choose the schedule: multiply the LR by 0.92 every 4 epochs
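`LambdaLR` sets each parameter group's learning rate to `initial_lr * lr_lambda(last_epoch)`, where `last_epoch` counts the `scheduler.step()` calls made so far. Because the training loop calls `scheduler.step()` before reading the LR, the value printed for epoch `e` (1-based) is `1e-4 * 0.92 ** (e // 4)`. A plain-Python sketch of that formula, which reproduces the `Lr` column of the training log:

```python
learn_rate = 1e-4


def lambda1(epoch: int) -> float:
    """Same multiplier as the lambda above: decay by 0.92 every 4 epochs."""
    return 0.92 ** (epoch // 4)


def printed_lr(epoch_1_based: int) -> float:
    """LR read after `epoch_1_based` calls to scheduler.step()."""
    return learn_rate * lambda1(epoch_1_based)


for e in (1, 3, 4, 8, 12, 40):
    print(f"Epoch {e:2d}: Lr {printed_lr(e):.2E}")
# Epoch  1: Lr 1.00E-04
# Epoch  3: Lr 1.00E-04
# Epoch  4: Lr 9.20E-05
# Epoch  8: Lr 8.46E-05
# Epoch 12: Lr 7.79E-05
# Epoch 40: Lr 4.34E-05
```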

2.3.2 Writing the Training Function

"""Training - training function"""
# Training loop
def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)  # size of the training set (960 images here)
    num_batches = len(dataloader)  # number of batches (960 / 32 = 30)
    train_loss, train_acc = 0, 0  # running loss and number of correct predictions

    for X, y in dataloader:  # X: image batch, y: true labels
        X, y = X.to(device), y.to(device)  # move the batch to the GPU/CPU

        # Compute the prediction error
        pred = model(X)  # network output
        loss = loss_fn(pred, y)  # loss between the network output and the true labels

        # Backpropagation
        optimizer.zero_grad()  # clear previous gradients
        loss.backward()  # backpropagate to compute the current gradients
        optimizer.step()  # update the parameters using the gradients

        # Record accuracy and loss
        train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()
        train_loss += loss.item()

    train_acc /= size
    train_loss /= num_batches
    return train_acc, train_loss
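The accuracy bookkeeping in `train()` counts correct predictions per batch with `pred.argmax(1) == y` and divides by the dataset size at the end. A tiny illustration with made-up logits for 3 images and 4 classes:

```python
import torch

# Made-up logits for a batch of 3 images over the 4 classes (Dark, Green, Light, Medium)
pred = torch.tensor([[2.0, 0.1, 0.3, 0.0],   # argmax -> 0
                     [0.2, 0.1, 1.5, 0.4],   # argmax -> 2
                     [0.3, 2.2, 0.1, 0.0]])  # argmax -> 1
y = torch.tensor([0, 3, 1])                  # true labels

correct = (pred.argmax(1) == y).type(torch.float).sum().item()
print(correct)           # 2.0
print(correct / len(y))  # 0.6666666666666666
```

Loss is handled differently: `CrossEntropyLoss` already averages over each batch by default, so `train_loss` is divided by the number of batches rather than by the number of images.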

2.3.3 Writing the Test Function

"""Training - test function"""
# The test function mirrors the training function, but since it performs no gradient
# descent (the weights are not updated), it does not need an optimizer
def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)  # size of the test set (240 images here)
    num_batches = len(dataloader)  # number of batches (240 / 32 = 7.5, rounded up to 8)
    test_loss, test_acc = 0, 0

    # Disable gradient tracking during evaluation to save memory and computation
    with torch.no_grad():
        for imgs, target in dataloader:
            imgs, target = imgs.to(device), target.to(device)

            # Compute the loss
            target_pred = model(imgs)
            loss = loss_fn(target_pred, target)

            test_loss += loss.item()
            test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()  # count correct predictions

    test_acc /= size
    test_loss /= num_batches
    return test_acc, test_loss
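`model.eval()` (called before `test()` in the training loop) and `torch.no_grad()` do different jobs: `eval()` switches layers such as Dropout and BatchNorm to inference behavior, while `no_grad()` stops autograd from recording operations. A small sketch with a stand-in model, not the VGG16 above:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Sequential(nn.Linear(8, 8), nn.Dropout(p=0.5), nn.Linear(8, 4))  # stand-in model
x = torch.randn(2, 8)

model.eval()                 # Dropout becomes the identity
with torch.no_grad():        # no autograd graph is recorded
    out1 = model(x)
    out2 = model(x)

print(out1.requires_grad)       # False
print(torch.equal(out1, out2))  # True: eval mode is deterministic
```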

2.3.4 Training

"""Training - run the training loop"""
epochs = 40
train_loss = []
train_acc = []
test_loss = []
test_acc = []
best_test_acc = 0

for epoch in range(epochs):
    milliseconds_t1 = int(time.time() * 1000)

    # Update the learning rate (only when using a custom schedule)
    # adjust_learning_rate(lr_opt, epoch, learn_rate)

    model.train()
    epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, model_opt)
    scheduler.step()  # update the learning rate (when using the built-in scheduler)

    model.eval()
    epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    # Get the current learning rate
    lr = lr_opt.state_dict()['param_groups'][0]['lr']

    milliseconds_t2 = int(time.time() * 1000)
    template = ('Epoch:{:2d}, duration:{}ms, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%,Test_loss:{:.3f}, Lr:{:.2E}')
    if best_test_acc < epoch_test_acc:
        best_test_acc = epoch_test_acc
        # Keep a copy of the best model so far
        best_model = copy.deepcopy(model)
        template = (
            'Epoch:{:2d}, duration:{}ms, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%,Test_loss:{:.3f}, Lr:{:.2E},Update the best model')
    print(
        template.format(epoch + 1, milliseconds_t2 - milliseconds_t1, epoch_train_acc * 100, epoch_train_loss, epoch_test_acc * 100, epoch_test_loss, lr))

# Save the best model's parameters to a file
PATH = './best_model.pth'  # file name for the saved parameters
torch.save(best_model.state_dict(), PATH)  # best_model, not model: model holds the last epoch's weights
print('Done')

Output

Epoch: 1, duration:8422ms, Train_acc:22.8%, Train_loss:1.389, Test_acc:28.3%,Test_loss:1.386, Lr:1.00E-04,Update the best model

Epoch: 2, duration:8256ms, Train_acc:27.8%, Train_loss:1.380, Test_acc:41.7%,Test_loss:1.182, Lr:1.00E-04,Update the best model

Epoch: 3, duration:8254ms, Train_acc:54.4%, Train_loss:0.875, Test_acc:71.7%,Test_loss:0.626, Lr:1.00E-04,Update the best model

Epoch: 4, duration:8322ms, Train_acc:65.5%, Train_loss:0.686, Test_acc:71.7%,Test_loss:0.647, Lr:9.20E-05

Epoch: 5, duration:8287ms, Train_acc:73.6%, Train_loss:0.535, Test_acc:67.1%,Test_loss:0.772, Lr:9.20E-05

Epoch: 6, duration:8259ms, Train_acc:85.4%, Train_loss:0.346, Test_acc:88.3%,Test_loss:0.282, Lr:9.20E-05,Update the best model

Epoch: 7, duration:8335ms, Train_acc:90.4%, Train_loss:0.274, Test_acc:90.0%,Test_loss:0.231, Lr:9.20E-05,Update the best model

Epoch: 8, duration:8290ms, Train_acc:94.0%, Train_loss:0.162, Test_acc:87.9%,Test_loss:0.330, Lr:8.46E-05

Epoch: 9, duration:8296ms, Train_acc:90.1%, Train_loss:0.286, Test_acc:94.6%,Test_loss:0.133, Lr:8.46E-05,Update the best model

Epoch:10, duration:8431ms, Train_acc:96.5%, Train_loss:0.108, Test_acc:95.4%,Test_loss:0.108, Lr:8.46E-05,Update the best model

Epoch:11, duration:8300ms, Train_acc:94.7%, Train_loss:0.145, Test_acc:95.0%,Test_loss:0.180, Lr:8.46E-05

Epoch:12, duration:8255ms, Train_acc:97.3%, Train_loss:0.080, Test_acc:96.2%,Test_loss:0.150, Lr:7.79E-05,Update the best model

Epoch:13, duration:8306ms, Train_acc:93.6%, Train_loss:0.205, Test_acc:90.4%,Test_loss:0.209, Lr:7.79E-05

Epoch:14, duration:8344ms, Train_acc:97.1%, Train_loss:0.076, Test_acc:97.1%,Test_loss:0.112, Lr:7.79E-05,Update the best model

Epoch:15, duration:8317ms, Train_acc:94.8%, Train_loss:0.154, Test_acc:97.1%,Test_loss:0.085, Lr:7.79E-05

Epoch:16, duration:8247ms, Train_acc:97.4%, Train_loss:0.072, Test_acc:97.5%,Test_loss:0.051, Lr:7.16E-05,Update the best model

Epoch:17, duration:8312ms, Train_acc:98.5%, Train_loss:0.033, Test_acc:97.9%,Test_loss:0.051, Lr:7.16E-05,Update the best model

Epoch:18, duration:8205ms, Train_acc:98.3%, Train_loss:0.039, Test_acc:93.3%,Test_loss:0.208, Lr:7.16E-05

Epoch:19, duration:8188ms, Train_acc:97.1%, Train_loss:0.088, Test_acc:96.7%,Test_loss:0.078, Lr:7.16E-05

Epoch:20, duration:8185ms, Train_acc:98.9%, Train_loss:0.028, Test_acc:97.5%,Test_loss:0.076, Lr:6.59E-05

Epoch:21, duration:8211ms, Train_acc:98.8%, Train_loss:0.038, Test_acc:97.5%,Test_loss:0.073, Lr:6.59E-05

Epoch:22, duration:8200ms, Train_acc:99.3%, Train_loss:0.025, Test_acc:97.5%,Test_loss:0.056, Lr:6.59E-05

Epoch:23, duration:8366ms, Train_acc:99.3%, Train_loss:0.020, Test_acc:98.3%,Test_loss:0.078, Lr:6.59E-05,Update the best model

Epoch:24, duration:8252ms, Train_acc:99.7%, Train_loss:0.012, Test_acc:98.8%,Test_loss:0.045, Lr:6.06E-05,Update the best model

Epoch:25, duration:8319ms, Train_acc:99.6%, Train_loss:0.007, Test_acc:98.3%,Test_loss:0.047, Lr:6.06E-05

Epoch:26, duration:8369ms, Train_acc:99.9%, Train_loss:0.004, Test_acc:98.3%,Test_loss:0.055, Lr:6.06E-05

Epoch:27, duration:8264ms, Train_acc:99.9%, Train_loss:0.004, Test_acc:98.8%,Test_loss:0.070, Lr:6.06E-05

Epoch:28, duration:8388ms, Train_acc:97.6%, Train_loss:0.075, Test_acc:97.5%,Test_loss:0.077, Lr:5.58E-05

Epoch:29, duration:8245ms, Train_acc:97.6%, Train_loss:0.070, Test_acc:97.1%,Test_loss:0.084, Lr:5.58E-05

Epoch:30, duration:8271ms, Train_acc:99.1%, Train_loss:0.020, Test_acc:96.2%,Test_loss:0.194, Lr:5.58E-05

Epoch:31, duration:8385ms, Train_acc:99.4%, Train_loss:0.015, Test_acc:98.3%,Test_loss:0.057, Lr:5.58E-05

Epoch:32, duration:8362ms, Train_acc:99.3%, Train_loss:0.022, Test_acc:98.3%,Test_loss:0.053, Lr:5.13E-05

Epoch:33, duration:8258ms, Train_acc:99.8%, Train_loss:0.005, Test_acc:98.8%,Test_loss:0.093, Lr:5.13E-05

Epoch:34, duration:8248ms, Train_acc:99.9%, Train_loss:0.003, Test_acc:99.2%,Test_loss:0.043, Lr:5.13E-05,Update the best model

Epoch:35, duration:8346ms, Train_acc:99.8%, Train_loss:0.004, Test_acc:98.8%,Test_loss:0.051, Lr:5.13E-05

Epoch:36, duration:8291ms, Train_acc:99.7%, Train_loss:0.012, Test_acc:97.9%,Test_loss:0.052, Lr:4.72E-05

Epoch:37, duration:8284ms, Train_acc:99.7%, Train_loss:0.008, Test_acc:97.1%,Test_loss:0.125, Lr:4.72E-05

Epoch:38, duration:8308ms, Train_acc:100.0%, Train_loss:0.002, Test_acc:98.8%,Test_loss:0.051, Lr:4.72E-05

Epoch:39, duration:8293ms, Train_acc:100.0%, Train_loss:0.000, Test_acc:98.8%,Test_loss:0.050, Lr:4.72E-05

Epoch:40, duration:8315ms, Train_acc:100.0%, Train_loss:0.000, Test_acc:98.8%,Test_loss:0.055, Lr:4.34E-05

Highest Test_acc: 99.2%

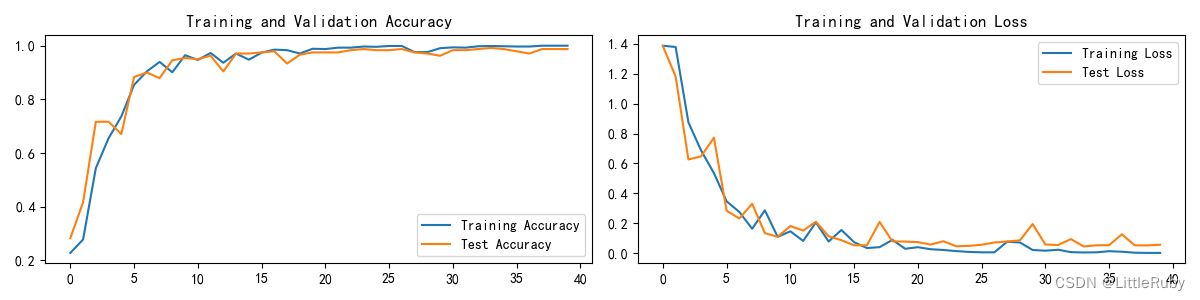

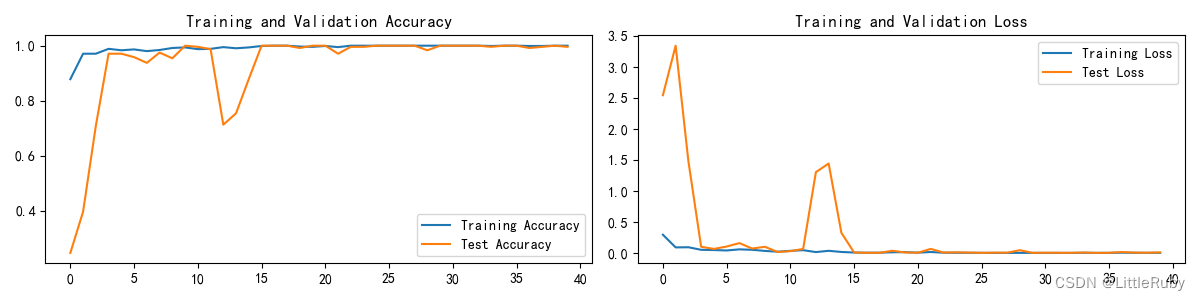
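The loop keeps `best_model = copy.deepcopy(model)`, but `model` itself ends with the last epoch's weights, so the saved file should come from `best_model` (or from a copy of its `state_dict`). A minimal sketch of the pattern, assuming a tiny stand-in model and made-up per-epoch accuracies; it snapshots only the `state_dict`, which is lighter than copying the whole module:

```python
import copy
import os
import tempfile

import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Linear(4, 2)                   # stand-in for vgg16net
fake_test_acc = [0.70, 0.95, 0.90, 0.93]  # made-up accuracies, one per "epoch"

best_acc, best_state = 0.0, None
for epoch, acc in enumerate(fake_test_acc):
    with torch.no_grad():
        model.weight.add_(1.0)            # pretend training changed the weights
    if acc > best_acc:
        best_acc = acc
        best_state = copy.deepcopy(model.state_dict())  # snapshot, not a reference to live tensors

path = os.path.join(tempfile.mkdtemp(), "best_model.pth")
torch.save(best_state, path)

restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(path))
print(best_acc)                                            # 0.95
print(torch.equal(restored.weight, model.weight))          # False: the last epoch differs
print(torch.equal(restored.weight, best_state["weight"]))  # True
```

The `deepcopy` matters: `state_dict()` returns tensors that share storage with the parameters, so without it later in-place updates would overwrite the snapshot.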

2.4 Visualizing the Results

"""Training - visualize the results"""
epochs_range = range(epochs)
plt.figure(figsize=(12, 3))
plt.subplot(1, 2, 1)
plt.plot(epochs_range, train_acc, label='Training Accuracy')
plt.plot(epochs_range, test_acc, label='Test Accuracy')
plt.legend(loc='lower right')
plt.title('Training and Validation Accuracy')
plt.subplot(1, 2, 2)
plt.plot(epochs_range, train_loss, label='Training Loss')
plt.plot(epochs_range, test_loss, label='Test Loss')
plt.legend(loc='upper right')
plt.title('Training and Validation Loss')
plt.show()

2.5 Predicting a Specific Image

def predict_one_image(image_path, model, transform, classes):
    test_img = Image.open(image_path).convert('RGB')
    plt.imshow(test_img)  # show the image being predicted
    plt.show()

    test_img = transform(test_img)
    img = test_img.to(device).unsqueeze(0)  # add a batch dimension: [1, C, H, W]

    model.eval()
    with torch.no_grad():  # inference only, no gradients needed
        output = model(img)

    _, pred = torch.max(output, 1)
    pred_class = classes[pred.item()]
    print(f'Prediction: {pred_class}')

# Load the saved parameters into the model
model.load_state_dict(torch.load(PATH, map_location=device))

"""Predict a specific image"""
classes = list(total_data.class_to_idx)
# Predict one image from the dataset (it may fall in either the training or the test split)
predict_one_image(image_path=str(Path(data_dir) / "Dark/dark (1).png"),
                  model=model,
                  transform=train_transforms,
                  classes=classes)

Output

Prediction: Dark
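`predict_one_image()` reports only the arg-max class. A possible extension, sketched here with a hypothetical helper `predict_with_confidence` and a stand-in linear model on a fake 3×8×8 input, also returns the softmax probability of the predicted class; in practice you would pass the trained VGG16 and a transformed 3×224×224 image tensor instead:

```python
import torch
import torch.nn as nn

classes = ['Dark', 'Green', 'Light', 'Medium']


def predict_with_confidence(model: nn.Module, img: torch.Tensor, classes: list):
    """Hypothetical helper: img is a single [C, H, W] tensor that has already been transformed."""
    model.eval()
    with torch.no_grad():
        logits = model(img.unsqueeze(0))         # add batch dimension -> [1, num_classes]
        probs = torch.softmax(logits, dim=1)[0]  # probabilities for the single image
    idx = int(probs.argmax())
    return classes[idx], probs[idx].item()


torch.manual_seed(0)
stand_in = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, len(classes)))  # not the real model
label, confidence = predict_with_confidence(stand_in, torch.randn(3, 8, 8), classes)
print(label in classes, 0.0 <= confidence <= 1.0)  # True True
```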

2.6 Model Evaluation

"""Model evaluation"""
best_model.eval()
epoch_test_acc, epoch_test_loss = test(test_dl, best_model, loss_fn)
# Check that this matches the highest accuracy recorded during training
print(epoch_test_acc, epoch_test_loss)

Output

0.9916666666666667 0.05394061221022639
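With only 240 test images, each image is worth 1/240 ≈ 0.42 percentage points, which is why Test_acc moves in coarse steps (97.9%, 98.3%, 98.8%, 99.2%). The accuracy printed above works out to 238 correct predictions, i.e. two misclassified images:

```python
test_size = 240
acc = 0.9916666666666667  # value printed above

correct = round(acc * test_size)
print(correct, test_size - correct)  # 238 2
print(f"{100 / test_size:.3f}")      # 0.417 percentage points per image

# The accuracy values that are even possible near the top of the range
for k in range(236, 241):
    print(f"{k}/{test_size} = {100 * k / test_size:.1f}%")
```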

3 Key Points Explained

3.1 Going Further: VGG16 + BatchNormalization + Dropout + Global Average Pooling Instead of Fully Connected Layers (Lightweight Model)

# Lightweight model: global average pooling replaces the fully connected layers, plus BN and dropout
class vgg16_BN_dropout_globalavgpool(nn.Module):
    def __init__(self):
        super(vgg16_BN_dropout_globalavgpool, self).__init__()
        # Convolutional block 1
        self.block1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.BatchNorm2d(64),
            nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
        )
        # Convolutional block 2
        self.block2 = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.BatchNorm2d(128),
            nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
        )
        # Convolutional block 3
        self.block3 = nn.Sequential(
            nn.Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.BatchNorm2d(256),
            nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
        )
        # Convolutional block 4
        self.block4 = nn.Sequential(
            nn.Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.BatchNorm2d(512),
            nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
        )
        # Convolutional block 5
        self.block5 = nn.Sequential(
            nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)),
            nn.ReLU(),
            nn.BatchNorm2d(512),
            nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
        )
        self.dropout = nn.Dropout(p=0.5)
        self.avgpool = nn.AdaptiveAvgPool2d(output_size=(1, 1))
        # A single fully connected layer for classification (512 pooled features -> 4 classes)
        self.classifier = nn.Sequential(
            nn.Linear(in_features=512, out_features=4),
        )

    def forward(self, x):
        x = self.block1(x)
        x = self.block2(x)
        x = self.block3(x)
        x = self.block4(x)
        x = self.block5(x)
        x = self.dropout(x)
        x = self.avgpool(x)
        x = torch.flatten(x, start_dim=1)
        x = self.classifier(x)
        return x
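`nn.AdaptiveAvgPool2d((1, 1))` averages each channel over all spatial positions, turning the 512×7×7 output of block 5 into 512 values whatever the input resolution; that is what lets a single `Linear(512, 4)` replace the 25,088-input classifier. A quick check on random feature maps (the shapes mirror block 5, the data is random), plus a reminder that Dropout is inactive in eval mode:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
features = torch.randn(2, 512, 7, 7)  # random stand-in for the block5 output

gap = nn.AdaptiveAvgPool2d(output_size=(1, 1))
pooled = torch.flatten(gap(features), start_dim=1)  # [2, 512]
manual = features.mean(dim=(2, 3))                  # mean over H and W

print(pooled.shape)                               # torch.Size([2, 512])
print(torch.allclose(pooled, manual, atol=1e-6))  # True

# Dropout only acts in training mode; in eval mode it passes inputs through unchanged
dropout = nn.Dropout(p=0.5)
dropout.eval()
print(torch.equal(dropout(features), features))   # True
```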

The training log is as follows:

Epoch: 1, duration:8075ms, Train_acc:87.8%, Train_loss:0.294, Test_acc:24.6%,Test_loss:2.544, Lr:1.00E-04,Update the best model

Epoch: 2, duration:7891ms, Train_acc:97.1%, Train_loss:0.090, Test_acc:39.6%,Test_loss:3.343, Lr:1.00E-04,Update the best model

Epoch: 3, duration:7791ms, Train_acc:97.1%, Train_loss:0.092, Test_acc:70.8%,Test_loss:1.480, Lr:1.00E-04,Update the best model

Epoch: 4, duration:7811ms, Train_acc:98.9%, Train_loss:0.051, Test_acc:97.1%,Test_loss:0.100, Lr:9.20E-05,Update the best model

Epoch: 5, duration:7840ms, Train_acc:98.3%, Train_loss:0.047, Test_acc:97.1%,Test_loss:0.065, Lr:9.20E-05

Epoch: 6, duration:7886ms, Train_acc:98.6%, Train_loss:0.040, Test_acc:95.8%,Test_loss:0.104, Lr:9.20E-05

Epoch: 7, duration:7792ms, Train_acc:98.0%, Train_loss:0.058, Test_acc:93.8%,Test_loss:0.159, Lr:9.20E-05

Epoch: 8, duration:7778ms, Train_acc:98.4%, Train_loss:0.051, Test_acc:97.5%,Test_loss:0.071, Lr:8.46E-05,Update the best model

Epoch: 9, duration:7812ms, Train_acc:99.2%, Train_loss:0.033, Test_acc:95.4%,Test_loss:0.100, Lr:8.46E-05

Epoch:10, duration:7777ms, Train_acc:99.4%, Train_loss:0.020, Test_acc:100.0%,Test_loss:0.015, Lr:8.46E-05,Update the best model

Epoch:11, duration:7785ms, Train_acc:98.8%, Train_loss:0.034, Test_acc:99.6%,Test_loss:0.029, Lr:8.46E-05

Epoch:12, duration:7785ms, Train_acc:98.9%, Train_loss:0.045, Test_acc:98.8%,Test_loss:0.065, Lr:7.79E-05

Epoch:13, duration:7799ms, Train_acc:99.5%, Train_loss:0.014, Test_acc:71.2%,Test_loss:1.304, Lr:7.79E-05

Epoch:14, duration:7784ms, Train_acc:99.1%, Train_loss:0.035, Test_acc:75.4%,Test_loss:1.443, Lr:7.79E-05

Epoch:15, duration:7789ms, Train_acc:99.4%, Train_loss:0.016, Test_acc:87.9%,Test_loss:0.325, Lr:7.79E-05

Epoch:16, duration:7789ms, Train_acc:99.9%, Train_loss:0.007, Test_acc:100.0%,Test_loss:0.007, Lr:7.16E-05

Epoch:17, duration:7820ms, Train_acc:100.0%, Train_loss:0.004, Test_acc:100.0%,Test_loss:0.002, Lr:7.16E-05

Epoch:18, duration:7838ms, Train_acc:100.0%, Train_loss:0.005, Test_acc:100.0%,Test_loss:0.003, Lr:7.16E-05

Epoch:19, duration:7814ms, Train_acc:99.7%, Train_loss:0.011, Test_acc:99.2%,Test_loss:0.035, Lr:7.16E-05

Epoch:20, duration:7837ms, Train_acc:99.6%, Train_loss:0.013, Test_acc:100.0%,Test_loss:0.008, Lr:6.59E-05

Epoch:21, duration:7806ms, Train_acc:99.9%, Train_loss:0.005, Test_acc:100.0%,Test_loss:0.004, Lr:6.59E-05

Epoch:22, duration:7797ms, Train_acc:99.5%, Train_loss:0.015, Test_acc:97.1%,Test_loss:0.065, Lr:6.59E-05

Epoch:23, duration:7798ms, Train_acc:100.0%, Train_loss:0.005, Test_acc:99.6%,Test_loss:0.008, Lr:6.59E-05

Epoch:24, duration:7789ms, Train_acc:100.0%, Train_loss:0.003, Test_acc:99.6%,Test_loss:0.010, Lr:6.06E-05

Epoch:25, duration:7793ms, Train_acc:100.0%, Train_loss:0.003, Test_acc:100.0%,Test_loss:0.007, Lr:6.06E-05

Epoch:26, duration:7797ms, Train_acc:100.0%, Train_loss:0.002, Test_acc:100.0%,Test_loss:0.002, Lr:6.06E-05

Epoch:27, duration:7797ms, Train_acc:100.0%, Train_loss:0.001, Test_acc:100.0%,Test_loss:0.005, Lr:6.06E-05

Epoch:28, duration:7819ms, Train_acc:100.0%, Train_loss:0.001, Test_acc:100.0%,Test_loss:0.004, Lr:5.58E-05

Epoch:29, duration:7844ms, Train_acc:100.0%, Train_loss:0.001, Test_acc:98.3%,Test_loss:0.045, Lr:5.58E-05

Epoch:30, duration:7806ms, Train_acc:100.0%, Train_loss:0.001, Test_acc:100.0%,Test_loss:0.002, Lr:5.58E-05

Epoch:31, duration:7866ms, Train_acc:100.0%, Train_loss:0.001, Test_acc:100.0%,Test_loss:0.004, Lr:5.58E-05

Epoch:32, duration:7841ms, Train_acc:100.0%, Train_loss:0.001, Test_acc:100.0%,Test_loss:0.003, Lr:5.13E-05

Epoch:33, duration:7839ms, Train_acc:100.0%, Train_loss:0.001, Test_acc:100.0%,Test_loss:0.002, Lr:5.13E-05

Epoch:34, duration:7823ms, Train_acc:99.9%, Train_loss:0.003, Test_acc:99.6%,Test_loss:0.007, Lr:5.13E-05

Epoch:35, duration:7816ms, Train_acc:100.0%, Train_loss:0.001, Test_acc:100.0%,Test_loss:0.001, Lr:5.13E-05

Epoch:36, duration:7832ms, Train_acc:100.0%, Train_loss:0.001, Test_acc:100.0%,Test_loss:0.003, Lr:4.72E-05

Epoch:37, duration:7911ms, Train_acc:99.9%, Train_loss:0.005, Test_acc:99.2%,Test_loss:0.015, Lr:4.72E-05

Epoch:38, duration:7898ms, Train_acc:99.9%, Train_loss:0.003, Test_acc:99.6%,Test_loss:0.006, Lr:4.72E-05

Epoch:39, duration:7836ms, Train_acc:100.0%, Train_loss:0.002, Test_acc:100.0%,Test_loss:0.004, Lr:4.72E-05

Epoch:40, duration:7845ms, Train_acc:100.0%, Train_loss:0.002, Test_acc:99.6%,Test_loss:0.009, Lr:4.34E-05

Highest Test_acc: 100.0%
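To put a number on "lightweight": the 13 convolutional layers are unchanged (14,714,688 parameters), the five BatchNorm2d layers add a learnable scale and shift per channel, and the classifier shrinks from three large linear layers to `Linear(512, 4)`. A back-of-the-envelope count (BatchNorm running statistics are buffers, not parameters, so they are left out):

```python
conv_total = 14_714_688                 # 13 conv layers, identical in both models
bn_channels = [64, 128, 256, 512, 512]  # one BatchNorm2d at the end of each block

bn_total = sum(2 * c for c in bn_channels)  # weight (gamma) + bias (beta) per channel
old_fc = (25088 + 1) * 4096 + (4096 + 1) * 4096 + (4096 + 1) * 4
new_fc = (512 + 1) * 4

old_total = conv_total + old_fc
new_total = conv_total + bn_total + new_fc

print(bn_total, new_fc)                # 2944 2052
print(old_total, new_total)            # 134276932 14719684
print(f"{new_total / old_total:.1%}")  # 11.0%
```

At 4 bytes per float32 parameter that is roughly 56 MB of weights, compared with the 512.23 MB torchsummary reported for the original model.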

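Summary

In these experiments, adding a Dropout layer, increasing the proportion of data used for training, and replacing the fully connected layers with global average pooling (a lighter model) gave a clear accuracy gain: the best Test_acc rose from 99.2% to 100.0%.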