1. Single-frame processing

1.1 pt2onnx

import torch
import numpy as np

from parameters import get_parameters
from models._model_builder import build_model

TORCH_WEIGHT_PATH = './checkpoints/model.pth'
ONNX_MODEL_PATH = './checkpoints/model.onnx'

# Create new tensors on the GPU by default
torch.set_default_tensor_type('torch.cuda.FloatTensor')


def get_numpy_data():
    # Dummy all-ones input in NCHW layout (not used by the export below)
    batch_size = 1
    img_input = np.ones((batch_size, 1, 512, 512), dtype=np.float32)
    return img_input


def get_torch_model():
    # Build the network, load the trained weights and move it to the GPU
    args = get_parameters()
    model = build_model(args.model, args)
    model.load_state_dict(torch.load(TORCH_WEIGHT_PATH))
    model.cuda()
    model.eval()  # export in inference mode
    return model


# Tensor names; the TensorRT code refers to the input as "data"
input_name = ['data']
output_name = ['prob']

# Dummy input with the shape the model expects: (batch, channel, height, width)
dummy_input = torch.randn(1, 1, 512, 512).cuda()

# Create the model; the weights are loaded inside get_torch_model()
model = get_torch_model()

# Export to ONNX
torch.onnx.export(model, dummy_input, ONNX_MODEL_PATH,
                  input_names=input_name, output_names=output_name,
                  verbose=False, opset_version=11)

Note: the exported ONNX model can also be simplified.

# pip install onnxsim
from onnxsim import simplify
import onnx

onnx_model = onnx.load("./checkpoints/model.onnx")  # load the exported ONNX model
model_simp, check = simplify(onnx_model)
assert check, "Simplified ONNX model could not be validated"
onnx.save(model_simp, "./checkpoints/model.onnx")  # overwrite with the simplified model
print('finished exporting onnx')
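After exporting (and optionally simplifying) the model, it can be worth checking that the ONNX file reproduces the PyTorch output on the same input. The following is only a minimal sketch: it assumes `onnxruntime` is installed, the helper names `run_onnx` and `outputs_match` are hypothetical, and the tolerance of 1e-4 is an arbitrary choice.

```python
import math


def run_onnx(onnx_path, input_array, input_name="data"):
    """Run one forward pass with ONNX Runtime and return the first output."""
    # Imported lazily so the comparison helper below works without onnxruntime.
    import onnxruntime as ort

    session = ort.InferenceSession(onnx_path, providers=["CPUExecutionProvider"])
    return session.run(None, {input_name: input_array})[0]


def outputs_match(reference, candidate, atol=1e-4):
    """Return True if two flat sequences of floats agree within atol."""
    reference = list(reference)
    candidate = list(candidate)
    if len(reference) != len(candidate):
        return False
    return all(math.isclose(r, c, rel_tol=0.0, abs_tol=atol)
               for r, c in zip(reference, candidate))
```

A possible use is to flatten both results first, for example `outputs_match(torch_out.flatten().tolist(), run_onnx(ONNX_MODEL_PATH, x).flatten().tolist())`, where `torch_out` is the PyTorch output moved to the CPU and `x` is the same input as a float32 NumPy array.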

1.2 onnx2engine

// OnnxToEngine.cpp: contains the "main" function, where program execution begins and ends.
//
#include <iostream>
#include <fstream>
#include <string>
#include <chrono>
#include <vector>
#include "cuda_runtime_api.h"
#include "logging.h"
#include "common.hpp"
#include "NvOnnxParser.h"

const char* INPUT_BLOB_NAME = "data";

using namespace std;
using namespace nvinfer1;
using namespace nvonnxparser;

unsigned int maxBatchSize = 1;

int main()
{
    // Step 1: create the logger
    static Logger gLogger;

    // Step 2: create the builder
    IBuilder* builder = createInferBuilder(gLogger);

    // Step 3: create the network; the flag value 1 equals 1U << kEXPLICIT_BATCH
    nvinfer1::INetworkDefinition* network = builder->createNetworkV2(1);

    // Step 4: create the parser
    nvonnxparser::IParser* parser = nvonnxparser::createParser(*network, gLogger);

    // Step 5: parse the ONNX file to populate the network
    const char* onnx_filename = "..\\onnx\\model.onnx";
    if (!parser->parseFromFile(onnx_filename, static_cast<int>(Logger::Severity::kWARNING)))
    {
        for (int i = 0; i < parser->getNbErrors(); ++i)
        {
            std::cout << parser->getError(i)->desc() << std::endl;
        }
        return -1;
    }
    std::cout << "successfully load the onnx model" << std::endl;

    // Step 6: create the config and set the max batch size and max workspace size
    builder->setMaxBatchSize(maxBatchSize);
    IBuilderConfig* config = builder->createBuilderConfig();
    config->setMaxWorkspaceSize(1 << 20);  // 1 MiB

    // Step 7: build the engine
    ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);
    if (!engine) {
        std::cerr << "failed to build the engine" << std::endl;
        return -1;
    }

    // Step 8: serialize the engine and save it to a plan file
    IHostMemory* serializedModel = engine->serialize();
    std::string engine_name = "..\\engine\\model.engine";
    std::ofstream p(engine_name, std::ios_base::out | std::ios_base::binary);
    if (!p) {
        std::cerr << "could not open plan output file" << std::endl;
        return -1;
    }
    p.write(reinterpret_cast<const char*>(serializedModel->data()), serializedModel->size());
    std::cout << "successfully build an engine model" << std::endl;

    // Step 9: release resources
    serializedModel->destroy();
    engine->destroy();
    parser->destroy();
    network->destroy();
    config->destroy();
    builder->destroy();
    return 0;
}
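The value passed to `setMaxWorkspaceSize` is a byte count, so a shift expression such as `1 << 20` means 1 MiB. The short sketch below just works through that arithmetic; the helper names are hypothetical. It also illustrates why a shift like `1 << 32` needs a 64-bit operand in C++: the 32-bit truncation shown here is only a model of what cannot fit, since shifting a 32-bit `int` by 32 or more is undefined behavior in C++.

```python
def workspace_bytes(shift):
    """Size in bytes of a workspace written as 1 << shift."""
    return 1 << shift


def to_mib(num_bytes):
    """Convert a byte count to mebibytes."""
    return num_bytes / (1024 * 1024)


def as_int32(value):
    """Value that survives truncation to a 32-bit two's-complement int."""
    value &= 0xFFFFFFFF
    return value - (1 << 32) if value >= (1 << 31) else value


print(to_mib(workspace_bytes(20)))  # 1.0    (1 MiB)
print(to_mib(workspace_bytes(30)))  # 1024.0 (1 GiB)
print(as_int32(1 << 32))            # 0      (does not fit in 32 bits)
```

In C++ the usual way to avoid the problem is to make the left operand 64-bit, for example `1ULL << 32`.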

2. Multi-frame processing (accelerated)

2.1 pt2onnx

import onnx
import torch
import numpy as np

from parameters import get_parameters
from models._model_builder import build_model

TORCH_WEIGHT_PATH = './checkpoints/model.pth'
ONNX_MODEL_PATH = './checkpoints/model.onnx'
# Output path for the dynamic-shape export (placeholder name, assumed)
ONNX_MODEL_PATH2 = './checkpoints/model_dynamic.onnx'

args = get_parameters()


def get_torch_model():
    # Build the network, load the trained weights and move it to the GPU
    print(args.model)
    model = build_model(args.model, args)
    model.load_state_dict(torch.load(TORCH_WEIGHT_PATH))
    model.cuda()
    model.eval()  # export in inference mode
    return model


if __name__ == "__main__":
    # Input shape used for the dummy tensor
    Batch_size = 1
    Channel = 1
    Height = 384
    Width = 640
    input_data = torch.rand((Batch_size, Channel, Height, Width)).cuda()

    # Instantiate the model; the weights are loaded inside get_torch_model()
    model = get_torch_model()

    input_name = 'data'
    output_name = 'prob'

    # Export with a static input shape
    torch.onnx.export(model,
                      input_data,
                      ONNX_MODEL_PATH,
                      verbose=True,
                      input_names=[input_name],
                      output_names=[output_name])

    # Export with dynamic input axes
    torch.onnx.export(model,
                      input_data,
                      ONNX_MODEL_PATH2,
                      opset_version=11,
                      input_names=[input_name],
                      output_names=[output_name],
                      dynamic_axes={
                          # Batch-only alternative:
                          # input_name: {0: 'batch_size'},
                          # output_name: {0: 'batch_size'}}
                          input_name: {0: 'batch_size', 1: 'channel', 2: 'input_height', 3: 'input_width'},
                          output_name: {0: 'batch_size', 2: 'output_height', 3: 'output_width'}}
                      )
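The `dynamic_axes` argument maps each tensor name to a dictionary of `{axis index: symbolic name}`; any axis not listed stays fixed at the size of the dummy input. The export above contains two variants, a batch-only one (commented out) and one that also frees the channel and spatial axes. A minimal sketch of building either mapping follows; `make_dynamic_axes` is a hypothetical helper, not part of PyTorch.

```python
def make_dynamic_axes(input_name, output_name, batch_only=True):
    """Build a dynamic_axes mapping for torch.onnx.export.

    batch_only=True frees only axis 0 (the batch dimension);
    batch_only=False reproduces the fully dynamic variant from the export.
    """
    if batch_only:
        return {
            input_name: {0: "batch_size"},
            output_name: {0: "batch_size"},
        }
    return {
        input_name: {0: "batch_size", 1: "channel",
                     2: "input_height", 3: "input_width"},
        output_name: {0: "batch_size",
                      2: "output_height", 3: "output_width"},
    }


print(make_dynamic_axes("data", "prob"))
```

If only the batch size varies at inference time, the batch-only mapping is probably sufficient and keeps the remaining dimensions fixed in the exported graph.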

2.2 onnx2engine

// OnnxToEngine.cpp: contains the "main" function, where program execution begins and ends.
#include <cstdio>
#include <iostream>
#include <fstream>
#include <sstream>
#include <string>
#include "NvInfer.h"
#include "NvOnnxParser.h"
#include "logging.h"
#include "opencv2/opencv.hpp"
#include "cuda_runtime_api.h"

static Logger gLogger;

using namespace nvinfer1;

// Serialize the engine and write it to fileName; returns false on failure.
bool saveEngine(const ICudaEngine& engine, const std::string& fileName)
{
    std::ofstream engineFile(fileName, std::ios::binary);
    if (!engineFile)
    {
        std::cout << "Cannot open engine file: " << fileName << std::endl;
        return false;
    }
    IHostMemory* serializedEngine = engine.serialize();
    if (serializedEngine == nullptr)
    {
        std::cout << "Engine serialization failed" << std::endl;
        return false;
    }
    engineFile.write(static_cast<const char*>(serializedEngine->data()), serializedEngine->size());
    serializedEngine->destroy();
    return !engineFile.fail();
}

// Print every dimension of a tensor shape (dynamic dimensions show up as -1).
void print_dims(const nvinfer1::Dims& dim)
{
    for (int nIdxShape = 0; nIdxShape < dim.nbDims; ++nIdxShape)
    {
        printf("dim %d=%d\n", nIdxShape, dim.d[nIdxShape]);
    }
}

int main()
{
    // 1. Create a builder
    IBuilder* pBuilder = createInferBuilder(gLogger);

    // 2. Create a network with an explicit batch dimension (no implicit batch)
    INetworkDefinition* pNetwork = pBuilder->createNetworkV2(1U << static_cast<int>(NetworkDefinitionCreationFlag::kEXPLICIT_BATCH));

    // 3. Create a builder config
    nvinfer1::IBuilderConfig* config = pBuilder->createBuilderConfig();

    // 4. Set up an optimization profile; this is specific to dynamic batch
    IOptimizationProfile* profile = pBuilder->createOptimizationProfile();
    // OptProfileSelector chooses which bound of the input shape is being set:
    // the minimum, the shape to optimize for, or the maximum
    profile->setDimensions("data", OptProfileSelector::kMIN, Dims4(1, 1, 512, 512));
    profile->setDimensions("data", OptProfileSelector::kOPT, Dims4(2, 1, 512, 512));
    profile->setDimensions("data", OptProfileSelector::kMAX, Dims4(4, 1, 512, 512));
    config->addOptimizationProfile(profile);

    auto parser = nvonnxparser::createParser(*pNetwork, gLogger.getTRTLogger());
    const char* pchModelPth = "..\\onnx\\model.onnx";
    if (!parser->parseFromFile(pchModelPth, static_cast<int>(gLogger.getReportableSeverity())))
    {
        printf("Failed to parse the ONNX model\n");
        return -1;
    }

    // With an explicit-batch network this setting has no effect;
    // the batch range comes from the optimization profile
    int maxBatchSize = 4;
    pBuilder->setMaxBatchSize(maxBatchSize);

    // The engine is built with buildEngineWithConfig, so the workspace size
    // and precision are set on the config rather than on the builder
    config->setMaxWorkspaceSize(1ULL << 32);  // 4 GiB; 1ULL avoids 32-bit overflow
    config->setFlag(BuilderFlag::kFP16);      // FP16 inference mode

    ICudaEngine* engine = pBuilder->buildEngineWithConfig(*pNetwork, *config);
    if (engine == nullptr)
    {
        printf("Failed to build the engine\n");
        return -1;
    }

    // Serialize and save the model
    std::string strTrtSavedPath = "..\\engine\\model.trt";
    saveEngine(*engine, strTrtSavedPath);

    // Print the dimensions of binding 0
    nvinfer1::Dims dim = engine->getBindingDimensions(0);
    print_dims(dim);
    return 0;
}

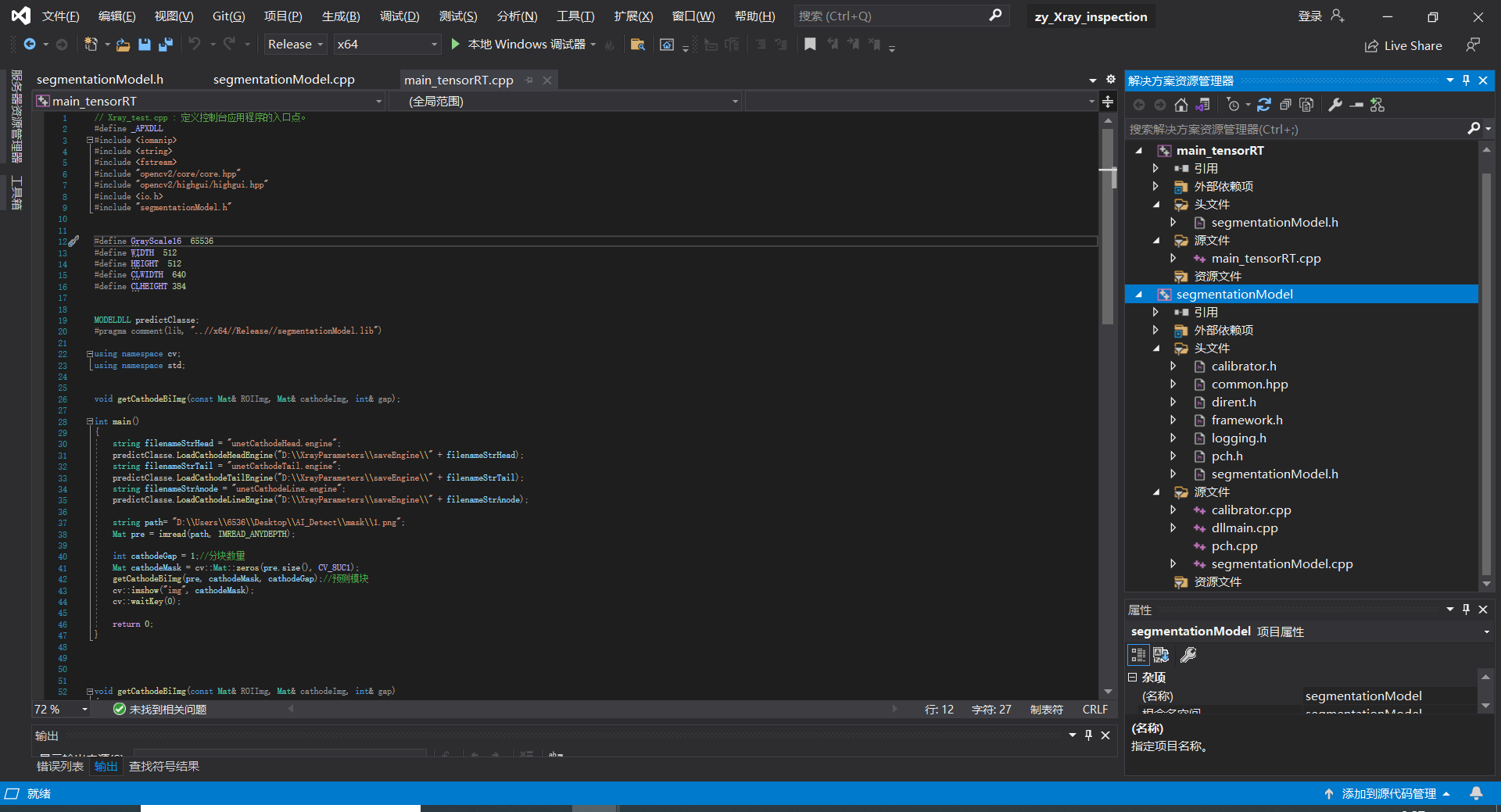
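The optimization profile bounds the input shapes the engine accepts at runtime: every dimension has to lie between the kMIN and kMAX values, while kOPT is the shape the builder tunes for. The sketch below checks a candidate shape against the bounds used above; `shape_in_profile` is a hypothetical helper written for illustration, not a TensorRT API.

```python
def shape_in_profile(shape, min_shape, max_shape):
    """Return True if every dimension of shape lies within [min, max]."""
    if not (len(shape) == len(min_shape) == len(max_shape)):
        return False
    return all(lo <= d <= hi
               for d, lo, hi in zip(shape, min_shape, max_shape))


# Bounds taken from the profile in section 2.2 (N, C, H, W)
PROFILE_MIN = (1, 1, 512, 512)
PROFILE_MAX = (4, 1, 512, 512)

print(shape_in_profile((3, 1, 512, 512), PROFILE_MIN, PROFILE_MAX))  # True
print(shape_in_profile((8, 1, 512, 512), PROFILE_MIN, PROFILE_MAX))  # False
print(shape_in_profile((2, 1, 384, 640), PROFILE_MIN, PROFILE_MAX))  # False
```

The last case is worth noting: the 384x640 shape used for the dummy input in section 2.1 falls outside this profile, so feeding frames of that size would require widening the height and width bounds.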

3. Calling the TensorRT model from C++

Full project: link