Previous post

ceph-deploy bclinux aarch64 ceph 14.2.10 (CSDN blog)

Installing vdbench

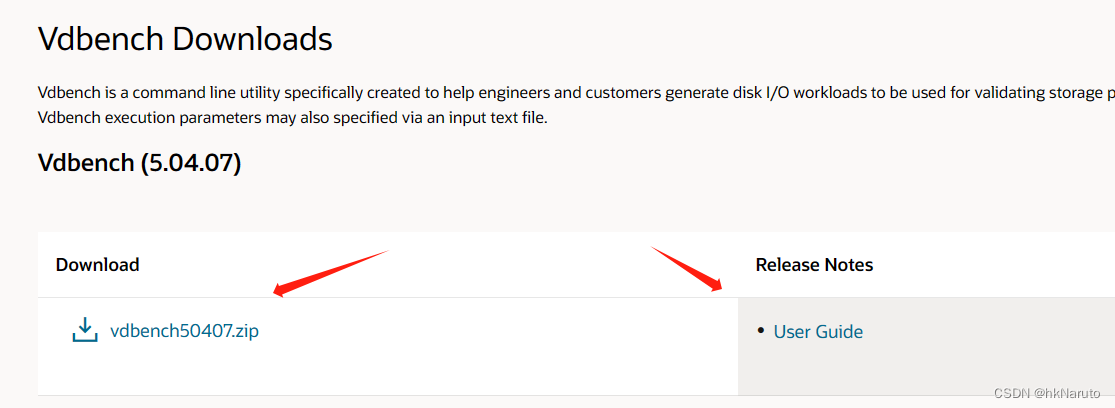

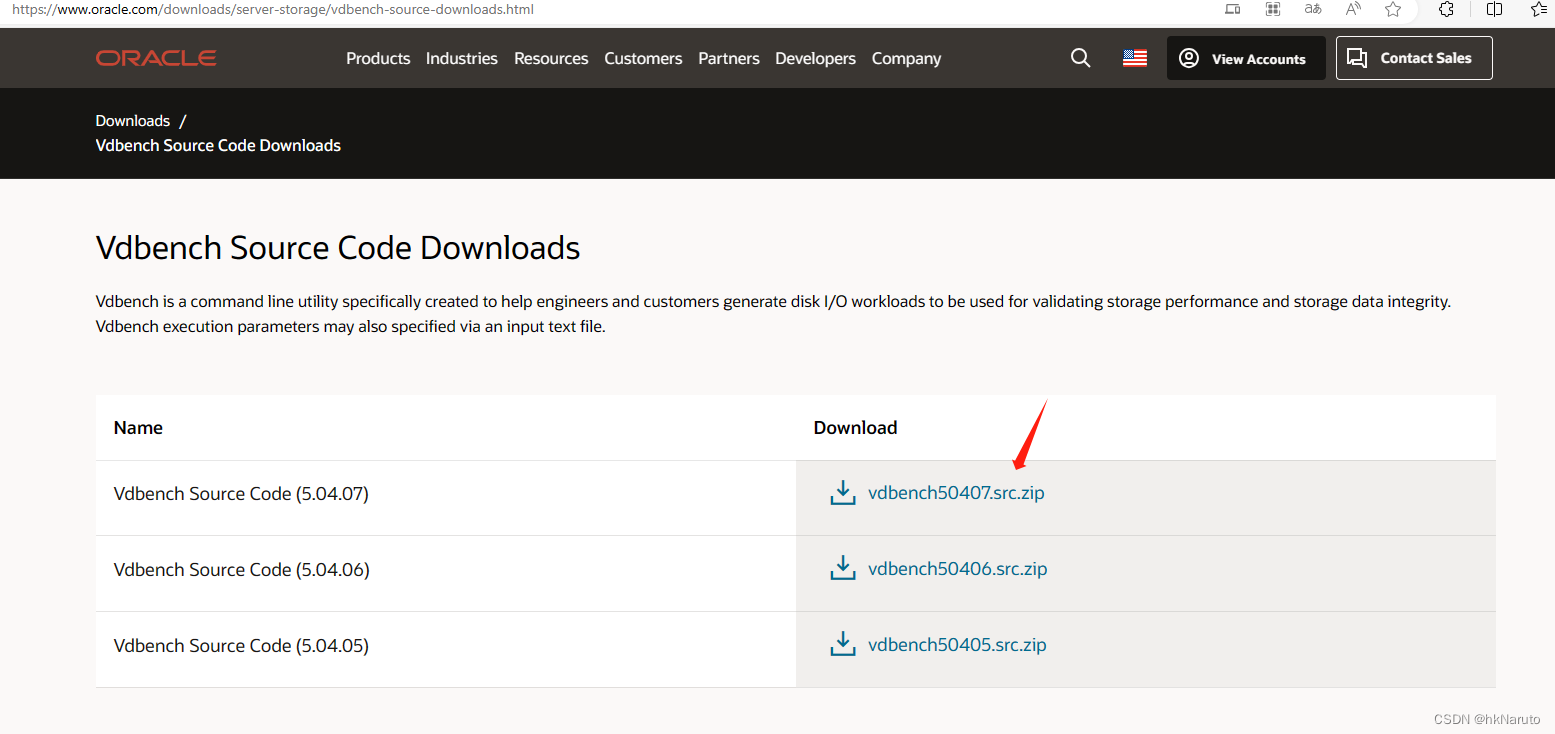

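Download vdbench

Download page

Vdbench Downloads (oracle.com)

Package download

You must sign in with an account and click to agree in the pop-up dialog before the download can continue.

User manual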

https://download.oracle.com/otn/utilities_drivers/vdbench/vdbench-50407.pdf

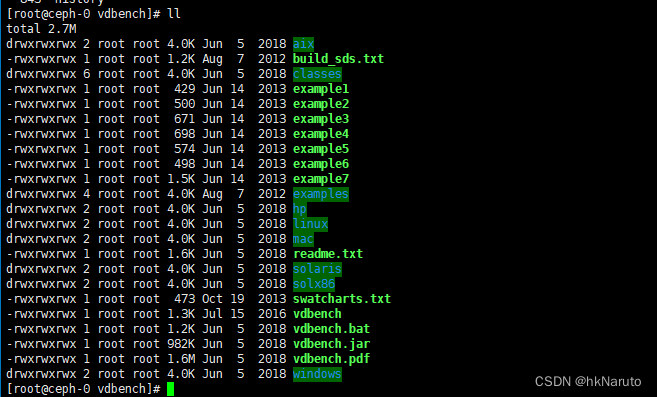

Unpack vdbench

mkdir vdbench

cd vdbench/

unzip ../vdbench50407.zip

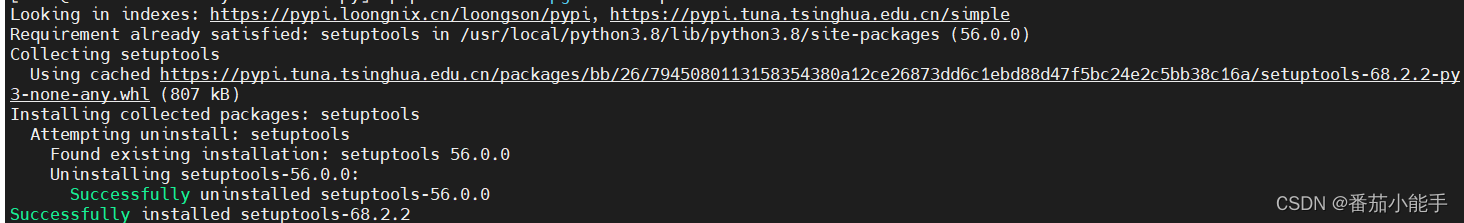

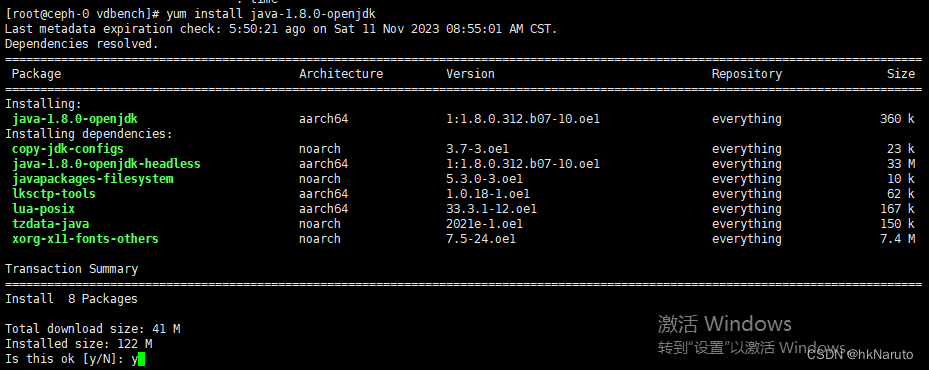

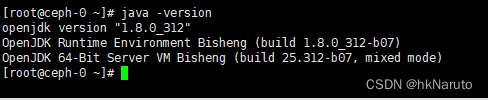

Install JDK 8

yum install java-1.8.0-openjdk

Troubleshooting

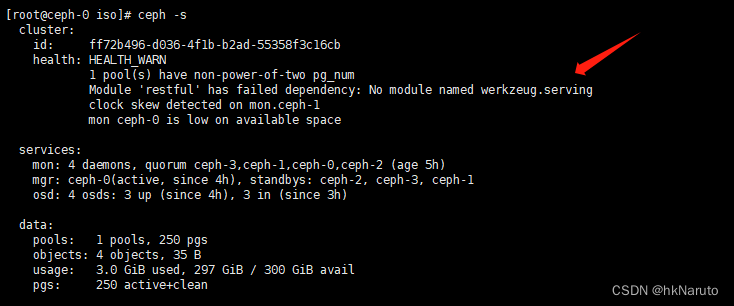

Module 'restful' has failed dependency: No module named werkzeug.serving

# In hindsight, the python3 package is probably the wrong one

yum install python3-werkzeug -y

ssh ceph-1 yum install python3-werkzeug -y

ssh ceph-2 yum install python3-werkzeug -y

ssh ceph-3 yum install python3-werkzeug -y

yum install python2-werkzeug -y

ssh ceph-1 yum install python2-werkzeug -y

ssh ceph-2 yum install python2-werkzeug -y

ssh ceph-3 yum install python2-werkzeug -y

Restart ceph-mon (the ssh commands could not be pasted into the terminal as a batch; they had to be run one at a time)

systemctl restart ceph-mon@ceph-0.service

ssh ceph-1 systemctl restart ceph-mon@ceph-1.service

ssh ceph-2 systemctl restart ceph-mon@ceph-2.service

ssh ceph-3 systemctl restart ceph-mon@ceph-3.service

The result was unchanged, so all hosts were rebooted.
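The note that the ssh commands could not be pasted as a batch is consistent with ssh reading from standard input by default, so the first ssh can swallow the remaining pasted lines. A minimal sketch of a loop that avoids this, assuming the host names used above. The function name restart_mons and the SSH variable are hypothetical helpers for illustration; -n (take stdin from /dev/null) is a standard OpenSSH option.

```shell
# Hypothetical helper: restart the ceph-mon unit on each listed host.
# SSH can be overridden (for example SSH=echo) for a dry run that only prints.
SSH=${SSH:-ssh -n}

restart_mons() {
    for host in "$@"; do
        # -n keeps ssh from consuming the rest of the pasted input
        $SSH "$host" systemctl restart "ceph-mon@${host}.service"
    done
}

# Usage (remote nodes only; ceph-0 is restarted locally):
# restart_mons ceph-1 ceph-2 ceph-3
```

Setting SSH=echo before calling the function prints the commands instead of running them, which is a cheap way to check the unit names first.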

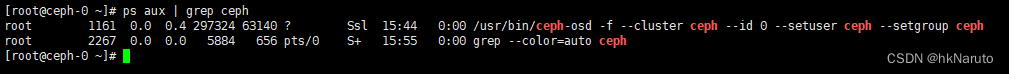

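After the reboot, only the OSD processes were running; none of the other services had started automatically.

Enable the services at boot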

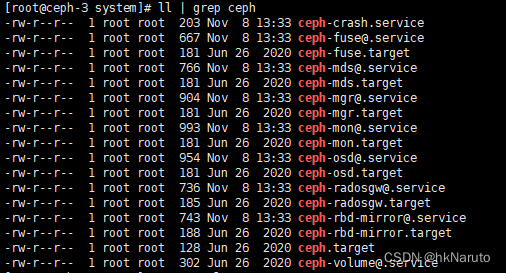

The services to enable on each node are as follows

[root@ceph-0 ~]# systemctl enable --now ceph-mon@ceph-0.service

[root@ceph-0 ~]# systemctl enable --now ceph-mgr@ceph-0.service

[root@ceph-1 ~]# systemctl enable --now ceph-mon@ceph-1.service

[root@ceph-1 ~]# systemctl enable --now ceph-mgr@ceph-1.service

[root@ceph-2 ~]# systemctl enable --now ceph-mon@ceph-2.service

[root@ceph-2 ~]# systemctl enable --now ceph-mgr@ceph-2.service

[root@ceph-3 ~]# systemctl enable --now ceph-mon@ceph-3.service

[root@ceph-3 ~]# systemctl enable --now ceph-mgr@ceph-3.service

Disable the firewall on all 4 servers

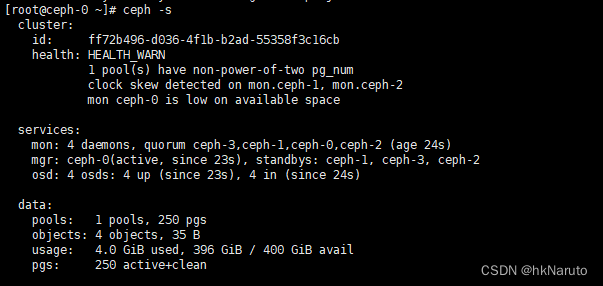

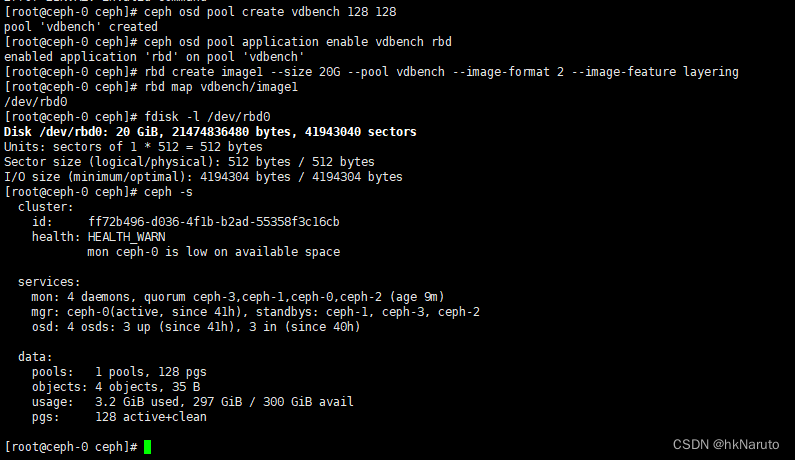

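systemctl disable firewalld --now

After manually restoring the services and disabling the firewall, ceph -s reports:

1 pool(s) have non-power-of-two pg_num

clock skew detected (this takes a while to clear)

Running ntpdate ceph-0 and restarting ntpd made no difference.

The original pool creation command was wrong; pg_num should be a power of 2.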
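A small sketch of the power-of-two check behind this warning, using plain shell arithmetic. The function names is_pow2 and next_pow2 are hypothetical helpers, not part of Ceph.

```shell
# Return success if the argument is a positive power of two.
is_pow2() {
    n=$1
    [ "$n" -gt 0 ] && [ $(( n & (n - 1) )) -eq 0 ]
}

# Print the smallest power of two that is >= the argument.
next_pow2() {
    n=$1 p=1
    while [ "$p" -lt "$n" ]; do
        p=$(( p * 2 ))
    done
    echo "$p"
}

is_pow2 250 || echo "250 is not a power of two; next is $(next_pow2 250)"
```

For 250 the next power of two is 256, and the one below it is 128; both values show up in the steps that follow.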

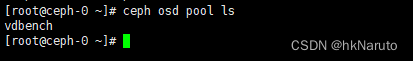

ceph osd pool create vdbench 250 250

List the pools

Change pg_num and pgp_num

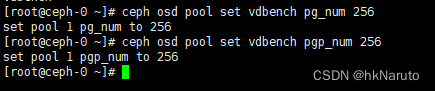

ceph osd pool set vdbench pg_num 256

ceph osd pool set vdbench pgp_num 256

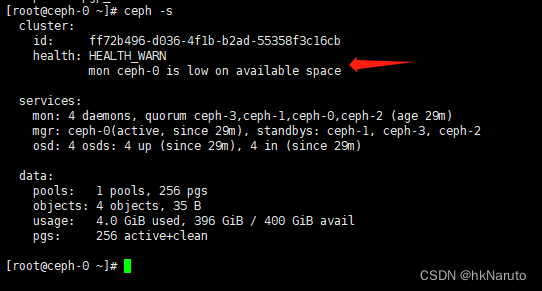

That fixed the two problems above, but a new warning appeared:

too many PGs per OSD (256 > max 250)

One day later

ceph osd pool set vdbench pg_num 128

ceph osd pool set vdbench pgp_num 128
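The "too many PGs per OSD" warning compares the number of PG replicas each OSD carries against a limit (250 in the message above). A rough sketch of that arithmetic for a single pool follows. The replica count of 3 and the OSD count of 3 are hypothetical values chosen for illustration, not figures taken from this cluster, and pgs_per_osd is a made-up helper name.

```shell
# Approximate PG replicas per OSD for one pool:
#   pg_num * replica_size / number_of_osds   (integer division)
pgs_per_osd() {
    pg_num=$1 size=$2 osds=$3
    echo $(( pg_num * size / osds ))
}

# Hypothetical cluster: 3 replicas, 3 OSDs
pgs_per_osd 256 3 3   # 256, above the limit of 250
pgs_per_osd 128 3 3   # 128, below the limit
```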

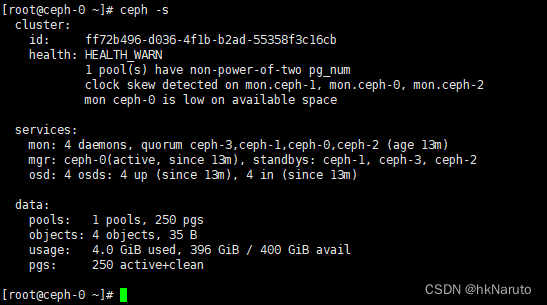

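Because the firewall had been turned back on along the way for other work, the configuration had not synchronized. After a later restart of ceph-mon, the problem went away.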

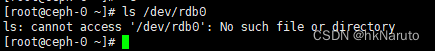

The block device had disappeared

Delete the image and the pool (failed)

[root@ceph-0 ~]# rbd ls vdbench

image1

[root@ceph-0 ~]# rbd rm vdbench/image1

Removing image: 100% complete...done.

[root@ceph-0 ~]# ceph osd pool rm vdbench vdbench --yes-i-really-really-mean-it

Error EPERM: pool deletion is disabled; you must first set the mon_allow_pool_delete config option to true before you can destroy a pool

Deleting a pool requires a configuration option to be set first

mon_allow_pool_delete

Edit /etc/ceph/ceph.conf and add one line under [global]

mon_allow_pool_delete = true
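A minimal sketch of making that edit idempotently from the shell, so running it twice does not add the line twice. The function ensure_global_option is a hypothetical helper; it assumes the file already contains a [global] header and that the key is written with underscores, as above.

```shell
# Hypothetical helper: add "key = value" right after the [global] header
# unless a line starting with that key already exists in the file.
ensure_global_option() {
    conf=$1 key=$2 value=$3
    if grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$conf"; then
        return 0    # already set; leave the file untouched
    fi
    tmp=$(mktemp) || return 1
    awk -v line="$key = $value" '
        { print }
        /^\[global\]/ && !added { print line; added = 1 }
    ' "$conf" > "$tmp" && cat "$tmp" > "$conf"
    rm -f "$tmp"
}

# Usage:
# ensure_global_option /etc/ceph/ceph.conf mon_allow_pool_delete true
```

The file is rewritten in place with cat so its owner and permissions are kept.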

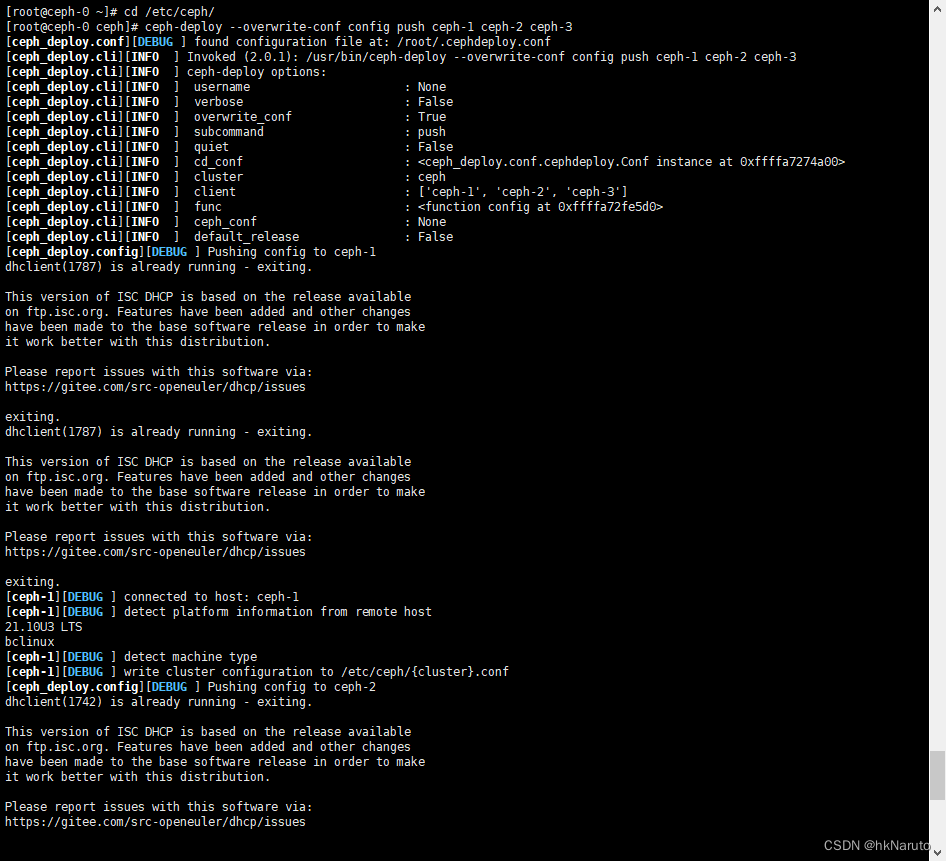

Push the configuration to the other nodes

cd /etc/ceph/

ceph-deploy --overwrite-conf config push ceph-1 ceph-2 ceph-3

Restart ceph-mon on all 4 nodes

systemctl restart ceph-mon@ceph-0.service

ssh ceph-1 systemctl restart ceph-mon@ceph-1.service

ssh ceph-2 systemctl restart ceph-mon@ceph-2.service

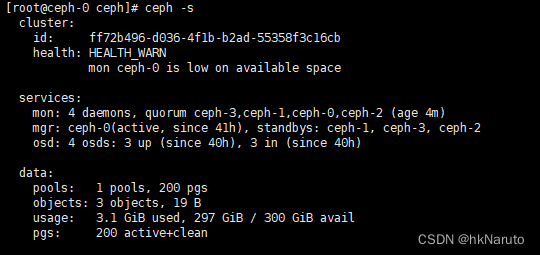

ssh ceph-3 systemctl restart ceph-mon@ceph-3.service

ceph -s is healthy again

Continue deleting the pool vdbench

[root@ceph-0 ceph]# ceph osd pool rm vdbench vdbench --yes-i-really-really-mean-it

pool 'vdbench' removed

Note: the delete command requires the pool name to be written twice, followed by the flag --yes-i-really-really-mean-it
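The shape of that command can be captured in a tiny helper that only prints it, which makes the doubled pool name hard to get wrong. The name pool_rm_cmd is hypothetical, and the function deliberately does not run anything.

```shell
# Hypothetical helper: print (not run) the delete command for a pool.
# The pool name appears twice, followed by the confirmation flag.
pool_rm_cmd() {
    pool=$1
    echo "ceph osd pool rm $pool $pool --yes-i-really-really-mean-it"
}

pool_rm_cmd vdbench
```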

Recreate the pool with pg_num and pgp_num set to 128

[root@ceph-0 ceph]# ceph osd pool create vdbench 128 128

pool 'vdbench' created

[root@ceph-0 ceph]# ceph osd pool application enable vdbench rbd

enabled application 'rbd' on pool 'vdbench'

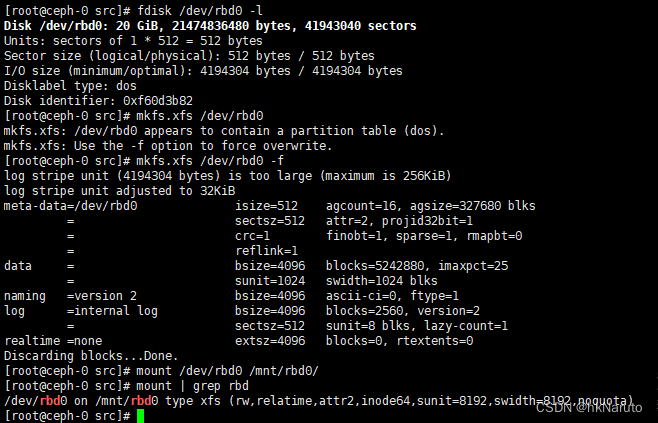

[root@ceph-0 ceph]# rbd create image1 --size 20G --pool vdbench --image-format 2 --image-feature layering

[root@ceph-0 ceph]# rbd map vdbench/image1

/dev/rbd0

[root@ceph-0 ceph]# fdisk -l /dev/rbd0

Disk /dev/rbd0: 20 GiB, 21474836480 bytes, 41943040 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes
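The figures in the fdisk output can be cross-checked with shell arithmetic. This is only a sanity check of the numbers shown above; the 4 MiB I/O size matches the default RBD object size.

```shell
# 20 GiB expressed in bytes and in 512-byte sectors
size_bytes=$(( 20 * 1024 * 1024 * 1024 ))
sectors=$(( size_bytes / 512 ))
# 4194304 bytes reported as the I/O size, expressed in MiB
io_mib=$(( 4194304 / 1024 / 1024 ))

echo "$size_bytes bytes, $sectors sectors, I/O size $io_mib MiB"
```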

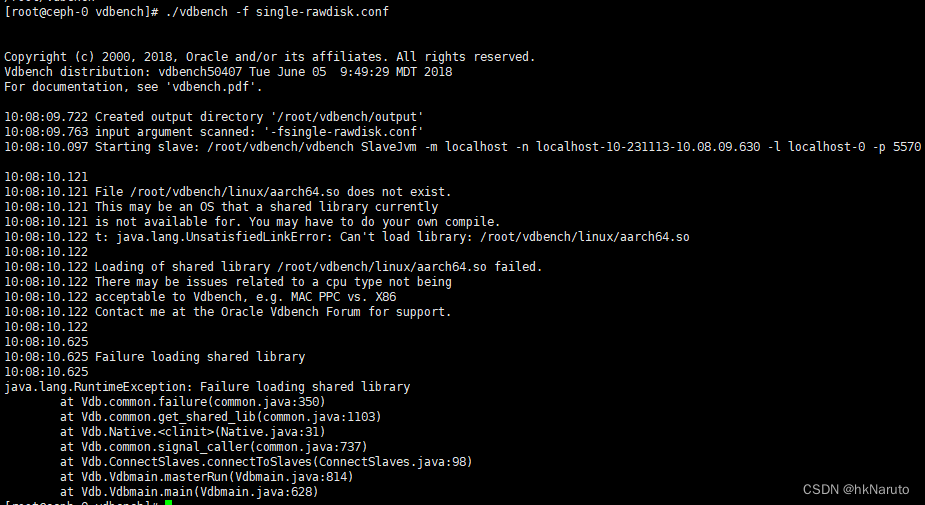

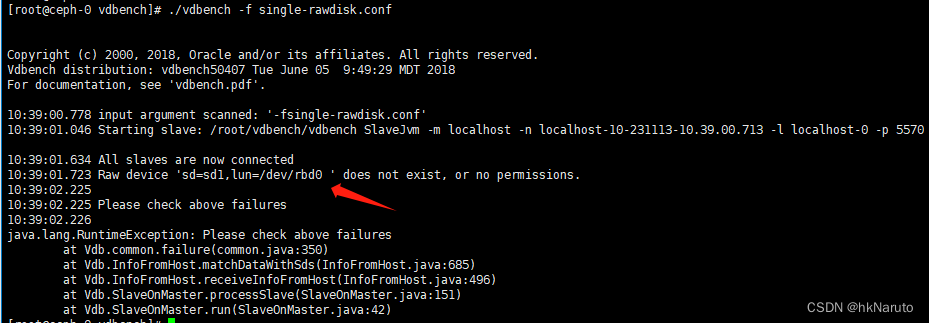

Test vdbench on ceph-0 (error)

Parameter file /root/vdbench/single-rawdisk.conf

sd=sd1,lun=/dev/rbd0 ,openflag=o_direct

wd=wd1,sd=sd1,seekpct=0,rdpct=0,xfersize=1M

rd=rd1,wd=wd1,iorate=max,warmup=60,elapsed=600,interval=2

Run the test

./vdbench -f single-rawdisk.conf

Error: File /root/vdbench/linux/aarch64.so does not exist

Download the source code

Vdbench Source Code Downloads (oracle.com)

Unpack

cd vdbench-src/

unzip ../vdbench50407.src.zip

Install the JDK development package

yum install -y java-1.8.0-openjdk-devel

Edit make.linux

The differences are as follows

[root@ceph-0 vdbench-src]# diff -Npr src/Jni/make.linux src/Jni/make.linux.bak

*** src/Jni/make.linux 2023-11-13 10:32:04.279529185 +0800

--- src/Jni/make.linux.bak 2023-11-13 10:27:42.571098663 +0800

***************

*** 34,49 ****

! vdb=/root/vdbench-src/src

! java=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.312.b07-10.oe1.aarch64

jni=$vdb/Jni

echo target directory: $vdb

! INCLUDES32="-w -DLINUX -I$java/include -I/$java/include/linux -I/usr/include/ -fPIC"

! INCLUDES64="-w -DLINUX -I$java/include -I/$java/include/linux -I/usr/include/ -fPIC"

cd /tmp

--- 34,49 ----

! vdb=$mine/vdbench504

! java=/net/sbm-240a.us.oracle.com/export/swat/swat_java/linux/jdk1.5.0_22/

jni=$vdb/Jni

echo target directory: $vdb

! INCLUDES32="-w -m32 -DLINUX -I$java/include -I/$java/include/linux -I/usr/include/ -fPIC"

! INCLUDES64="-w -m64 -DLINUX -I$java/include -I/$java/include/linux -I/usr/include/ -fPIC"

cd /tmp

*************** gcc ${INCLUDES32} -c $jni/chmod.c

*** 62,68 ****

echo Linking 32 bit

echo

! gcc -o $vdb/linux/linux32.so vdbjni.o vdblinux.o vdb_dv.o vdb.o chmod.o -lm -shared -lrt

chmod 777 $vdb/linux/linux32.so

--- 62,68 ----

echo Linking 32 bit

echo

! gcc -o $vdb/linux/linux32.so vdbjni.o vdblinux.o vdb_dv.o vdb.o chmod.o -lm -shared -m32 -lrt

chmod 777 $vdb/linux/linux32.so

*************** gcc ${INCLUDES64} -c $jni/chmod.c

*** 82,88 ****

echo Linking 64 bit

echo

! gcc -o $vdb/linux/linux64.so vdbjni.o vdblinux.o vdb_dv.o vdb.o chmod.o -lm -shared -lrt

chmod 777 $vdb/linux/linux64.so 2>/dev/null

--- 82,88 ----

echo Linking 64 bit

echo

! gcc -o $vdb/linux/linux64.so vdbjni.o vdblinux.o vdb_dv.o vdb.o chmod.o -lm -shared -m64 -lrt

chmod 777 $vdb/linux/linux64.so 2>/dev/null
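The diff comes down to two kinds of change: pointing vdb and java at local paths, and dropping the -m32/-m64 options, which are x86 options that GCC on aarch64 does not accept. A minimal sketch of the second change as a filter follows. strip_m_flags is a hypothetical name, and the sample line is abbreviated from the diff.

```shell
# Hypothetical filter: remove the x86-only -m32 / -m64 options from
# whatever text is piped through it.
strip_m_flags() {
    sed -e 's/ -m32//g' -e 's/ -m64//g'
}

echo 'INCLUDES64="-w -m64 -DLINUX -fPIC"' | strip_m_flags
```

The paths for vdb and java still have to be set by hand, since they depend on where the source was unpacked and which JDK build is installed.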

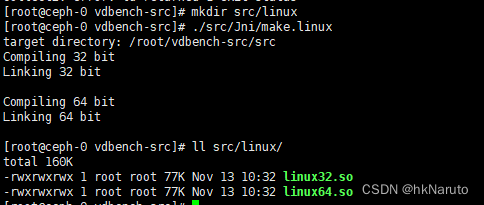

Compile

[root@ceph-0 vdbench-src]# mkdir src/linux

[root@ceph-0 vdbench-src]# ./src/Jni/make.linux

Copy the library into the installation directory

[root@ceph-0 vdbench-src]# cp -v src/linux/linux64.so ~/vdbench/linux/aarch64.so

'src/linux/linux64.so' -> '/root/vdbench/linux/aarch64.so'

[root@ceph-0 vdbench-src]# file /root/vdbench/linux/aarch64.so

/root/vdbench/linux/aarch64.so: ELF 64-bit LSB shared object, ARM aarch64, version 1 (SYSV), dynamically linked, BuildID[sha1]=d49dcabfff65992a8e8ac6b97bea67394190e3de, not stripped

Test vdbench on ceph-0 again (error)

cd ~/vdbench

./vdbench -f single-rawdisk.conf

Error: Raw device 'sd=sd1,lun=/dev/rbd0 ' does not exist, or no permissions.
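The message names two possible causes: the device does not exist, or the process lacks permission. A minimal sketch for checking both from the shell before rerunning vdbench follows; check_raw_device is a hypothetical helper. It may also be worth noting that the quoted name in the message ends with a space, matching the space before the comma in the sd line of the parameter file. Whether that is the actual cause is an assumption and was not verified here.

```shell
# Hypothetical helper: succeed only if the path is a block device
# that the current user can both read and write.
check_raw_device() {
    dev=$1
    [ -b "$dev" ] && [ -r "$dev" ] && [ -w "$dev" ]
}

check_raw_device /dev/rbd0 || echo "/dev/rbd0 missing or not accessible"
```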

Manual mount test: OK

dd test: OK

[root@ceph-0 src]# dd if=/dev/zero of=/mnt/rbd0/test.bin bs=1M count=1024 oflag=direct

1024+0 records in

1024+0 records out

1073741824 bytes (1.1 GB, 1.0 GiB) copied, 33.4447 s, 32.1 MB/s

The virtual machine's bandwidth is too low.
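The rate dd prints is simply bytes copied divided by elapsed time, in decimal megabytes. A short sketch that reproduces the figure from the output above; throughput_mbs is a hypothetical helper name.

```shell
# bytes copied / elapsed seconds, reported in MB/s (1 MB = 1000000 bytes)
throughput_mbs() {
    LC_ALL=C awk -v bytes="$1" -v secs="$2" \
        'BEGIN { printf "%.1f\n", bytes / secs / 1000000 }'
}

throughput_mbs 1073741824 33.4447   # prints 32.1
```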

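Pointing the vdbench sd lun at the mount directory instead: failed

This kind of test should use an fsd (file system definition) rather than an sd.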
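A sketch of what a file system parameter file could look like, modeled on the fsd/fwd/rd layout in the vdbench user manual and mirroring the raw-disk settings above (1 MB sequential writes, 600 seconds, 2 second interval). The file counts and sizes, the helper name write_fs_conf, and the output file name are assumptions for illustration and were not run as part of this post.

```shell
# Hypothetical helper: write a file system parameter file for vdbench.
# $1 = output file, $2 = mounted directory used as the fsd anchor
write_fs_conf() {
    cat > "$1" <<EOF
fsd=fsd1,anchor=$2,depth=1,width=1,files=4,size=256m
fwd=fwd1,fsd=fsd1,operation=write,xfersize=1m,fileio=sequential,fileselect=random,threads=1
rd=rd1,fwd=fwd1,fwdrate=max,format=yes,elapsed=600,interval=2
EOF
}

# Usage:
# write_fs_conf single-fs.conf /mnt/rbd0 && ./vdbench -f single-fs.conf
```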

References

[Featured] [Storage testing] vdbench storage performance testing tool (CSDN blog)

Ceph command notes (codeleading.com)

PG and PGP in Ceph (jianshu.com)

Troubleshooting: Ceph cluster warning "too many PGs per OSD", by 象飞田 (cnblogs.com)

vdbench shared library aarch64.so problem on ARM servers, by 阿星 (zhoumx.net)