目录

二、模拟登录方法-Requests模块Cookie实现登录

四、selenium使用基本代码

五、scrapy+selenium实现登录

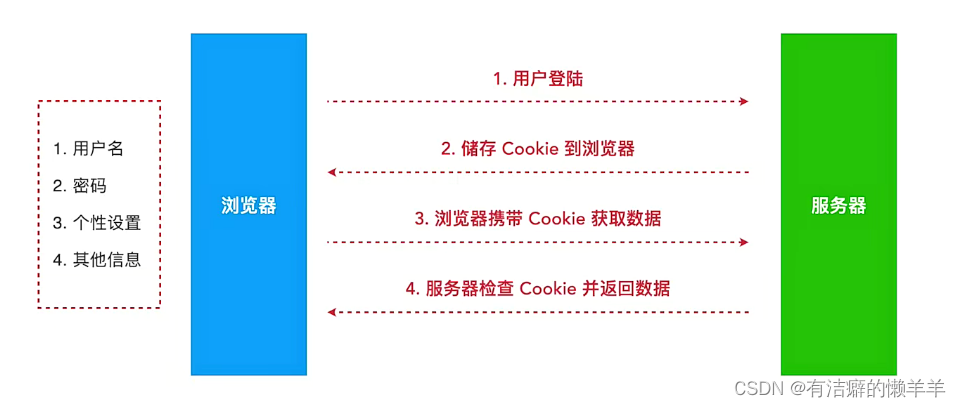

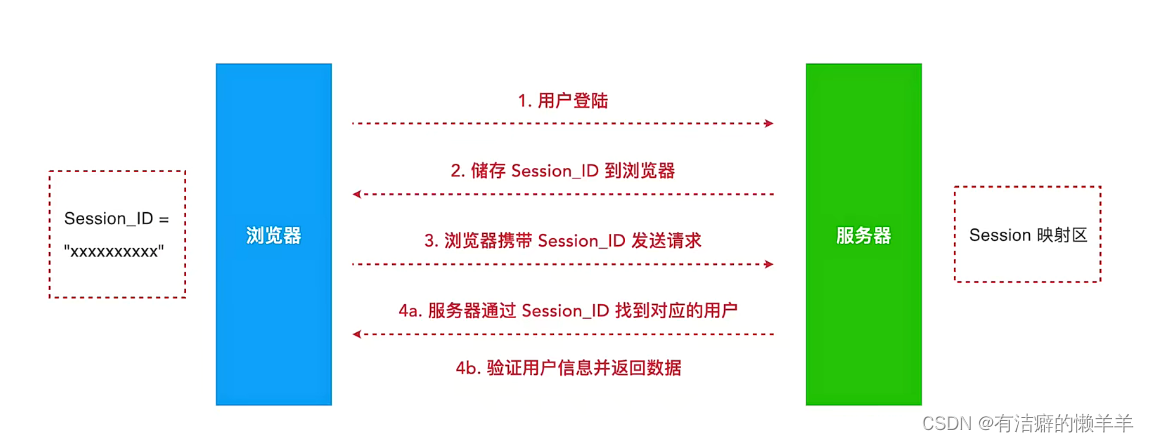

一、cookie和session实现登录原理

cookie:1.网站持久保存在浏览器中的数据2.可以是长期永久或者限时过期

session:1.保存在服务器中的一种映射关系2.在客户端以Cookie形式储存Session_ID

二、模拟登录方法-Requests模块Cookie实现登录

import requests

url = 'http://my.cheshi.com/user/'

headers = {

"User-Agent":"Mxxxxxxxxxxxxxxxxxxxxxx"

}

cookies = "pv_uid=16xxxxx;cheshi_UUID=01xxxxxxxxx;cheshi_pro_city=xxxxxxxxxxx"

cookies = {item.split("=")[0]:item.split("=")[1] for item in cookies.split(";")}

cookies = requests.utils.cookiejar_from_dict(cookies)

res = requests.get(url, headers=headers, cookies=cookies)

with open("./CO8L02.html","w") as f:

f.write(res.text)三、cookie+session实现登录并获取数据

如下两种方法:

import requests

url = "https://api.cheshi.com/services/common/api.php?api=login.Login"

headers = {

"User-Agent":"Mxxxxxxxxxxxxx"

}

data = {

"act":"login",

"xxxx":"xxxx"

........

}

res = requests.post(url, headers=headers, data=data)

print(res.cookies)

admin_url = "http://my.cheshi.com/user/"

admin_res = requests.get(admin_url, headers=headers, cookies=res.cookies)

with open("./C08L03.html","w") as f:

f.write(admin_res.text)import requests

url = "https://api.cheshi.com/services/common/api.php?api=login.Login"

headers = {

"User-Agent":"Mxxxxxxxxxxxxx"

}

data = {

"act":"login",

"xxxx":"xxxx"

........

}

session = requests.session()

session.post(url, headers=headers, data=data)

admin_url = "http://my.cheshi.com/user/"

admin_res = session.get(admin_url, headers=headers)

with open("./C08L03b.html","w") as f:

f.write(admin_res.text)四、selenium使用基本代码

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.by import By

# 实现交互的方法

from selenium.webdriver import ActionChains

import time

service = Service(executable_path="../_resources/chromedriver")

driver = webdriver.Chrome(service=service)

driver.get("http://www.cheshi.com/")

# print(drive.page_source)

# print(driver.current_url)

# with open("./C08L05.html", "w") as f:

# f.write(drive.page_source)

# # 屏幕截图

# driver.save_screenshot("C08L05.png")

"""元素定位方法."""

# 注意:不要在xpath里面写text()会报错

h1 = driver.find_element(By.XPATH, "//h1")

h1_text = h1.text

# 虽然是//p 但是text后只能拿到第一个元素,若拿所有元素需要用find_elements后循环遍历

#items = driver.find_element(By.XPATH, "//p")

# print(items.text)

items = driver.find_elements(By.XPATH, "//p")

print(items.text)

for item in items:

print(item.text)

p = driver.find_element(By.XPATH, '//p[@id="primary"]')

print(p.text)

print(p.get_attribute("another-attr"))

# 为了防止报错,可以用try,except方法防止中断程序

"""元素交互方法."""

username = driver.find_element(By.XPATH, '//*[@id="username"]')

password = driver.find_element(By.XPATH, '//*[@id="password"]')

ActionChains(driver).click(username).pause(1).send_keys("abcde").pause(0.5).perform()

ActionChains(driver).click(password).pause(1).send_keys("12345").pause(0.5).perform()

time.sleep(1)

div = driver.find_element(By.XPATH, '//*[@id="toHover"]')

ActionChains(driver).pause(0.5).move_to_element("div").pause(2).perform()

time.sleep(1)

div = driver.find_element(By.XPATH, '//*[@id="end"]')

ActionChains(driver).scroll_to_element("div").pause(2).perform()

time.sleep(1)

ActionChains(driver).scroll_by_amount(0,200).perform()

time.sleep(1)

time.sleep(2)

driver.quit()五、scrapy+selenium实现登录

在scrapy中的爬虫文件(app.py)中修改如下代码(两种方法):

import scrapy

class AppSpider(scrapy.Spider):

name = "app"

# allowed_domains = ["my.cheshi.com"]

# start_urls = ["http://my.cheshi.com/user/"]

def start_requests(self):

url = "http://my.cheshi.com/user/"

cookies = "pv_uid=16xxxxx;cheshi_UUID=01xxxxxxxxx;cheshi_pro_city=xxxxxxxxxxx"

cookies = {item.split("=")[0]:item.split("=")[1] for item in cookies.split(";")}

yield scrapy.Request(url=url, callback=self.parse,cookies=cookies)

def parse(self,response):

print(response.text)import scrapy

class AppSpider(scrapy.Spider):

name = "app"

# allowed_domains = ["my.cheshi.com"]

# start_urls = ["http://my.cheshi.com/user/"]

def start_requests(self):

url = "https://api.cheshi.com/services/common/api.php?api=login.Login"

data = {

"act":"login",

"xxxx":"xxxx"

........

}

yield scrapy.FormRequest(url=url, formdata=data,callback=self.parse)

def parse(self,response):

url = "http://my.cheshi.com/user/"

yield scrapy.Request(url=url, callback=self.parse_admin)

def parse_admin(self, response):

print(response.text)