ArXiv:https://arxiv.org/abs/1910.01108

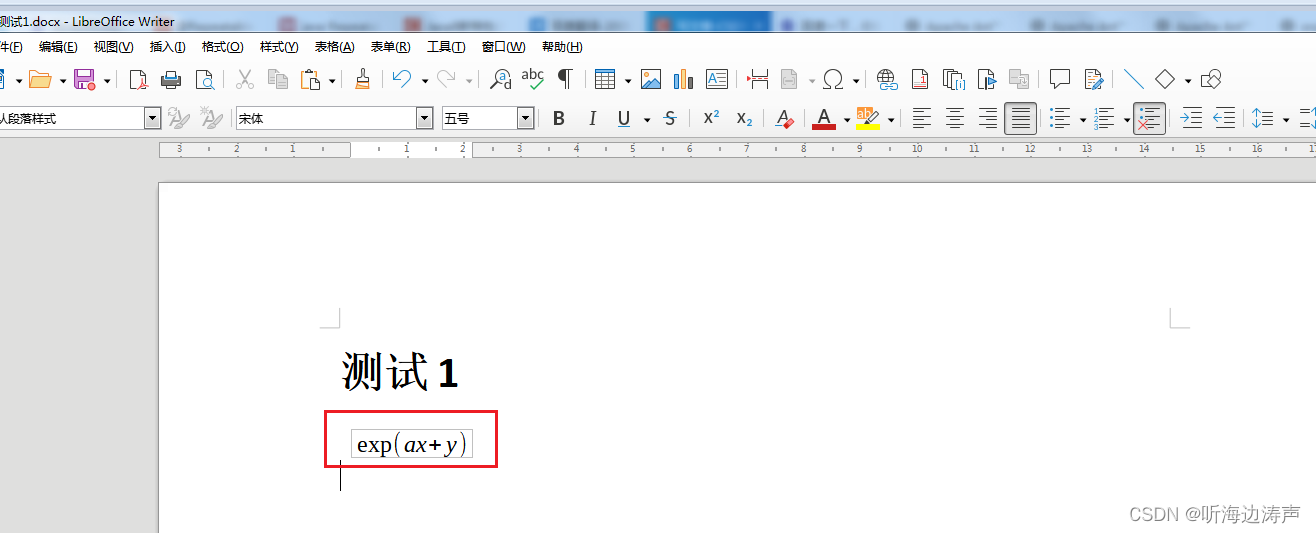

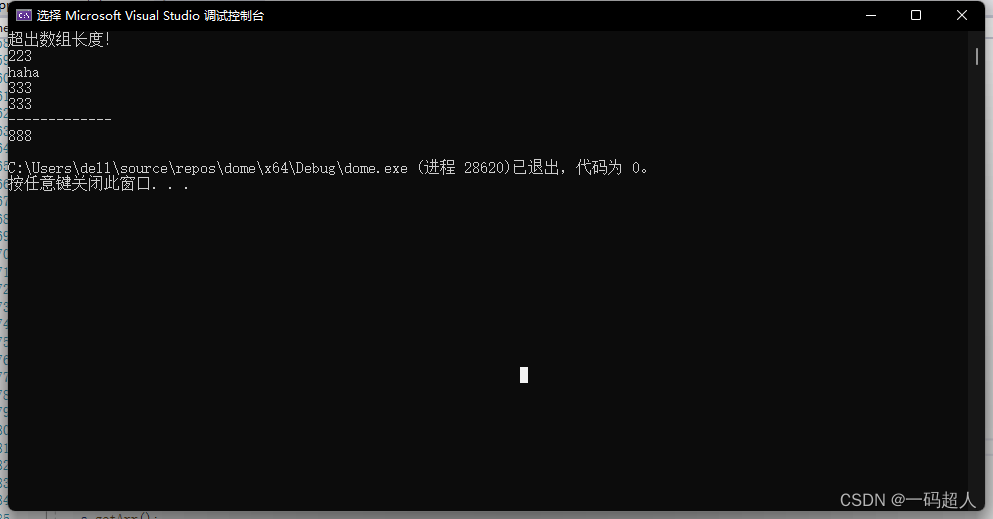

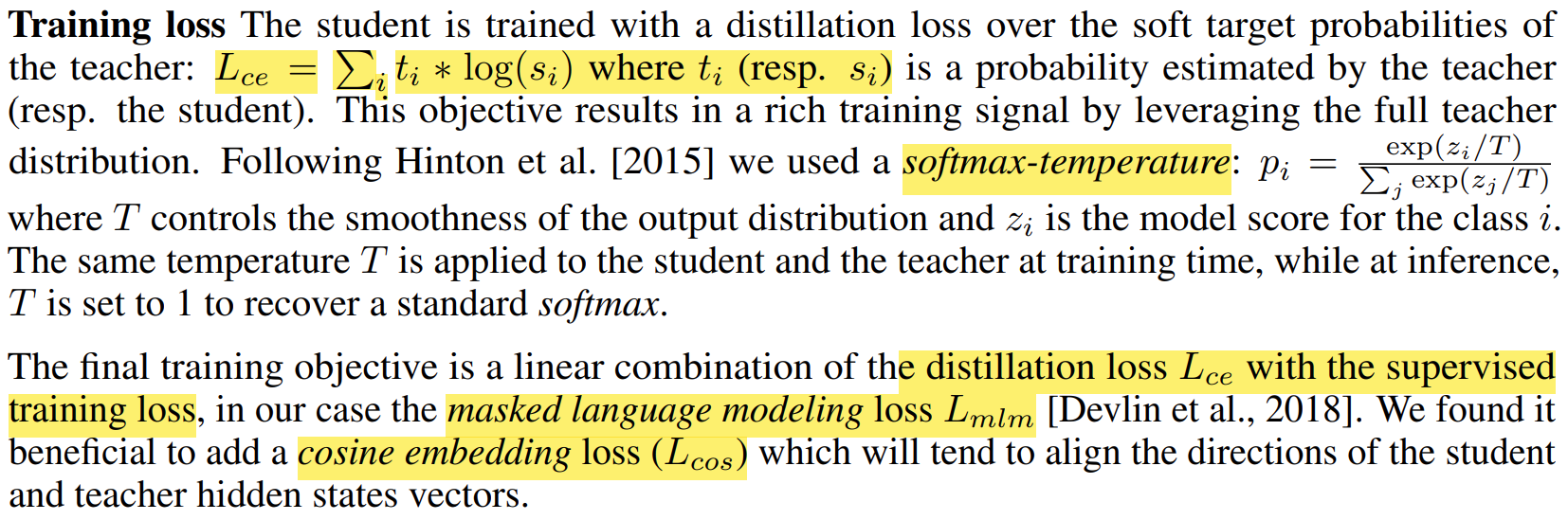

Train Loss:

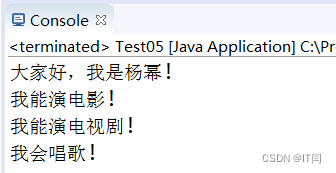

DistilBERT:

DistilBERT具有与BERT相同的一般结构,层数减少2倍,移除token类型嵌入和pooler。从老师那里取一层来初始化学生。

The token-type embeddings and the pooler are removed while the number of layers is reduced by a factor of 2. Most of the operations used in the Transformer architecture (linear layer and layer normalisation) are highly optimized in modern linear algebra frameworks。

we initialize the student from the teacher by taking one layer out of two.

大batch,4k,动态mask,去掉NSP

训练数据:和BERT一样