Converting the Official Pretrained Models

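- Download the yolov7 source code, unpack it locally, and set up the basic runtime environment.

- Download the official pretrained models:

  - yolov7-tiny.pt

  - yolov7.pt

  - …

- Enter the yolov7-main directory, create a folder named weights, and put the weight files downloaded in step 2 into it.

- Modify models/yolo.py, changing the detection head's forward method as follows: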

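    def forward(self, x):
        # x = x.copy()  # for profiling
        z = []  # inference output (unused after this change)
        self.training |= self.export
        for i in range(self.nl):
            # Apply each scale's output conv, then sigmoid; no box decoding here.
            x[i] = self.m[i](x[i]).sigmoid()  # conv
        # Return the three raw feature maps, one per detection scale.
        return x[0], x[1], x[2]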
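With this change the head no longer decodes boxes; it only returns the three sigmoid-activated feature maps, one per detection scale. As a rough sanity check, the sketch below computes the shapes those maps should have. It assumes the stock YOLOv7 layout (strides 8, 16 and 32, three anchors per scale, 80 COCO classes); the function name expected_head_shapes is made up for illustration and does not exist in the yolov7 repository.

```python
def expected_head_shapes(img_size=640, num_classes=80, num_anchors=3,
                         strides=(8, 16, 32), batch=1):
    """Return the (N, C, H, W) shape of each raw head output."""
    # Each anchor predicts 4 box values + 1 objectness + one score per class.
    channels = num_anchors * (num_classes + 5)
    return [(batch, channels, img_size // s, img_size // s) for s in strides]


# Print the shape expected for each of the three outputs.
for name, shape in zip(("out0", "out1", "out2"), expected_head_shapes()):
    print(name, shape)
```

If the exported model reports different shapes, the config file or the number of classes probably does not match these assumptions.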

- Create a new file named export_nnie.py:

import os

import torch

import onnx

from onnxsim import simplify

import onnxoptimizer

import argparse

from models.yolo import Detect, Model

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--weights', type=str, default='./weights/yolov7.pt', help='initial weights path')

parser.add_argument('--cfg', type=str, default='./cfg/deploy/yolov7.yaml', help='initial weights path')

#================================================================

opt = parser.parse_args()

print(opt)

#Save Only weights

ckpt = torch.load(opt.weights, map_location=torch.device('cpu'))

torch.save(ckpt['model'].state_dict(), opt.weights.replace(".pt", "-model.pt"))

#Load model without postprocessing

new_model = Model(opt.cfg)

new_model.load_state_dict(torch.load(opt.weights.replace(".pt", "-model.pt"), map_location=torch.device('cpu')), False)

new_model.eval()

#save to JIT script

example = torch.rand(1, 3, 640, 640)

traced_script_module = torch.jit.trace(new_model, example)

traced_script_module.save(opt.weights.replace(".pt", "-jit.pt"))

#save to onnx

f = opt.weights.replace(".pt", ".onnx")

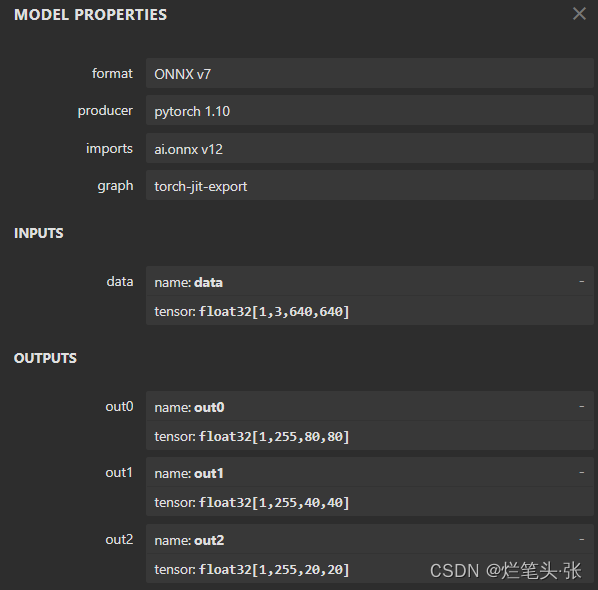

torch.onnx.export(new_model, example, f, verbose=False, opset_version=12,

training=torch.onnx.TrainingMode.EVAL,

do_constant_folding=True,

input_names=['data'],

output_names=['out0','out1','out2'])

#onnxsim

model_simp, check = simplify(f)

assert check, "Simplified ONNX model could not be validated"

onnx.save(model_simp, opt.weights.replace(".pt", "-sim.onnx"))

#optimize onnx

passes = ["extract_constant_to_initializer", "eliminate_unused_initializer"]

optimized_model = onnxoptimizer.optimize(model_simp, passes)

onnx.checker.check_model(optimized_model)

onnx.save(optimized_model, opt.weights.replace(".pt", "-op.onnx"))

print('finished exporting onnx')

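- Run python3 export_nnie.py from the command line. It uses yolov7.pt by default; pass --weights to select a different weights file and --cfg to select the model config file. On success it prints the following, and the converted models are saved in the same directory as the weights (the final model is the one with the -op.onnx suffix):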

Namespace(cfg='./cfg/deploy/yolov7.yaml', weights='./weights/yolov7.pt')

finished exporting onnx
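The script derives every output name from the weights path, so one run leaves several files next to the weights. The helper below is a small sketch that lists them; the function name and the dictionary keys are illustrative and not part of the script. It uses pathlib rather than str.replace, which would also rewrite a directory name that happens to contain .pt.

```python
from pathlib import Path


def exported_paths(weights):
    """Map each export stage to the file export_nnie.py writes for it."""
    p = Path(weights)
    stem = p.stem  # "yolov7" for "weights/yolov7.pt"
    suffixes = {
        "state_dict": "-model.pt",       # weights only
        "torchscript": "-jit.pt",        # traced JIT module
        "onnx": ".onnx",                 # raw ONNX export
        "onnx_simplified": "-sim.onnx",  # after onnxsim
        "onnx_optimized": "-op.onnx",    # after onnxoptimizer
    }
    return {stage: p.with_name(stem + suffix) for stage, suffix in suffixes.items()}


for stage, path in exported_paths("./weights/yolov7.pt").items():
    print(f"{stage:16s} {path}")
```

The onnx_optimized entry is the file used in the RKNN conversion step.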

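Deploying to an RKNN Board: Model Conversion

Prerequisites

- RKNN development environment (Python)

- rknn-toolkit2

Detailed Steps

- Go to the rknn-toolkit2/examples/onnx/yolov5 example directory.

- Edit test.py (adjust ONNX_MODEL, RKNN_MODEL, IMG_PATH, DATASET and the other settings as needed) and turn sigmoid into a pass-through, since the exported model already applies it: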

    def sigmoid(x):
        # The exported model already applies sigmoid in the head, so return the input unchanged.
        # return 1 / (1 + np.exp(-x))
        return x
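The reason for disabling sigmoid here is that the modified forward already calls .sigmoid() inside the exported model. Applying it a second time would squash every value into roughly the range 0.5 to 0.73, so confidence thresholds would stop separating objects from background. The NumPy sketch below illustrates the effect with made-up logits; the variable names are for illustration only.

```python
import numpy as np


def real_sigmoid(x):
    """Standard logistic function, as in the original test.py."""
    return 1.0 / (1.0 + np.exp(-x))


logits = np.array([-6.0, 0.0, 6.0])  # illustrative raw head values
once = real_sigmoid(logits)          # what the exported model already outputs
twice = real_sigmoid(once)           # what test.py would compute if left unchanged

print("applied once: ", once)
print("applied twice:", twice)
```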

- 命令行执行

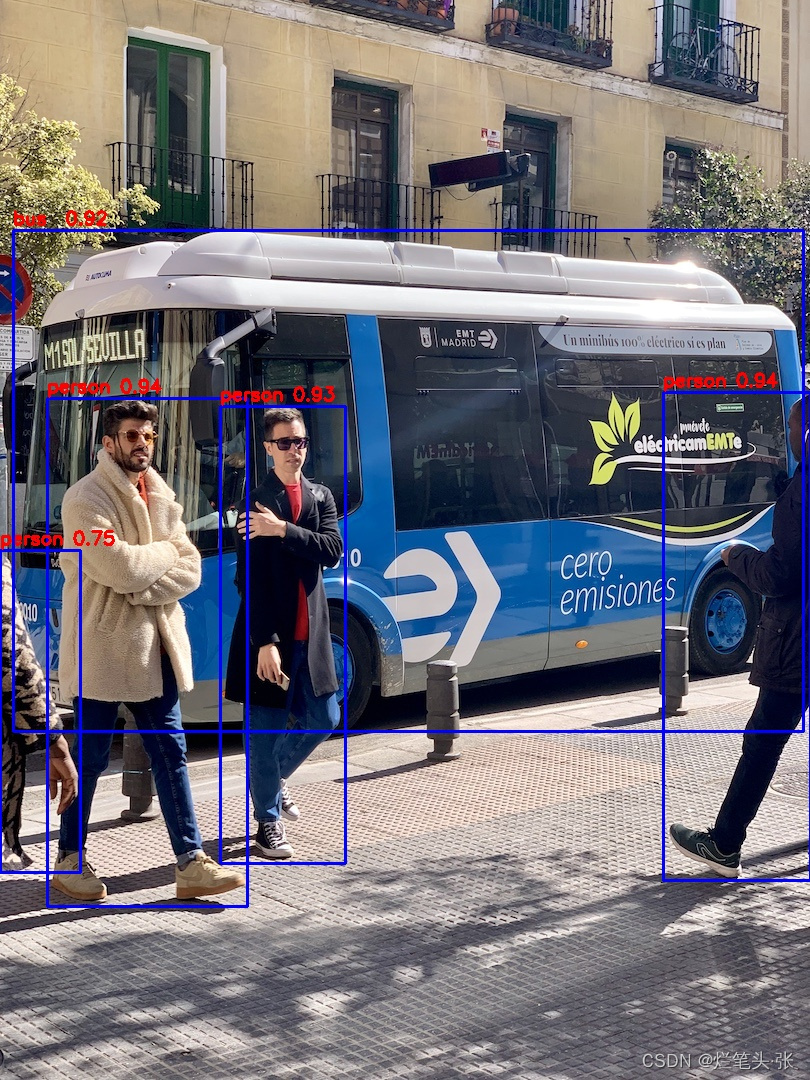

python3 test.py即可获取推理结果
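Because the exported model stops at the sigmoid, box decoding happens in the post-processing on the host. The sketch below shows the YOLOv5/YOLOv7-style decode for a single output map in NumPy. It approximates what the example's post-processing does rather than copying it; decode_head, its arguments, and the assumed input layout (1, na * (5 + nc), H, W) are illustrative.

```python
import numpy as np


def decode_head(out, anchors, stride):
    """Decode one sigmoid-activated head output into boxes in input pixels.

    out:     array of shape (1, na * (5 + nc), H, W)
    anchors: (na, 2) anchor widths/heights in pixels for this scale
    stride:  downsampling factor of this scale (for example 8, 16 or 32)
    Returns (boxes_xywh, objectness, class_scores), flattened per box.
    """
    anchors = np.asarray(anchors, dtype=np.float32)
    na = anchors.shape[0]
    _, c, h, w = out.shape
    no = c // na  # values per anchor: x, y, w, h, objectness, classes

    # (1, na*no, H, W) -> (na, H, W, no)
    y = out.reshape(na, no, h, w).transpose(0, 2, 3, 1)

    # Cell indices: gx varies along the width, gy along the height.
    gy, gx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    grid = np.stack((gx, gy), axis=-1).astype(np.float32)  # (H, W, 2)

    # Centre offsets lie in (-0.5, 1.5) around the cell; sizes scale the anchor.
    xy = (y[..., 0:2] * 2.0 - 0.5 + grid) * stride
    wh = (y[..., 2:4] * 2.0) ** 2 * anchors[:, None, None, :]

    boxes = np.concatenate((xy, wh), axis=-1).reshape(-1, 4)
    obj = y[..., 4].reshape(-1)
    cls = y[..., 5:].reshape(-1, no - 5)
    return boxes, obj, cls
```

The anchors for each scale come from the model's yaml config. The decoded boxes would still need confidence filtering and non-maximum suppression before they are drawn.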

Deploying to an RKNN Board: Loading and Running Inference on the NPU (C++)

The code will be released later.
