1:版本说明:

qt 5.12.10

opencv 4.5.3 (yolov5模型部署要求opencv>4.5.0)

2:检测的代码

yolo.h

#pragma once

#include<iostream>

#include<cmath>

#include<vector>

#include <opencv2/opencv.hpp>

#include<opencv2/dnn.hpp>

class yolo

{

public:

yolo() {}

~yolo(){}

bool readModel(cv::dnn::Net &net,std::string &netpath, bool isCuda);

struct Output

{

int id;//结果类别id

float confidence;//结果置信度

cv::Rect box;//矩形框

int ship_id;//船的id

int truck_id;//人的id

int person_id;//人的id

int staring_id;//车的id

int forklift_id;//车的id

int unload_car_id;//船的id

int load_car_id;//人的id

int private_car_id;//车的id

};

struct Output_max_confidence

{

int id;//结果类别id

float confidence;//结果置信度

cv::Rect box;//矩形框

};

int ship_num;//总人数

int car_trucks_num;//总车数

int person_num;//总船数

int stacking_area_num;//总人数

int car_forklift_num;//总车数

int unload_car_num;//总船数

int load_car_num;//总人数

int car_private_num;//总车数

bool Detect(cv::Mat &SrcImg, cv::dnn::Net &net, std::vector<Output> &output);

void drawPred(cv::Mat &img, std::vector<Output> result, std::vector<cv::Scalar> color);

//参数为私有参数,当然也可以是设置成公开或者保护。

Output_max_confidence get_only_one_max_confidence(std::vector<Output> result);

float findMax(std::vector<float> vec);

int getPositionOfMax(std::vector<float> vec, int max);

\

void drawRect(cv::Mat &img, yolo::Output_max_confidence result);

private:

//计算归一化函数

float Sigmoid(float x) {

return static_cast<float>(1.f / (1.f + exp(-x)));

}

//anchors

const float netAnchors[3][6] = { { 10.0, 13.0, 16.0, 30.0, 33.0, 23.0 },{ 30.0, 61.0, 62.0, 45.0, 59.0, 119.0 },{ 116.0, 90.0, 156.0, 198.0, 373.0, 326.0 } };

//stride

const float netStride[3] = { 8.0, 16.0, 32.0 };

const int netWidth = 640; //网络模型输入大小

const int netHeight = 640;

float nmsThreshold = 0.02;

float boxThreshold = 0.05;

float classThreshold = 0.45;

//类名

// std::vector<std::string> className = { "car", "person"};

// std::vector<std::string> className = { "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",

// "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",

// "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",

// "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",

// "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",

// "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",

// "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",

// "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",

// "hair drier", "toothbrush"};

std::vector<std::string> className = { "ship","car_trucks","person","stacking_area","car_forklift","unload_car","load_car","car_private"};//"car","person"

};

yolo.cpp

#include "yolo.h"

#include<iostream>

#include<cmath>

#include<vector>

#include <opencv2/opencv.hpp>

#include<opencv2/dnn.hpp>

using namespace std;

using namespace cv;

using namespace dnn;

#pragma execution_character_set("utf-8");

bool yolo::readModel(Net &net, string &netPath, bool isCuda = false) {

try {

net = readNetFromONNX(netPath);

cout<<"load net successfull!"<<endl;

}

catch (const std::exception&) {

return false;

}

//cuda

if (isCuda) {

net.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);

net.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA);

}

//cpu

else {

net.setPreferableBackend(cv::dnn::DNN_BACKEND_DEFAULT);

net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);

}

return true;

}

bool yolo::Detect(Mat &SrcImg, Net &net, vector<Output> &output) {

Mat blob;

int col = SrcImg.cols;

int row = SrcImg.rows;

int maxLen = MAX(col, row);

Mat netInputImg = SrcImg.clone();

if (maxLen > 1.2*col || maxLen > 1.2*row) {

Mat resizeImg = Mat::zeros(maxLen, maxLen, CV_8UC3);

SrcImg.copyTo(resizeImg(Rect(0, 0, col, row)));

netInputImg = resizeImg;

}

blobFromImage(netInputImg, blob, 1 / 255.0, cv::Size(netWidth, netHeight), cv::Scalar(104, 117, 123), true, false);

blobFromImage(netInputImg, blob, 1 / 255.0, cv::Size(netWidth, netHeight), cv::Scalar(0, 0,0), true, false);//如果训练集未对图片进行减去均值操作,则需要设置为这句

//blobFromImage(netInputImg, blob, 1 / 255.0, cv::Size(netWidth, netHeight), cv::Scalar(114, 114,114), true, false);

net.setInput(blob);

std::vector<cv::Mat> netOutputImg;

//vector<string> outputLayerName{"345","403", "461","output" };

//net.forward(netOutputImg, outputLayerName[3]); //获取output的输出

net.forward(netOutputImg, net.getUnconnectedOutLayersNames());

//接上面

std::vector<int> classIds;//结果id数组

std::vector<float> confidences;//结果每个id对应置信度数组

std::vector<cv::Rect> boxes;//每个id矩形框

std::vector<int> ship_id;//记录下人数的id号码----补充

std::vector<int> trucks_id;//记录下车数的id号码----补充

std::vector<int> person_id;//记录下人数的id号码----补充

std::vector<int> stacking_id;//记录下车数的id号码----补充

std::vector<int> forklift_id;//记录下人数的id号码----补充

std::vector<int> unload_car_id;//记录下车数的id号码----补充

std::vector<int> load_car_id;//记录下人数的id号码----补充

std::vector<int> car_private_id;//记录下车数的id号码----补充

float ratio_h = (float)netInputImg.rows / netHeight;

float ratio_w = (float)netInputImg.cols / netWidth;

int net_width = className.size() + 5; //输出的网络宽度是类别数+5

float* pdata = (float*)netOutputImg[0].data;

for (int stride = 0; stride < 3; stride++) { //stride

int grid_x = (int)(netWidth / netStride[stride]);

int grid_y = (int)(netHeight / netStride[stride]);

for (int anchor = 0; anchor < 3; anchor++) { //anchors

const float anchor_w = netAnchors[stride][anchor * 2];

const float anchor_h = netAnchors[stride][anchor * 2 + 1];

for (int i = 0; i < grid_y; i++) {

for (int j = 0; j < grid_y; j++) {

//float box_score = Sigmoid(pdata[4]);//获取每一行的box框中含有某个物体的概率 yolo5.0

float box_score = pdata[4];//获取每一行的box框中含有某个物体的概率 yolo6.0

if (box_score > boxThreshold) {

//为了使用minMaxLoc(),将85长度数组变成Mat对象

cv::Mat scores(1, className.size(), CV_32FC1, pdata + 5);

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

//max_class_socre = Sigmoid((float)max_class_socre); //yolo 5.0

max_class_socre = (float)max_class_socre; //yolo 6.0

if (max_class_socre > classThreshold) {

//rect [x,y,w,h]

//yolov5 5.0格式

//float x = (Sigmoid(pdata[0]) * 2.f - 0.5f + j) * netStride[stride]; //x

//float y = (Sigmoid(pdata[1]) * 2.f - 0.5f + i) * netStride[stride]; //y

//float w = powf(Sigmoid(pdata[2]) * 2.f, 2.f) * anchor_w; //w

//float h = powf(Sigmoid(pdata[3]) * 2.f, 2.f) * anchor_h; //h

//yolov5 6.0格式:

float x = pdata[0];// (Sigmoid(pdata[0]) * 2.f - 0.5f + j) * netStride[stride]; //x

float y = pdata[1];// (Sigmoid(pdata[1]) * 2.f - 0.5f + i) * netStride[stride]; //y

float w = pdata[2];// powf(Sigmoid(pdata[2]) * 2.f, 2.f) * anchor_w; //w

float h = pdata[3];// powf(Sigmoid(pdata[3]) * 2.f, 2.f) * anchor_h; //h

int left = (x - 0.5*w)*ratio_w;

int top = (y - 0.5*h)*ratio_h;

classIds.push_back(classIdPoint.x);

confidences.push_back(max_class_socre*box_score);

boxes.push_back(Rect(left, top, int(w*ratio_w), int(h*ratio_h)));

}

}

pdata += net_width;//指针移到下一行

}

}

}

}

//接上面执行非最大抑制以消除具有较低置信度的冗余重叠框(NMS)

vector<int> nms_result;

int ship_id_ini=0;//初始化人数,

int trucks_id_ini=0;//初始化人数,

int person_id_ini=0;//初始化车辆数

int stacking_id_ini=0;//初始化车辆数

int forklift_id_ini=0;//初始化车辆数

int unload_car_id_ini=0;//初始化车辆数

int load_car_id_ini=0;//初始化车辆数

int car_private_id_ini=0;//初始化车辆数

NMSBoxes(boxes, confidences, classThreshold, nmsThreshold, nms_result);

for (int i = 0; i < nms_result.size(); i++) {

int idx = nms_result[i];

Output result;

result.id = classIds[idx];

result.confidence = confidences[idx];

result.box = boxes[idx];

output.push_back(result);

if(result.id==0)//当类别数等于船的时候

{

result.ship_id=ship_id_ini;//当船等于

ship_id_ini=ship_id_ini+1;

}

if(result.id==1)//当类别数等于车的时候

{

result.truck_id=trucks_id_ini;//当车数等于

trucks_id_ini=trucks_id_ini+1;

}

if(result.id==2)//当类别数等于人的时候

{

result.person_id=person_id_ini;//当人数等于

person_id_ini=person_id_ini+1;

}if(result.id==3)//当类别数等于人的时候

{

result.staring_id=stacking_id_ini;//当人数等于

stacking_id_ini=stacking_id_ini+1;

}

if(result.id==4)//当类别数等于人的时候

{

result.forklift_id=forklift_id_ini;//当人数等于

forklift_id_ini=forklift_id_ini+1;

}

if(result.id==5)//当类别数等于人的时候

{

result.unload_car_id=unload_car_id_ini;//当人数等于

unload_car_id_ini=unload_car_id_ini+1;

}

if(result.id==6)//当类别数等于人的时候

{

result.load_car_id=load_car_id_ini;//当人数等于

load_car_id_ini=load_car_id_ini+1;

}

if(result.id==7)//当类别数等于人的时候

{

result.private_car_id=car_private_id_ini;//当人数等于

car_private_id_ini=car_private_id_ini+1;

}

}

ship_num=ship_id_ini;

car_trucks_num=trucks_id_ini;

person_num=person_id_ini;

stacking_area_num=stacking_id_ini;

car_forklift_num=forklift_id_ini ;//总车数

unload_car_num=unload_car_id_ini;//总船数

load_car_num=load_car_id_ini;//总人数

car_private_num=car_private_id_ini;//总车数

if (output.size())

return true;

else

return false;

}//这个括号是最后

void yolo::drawPred(Mat &img, vector<Output> result, vector<Scalar> color)

{

for (int i = 0; i < result.size(); i++)

{

int left, top;

left = result[i].box.x;

top = result[i].box.y;

int color_num = i;

rectangle(img, result[i].box, color[result[i].id], 2, 8);

// string label = className[result[i].id] + ":" + to_string(result[i].confidence)+" id:"+to_string(result[i].person_id);

string label;

if(result[i].id==0)

{

label = className[result[i].id] ;

}

if(result[i].id==1)

{

label = className[result[i].id] ;

}

if(result[i].id==2)

{

label = className[result[i].id] ;

}

if(result[i].id==3)

{

label = className[result[i].id] ;

}

if(result[i].id==4)

{

label = className[result[i].id] ;

}

if(result[i].id==5)

{

label = className[result[i].id] ;

}

if(result[i].id==6)

{

label = className[result[i].id] ;

}

if(result[i].id==7)

{

label = className[result[i].id];

}

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

//rectangle(frame, Point(left, top - int(1.5 * labelSize.height)), Point(left + int(1.5 * labelSize.width), top + baseLine), Scalar(0, 255, 0), FILLED);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, color[result[i].id], 2);

}

//imshow("res", img);

//imwrite("./result.jpg", img);

//waitKey();

//destroyAllWindows();

}

//这个是针对悍马的画框

void yolo::drawRect(cv::Mat &img, yolo::Output_max_confidence result)

{

int left, top;

left = result.box.x;

top = result.box.y;

rectangle(img, result.box,Scalar(0,0,255) , 2, 8);

// string label = className[result[i].id] + ":" + to_string(result[i].confidence)+" id:"+to_string(result[i].person_id);

string label;

if(result.id==0)

{

label = className[result.id];

}

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

//rectangle(frame, Point(left, top - int(1.5 * labelSize.height)), Point(left + int(1.5 * labelSize.width), top + baseLine), Scalar(0, 255, 0), FILLED);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 2,Scalar(0,0,255), 2);

}

float yolo::findMax(std::vector<float> vec) {

float max = -999;

for (auto v : vec) {

if (max < v) max = v;

}

return max;

}

int yolo::getPositionOfMax(std::vector<float> vec, int max) {

auto distance = find(vec.begin(), vec.end(), max);

return distance - vec.begin();

}

//返回一个最大置信度的框

yolo::Output_max_confidence yolo::get_only_one_max_confidence(std::vector<Output> result)

{

std::vector<float>confidence;

for (int i = 0; i < result.size(); i++)

{

confidence.push_back(result.at(i).confidence);

// cout<<"result.at(i).confidence"<<result.at(i).confidence<<endl;

}

float maxConfidence=findMax(confidence);

int position = result.size()-getPositionOfMax(confidence, maxConfidence);

// cout<<"max_confidengce"<<maxConfidence<<"position:"<<position<<endl;

Output_max_confidence o_m_c;

o_m_c.confidence=maxConfidence;

o_m_c.id=position;

o_m_c.box=result.at(position).box;

return o_m_c ;

}

检测的调用代码测试案例

这段调用的例子,只要把frame 改成你们自己的图片即可

yolo test; //创建yolo类

cv::dnn::Net net; //创建yolo网络

vector< cv::Scalar> color;//Bounding Box颜色

QString Filename_onnx="quanjingbest.onnx";

cv::String filename_onnx=Filename_onnx.toStdString();

vector<yolo::Output>result_video;

test.readModel(net,filename_onnx,false);

for (int i = 0; i < 80; i++) {

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

color.push_back( cv::Scalar(b, g, r));

}

cv::Mat frame=cv::imread("D://1.jpg");

if(test.Detect(frame, net, result_video)) //调用YOLO模型检测

test.drawPred(frame, result_video, color);

cv::imshow("a",frame);

cv::waitKey(1);4:分割的主要代码

YoloSeg.h

#ifndef YOLO_SEG_H

#define YOLO_SEG_H

#pragma once

#include<iostream>

#include<opencv2/opencv.hpp>

#include "yolov5_seg_utils.h"

class YoloSeg {

public:

YoloSeg() {

}

~YoloSeg() {}

/** \brief Read onnx-model

* \param[out] read onnx file into cv::dnn::Net

* \param[in] modelPath:onnx-model path

* \param[in] isCuda:if true and opencv built with CUDA(cmake),use OpenCV-GPU,else run it on cpu.

*/

bool ReadModel(cv::dnn::Net& net, std::string& netPath, bool isCuda);

/** \brief detect.

* \param[in] srcImg:a 3-channels image.

* \param[out] output:detection results of input image.

*/

bool Detect(cv::Mat& srcImg, cv::dnn::Net& net, std::vector<OutputSeg>& output);

#if(defined YOLO_P6 && YOLO_P6==true)

const int _netWidth = 1280; //ONNX图片输入宽度

const int _netHeight = 1280; //ONNX图片输入高度

const int _segWidth = 320; //_segWidth=_netWidth/mask_ratio

const int _segHeight = 320;

const int _segChannels = 32;

#else

const int _netWidth = 640; //ONNX图片输入宽度

const int _netHeight = 640; //ONNX图片输入高度

const int _segWidth = 160; //_segWidth=_netWidth/mask_ratio

const int _segHeight = 160;

const int _segChannels = 32;

#endif // YOLO_P6

float _classThreshold = 0.25;

float _nmsThreshold = 0.45;

float _maskThreshold = 0.5;

public:

std::vector<std::string> _className = { "steel"};//类别名,换成自己的模型需要修改此项

};

#endif // YOLO_SEG_H

YoloSeg.cpp

#include "yolo_seg.h"

#include"yolo_seg.h"

using namespace std;

using namespace cv;

using namespace cv::dnn;

bool YoloSeg::ReadModel(Net& net, string& netPath, bool isCuda = false) {

try {

net = readNet(netPath);

#if CV_VERSION_MAJOR==4 &&CV_VERSION_MINOR==7&&CV_VERSION_REVISION==0

net.enableWinograd(false); //bug of opencv4.7.x in AVX only platform ,https://github.com/opencv/opencv/pull/23112 and https://github.com/opencv/opencv/issues/23080

//net.enableWinograd(true); //If your CPU supports AVX2, you can set it true to speed up

#endif

}

catch (const std::exception&) {

return false;

}

if (isCuda) {

//cuda

net.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);

net.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA); //or DNN_TARGET_CUDA_FP16

}

else {

//cpu

cout << "Inference device: CPU" << endl;

net.setPreferableBackend(cv::dnn::DNN_BACKEND_DEFAULT);

net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);

}

return true;

}

bool YoloSeg::Detect(Mat& srcImg, Net& net, vector<OutputSeg>& output) {

Mat blob;

output.clear();

int col = srcImg.cols;

int row = srcImg.rows;

Mat netInputImg;

Vec4d params;

LetterBox(srcImg, netInputImg, params, cv::Size(_netWidth, _netHeight));

blobFromImage(netInputImg, blob, 1 / 255.0, cv::Size(_netWidth, _netHeight), cv::Scalar(0, 0, 0), true, false);

//**************************************************************************************************************************************************/

//如果在其他设置没有问题的情况下但是结果偏差很大,可以尝试下用下面两句语句

// If there is no problem with other settings, but results are a lot different from Python-onnx , you can try to use the following two sentences

//

//$ blobFromImage(netInputImg, blob, 1 / 255.0, cv::Size(_netWidth, _netHeight), cv::Scalar(104, 117, 123), true, false);

//$ blobFromImage(netInputImg, blob, 1 / 255.0, cv::Size(_netWidth, _netHeight), cv::Scalar(114, 114,114), true, false);

//****************************************************************************************************************************************************/

net.setInput(blob);

std::vector<cv::Mat> net_output_img;

//*********************************************************************************************************************************

//net.forward(net_output_img, net.getUnconnectedOutLayersNames());

//opencv4.5.x和4.6.x这里输出不一致,推荐使用下面的固定名称输出

// 如果使用net.forward(net_output_img, net.getUnconnectedOutLayersNames()),需要确认下net.getUnconnectedOutLayersNames()返回值中output0在前,output1在后,否者出错

//

// The outputs of opencv4.5.x and 4.6.x are inconsistent.Please make sure "output0" is in front of "output1" if you use net.forward(net_output_img, net.getUnconnectedOutLayersNames())

//*********************************************************************************************************************************

vector<string> output_layer_names{ "output0","output1" };

net.forward(net_output_img, output_layer_names); //获取output的输出

std::vector<int> class_ids;//结果id数组

std::vector<float> confidences;//结果每个id对应置信度数组

std::vector<cv::Rect> boxes;//每个id矩形框

std::vector<vector<float>> picked_proposals; //output0[:,:, 5 + _className.size():net_width]===> for mask

int net_width = _className.size() + 5 + _segChannels;// 80 + 5 + 32 = 117

int out0_width= net_output_img[0].size[2];

// assert(net_width == out0_width, "Error Wrong number of _className or _segChannels"); //模型类别数目不对或者_segChannels设置错误

int net_height = net_output_img[0].size[1];// 25200

float* pdata = (float*)net_output_img[0].data;

for (int r = 0; r < net_height; r++) { //lines

float box_score = pdata[4];

if (box_score >= _classThreshold) {

cv::Mat scores(1, _className.size(), CV_32FC1, pdata + 5); // 可是 后面不只是有80个类别的概率;

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

max_class_socre = (float)max_class_socre;

if (max_class_socre >= _classThreshold) {

vector<float> temp_proto(pdata + 5 + _className.size(), pdata + net_width); // Mask Coeffcients,mask的掩码系数

picked_proposals.push_back(temp_proto);

//rect [x,y,w,h]

float x = (pdata[0] - params[2]) / params[0]; //x

float y = (pdata[1] - params[3]) / params[1]; //y

float w = pdata[2] / params[0]; //w

float h = pdata[3] / params[1]; //h

int left = MAX(int(x - 0.5 * w + 0.5), 0);

int top = MAX(int(y - 0.5 * h + 0.5), 0);

class_ids.push_back(classIdPoint.x);

confidences.push_back(max_class_socre * box_score);

boxes.push_back(Rect(left, top, int(w + 0.5), int(h + 0.5)));

}

}

pdata += net_width;//下一行

}

//NMS

vector<int> nms_result;

cv::dnn::NMSBoxes(boxes, confidences, _classThreshold, _nmsThreshold, nms_result);

std::vector<vector<float>> temp_mask_proposals;

Rect holeImgRect(0, 0, srcImg.cols, srcImg.rows);

for (int i = 0; i < nms_result.size(); ++i) {

int idx = nms_result[i];

OutputSeg result;

result.id = class_ids[idx];

result.confidence = confidences[idx];

result.box = boxes[idx] & holeImgRect;

temp_mask_proposals.push_back(picked_proposals[idx]);

output.push_back(result);

}

MaskParams mask_params;

mask_params.params = params;

mask_params.srcImgShape = srcImg.size();

for (int i = 0; i < temp_mask_proposals.size(); ++i) {

GetMask2(Mat(temp_mask_proposals[i]).t(), net_output_img[1], output[i], mask_params); // 注意这里是net_output_img[1],为原型mask

}

//******************** ****************

// 老版本的方案,如果上面GetMask2出错,建议使用这个。

// If the GetMask2() still reports errors , it is recommended to use GetMask().

// Mat mask_proposals;

//for (int i = 0; i < temp_mask_proposals.size(); ++i)

// mask_proposals.push_back(Mat(temp_mask_proposals[i]).t());

//GetMask(mask_proposals, net_output_img[1], output, mask_params);

//*****************************************************/

if (output.size())

return true;

else

return false;

}

yolov5_seg_utils.h

#ifndef YOLOV5_SEG_UTILS_H

#define YOLOV5_SEG_UTILS_H

#pragma once

#include<iostream>

#include <numeric>

#include<opencv2/opencv.hpp>

#define YOLO_P6 false //是否使用P6模型

#define ORT_OLD_VISON 12 //ort1.12.0 之前的版本为旧版本API

struct OutputSeg {

int id; //结果类别id

float confidence; //结果置信度

cv::Rect box; //矩形框

cv::Mat boxMask; //矩形框内mask,节省内存空间和加快速度

};

struct MaskParams {

int segChannels = 32;

int segWidth = 160;

int segHeight = 160;

int netWidth = 640;

int netHeight = 640;

float maskThreshold = 0.5;

cv::Size srcImgShape;

cv::Vec4d params;

};

bool CheckParams(int netHeight, int netWidth, const int* netStride, int strideSize);

void DrawPred(cv::Mat& img, std::vector<OutputSeg> result, std::vector<std::string> classNames, std::vector<cv::Scalar> color);

void LetterBox(const cv::Mat& image, cv::Mat& outImage,

cv::Vec4d& params, //[ratio_x,ratio_y,dw,dh]

const cv::Size& newShape = cv::Size(640, 640),

bool autoShape = false,

bool scaleFill = false,

bool scaleUp = true,

int stride = 32,

const cv::Scalar& color = cv::Scalar(114, 114, 114));

void GetMask(const cv::Mat& maskProposals, const cv::Mat& maskProtos, std::vector<OutputSeg>& output, const MaskParams& maskParams);

void GetMask2(const cv::Mat& maskProposals, const cv::Mat& maskProtos, OutputSeg& output, const MaskParams& maskParams);

#endif // YOLOV5_SEG_UTILS_H

yolov5_seg_utils.cpp

#include "yolov5_seg_utils.h"

#pragma once

#include "yolov5_seg_utils.h"

using namespace cv;

using namespace std;

bool CheckParams(int netHeight, int netWidth, const int* netStride, int strideSize) {

if (netHeight % netStride[strideSize - 1] != 0 || netWidth % netStride[strideSize - 1] != 0)

{

cout << "Error:_netHeight and _netWidth must be multiple of max stride " << netStride[strideSize - 1] << "!" << endl;

return false;

}

return true;

}

void LetterBox(const cv::Mat& image, cv::Mat& outImage, cv::Vec4d& params, const cv::Size& newShape,

bool autoShape, bool scaleFill, bool scaleUp, int stride, const cv::Scalar& color)

{

if (false) {

int maxLen = MAX(image.rows, image.cols);

outImage = Mat::zeros(Size(maxLen, maxLen), CV_8UC3);

image.copyTo(outImage(Rect(0, 0, image.cols, image.rows)));

params[0] = 1;

params[1] = 1;

params[3] = 0;

params[2] = 0;

}

cv::Size shape = image.size();

float r = std::min((float)newShape.height / (float)shape.height,

(float)newShape.width / (float)shape.width);

if (!scaleUp)

r = std::min(r, 1.0f);

float ratio[2]{ r, r };

int new_un_pad[2] = { (int)std::round((float)shape.width * r),(int)std::round((float)shape.height * r) };

auto dw = (float)(newShape.width - new_un_pad[0]);

auto dh = (float)(newShape.height - new_un_pad[1]);

if (autoShape)

{

dw = (float)((int)dw % stride);

dh = (float)((int)dh % stride);

}

else if (scaleFill)

{

dw = 0.0f;

dh = 0.0f;

new_un_pad[0] = newShape.width;

new_un_pad[1] = newShape.height;

ratio[0] = (float)newShape.width / (float)shape.width;

ratio[1] = (float)newShape.height / (float)shape.height;

}

dw /= 2.0f;

dh /= 2.0f;

if (shape.width != new_un_pad[0] && shape.height != new_un_pad[1])

{

cv::resize(image, outImage, cv::Size(new_un_pad[0], new_un_pad[1]));

}

else {

outImage = image.clone();

}

int top = int(std::round(dh - 0.1f));

int bottom = int(std::round(dh + 0.1f));

int left = int(std::round(dw - 0.1f));

int right = int(std::round(dw + 0.1f));

params[0] = ratio[0];

params[1] = ratio[1];

params[2] = left;

params[3] = top;

cv::copyMakeBorder(outImage, outImage, top, bottom, left, right, cv::BORDER_CONSTANT, color);

}

void GetMask(const cv::Mat& maskProposals, const cv::Mat& maskProtos, std::vector<OutputSeg>& output, const MaskParams& maskParams) {

//cout << maskProtos.size << endl;

int seg_channels = maskParams.segChannels;

int net_width = maskParams.netWidth;

int seg_width = maskParams.segWidth;

int net_height = maskParams.netHeight;

int seg_height = maskParams.segHeight;

float mask_threshold = maskParams.maskThreshold;

Vec4f params = maskParams.params;

Size src_img_shape = maskParams.srcImgShape;

Mat protos = maskProtos.reshape(0, { seg_channels,seg_width * seg_height });

Mat matmul_res = (maskProposals * protos).t();

Mat masks = matmul_res.reshape(output.size(), { seg_width,seg_height });

vector<Mat> maskChannels;

split(masks, maskChannels);

for (int i = 0; i < output.size(); ++i) {

Mat dest, mask;

//sigmoid

cv::exp(-maskChannels[i], dest);

dest = 1.0 / (1.0 + dest);

Rect roi(int(params[2] / net_width * seg_width), int(params[3] / net_height * seg_height), int(seg_width - params[2] / 2), int(seg_height - params[3] / 2));

dest = dest(roi);

resize(dest, mask, src_img_shape, INTER_NEAREST);

//crop

Rect temp_rect = output[i].box;

mask = mask(temp_rect) > mask_threshold;

output[i].boxMask = mask;

}

}

void GetMask2(const Mat& maskProposals, const Mat& mask_protos, OutputSeg& output, const MaskParams& maskParams) {

int seg_channels = maskParams.segChannels;

int net_width = maskParams.netWidth;

int seg_width = maskParams.segWidth;

int net_height = maskParams.netHeight;

int seg_height = maskParams.segHeight;

float mask_threshold = maskParams.maskThreshold;

Vec4f params = maskParams.params;

Size src_img_shape = maskParams.srcImgShape;

Rect temp_rect = output.box;

// 把已经到原图的检测框坐标信息 映射到 获得mask原型分支的输入尺寸上【160, 160】

int rang_x = floor((temp_rect.x * params[0] + params[2]) / net_width * seg_width);

int rang_y = floor((temp_rect.y * params[1] + params[3]) / net_height * seg_height);

int rang_w = ceil(((temp_rect.x + temp_rect.width) * params[0] + params[2]) / net_width * seg_width) - rang_x;

int rang_h = ceil(((temp_rect.y + temp_rect.height) * params[0] + params[3]) / net_width * seg_height) - rang_y;

//

rang_w = MAX(rang_w, 1);

rang_h = MAX(rang_h, 1);

if (rang_x + rang_w > seg_width){

if (seg_width - rang_x > 0)

rang_w =seg_width -rang_x;

else

rang_x -= 1;

}

if (rang_y + rang_h > seg_height) {

if (seg_height - rang_y > 0)

rang_h = seg_height - rang_y;

else

rang_y -= 1;

}

vector<Range> roi_ranges;

roi_ranges.push_back(Range(0,1));

roi_ranges.push_back(Range::all());

roi_ranges.push_back(Range(rang_y, rang_h+rang_y));

roi_ranges.push_back(Range(rang_x, rang_w+rang_x));

// 裁剪mask原型

Mat temp_mask_protos = mask_protos(roi_ranges).clone(); // 剪裁原型,保存检测框内部的原型,其余位置清零, 以此来获得感兴趣区域(roi)

Mat protos = temp_mask_protos.reshape(0, { seg_channels, rang_w*rang_h});// 检测至检测框大小?

// mask系数与mask原型做矩阵乘法

Mat matmul_res = (maskProposals * protos).t(); // mask系数【1,32】 与 mask原型【32, h*w】进行矩阵相称

Mat masks_feature = matmul_res.reshape(1,{rang_h, rang_w}); //【1,h,w】

Mat dest, mask;

// sigmod

cv::exp(-masks_feature, dest);

dest = 1.0 / (1.0 + dest);

// 检测框坐标 映射到 原图尺寸

int left = floor((net_width / seg_width * rang_x - params[2]) / params[0]);

int top = floor((net_width / seg_height * rang_y - params[3]) / params[1]);

int width = ceil(net_width / seg_height * rang_w / params[0]);

int height = ceil(net_height / seg_height * rang_h / params[1]);

// 检测框mask缩放到原图尺寸

resize(dest, mask, Size(width, height), INTER_NEAREST);

// 阈值化

mask = mask(temp_rect - Point(left, top)) > mask_threshold;

output.boxMask = mask;

}

void DrawPred(Mat& img, vector<OutputSeg> result, std::vector<std::string> classNames, vector<Scalar> color) {

Mat mask = img.clone();

for (int i=0; i< result.size(); i++){

int left, top;

left = result[i].box.x;

top = result[i].box.y;

int color_num =i;

// 目标画框

rectangle(img, result[i].box, color[result[i].id], 2, 8);

// 目标mask,这里非目标像素值为0

mask(result[i].box).setTo(color[result[i].id], result[i].boxMask);

string label = classNames[result[i].id] + ":" + to_string(result[i].confidence);

// 框左上角打印信息

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 0.5, color[result[i].id], 2);

}

addWeighted(img, 0.5, mask, 0.5, 0, img);

}

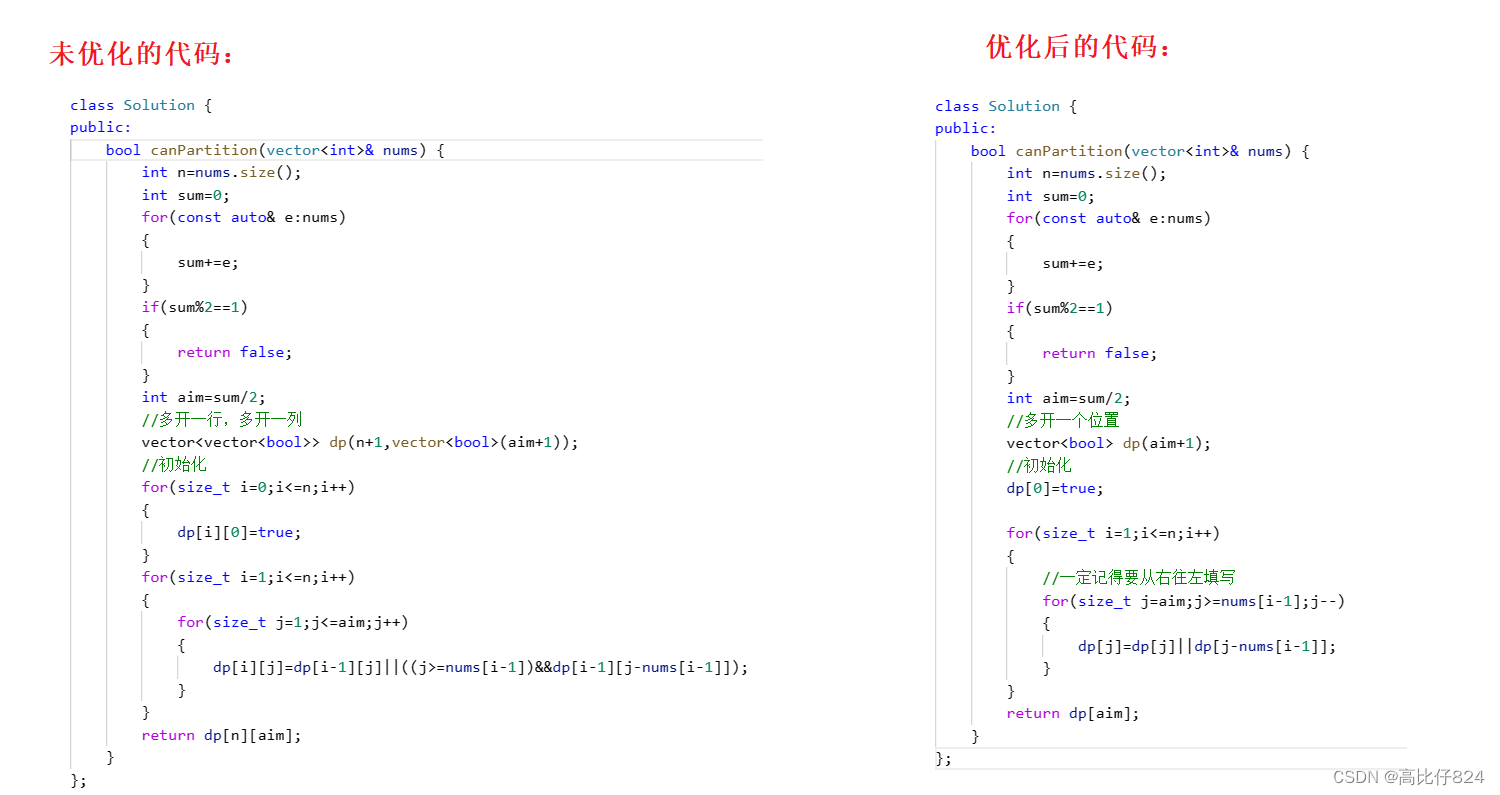

分割的调用代码测试案例

void frmMain::test_yoloseg()

{

string model_path = "20231001segquanjing.onnx";

YoloSeg test;

Net net;

if (test.ReadModel(net, model_path, true)) {

cout << "read net ok!" << endl;

}

else {

return ;

}

vector<OutputSeg> result;

cv::Mat img_seg_test=cv::imread("E:/data_seg/quanjingCameraPicture2/1/segdata2278.jpg");

bool find = test.Detect(img_seg_test, net, result);

if (find) {

DrawPred(img_seg_test, result, test._className, color);

}

else {

cout << "Detect Failed!"<<endl;

}

string label = "steel:" ; //ms

putText(img_seg_test, label, Point(30,30), FONT_HERSHEY_SIMPLEX,0.5, Scalar(0,0,255), 2, 8);

QPixmap img_test_xmap= my_publiction.cvMatToQPixmap(img_seg_test);

// 设置QLabel的最小尺寸

ui->lab1->setMinimumSize(600, 600);

ui->lab1->setScaledContents(true);

img_test_xmap = img_test_xmap.scaled( ui->lab1->width(), ui->lab1->height(), Qt::KeepAspectRatio,Qt::SmoothTransformation); // 将图像缩放到QLabel的大小

ui->lab1->setPixmap(img_test_xmap);

// imshow("result", img_seg_test);

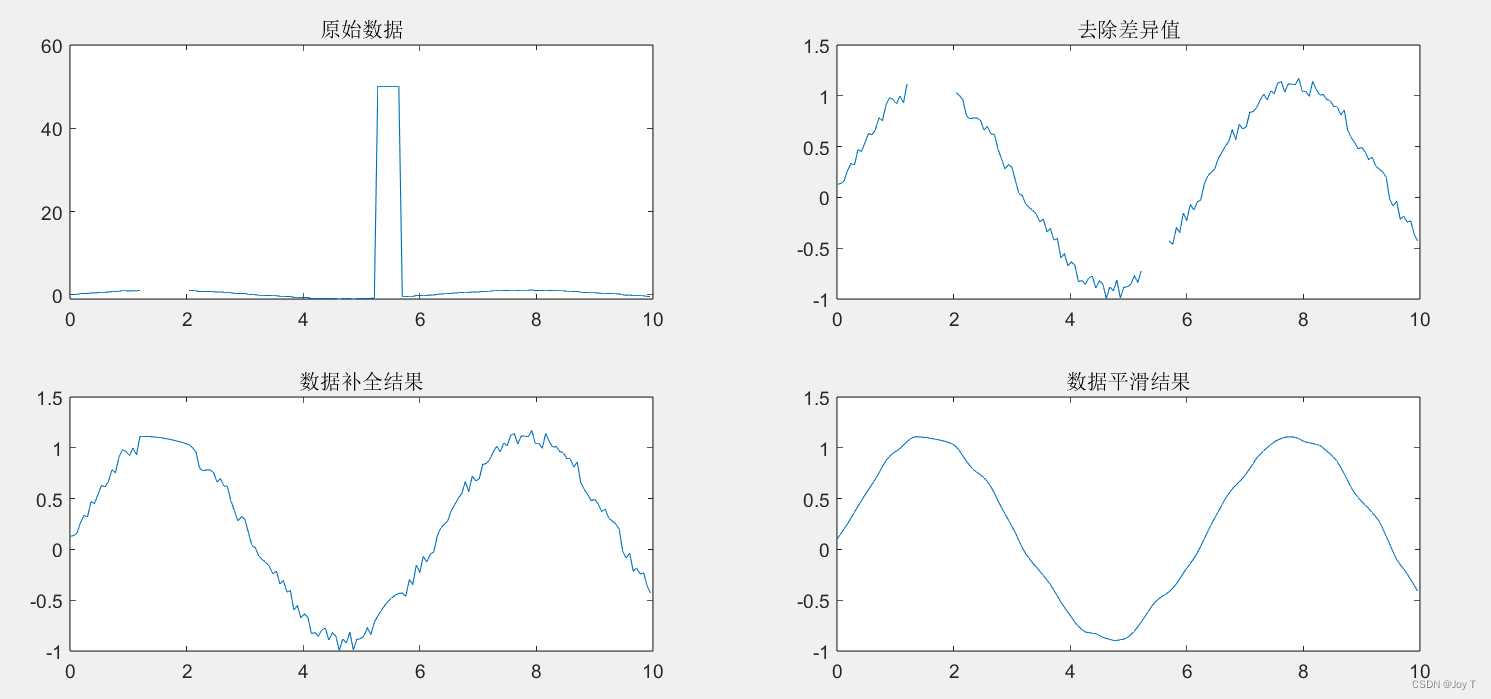

}分割的效果图如下:

![[Spring] Spring5——IOC 简介(二)](https://img-blog.csdnimg.cn/acdd080d850849fcb6e456c3168fb3e6.png)