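Contents

I. Basic environment setup (on every node)

1. hosts resolution

2. Firewall and SELinux

3. Install basic packages and configure time synchronization

4. Disable swap

5. Adjust kernel parameters

6. Configure IPVS

7. Install Kubernetes packages

(1) Configure the mirror repository and install the packages

(2) Configure the kubelet cgroup driver

II. Install containerd (on every node)

1. Install prerequisite packages

2. Add the software repository

3. Edit the docker-ce.repo file

4. Install containerd and generate the default configuration

5. Switch containerd to the systemd cgroup driver

6. Switch the image registry to the Aliyun mirror

7. Configure crictl and pull an image to verify

III. Initialize the master node (master only)

1. Generate and edit the configuration file

2. Check that the image address in /etc/containerd/config.toml now points to the Aliyun mirror

3. List the required images and pull them

4. Initialize

(1) Initialize from the generated kubeadm.yml file

(2) An error to watch for:

(3) Steps to run after initialization

IV. Join the worker nodes to the master

1. Join using the command printed after a successful master initialization

2. Join node1/node2

3. Check on the master

4. Errors to watch for

(1) Files under /etc/kubernetes already exist, usually because the node has joined a master before; I deleted the contents of that directory, or you can reset with kubeadm reset

(2) Port already in use: try killing the process that holds it

V. Install the network plugin (on the master; optional on nodes)

1. Download and edit the manifest

2. Apply the manifest and verify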

| IP address | Hostname |
|---|---|
| 192.168.2.190 | master |
| 192.168.2.191 | node2-191.com |
| 192.168.2.193 | node4-193.com |

I. Basic environment setup (on every node)

1. hosts resolution

[root@master ~]# tail -3 /etc/hosts

192.168.2.190 master

192.168.2.191 node2-191.com

192.168.2.193 node4-193.com

2. Firewall and SELinux

[root@master ~]# systemctl status firewalld.service;getenforce

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

Disabled

# Temporary (until the next reboot)

systemctl stop firewalld

setenforce 0

# Permanent

systemctl disable firewalld

sed -i '/^SELINUX=/ c SELINUX=disabled' /etc/selinux/config

3. Install basic packages and configure time synchronization

[root@master ~]# yum install -y wget tree bash-completion lrzsz psmisc net-tools vim chrony

[root@master ~]# vim /etc/chrony.conf

:3,6 s/^/#/

# The vim command above comments out the original server lines (lines 3 to 6); then add the line below

server ntp1.aliyun.com iburst
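The interactive vim edit above can also be scripted. The following is a minimal sketch, assuming the default time sources in chrony.conf are lines starting with `server `; the function name `use_aliyun_ntp` and the temp-file demo are illustrative and not part of the original steps.

```shell
#!/usr/bin/env bash
# Sketch: comment out existing "server ..." lines and append the Aliyun NTP server.
# use_aliyun_ntp is a hypothetical helper name; pass /etc/chrony.conf on a real host.
use_aliyun_ntp() {
    local conf="$1" tmp
    tmp="$(mktemp)"
    # Prefix every active server line with '#', except the Aliyun one if already present.
    sed '/^server ntp1\.aliyun\.com /!s/^server /#server /' "$conf" > "$tmp"
    # Append the new server only when it is missing, so the function can be re-run.
    grep -q '^server ntp1\.aliyun\.com iburst$' "$tmp" \
        || echo 'server ntp1.aliyun.com iburst' >> "$tmp"
    cat "$tmp" > "$conf"
    rm -f "$tmp"
}

# Demo on a throwaway file so nothing on the system is modified.
demo_conf="$(mktemp)"
printf '%s\n' 'server 0.centos.pool.ntp.org iburst' 'driftfile /var/lib/chrony/drift' > "$demo_conf"
use_aliyun_ntp "$demo_conf"
cat "$demo_conf"
```

On a real node this would be called as `use_aliyun_ntp /etc/chrony.conf` by root, followed by the chronyd restart shown next.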

[root@node1-190 ~]# systemctl restart chronyd

[root@node1-190 ~]# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* 120.25.115.20 2 8 341 431 -357us[ -771us] +/- 20ms

4. Disable swap

[root@master ~]# swapoff -a && sed -i 's/.*swap.*/#&/' /etc/fstab && free -m

total used free shared buff/cache available

Mem: 10376 943 8875 11 557 9178

Swap: 0 0 0

5. Adjust kernel parameters

[root@node1-190 ~]# cat >> /etc/sysctl.d/k8s.conf << EOF

vm.swappiness=0

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF
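One caveat about the heredoc above: `cat >>` appends, so running the step twice duplicates every entry, and a plain `modprobe` does not survive a reboot. The sketch below is one way to address both, assuming a systemd host where `/etc/modules-load.d/` is read at boot; `write_k8s_sysctl` and its root-prefix argument are hypothetical and exist only so the demo can run against a temp directory.

```shell
#!/usr/bin/env bash
# Sketch: write the kernel settings idempotently and make the modules load at boot.
# write_k8s_sysctl is a hypothetical helper; the root prefix argument is only there
# so the demo can target a temp directory instead of the real /etc.
write_k8s_sysctl() {
    local root="${1:-}"
    mkdir -p "$root/etc/sysctl.d" "$root/etc/modules-load.d"
    # '>' overwrites, so re-running never duplicates entries (unlike '>>').
    cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
vm.swappiness=0
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
    # systemd-modules-load reads this directory at boot, one module name per line.
    printf '%s\n' br_netfilter overlay > "$root/etc/modules-load.d/k8s.conf"
}

demo_root="$(mktemp -d)"
write_k8s_sysctl "$demo_root"
write_k8s_sysctl "$demo_root"   # a second run leaves the files unchanged
cat "$demo_root/etc/sysctl.d/k8s.conf"
```

On a real node, calling `write_k8s_sysctl ""` as root writes under `/etc`; the modprobe and `sysctl -p` commands that follow are still needed to apply the settings immediately.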

[root@node1-190 ~]# modprobe br_netfilter && modprobe overlay && sysctl -p /etc/sysctl.d/k8s.conf

vm.swappiness = 0

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

6. Configure IPVS

[root@node1-190 ~]# yum install ipset ipvsadm -y

[root@node1-190 ~]# cat <<EOF > /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

# Make the script executable, run it, and verify that the modules are loaded

[root@node1-190 ~]# chmod +x /etc/sysconfig/modules/ipvs.modules && /bin/bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

nf_conntrack_ipv4 15053 2

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 139264 7 ip_vs,nf_nat,nf_nat_ipv4,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack

7. Install Kubernetes packages

(1) Configure the mirror repository and install the packages

[root@master ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@master ~]# yum install -y kubeadm kubelet kubectl

[root@master ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"28", GitVersion:"v1.28.2", GitCommit:"89a4ea3e1e4ddd7f7572286090359983e0387b2f", GitTreeState:"clean", BuildDate:"2023-09-13T09:34:32Z", GoVersion:"go1.20.8", Compiler:"gc", Platform:"linux/amd64"}

(2) Configure the kubelet cgroup driver

[root@master ~]# cat <<EOF > /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

EOF

[root@master ~]# systemctl start kubelet

[root@master ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

II. Install containerd (on every node)

1. Install prerequisite packages

[root@master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

2. Add the software repository

[root@master ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

3. Edit the docker-ce.repo file

[root@master ~]# sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

4. Install containerd and generate the default configuration

[root@master ~]# yum install -y containerd

[root@master ~]# containerd config default | tee /etc/containerd/config.toml

5. Switch containerd to the systemd cgroup driver

[root@master ~]# sed -i "s#SystemdCgroup\ \=\ false#SystemdCgroup\ \=\ true#g" /etc/containerd/config.toml

6. Switch the image registry to the Aliyun mirror

[root@master ~]# sed -i "s#registry.k8s.io#registry.aliyuncs.com/google_containers#g" /etc/containerd/config.toml

7. Configure crictl and pull an image to verify

[root@master ~]# crictl --version

crictl version v1.26.0

[root@master ~]# cat <<EOF | tee /etc/crictl.yaml

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

[root@master ~]# systemctl daemon-reload && systemctl start containerd && systemctl enable containerd

Created symlink from /etc/systemd/system/multi-user.target.wants/containerd.service to /usr/lib/systemd/system/containerd.service.

[root@master ~]# crictl pull nginx

Image is up to date for sha256:61395b4c586da2b9b3b7ca903ea6a448e6783dfdd7f768ff2c1a0f3360aaba99

[root@master ~]# crictl images

IMAGE TAG IMAGE ID SIZE

docker.io/library/nginx latest 61395b4c586da 70.5MB三.master节点初始化(只在master做)

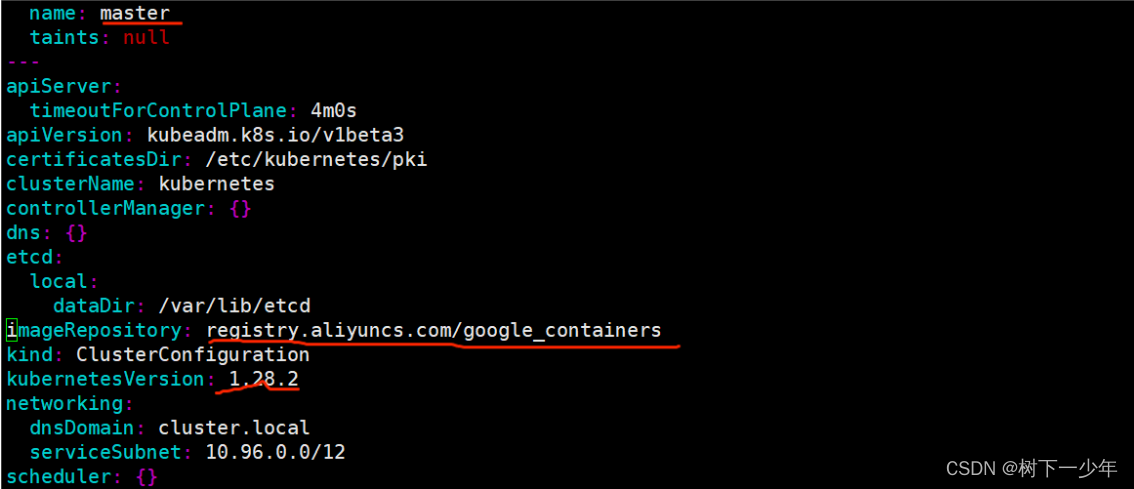

1.生成并修改配置文件

[root@master ~]# kubeadm config print init-defaults > kubeadm.yml

[root@master ~]# ll

total 8

-rw-r--r-- 1 root root 0 Jul 23 09:59 abc

-rw-------. 1 root root 1386 Jul 23 09:02 anaconda-ks.cfg

-rw-r--r-- 1 root root 807 Sep 27 16:18 kubeadm.yml

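[root@master ~]# vim kubeadm.yml

Change name to the master's hostname.

Change imageRepository to the Aliyun address registry.aliyuncs.com/google_containers.

Change kubernetesVersion to the version actually installed (v1.28.2 here).

Set advertiseAddress to the master's IP (192.168.2.190 here, as the join command printed after initialization shows); the generated default is only a placeholder.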
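The edits described above can also be applied without an editor. This is a minimal sketch, assuming the key layout that `kubeadm config print init-defaults` produces (a two-space indented `name:` under `nodeRegistration`, top-level `imageRepository:` and `kubernetesVersion:`); setting `advertiseAddress` is my inference from the join command, which uses 192.168.2.190:6443. The helper name `patch_kubeadm_config` and the trimmed demo file are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: patch the generated kubeadm.yml in place with sed.
# patch_kubeadm_config is a hypothetical helper name.
patch_kubeadm_config() {
    local file="$1" node_name="$2" api_ip="$3" version="$4" tmp
    tmp="$(mktemp)"
    sed -e "s|^\(  name:\) .*|\1 ${node_name}|" \
        -e "s|^\(  advertiseAddress:\) .*|\1 ${api_ip}|" \
        -e "s|^\(imageRepository:\) .*|\1 registry.aliyuncs.com/google_containers|" \
        -e "s|^\(kubernetesVersion:\) .*|\1 ${version}|" \
        "$file" > "$tmp"
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demo on a trimmed stand-in for the generated file (only the relevant keys).
demo_cfg="$(mktemp)"
cat > "$demo_cfg" <<'EOF'
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
nodeRegistration:
  name: node
---
clusterName: kubernetes
imageRepository: registry.k8s.io
kubernetesVersion: 1.28.0
EOF
patch_kubeadm_config "$demo_cfg" master 192.168.2.190 1.28.2
cat "$demo_cfg"
```

The patterns are anchored at the start of the line so that `clusterName:` is left alone; reviewing the result with `cat kubeadm.yml` before running `kubeadm init` is still worthwhile.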

2. Check that the image address in /etc/containerd/config.toml now points to the Aliyun mirror

[root@master ~]# vim /etc/containerd/config.toml

[root@master ~]# systemctl restart containerd
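Instead of scanning the file by eye in vim, the same check can be scripted. A minimal sketch, assuming the two sed edits from part II are the only changes expected; `check_containerd_config` is a hypothetical helper and the pause tag in the demo file is illustrative only.

```shell
#!/usr/bin/env bash
# Sketch: verify that config.toml uses the Aliyun mirror and the systemd cgroup driver.
# check_containerd_config is a hypothetical helper; it returns non-zero on the first problem.
check_containerd_config() {
    local file="$1"
    if grep -q 'registry\.k8s\.io' "$file"; then
        echo "still references registry.k8s.io" >&2
        return 1
    fi
    if ! grep -q 'sandbox_image = "registry\.aliyuncs\.com/google_containers/' "$file"; then
        echo "sandbox_image does not point at the Aliyun mirror" >&2
        return 1
    fi
    if ! grep -Eq 'SystemdCgroup[[:space:]]*=[[:space:]]*true' "$file"; then
        echo "SystemdCgroup is not set to true" >&2
        return 1
    fi
    echo "ok"
}

# Demo on a two-line stand-in for /etc/containerd/config.toml.
demo_toml="$(mktemp)"
printf '%s\n' \
    'sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"' \
    'SystemdCgroup = true' > "$demo_toml"
check_containerd_config "$demo_toml"
```

On a node, `check_containerd_config /etc/containerd/config.toml` would be run before the containerd restart.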

3. List the required images and pull them

[root@master ~]# kubeadm config images list --config kubeadm.yml

[root@master ~]# kubeadm config images pull --config kubeadm.yml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.28.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.28.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.28.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.28.2

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.9-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.10.1

4. Initialize

(1) Initialize from the generated kubeadm.yml file

[root@master ~]# kubeadm init --config=kubeadm.yml --upload-certs --v=6

......

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.2.190:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:0dbb20609e31e4fe7d8ec76f07e6efd1f56965c5f8aa5d5ae5f1d6e9e958ffbe

(2) An error to watch for:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist

Fix:

# Edit this file, add the line below, then reload the configuration

[root@master ~]# vim /etc/sysctl.conf

net.bridge.bridge-nf-call-iptables = 1

[root@master net]# modprobe br_netfilter # load the module

[root@master net]# sysctl -p

net.bridge.bridge-nf-call-iptables = 1

(3) Steps to run after initialization

# If you work as a regular user on the master

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

# If you work as root on the master

[root@master ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

IV. Join the worker nodes to the master

1. Join using the command printed after a successful master initialization

# The command can be regenerated later with kubeadm token create --print-join-command

kubeadm join 192.168.2.190:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:0dbb20609e31e4fe7d8ec76f07e6efd1f56965c5f8aa5d5ae5f1d6e9e958ffbe

2. Join node1/node2

[root@node2-191 ~]# kubeadm join 192.168.2.190:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:3e56e3aa62b5835b6ed0d16832a4a13d1154ec09fe9c4f82bff9eaaaee2755c2

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@node4-193 ~]# kubeadm join 192.168.2.190:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:3e56e3aa62b5835b6ed0d16832a4a13d1154ec09fe9c4f82bff9eaaaee2755c2

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

3. Check on the master

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 7m32s v1.28.2

node2-191.com Ready <none> 54s v1.28.2

node4-193.com Ready <none> 11s v1.28.24.注意报错

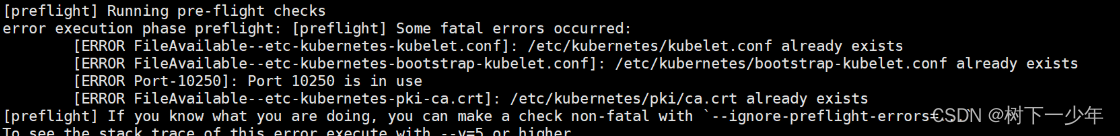

解决:

(1)/etc/kubernetes下那些文件已存在,一般是由于已经加入过master,我选择的是将其目录下的内容删除,或者使用reset进行重置

[root@node4-193 ~]# rm -rf /etc/kubernetes/*

# or

[root@node4-193 ~]# kubeadm reset

(2) Port already in use: try killing the process that holds it
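Before killing anything it helps to see which process actually holds the port. The sketch below parses `ss -ltnp` output and prints the owning PIDs; port 10250 (the kubelet port) is used only as an example, and `pids_on_port` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: list the PIDs listening on a TCP port, given `ss -ltnp` output on stdin.
# pids_on_port is a hypothetical helper name.
pids_on_port() {
    local port="$1"
    awk -v p=":${port}" '
        # Column 4 of ss -ltn output is the local address:port.
        $4 ~ (p "$") {
            rest = $0
            while (match(rest, /pid=[0-9]+/)) {
                print substr(rest, RSTART + 4, RLENGTH - 4)
                rest = substr(rest, RSTART + RLENGTH)
            }
        }' | sort -un
}

# Demo with a captured sample line; on a real node run: ss -ltnp | pids_on_port 10250
sample='LISTEN 0 128 *:10250 *:* users:(("kubelet",pid=1234,fd=20))'
printf '%s\n' "$sample" | pids_on_port 10250
```

Once the PID is confirmed to be a leftover process, it can be stopped with `kill <pid>`; `fuser -k 10250/tcp` from the psmisc package installed earlier is a shorter alternative.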

V. Install the network plugin (on the master; optional on nodes)

1. Download and edit the manifest

[root@master ~]# wget --no-check-certificate https://projectcalico.docs.tigera.io/archive/v3.25/manifests/calico.yaml

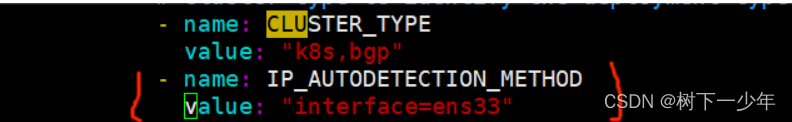

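[root@master ~]# vim calico.yaml

Find the CLUSTER_TYPE line and add the two lines shown in the screenshot above after it, replacing ens33 with the name of your own network interface.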
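The same edit can be sketched as a script. Note the assumption: the two added lines are only visible in the screenshot, so the sketch assumes they are Calico's `IP_AUTODETECTION_METHOD` env entry with the value `interface=<nic>`; compare with the screenshot before relying on it. `add_calico_iface` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: add an interface-autodetection env entry after CLUSTER_TYPE in calico.yaml.
# ASSUMPTION: the two added lines are IP_AUTODETECTION_METHOD / interface=<nic>;
# the source shows them only in a screenshot. add_calico_iface is a hypothetical helper.
add_calico_iface() {
    local src="$1" iface="$2"
    awk -v iface="$iface" '
        { print }
        /- name: CLUSTER_TYPE/ {
            indent = $0
            sub(/-.*/, "", indent)                 # keep only the leading whitespace
            if ((getline line) > 0) print line     # the existing value line
            print indent "- name: IP_AUTODETECTION_METHOD"
            print indent "  value: \"interface=" iface "\""
        }' "$src"
}

# Demo on a two-line fragment with the same indentation style as the manifest.
demo_yaml="$(mktemp)"
printf '%s\n' \
    '            - name: CLUSTER_TYPE' \
    '              value: "k8s,bgp"' > "$demo_yaml"
add_calico_iface "$demo_yaml" ens33
```

The function writes the patched manifest to standard output, so a typical use would be `add_calico_iface calico.yaml ens33 > calico-patched.yaml`, leaving the downloaded file untouched for comparison.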

2. Apply the manifest and verify

[root@master ~]# kubectl apply -f calico.yaml

[root@master ~]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-66f779496c-m4rft 0/1 ContainerCreating 0 9m4s <none> master <none> <none>

kube-system coredns-66f779496c-sddmk 0/1 ContainerCreating 0 9m4s <none> master <none> <none>

kube-system etcd-master 1/1 Running 1 9m10s 192.168.2.190 master <none> <none>

kube-system kube-apiserver-master 1/1 Running 1 9m11s 192.168.2.190 master <none> <none>

kube-system kube-controller-manager-master 1/1 Running 1 9m10s 192.168.2.190 master <none> <none>

kube-system kube-proxy-4rqvm 1/1 Running 0 2m35s 192.168.2.191 node2-191.com <none> <none>

kube-system kube-proxy-5g6rb 1/1 Running 0 9m5s 192.168.2.190 master <none> <none>

kube-system kube-proxy-zbd5n 1/1 Running 0 112s 192.168.2.193 node4-193.com <none> <none>

kube-system kube-scheduler-master 1/1 Running 1 9m10s 192.168.2.190 master <none> <none>
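In the listing above the two coredns pods are still in ContainerCreating, which is common right after the manifest is applied and before the network plugin is up. A small filter makes it easier to watch only the pods that are not Running yet; this is a sketch that parses the table printed by `kubectl get pods -A`, and `not_running_pods` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: list pods that are not yet Running, given `kubectl get pods -A` output on stdin.
# not_running_pods is a hypothetical helper name.
not_running_pods() {
    # Skip the header row; column 4 is STATUS, columns 1 and 2 are namespace and name.
    awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 " " $4 }'
}

# Demo with a few rows in the same layout as the output above.
sample='NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system coredns-66f779496c-m4rft 0/1 ContainerCreating 0 9m4s
kube-system etcd-master 1/1 Running 1 9m10s'
printf '%s\n' "$sample" | not_running_pods
```

On the master this would be used as `kubectl get pods -A | not_running_pods`; an empty result means every pod reports Running. Pods in other terminal states, such as Completed, are also listed by this filter.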