1. Start and stop the ZooKeeper cluster

/home/cluster/zookeeper.sh start

/home/cluster/zookeeper.sh stop

2. Start and stop the Hadoop (HDFS) and YARN clusters

/home/cluster/hadoop-3.3.6/sbin/start-dfs.sh

/home/cluster/hadoop-3.3.6/sbin/start-yarn.sh

/home/cluster/hadoop-3.3.6/sbin/stop-dfs.sh

/home/cluster/hadoop-3.3.6/sbin/stop-yarn.sh
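Once start-dfs.sh and start-yarn.sh return, it is worth confirming that the daemons actually registered. The sketch below assumes the hadoop-3.3.6 path used above. `hdfs dfsadmin -report` and `yarn node -list` are standard Hadoop commands. The `check_hadoop` helper and the `DRY_RUN` switch are hypothetical additions for this note.

```shell
#!/bin/sh
# Hypothetical helper: quick health check after start-dfs.sh / start-yarn.sh.
HADOOP_HOME=/home/cluster/hadoop-3.3.6

# run: execute a command, or only print it when DRY_RUN=1 (hypothetical switch)
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

check_hadoop() {
  # DataNodes known to the NameNode, with capacity and live/dead counts
  run "$HADOOP_HOME/bin/hdfs" dfsadmin -report
  # NodeManagers registered with the ResourceManager
  run "$HADOOP_HOME/bin/yarn" node -list
}
```

On each node, `jps` should also list the Java daemons for that node's role, such as NameNode, DataNode, ResourceManager or NodeManager.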

3. Start and stop the Spark cluster

/home/cluster/spark-3.4.1-bin-hadoop3/sbin/start-all.sh

/home/cluster/spark-3.4.1-bin-hadoop3/sbin/stop-all.sh

4. Start and stop the Flink cluster

/home/cluster/flink/bin/start-cluster.sh

/home/cluster/flink/bin/stop-cluster.sh

5. Start Hive

Interactive Hive CLI:

/home/cluster/hive/bin/hive

(1) Start the metastore service (foreground):

/home/cluster/hive/bin/hive --service metastore

Or run it in the background with all output discarded:

/home/cluster/hive/bin/hive --service metastore >/dev/null 2>&1 &

(2) Start HiveServer2 (foreground):

/home/cluster/hive/bin/hive --service hiveserver2

Or run it in the background with all output discarded:

/home/cluster/hive/bin/hive --service hiveserver2 >/dev/null 2>&1 &
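The order of redirections matters for the background variants. The shell applies them left to right, so `2>&1 >/dev/null` first points stderr at the current stdout (the terminal) and only then discards stdout. Hive's log output on stderr still reaches the console. `>/dev/null 2>&1` discards both streams. The sketch below shows this with a stand-in function `noisy`, which is hypothetical and not part of Hive. The `nohup` line in the final comment is an optional addition that keeps the service alive after logout, and its log path is an assumption.

```shell
#!/bin/sh
# noisy: stand-in for a service that writes to both stdout and stderr
noisy() {
  echo "stdout line"
  echo "stderr line" >&2
}

# Wrong order: stderr is duplicated onto the current stdout (captured here),
# then only stdout is sent to /dev/null, so the stderr line leaks through.
wrong=$(noisy 2>&1 >/dev/null)

# Right order: stdout goes to /dev/null first, then stderr follows it there.
right=$(noisy >/dev/null 2>&1)

echo "wrong order leaked: '$wrong'"
echo "right order leaked: '$right'"

# Applied to the metastore (log file path is an assumption):
#   nohup /home/cluster/hive/bin/hive --service metastore > /tmp/hive-metastore.log 2>&1 &
```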

Connection test with Beeline (the inserted data ends up in HDFS):

SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]

Beeline version 3.1.3 by Apache Hive

beeline> !connect jdbc:hive2://node88:10000

Connecting to jdbc:hive2://node88:10000

Enter username for jdbc:hive2://node88:10000: root

Enter password for jdbc:hive2://node88:10000: ******

Connected to: Apache Hive (version 3.1.3)

Driver: Hive JDBC (version 3.1.3)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://node88:10000> show database;

Error: Error while compiling statement: FAILED: ParseException line 1:5 cannot recognize input near 'show' 'database' '<EOF>' in ddl statement (state=42000,code=40000)

0: jdbc:hive2://node88:10000> show databases;

+----------------+

| database_name |

+----------------+

| default |

+----------------+

1 row selected (0.778 seconds)

0: jdbc:hive2://node88:10000> use default;

No rows affected (0.081 seconds)

0: jdbc:hive2://node88:10000> CREATE TABLE IF NOT EXISTS default.hive_demo(id INT,name STRING,ip STRING,time TIMESTAMP) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

Error: Error while compiling statement: FAILED: ParseException line 1:74 cannot recognize input near 'time' 'TIMESTAMP' ')' in column name or constraint (state=42000,code=40000)

0: jdbc:hive2://node88:10000> CREATE TABLE IF NOT EXISTS default.hive_demo(id INT,name STRING,ip STRING,t TIMESTAMP) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

No rows affected (2.848 seconds)

0: jdbc:hive2://node88:10000> show tables;

+------------+

| tab_name |

+------------+

| hive_demo |

+------------+

1 row selected (0.096 seconds)

0: jdbc:hive2://node88:10000> insert into hive_demo(id,name,ip,t) values("123456","liebe","10.10.10.88",now());

Error: Error while compiling statement: FAILED: SemanticException [Error 10011]: Invalid function now (state=42000,code=10011)

0: jdbc:hive2://node88:10000> insert into hive_demo(id,name,ip,t) values("123456","liebe","10.10.10.88",unix_timestamp());

No rows affected (180.349 seconds)

0: jdbc:hive2://node88:10000> select * from hive_demo

. . . . . . . . . . . . . . > ;

+---------------+-----------------+---------------+--------------------------+

| hive_demo.id | hive_demo.name | hive_demo.ip | hive_demo.t |

+---------------+-----------------+---------------+--------------------------+

| 123456 | liebe | 10.10.10.88 | 1970-01-20 15:00:56.674 |

+---------------+-----------------+---------------+--------------------------+

1 row selected (0.278 seconds)

0: jdbc:hive2://node88:10000>
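This session raises three points.

- The first `CREATE TABLE` failed because `time` is treated as a keyword in Hive 3. Renaming the column to `t`, as done above, or quoting it with backticks avoids the error.
- Hive 3.1 has no `now()`. The built-in for the current time is `current_timestamp()`.
- The stored value `1970-01-20 15:00:56.674` suggests that the seconds returned by `unix_timestamp()` were read as milliseconds during the conversion to TIMESTAMP.

The GNU `date` sketch below checks that arithmetic, assuming UTC. The epoch value 1695656674 is reconstructed from the displayed result. It is not taken from the original session.

```shell
#!/bin/sh
# Requires GNU date (coreutils): -d @EPOCH accepts fractional seconds, %3N prints milliseconds.
# Hypothetical value: the displayed timestamp read back as milliseconds since 1970-01-01 UTC.
epoch=1695656674

# Interpret the number as milliseconds (what the stored value looks like)
frac=$(printf '%03d' $((epoch % 1000)))
as_ms=$(date -u -d "@$((epoch / 1000)).$frac" '+%Y-%m-%d %H:%M:%S.%3N')

# Interpret the same number as seconds (what unix_timestamp() returns)
as_s=$(date -u -d "@$epoch" '+%Y-%m-%d %H:%M:%S')

echo "as milliseconds: $as_ms"
echo "as seconds:      $as_s"
```

If the number is read as milliseconds, the result matches the row shown above. Read as seconds, it gives a 2023 date. Inserting `current_timestamp()` instead of `unix_timestamp()` sidesteps the unit mismatch.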

Command-line operations:

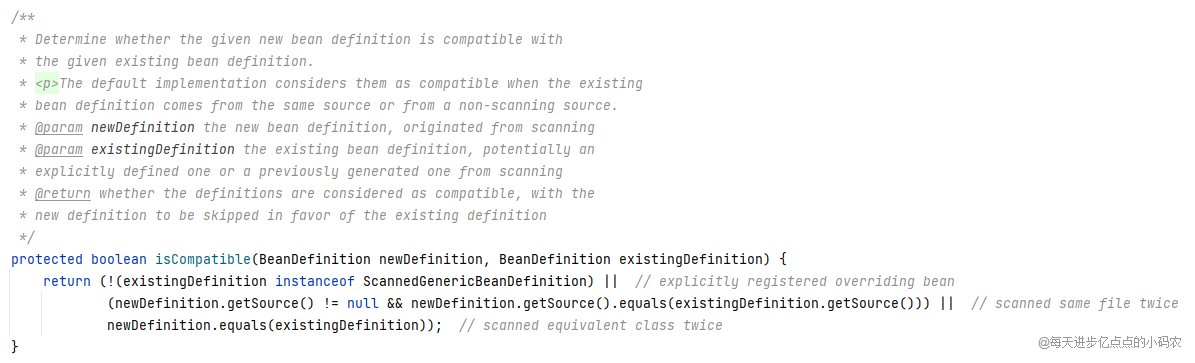

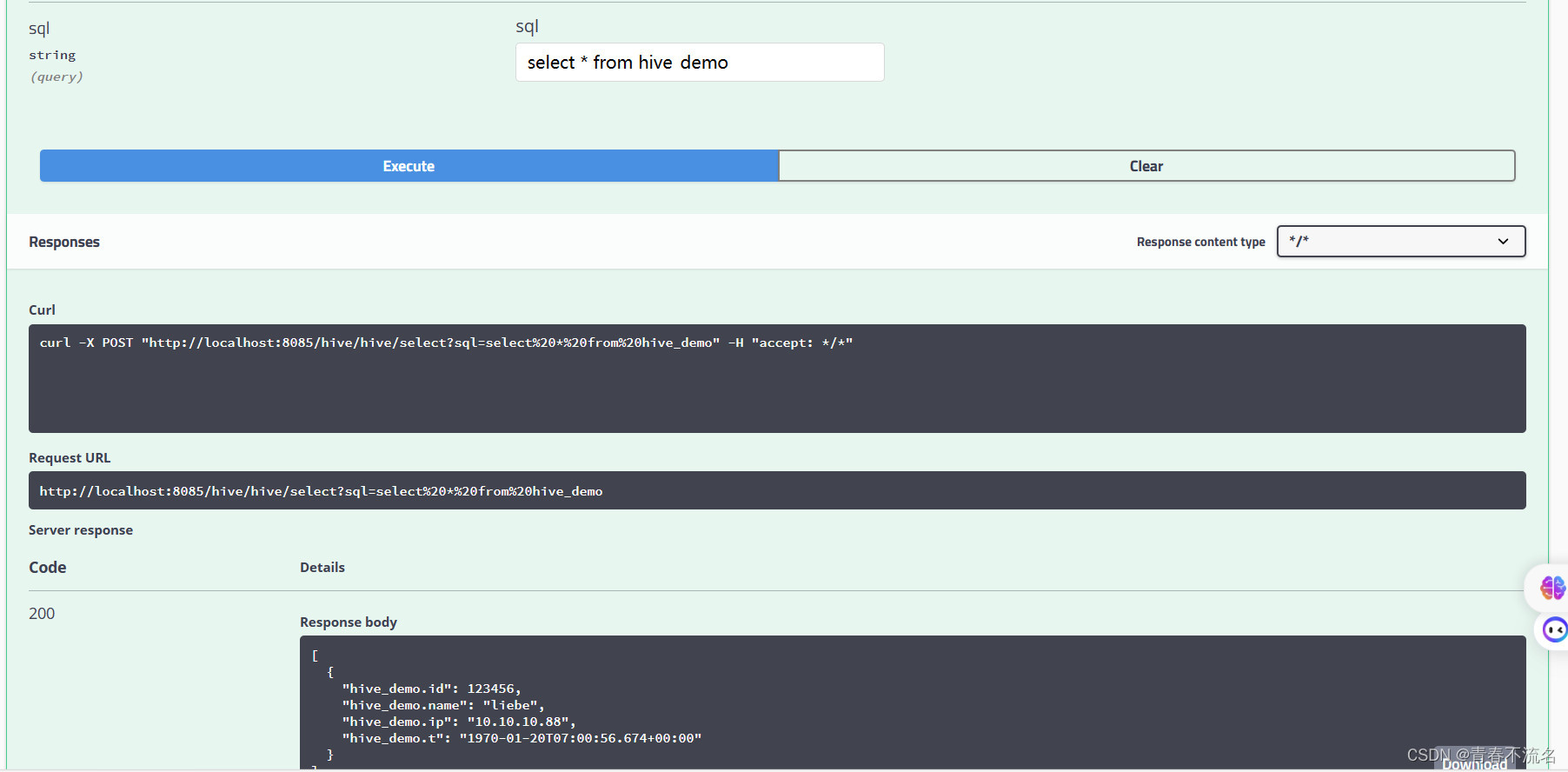
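The interactive `!connect` session can also be scripted, because Beeline accepts `-u` (JDBC URL), `-n` (user), `-p` (password) and `-e` (statement) on the command line. The sketch below makes three assumptions:

- `beeline` sits in /home/cluster/hive/bin next to the `hive` launcher.
- The password comes from a `HIVE_PASSWORD` environment variable.
- The `hive_exec` helper and the `DRY_RUN` switch are hypothetical.

```shell
#!/bin/sh
# Assumed location of the Beeline client shipped with Hive
BEELINE=/home/cluster/hive/bin/beeline
# JDBC URL from the session above, with the default database appended
HIVE_URL=jdbc:hive2://node88:10000/default

# hive_exec SQL: run one statement non-interactively (hypothetical helper)
hive_exec() {
  set -- "$BEELINE" -u "$HIVE_URL" -n root -p "${HIVE_PASSWORD:-}" -e "$1"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"   # print the command instead of executing it
  else
    "$@"
  fi
}

# Example (not executed here):
#   hive_exec 'select * from hive_demo;'
```

A password passed with `-p` is visible to other users in `ps` output, so this form suits test clusters rather than shared hosts.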

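Querying Hive data from Spring Boot

First, allow root to act as a proxy user for HiveServer2 connections by adding the following properties to Hadoop's core-site.xml: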

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>
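Changes to `hadoop.proxyuser.*` only take effect once the NameNode and ResourceManager reload them. Hadoop provides refresh commands for this, so a full restart is not required. The sketch below assumes the hadoop-3.3.6 path from section 2 and that the edited core-site.xml has already been copied to every node. `apply_proxyuser` and `DRY_RUN` are hypothetical.

```shell
#!/bin/sh
HADOOP_HOME=/home/cluster/hadoop-3.3.6

# run: execute a command, or only print it when DRY_RUN=1 (hypothetical switch)
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

apply_proxyuser() {
  # Show the value the local configuration now resolves to
  run "$HADOOP_HOME/bin/hdfs" getconf -confKey hadoop.proxyuser.root.hosts
  # Reload proxy-user settings on the NameNode and on the ResourceManager
  run "$HADOOP_HOME/bin/hdfs" dfsadmin -refreshSuperUserGroupsConfiguration
  run "$HADOOP_HOME/bin/yarn" rmadmin -refreshSuperUserGroupsConfiguration
}
```

Without these properties, JDBC connections to HiveServer2 typically fail with an error of the form `User: root is not allowed to impersonate root`.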

Spring Boot configuration (YAML):

server:
  port: 8085
  tomcat:
    max-http-form-post-size: 200MB
  servlet:
    context-path: /hive
spring:
  profiles:
    active: sql
customize:
  hive:
    url: jdbc:hive2://10.10.10.88:10000/default
    type: com.alibaba.druid.pool.DruidDataSource
    username: root
    password: 123456
    driver-class-name: org.apache.hive.jdbc.HiveDriver

6. Start and stop the Kafka cluster (run on each broker node)

/home/cluster/kafka_2.12-3.5.1/bin/kafka-server-start.sh /home/cluster/kafka_2.12-3.5.1/config/server.properties

/home/cluster/kafka_2.12-3.5.1/bin/kafka-server-stop.sh /home/cluster/kafka_2.12-3.5.1/config/server.properties
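The start commands in sections 1 to 6 can be wrapped into one script that starts services in dependency order and stops them in reverse. The following is a minimal sketch, not the author's script:

- It reuses the paths from this note.
- It assumes Kafka runs in ZooKeeper mode, since ZooKeeper is started here.
- The Hive services are left out because they are started separately.
- `start_all`, `stop_all` and `DRY_RUN` are hypothetical names.

```shell
#!/bin/sh
# Hypothetical wrapper: start/stop the services of this note in dependency order.
C=/home/cluster
KAFKA=$C/kafka_2.12-3.5.1

# run: execute a command, or only print it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

start_all() {
  run "$C/zookeeper.sh" start                 # ZooKeeper first (assumes ZooKeeper-mode Kafka)
  run "$C/hadoop-3.3.6/sbin/start-dfs.sh"
  run "$C/hadoop-3.3.6/sbin/start-yarn.sh"
  run "$C/spark-3.4.1-bin-hadoop3/sbin/start-all.sh"
  run "$C/flink/bin/start-cluster.sh"
  # Starts only the local broker; -daemon runs it in the background. Repeat on every broker.
  run "$KAFKA/bin/kafka-server-start.sh" -daemon "$KAFKA/config/server.properties"
}

stop_all() {
  # Reverse order, so no service loses a dependency while it is still running
  run "$KAFKA/bin/kafka-server-stop.sh"
  run "$C/flink/bin/stop-cluster.sh"
  run "$C/spark-3.4.1-bin-hadoop3/sbin/stop-all.sh"
  run "$C/hadoop-3.3.6/sbin/stop-yarn.sh"
  run "$C/hadoop-3.3.6/sbin/stop-dfs.sh"
  run "$C/zookeeper.sh" stop
}

case "${1:-}" in
  start) start_all ;;
  stop)  stop_all ;;
esac
```

Usage: `sh cluster.sh start` or `DRY_RUN=1 sh cluster.sh stop` to preview the commands. The file name `cluster.sh` is arbitrary.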

Create a topic:

/home/cluster/kafka_2.12-3.5.1/bin/kafka-topics.sh --create --topic mysql-flink-kafka --replication-factor 3 --partitions 3 --bootstrap-server 10.10.10.89:9092,10.10.10.89:9092,10.10.10.99:9092

Created topic mysql-flink-kafka.

Describe the topic:

/home/cluster/kafka_2.12-3.5.1/bin/kafka-topics.sh --describe --topic mysql-flink-kafka --bootstrap-server 10.10.10.89:9092,10.10.10.89:9092,10.10.10.99:9092

Topic: mysql-flink-kafka TopicId: g5_WRWLKR3WClRDZ_Vz2oA PartitionCount: 3 ReplicationFactor: 3 Configs:

Topic: mysql-flink-kafka Partition: 0 Leader: 1 Replicas: 1,0,2 Isr: 1,0,2

Topic: mysql-flink-kafka Partition: 1 Leader: 0 Replicas: 0,2,1 Isr: 0,2,1

Topic: mysql-flink-kafka Partition: 2 Leader: 2 Replicas: 2,1,0 Isr: 2,1,0

Consume the topic from the beginning:

/home/cluster/kafka_2.12-3.5.1/bin/kafka-console-consumer.sh --bootstrap-server 10.10.10.89:9092,10.10.10.89:9092,10.10.10.99:9092 --topic mysql-flink-kafka --from-beginning
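In the describe output, each partition's `Isr` (in-sync replicas) lists the same brokers as `Replicas`, which means all three copies are up to date. `kafka-topics.sh --describe` also accepts `--under-replicated-partitions` to show only problem partitions. The awk filter below is an alternative sketch that compares the two sets directly, ignoring order. `check_isr` is a hypothetical helper that reads describe output on stdin.

```shell
#!/bin/sh
# check_isr: read `kafka-topics.sh --describe` output on stdin and report every
# partition whose in-sync replica set (Isr) differs from its replica set (Replicas).
# Exit status: 0 if every partition is fully in sync, 1 otherwise.
check_isr() {
  awk '
    # same_set: 1 if comma-separated lists a and b hold the same broker ids, in any order
    function same_set(a, b,    x, y, n, m, i, seen) {
      n = split(a, x, ",")
      m = split(b, y, ",")
      if (n != m) return 0
      for (i = 1; i <= m; i++) seen[y[i]] = 1
      for (i = 1; i <= n; i++) if (!(x[i] in seen)) return 0
      return 1
    }
    /Partition:[ \t]/ {
      p = r = s = ""
      for (i = 1; i < NF; i++) {
        if ($i == "Partition:") p = $(i + 1)
        if ($i == "Replicas:")  r = $(i + 1)
        if ($i == "Isr:")       s = $(i + 1)
      }
      if (!same_set(r, s)) {
        print "partition " p ": replicas=" r " isr=" s
        bad = 1
      }
    }
    END { exit (bad ? 1 : 0) }
  '
}
```

Usage: pipe the describe command above into `check_isr`. A non-zero exit status flags at least one partition whose replicas have fallen out of sync.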
